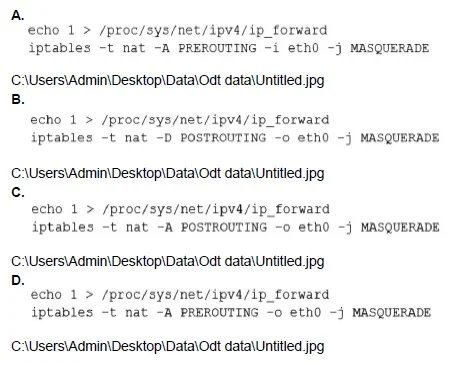

A junior administrator is setting up a new Linux server that is intended to be used as a router at a remote site. Which of the following parameters will accomplish this goal?

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

To configure a Linux server as a router (e.g., for a remote site acting as a gateway), the server must forward IP packets between interfaces and perform Network Address Translation (NAT) to allow internal clients to access external networks.

The correct option is:

C:

echo 1 > /proc/sys/net/ipv4/ip_forward:

Enables IP forwarding in the kernel, allowing the server to route packets between interfaces.

iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE: Adds a NAT rule to the POSTROUTING chain, using the MASQUERADE target on the outgoing interface (eth0, typically the WAN interface). This dynamically rewrites the source IP of outgoing packets to the address currently assigned to eth0 (typically the public IP), enabling NAT for routed traffic.

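This combination sets up the server as a basic NAT router, but both changes are lost on reboot. For persistence, add net.ipv4.ip_forward = 1 to /etc/sysctl.conf and apply it with sysctl -p, and save the iptables rules with iptables-save, redirecting its output to the file your distribution restores at boot (for example, /etc/sysconfig/iptables with the iptables-services package on RHEL/CentOS).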
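To confirm the forwarding setting took effect, the kernel's current value can be read directly. The sketch below is read-only, needs no root privileges, and assumes a Linux host with /proc mounted; the commented iptables line is only a suggestion for inspecting the NAT rule and does require root.

```shell
#!/bin/sh
# Read the kernel's IPv4 forwarding switch (0 = off, 1 = on).
# Equivalent to: sysctl -n net.ipv4.ip_forward
fwd=$(cat /proc/sys/net/ipv4/ip_forward)

if [ "$fwd" = "1" ]; then
  echo "IPv4 forwarding is enabled"
else
  echo "IPv4 forwarding is disabled"
fi

# As root, list the nat POSTROUTING rules to confirm the MASQUERADE rule:
#   iptables -t nat -S POSTROUTING
```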

Why not the other options?

A:

echo 1 > /proc/sys/net/ipv4/ip_forward:

Correctly enables IP forwarding.

iptables -t nat -A PREROUTING -i eth0 -j MASQUERADE: Incorrect. PREROUTING handles packets as they arrive, before the routing decision (it is used for DNAT/port forwarding), and -i eth0 matches the input interface. The MASQUERADE target is valid only in the nat table's POSTROUTING chain, so iptables rejects this rule; even in principle it could not source-NAT outgoing traffic.

B:

echo 1 > /proc/sys/net/ipv4/ip_forward:

Correctly enables IP forwarding.

iptables -t nat -D POSTROUTING -o eth0 -j MASQUERADE: Incorrect. -D deletes a rule from POSTROUTING instead of adding it, disabling NAT rather than enabling it.

D:

echo 1 > /proc/sys/net/ipv4/ip_forward:

Correctly enables IP forwarding.

iptables -t nat -A PREROUTING -o eth0 -j MASQUERADE: Incorrect. PREROUTING runs before the routing decision, so the output interface is not yet known and iptables does not allow -o in this chain. MASQUERADE (source NAT for outgoing traffic) is also valid only in POSTROUTING, so this rule cannot be added at all.

References:

CompTIA XK0-005 Objectives:

1.5 (Summarize and explain common networking services/configurations) and 2.3 (Configure firewall settings using iptables or nftables).

Linux Documentation:

IP Forwarding and iptables NAT.

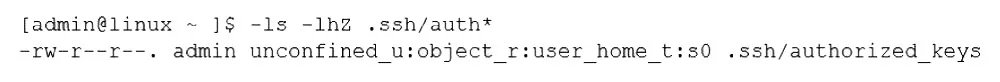

An administrator transferred a key for SSH authentication to a home directory on a remote server. The key file was moved to .ssh/authorized_keys location in order to establish SSH connection without a password. However, the SSH command still asked for the password. Given the following output:

Which of the following commands would resolve the issue?

A. restorecon .ssh/authorized_keys

B. ssh_keygen -t rsa -o .ssh/authorized_keys

C. chown root:root .ssh/authorized_keys

D. chmod 600 .ssh/authorized_keys

Summary:

The administrator has set up an SSH key in the correct location (~/.ssh/authorized_keys) but is still prompted for a password. The ls -lZ output shows that the authorized_keys file has permissions -rw-r--r-- (644). The SSH daemon is strict about key-file permissions for security reasons: with its default StrictModes setting it refuses to use an authorized_keys file (or parent directory) that is writable by group or others, and the recommended mode for the file is 600 (-rw-------).

Correct Option:

D. chmod 600 .ssh/authorized_keys:

This command directly fixes the permission problem. It sets the authorized_keys file to be readable and writable only by the owner (the user), which satisfies SSH's security requirements and should enable passwordless login.
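The fix can be sketched as a small shell function that applies the modes sshd expects: 700 on the .ssh directory and 600 on authorized_keys. The function name fix_ssh_perms is hypothetical, the demo runs against a scratch directory rather than a real home directory, and stat -c assumes GNU coreutils (or BusyBox).

```shell
#!/bin/sh
# Hypothetical helper: tighten permissions on an .ssh directory.
# On the real server the argument would be "$HOME/.ssh".
fix_ssh_perms() {
  chmod 700 "$1"                    # owner-only access to the directory
  chmod 600 "$1/authorized_keys"    # owner read/write only
}

# Demo on a scratch copy so the sketch is safe to run anywhere.
demo=$(mktemp -d)/.ssh
mkdir -p "$demo"
touch "$demo/authorized_keys"
chmod 644 "$demo/authorized_keys"   # the -rw-r--r-- mode from the question
fix_ssh_perms "$demo"
stat -c '%a %n' "$demo" "$demo/authorized_keys"

# On SELinux-enforcing systems, a moved file may also need relabelling:
#   restorecon -Rv "$HOME/.ssh"
```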

Incorrect Options:

A. restorecon .ssh/authorized_keys:

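This command resets the SELinux context to the policy default. The current context, unconfined_u:object_r:user_home_t:s0, is the usual label for files in a home directory, and this explanation treats file permissions as the more common and direct cause. Note, however, that the policy default for ~/.ssh/authorized_keys is ssh_home_t: a file moved into place with mv keeps its original label, which can also block key authentication on an SELinux-enforcing system, so restorecon is worth running if correcting permissions alone does not help.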

B. ssh_keygen -t rsa -o .ssh/authorized_keys:

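This command is invalid. The utility is named ssh-keygen, and its -o flag selects the newer OpenSSH private-key format rather than naming an output file (-f does that). Even when corrected, ssh-keygen generates a new key pair; it does not fix permissions on an existing authorized_keys file, and writing a key to that path would overwrite the authorized keys.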

C. chown root:root .ssh/authorized_keys:

This command would change the ownership of the file to root. The file is already correctly owned by the user (user1), and reassigning it to root addresses neither the permission bits nor the SELinux label; it would only stop user1 from managing their own authorized keys.

Reference:

OpenSSH Manual (sshd): The official documentation states that the authorized_keys file must not have write permissions for group or others to be accepted.

A Linux administrator is tasked with creating resources using containerization. When deciding how to create this type of deployment, the administrator identifies some key features, including portability, high availability, and scalability in production. Which of the following should the Linux administrator choose for the new design?

A. Docker

B. On-premises systems

C. Cloud-based systems

D. Kubernetes

Explanation:

While Docker is the foundational technology for creating and running individual containers, the question specifies key features for a production deployment: portability, high availability, and scalability.

Portability:

Kubernetes provides a consistent API to manage containerized workloads across different environments—on-premises, in the cloud, or in hybrid setups. You can define your application once and run it anywhere a Kubernetes cluster exists.

High Availability:

Kubernetes is designed for this. It can automatically restart failed containers, reschedule them to healthy nodes if a server fails, and distribute traffic across multiple replicas of your application to ensure it remains accessible even during partial failures.

Scalability:

Kubernetes can automatically scale your application up or down (a feature called the Horizontal Pod Autoscaler) based on CPU usage or other custom metrics. It can also scale the number of cluster nodes itself (Cluster Autoscaler) in cloud environments.
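The Horizontal Pod Autoscaler's core calculation, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric). The shell function below is a simplified sketch of that arithmetic only; the function name is hypothetical, and it ignores the autoscaler's tolerance band, stabilization windows, and min/max replica limits.

```shell
#!/bin/sh
# Hypothetical helper: ceil(replicas * current / target) in integer arithmetic.
# Metric values are whole percentages (e.g. average CPU utilisation).
hpa_desired_replicas() {
  replicas=$1
  current=$2
  target=$3
  # Adding (target - 1) before dividing rounds the quotient up.
  echo $(( (replicas * current + target - 1) / target ))
}

hpa_desired_replicas 3 90 60   # 3 pods at 90% CPU, 60% target -> 5
hpa_desired_replicas 4 30 60   # 4 pods at 30% CPU, 60% target -> 2
```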

Kubernetes is the orchestrator that manages containers (built with Docker or similar tools and run by a CRI-compatible runtime such as containerd or CRI-O) to provide these enterprise-grade production features.

Analysis of Incorrect Options

A. Docker:

Docker is a containerization platform. Docker Engine on its own runs containers on a single host, and Docker Swarm adds only basic orchestration, so Docker lacks the robust, automated feature set that complex production deployments require. Kubernetes is the industry standard for achieving high availability and sophisticated scalability at scale.

B. On-premises systems:

This is a deployment model (location), not a specific containerization technology. You can run Kubernetes, Docker, and other solutions on-premises. The term is too vague and does not directly address the specific features of portability, high availability, and scalability in the context of containers.

C. Cloud-based systems:

Similar to on-premises, this is a deployment model. Major cloud providers offer managed Kubernetes services (like EKS, AKS, GKE), which would be the implementation of the correct choice. The term itself is too broad, as "cloud-based systems" could refer to simple virtual machines, serverless functions, or database services, not specifically a container orchestration solution.

Reference:

Concept:

Container Orchestration. The CompTIA Linux+ XK0-005 exam covers the need to understand the ecosystem around containers. While Docker is used to build and run images, Kubernetes is the platform for deploying and managing containerized applications in production to achieve reliability and scalability.

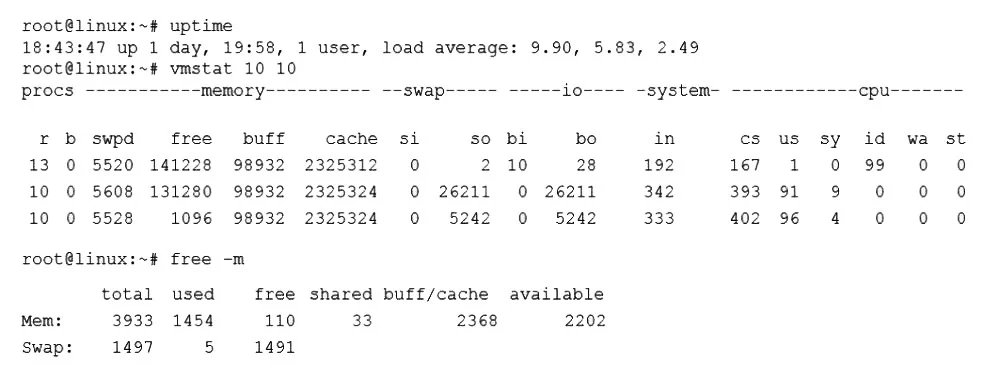

A Linux administrator was notified that a virtual server has an I/O bottleneck. The Linux administrator analyzes the following output:

Given there is a single CPU in the server, which of the following is causing the slowness?

A. The system is running out of swap space.

B. The CPU is overloaded.

C. The memory is exhausted.

D. The processes are paging.

Explanation:

While the problem notification was about an I/O bottleneck, the data shows the primary issue is a severely overloaded CPU, which is the root cause of the system slowness. Let's break down the evidence:

1. uptime shows a very high load average:

load average: 9.90, 5.83, 2.49

On Linux, the load average is the average number of processes that are either runnable (running or waiting in the run queue for a CPU) or in uninterruptible sleep (typically waiting on disk I/O).

A load average of 9.90 on a single-CPU system means that, on average, about 9.9 processes were competing for the CPU's time. As a rule of thumb, a sustained load above 1.0 per CPU core means work is queuing; a value of 9.90 indicates an extreme CPU bottleneck.
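Normalising the load by the CPU count is the key step in this reasoning. The sketch below does that division with awk; the helper name is hypothetical, and the live-system part assumes Linux /proc and a getconf that supports _NPROCESSORS_ONLN.

```shell
#!/bin/sh
# Hypothetical helper: divide a load average by the number of CPUs.
load_per_cpu() {
  awk -v load="$1" -v cpus="$2" 'BEGIN { printf "%.2f\n", load / cpus }'
}

load_per_cpu 9.90 1   # the 1-minute load from the question: 9.90 per CPU

# Same check against the live system (Linux /proc assumed).
if [ -r /proc/loadavg ]; then
  load1=$(cut -d ' ' -f 1 /proc/loadavg)
  cpus=$(getconf _NPROCESSORS_ONLN 2>/dev/null || echo 1)
  echo "current 1-minute load per CPU: $(load_per_cpu "$load1" "$cpus")"
fi
```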

2. vmstat output confirms CPU saturation:

The procs section r column shows 13 and 10 processes waiting in the run queue. This is a direct sign of processes waiting for CPU time.

The cpu section shows us (user time) at 91% and 96%. This means the CPU is spending almost all its time executing application code, with very little idle time (id is 0%). The system is completely saturated.

3. The I/O bottleneck is a symptom, not the cause:

Processes blocked on I/O also count toward the load average, so a high load can point to an I/O issue, but the vmstat data does not show a significant I/O wait problem.

The wa (I/O wait) column in vmstat is 0%. This metric shows what percentage of the CPU time is spent waiting for I/O. If this were a true disk I/O bottleneck, this number would be high.

The bi (blocks in) and bo (blocks out) columns show some activity but nothing consistently extreme that would explain the severe slowness on its own.

The system is slow because the CPU is 100% busy, which is caused by too many processes (a high r queue) demanding CPU time.

Analysis of Incorrect Options

A. The system is running out of swap space.

The free -m output shows Swap: 1497 5 1491, meaning only 5MB of 1.5GB swap is used. There is plenty of free swap space. The vmstat output also shows very low swap activity (si and so are 0 in the first sample and low in others), indicating the system is not swapping.

C. The memory is exhausted.

The free -m output shows available: 2202. The "available" memory is a good indicator of how much memory is free for new applications, and 2.2GB is a healthy amount. The system is not under memory pressure.

D. The processes are paging.

This is related to memory exhaustion and swapping. As shown above, there is no significant swap activity (si, so), and plenty of memory is available, so paging is not the issue.

Reference:

Commands: uptime, vmstat, free

Concept: System Performance Analysis. The key takeaway is to correlate multiple data points. A high load average must be interpreted with the CPU usage and I/O wait statistics. In this case, the high load is directly correlated with 100% CPU usage and 0% I/O wait, pointing conclusively to a CPU bottleneck.

A Linux engineer receives reports that files created within a certain group are being modified by users who are not group members. The engineer wants to reconfigure the server so that only file owners and group members can modify new files by default. Which of the following commands would accomplish this task?

A. chmod 775

B. umask 002

C. chattr -Rv

D. chown -cf

Summary:

The issue is that new files have overly permissive default permissions, allowing users outside the file's group to modify them. The requirement is to change the system's default behavior so that newly created files do not grant write access to "others" (users who are neither the owner nor in the group). This is controlled by the umask value, which removes permission bits from the base mode used at creation time (666 for files, 777 for directories).

Correct Option:

B. umask 002:

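This is the correct command. The umask (user file creation mask) defines which permissions are removed from the default settings when a new file or directory is created. A umask of 002 turns off the "write" permission for "others" (the final digit). New files are then created with permissions 664 (rw-rw-r--) and directories with 775 (rwxrwxr-x), preventing non-group members from modifying them. Running umask 002 affects only the current shell and its children; to make it the server-wide default, the setting is typically placed in a login-time file such as /etc/profile (or set through the UMASK value in /etc/login.defs), depending on the distribution.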
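The effect of umask 002 can be checked in a scratch directory. This sketch runs the umask inside a subshell so the caller's own mask is untouched; stat -c assumes GNU coreutils (or BusyBox).

```shell
#!/bin/sh
# Show the modes produced by umask 002 in a scratch directory.
# The subshell keeps the calling shell's umask unchanged.
demo_dir=$(mktemp -d)
(
  umask 002
  touch "$demo_dir/newfile"   # base mode 666 masked by 002 -> 664
  mkdir "$demo_dir/newdir"    # base mode 777 masked by 002 -> 775
)
file_mode=$(stat -c '%a' "$demo_dir/newfile")
dir_mode=$(stat -c '%a' "$demo_dir/newdir")
echo "file: $file_mode (rw-rw-r--), dir: $dir_mode (rwxrwxr-x)"
rm -rf "$demo_dir"
```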

Incorrect Options:

A. chmod 775:

This command is used to explicitly change the permissions of existing files and directories. It does not affect the default permissions for new files that are created in the future. It is a reactive, not proactive, solution and would need to be run on every new file.

C. chattr -Rv:

As written, this command is incomplete: chattr expects an attribute change (such as +i) and one or more files. chattr changes file attributes on supported filesystems, such as immutability (+i); -R applies the change recursively and -v sets a file's version number. None of this controls the default permissions of newly created files.

D. chown -cf:

The chown command is used to change the ownership of files, not their permissions. The -c flag reports when a change is made, and -f suppresses error messages. This command does nothing to control whether users can modify files based on their group membership.

Reference:

Official CompTIA Linux+ (XK0-005) Certification Exam Objectives: This scenario falls under Objective 3.2: "Given a scenario, manage storage, files, and directories in a Linux environment," which includes managing file and directory permissions. Understanding and setting the umask is a fundamental skill for controlling default permissions for newly created files.

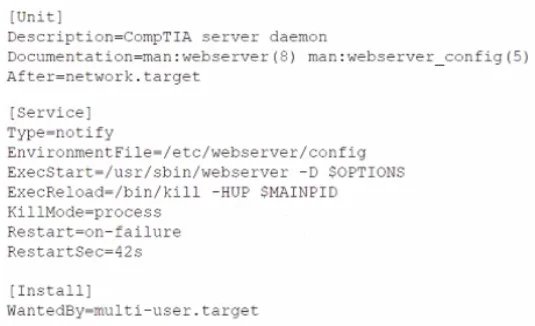

The security team has identified a web service that is running with elevated privileges. A Linux administrator is working to change the systemd service file to meet security compliance standards. Given the following output:

Which of the following remediation steps will prevent the web service from running as a privileged user?

A. Removing the ExecStart=/usr/sbin/webserver -D $OPTIONS from the service file

B. Updating the EnvironmentFile line in the [Service] section to /home/webservice/config

C. Adding the User=webservice to the [Service] section of the service file

D. Changing the multi-user.target in the [Install] section to basic.target

Explanation:

The issue identified is that the web service is running with elevated privileges (likely as the root user by default). To meet security compliance standards, the service should run with the least privileges necessary, typically under a non-privileged user account (e.g., webservice). The provided systemd service file output shows a [Service] section with ExecStart=/usr/sbin/webserver -D $OPTIONS, which does not specify a user, meaning it defaults to running as root. The remediation step is to explicitly define a non-privileged user.

C. Adding the User=webservice to the [Service] section of the service file:

This directive tells systemd to run the web service process as the webservice user instead of root. The webservice user should already exist (e.g., created with useradd -r webservice) and have appropriate permissions for the web server files (e.g., /etc/webservice/config). This aligns with security best practices by reducing the attack surface.

Why not the other options?

A. Removing the ExecStart=/usr/sbin/webserver -D $OPTIONS from the service file:

This would break the service entirely, as ExecStart defines the command to start the service. Removing it prevents the web server from running, which is not a valid remediation for privilege issues.

B. Updating the EnvironmentFile line in the [Service] section to /home/webservice/config:

Changing the EnvironmentFile path only specifies a different file for environment variables (e.g., $OPTIONS). It does not address the privilege level at which the service runs, as the user is still determined by the absence of a User= directive (defaulting to root).

D. Changing the multi-user.target in the [Install] section to basic.target:

The [Install] section controls how the service is enabled, for example which target pulls it in at boot (e.g., WantedBy=multi-user.target). Switching to basic.target changes when the service starts during boot but has no effect on the user privileges under which it runs.

Additional Steps

After adding User=webservice, ensure:

The webservice user has read/execute permissions for /usr/sbin/webserver and the configuration file (/etc/webservice/config).

Ownership of web server files is adjusted (e.g., chown -R webservice:webservice /path/to/web/files).

Reload systemd with systemctl daemon-reload and restart the service with systemctl restart webservice.
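The same change can also be made without editing the packaged unit file, using a drop-in override of the kind systemctl edit creates under /etc/systemd/system/<unit>.service.d/. The sketch below writes such a file; the unit name webservice.service and the user webservice are assumptions taken from the scenario, the helper name is hypothetical, and it writes to a temporary directory so it is safe to run. On a real server the target would be /etc/systemd/system/webservice.service.d (root required).

```shell
#!/bin/sh
# Hypothetical helper: write a drop-in that runs a unit as user $2.
# With no Group= line, systemd uses the user's default group.
write_user_override() {
  mkdir -p "$1"
  cat > "$1/override.conf" <<EOF
[Service]
User=$2
EOF
}

# Scratch location for the demo; the real path would be
# /etc/systemd/system/webservice.service.d
dropin=$(mktemp -d)/webservice.service.d
write_user_override "$dropin" webservice
cat "$dropin/override.conf"

# Then, on the real system (as root):
#   systemctl daemon-reload
#   systemctl restart webservice
```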

References:

CompTIA XK0-005 Objective:

2.1 (Given a scenario, manage services using systemd) and 4.2 (Implement and configure Linux security best practices).

Systemd Documentation:

systemd.service – Details on User= directive.

Security Best Practice:

Running services as non-root users is a standard recommendation (e.g., NIST SP 800-53, CIS Benchmarks).

Which of the following commands will display the operating system?

A. uname -n

B. uname -s

C. uname -o

D. uname -m

Explanation:

The uname command is used to print system information. The -o flag specifically prints the name of the operating system.

For example, on a typical Linux system, running uname -o would output:

GNU/Linux

On other systems, it might output FreeBSD or Android.
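Running the four options side by side makes the difference concrete. The exact strings depend on the host; on a typical GNU/Linux machine, -s prints Linux while -o prints GNU/Linux.

```shell
#!/bin/sh
# Print the uname fields compared in this question, one per line.
for flag in -s -o -n -m; do
  printf '%-10s %s\n' "uname $flag" "$(uname "$flag")"
done
```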

Analysis of Incorrect Options

A. uname -n:

The -n flag prints the nodename (the hostname of the system), not the operating system.

B. uname -s:

The -s flag prints the kernel name. On a Linux system, this outputs Linux. The kernel is a core component of the operating system, but this flag does not display the full operating system name.

D. uname -m:

The -m flag prints the machine hardware name (the system architecture). This would output values like x86_64, aarch64, or i686, indicating the CPU architecture, not the OS.

Reference

Command: uname - Print system information.

Common Flags:

-a: All information (a combination of all other flags).

-s: Kernel name (e.g., Linux).

-r: Kernel release version.

-v: Kernel build version.

-m: Machine hardware architecture.

-p: Processor type.

-i: Hardware platform.

-o: Operating system.

An administrator accidentally deleted the /boot/vmlinuz file and must resolve the issue before the server is rebooted. Which of the following commands should the administrator use to identify the correct version of this file?

A. rpm -qa | grep kernel; uname -a

B. yum -y update; shutdown -r now

C. cat /etc/centos-release; rpm -Uvh --nodeps

D. telinit 1; restorecon -Rv /boot

Summary:

The /boot/vmlinuz file is the compressed Linux kernel executable. After accidentally deleting it, the administrator needs to identify the exact kernel version currently running and the corresponding kernel package installed on the system. This information is required to reinstall the correct package and restore the missing file from the package repository without forcing an unnecessary kernel update.

Correct Option:

A. rpm -qa | grep kernel; uname -a: This is the correct troubleshooting sequence.

rpm -qa | grep kernel lists all installed kernel packages, showing their full version numbers.

uname -a displays the version of the currently running kernel.

Cross-referencing these outputs allows the administrator to identify the exact package name (e.g., kernel-3.10.0-1160.el7.x86_64) that provides the missing /boot/vmlinuz file for the active kernel, enabling a precise reinstallation.
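Once the running release is known, the package and file names follow from it. The sketch below only derives and prints those names; it assumes RHEL/CentOS 7 naming, where the kernel package owns /boot/vmlinuz-<release> (on RHEL 8 and later the kernel image comes from the kernel-core package instead). The verification and reinstall commands are left as comments because they need root on an RPM-based system.

```shell
#!/bin/sh
# Derive the names tied to the running kernel (RHEL/CentOS 7 naming assumed).
release=$(uname -r)                  # e.g. 3.10.0-1160.el7.x86_64
kernel_pkg="kernel-$release"         # package providing the kernel image
kernel_file="/boot/vmlinuz-$release" # file that was deleted

echo "running release: $release"
echo "package:         $kernel_pkg"
echo "kernel image:    $kernel_file"

# On the affected server (as root):
#   rpm -q "$kernel_pkg"          # confirm the package is installed
#   yum reinstall "$kernel_pkg"   # restore the missing file
```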

Incorrect Options:

B. yum -y update; shutdown -r now:

This command performs a full system update, which would install the latest available kernel, not necessarily the one that was deleted. This is a disruptive and incorrect approach, as it changes the system state instead of restoring the missing file for the current kernel.

C. cat /etc/centos-release; rpm -Uvh --nodeps:

The first command only shows the OS distribution and version. The second command upgrades or installs a package file while ignoring dependencies, and as written it names no package at all; neither command identifies which kernel package or version needs to be reinstalled.

D. telinit 1; restorecon -Rv /boot:

The telinit 1 command switches the system to single-user mode, which is unnecessary for this file operation. The restorecon command resets SELinux security contexts; it cannot restore a deleted file and is irrelevant to this issue.

Reference:

RPM Man Page: The official documentation for the rpm command, which explains the -qa (query all) option.

A systems administrator created a new Docker image called test. After building the image, the administrator forgot to version the release. Which of the following will allow the administrator to assign the v1 version to the image?

A. docker image save test test:v1

B. docker image build test:v1

C. docker image tag test test:v1

D. docker image version test:v1

Explanation:

To assign a version (e.g., v1) to an existing Docker image named test, the administrator needs to create a tagged version of the image without rebuilding it.

The correct command is:

C. docker image tag test test:v1:

This command creates a new tag (test:v1) pointing to the same image ID as the existing test image. Tagging allows versioning without altering the original image. After tagging, the administrator can push the image to a registry if needed (usually after tagging it with the registry or namespace prefix, e.g., docker push <registry>/test:v1).
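A bare name such as test works as the source here because Docker treats an untagged reference as name:latest. The helper below is a simplified, hypothetical illustration of that defaulting rule only; it ignores registry host:port prefixes and @sha256 digests, which real reference parsing must handle.

```shell
#!/bin/sh
# Hypothetical helper: split "name[:tag]" and default the tag to "latest".
# Simplification: no registry host:port prefix and no @digest support.
split_ref() {
  case $1 in
    *:*) repo=${1%:*}; tag=${1##*:} ;;
    *)   repo=$1;      tag=latest ;;
  esac
  echo "$repo $tag"
}

split_ref test      # -> test latest
split_ref test:v1   # -> test v1
```

So docker image tag test test:v1 attaches the v1 tag to the image currently tagged test:latest, and docker images test then lists both tags with the same IMAGE ID.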

Why not the other options?

A. docker image save test test:v1:

The docker image save command exports one or more images to a tar archive, written to standard output or to a file named with -o. Here both test and test:v1 would be treated as images to export (and test:v1 does not exist yet), so this saves images to disk rather than versioning them.

B. docker image build test:v1:

The docker image build command creates a new image from a Dockerfile, requiring a build context. It does not tag an existing image; it generates a new one, which isn’t the intent here since the image is already built.

D. docker image version test:v1:

Docker has no docker image version subcommand. The separate docker version command exists, but it reports the Docker client and server versions, not image tags.

Additional Steps

Verify the tag with docker images to ensure test:v1 appears.

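If the original test tag is no longer needed, it can be removed with docker rmi test (optional). Because test:v1 still references the same image, this removes only the test:latest tag, not the image itself.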

References:

CompTIA XK0-005 Objective:

3.4 (Given a scenario, manage containers with Docker or Podman).

Docker Documentation:

docker tag – Official guide on tagging images.

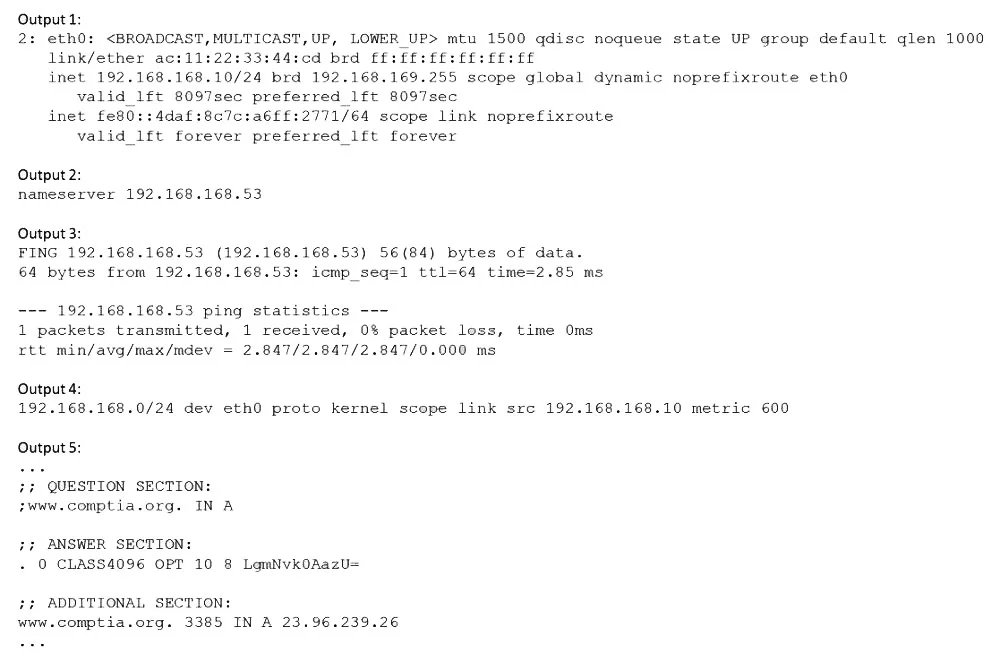

Users have been unable to reach www.comptia.org from a Linux server. A systems administrator is troubleshooting the issue and does the following:

Based on the information above, which of the following is causing the issue?

A. The name www.comptia.org does not point to a valid IP address.

B. The server 192.168.168.53 is unreachable

C. No default route is set on the server.

D. The network interface eth0 is disconnected.

Explanation:

The administrator has performed several checks. Let's see what each output tells us:

Output 1 (ip addr show eth0):

The interface eth0 is UP and has a valid IP address 192.168.168.10/24. The interface is connected and functioning at the link level.

Output 2 (cat /etc/resolv.conf):

The system is configured to use the DNS server at 192.168.168.53.

Output 3 (ping 192.168.168.53):

The system can successfully ping the DNS server. This means the local network connectivity to the DNS server is fine, and the server is online.

Output 4 (ip route show):

This is the critical output. It only shows a route for the local network: 192.168.168.0/24 dev eth0 .... There is no default route (also known as the default gateway), which would typically look like default via 192.168.168.1 dev eth0 or similar. Without a default route, the server has no path to send traffic to any network outside its own 192.168.168.0/24 subnet.

Output 5 (dig www.comptia.org):

The dig command successfully received an answer from the DNS server. The ANSWER SECTION shows that www.comptia.org correctly resolves to the IP address 23.96.239.26.

Conclusion:

The server can talk to its DNS server and resolve the domain name, but it cannot actually route traffic to the resulting external IP address (23.96.239.26) because it lacks a default gateway. The inability to reach the website is a routing problem, not a DNS or local connectivity problem.
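The routing decision behind this conclusion can be sketched in a few lines: a destination is delivered directly only if it falls inside a directly connected subnet, and anything else needs a gateway. The helper names below are hypothetical, the check covers IPv4 only, and the gateway address in the commented fix (192.168.168.1) is an assumption; the real value depends on the network.

```shell
#!/bin/sh
# Hypothetical helpers: IPv4 dotted quad -> integer, and a subnet test.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split "a.b.c.d" on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeeds when address $1 is inside network $2 with prefix length $3.
in_subnet() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "$2")
  mask=$(( (4294967295 << (32 - $3)) & 4294967295 ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

for dest in 192.168.168.53 23.96.239.26; do
  if in_subnet "$dest" 192.168.168.0 24; then
    echo "$dest: on-link, reachable without a gateway"
  else
    echo "$dest: off-link, requires a default route"
  fi
done

# Possible fix (root, gateway address assumed):
#   ip route add default via 192.168.168.1 dev eth0
```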

Analysis of Incorrect Options

A. The name www.comptia.org does not point to a valid IP address.

This is incorrect. Output 5 from the dig command proves that the name successfully resolves to the IP 23.96.239.26.

B. The server 192.168.168.53 is unreachable.

This is incorrect. Output 3 from the ping command proves that the DNS server is reachable and responding.

D. The network interface eth0 is disconnected.

This is incorrect. Output 1 clearly shows the interface is in the UP state and has an IP address assigned, indicating it is connected and active.

Reference:

Command:

ip route or route -n (to view the routing table).

Concept:

Network Routing. A host needs a default route (0.0.0.0/0) to communicate with networks outside of its immediate local subnet. The absence of a default gateway is a common misconfiguration that leads to the symptom of "can resolve names but cannot reach the internet."

What is the main objective when using Application Control?

A. To filter out specific content.

B. To assist the firewall blade with handling traffic.

C. To see what users are doing.

D. Ensure security and privacy of information.

Explanation:

The main objective of using Application Control in a security context (e.g., on a Linux system or network appliance) is to ensure the security and privacy of information. Application Control allows administrators to monitor, restrict, or allow specific applications or application features based on security policies. This helps prevent unauthorized applications from running, reduces the risk of malware or data leaks, and ensures compliance with security standards by controlling how applications handle data.

Why not the other options?

A. To filter out specific content:

While Application Control can filter certain types of content (e.g., blocking file uploads in an app), this is a secondary function. Its primary goal is broader security and policy enforcement, not just content filtering.

B. To assist the firewall blade with handling traffic:

Application Control may integrate with firewalls to manage traffic, but it is not primarily designed to assist the firewall. Its focus is on application-level control, not general traffic management.

C. To see what users are doing:

Monitoring user activity is a byproduct of Application Control (e.g., logging app usage), but the main objective is to enforce security and privacy, not just visibility.

References:

CompTIA XK0-005 Objective:

4.1 (Given a scenario, implement and configure security features) – Relates to controlling application behavior for security.

General Security Practice:

Application Control is widely documented in frameworks like CIS Controls (Control 2: Inventory and Control of Software Assets) and NIST SP 800-53.

A Linux administrator has logged in to a server for the first time and needs to know which services are allowed through the firewall. Which of the following options will return the results for which the administrator is looking?

A. firewall-cmd --get-services

B. firewall-cmd --check-config

C. firewall-cmd --list-services

D. systemctl status firewalld

Explanation:

The firewall-cmd --list-services command is used to display the list of services that are currently allowed through the firewall in the default zone (or a specified zone). This directly answers the question, "which services are allowed through the firewall?"

For example, the output might look like this:

ssh dhcpv6-client cockpit

This indicates that incoming connections for the ssh, dhcpv6-client, and cockpit services are permitted.
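When auditing hosts, it can help to test the list for a specific service rather than read it by eye. firewalld has a built-in query for this (firewall-cmd --query-service=ssh prints yes or no); the sketch below does the equivalent check against the sample output above. The helper name is hypothetical, and on a live system the list would come from firewall-cmd --list-services.

```shell
#!/bin/sh
# Hypothetical helper: succeed if service $1 appears in the
# space-separated list $2 (the format printed by --list-services).
service_allowed() {
  case " $2 " in
    *" $1 "*) return 0 ;;
    *)        return 1 ;;
  esac
}

# Live equivalent: allowed=$(firewall-cmd --list-services)
allowed="ssh dhcpv6-client cockpit"
for svc in ssh http; do
  if service_allowed "$svc" "$allowed"; then
    echo "$svc: allowed"
  else
    echo "$svc: not allowed"
  fi
done
```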

Analysis of Incorrect Options

A. firewall-cmd --get-services:

This command lists all the pre-defined service names that are available to be added to a zone. It does not show which ones are currently allowed; it just shows the full catalog of services that firewalld knows about (e.g., ssh, http, https, samba). This is not what the administrator needs.

B. firewall-cmd --check-config:

This command checks the firewalld configuration for any errors. It is a validation tool used to ensure the configuration files are syntactically correct. It does not list the active firewall rules or allowed services.

D. systemctl status firewalld:

This command checks the state of the firewalld daemon itself—whether it is running, enabled, and some high-level log messages. It does not provide a list of the services that are allowed through the firewall.

Reference:

Command:

firewall-cmd - The command-line client for the firewalld daemon.

Concept:

Managing firewalld zones and services. The --list-services flag is the primary tool for quickly auditing which services are permitted in the current runtime configuration. To see the permanent configuration (which will be active after a reload/reboot), you would use firewall-cmd --list-services --permanent.
