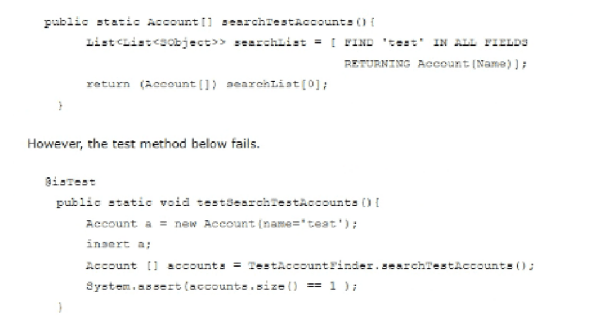

A developer wrote the following method to find all the test accounts in the org:

What should be used to fix this failing test?

A. Test.fixedSearchResults() method to set up expected data

B. @isTest (SeeAllData=true) to access org data for the test

C. @testSetup method to set up expected data

D. Test.loadData to set up expected data

Explanation:

The test fails because the method under test relies on a SOSL FIND query. To keep tests predictable, any SOSL query that runs inside an Apex test method returns an empty result set, even when matching records were inserted earlier in the same test. Test-created records are never committed, so they never reach the search index.

The fix is Test.setFixedSearchResults(), which the answer choice abbreviates as Test.fixedSearchResults(). The test inserts the expected Account, passes a list containing its ID to Test.setFixedSearchResults(), and then calls the method under test. SOSL queries that run afterwards in that test method return the listed records, still filtered by any WHERE clause in the RETURNING clause, so the assertion can pass without depending on org data.

❌ Incorrect Answers:

B) @isTest(SeeAllData=true) to access org data for the test

SeeAllData=true exposes existing org data to the test. That makes the result depend on whatever records happen to exist in each org, which runs against testing best practice, and it does nothing to make the Account inserted by the test searchable. It is not a reliable fix for a SOSL-based test.

C) @testSetup method to set up expected data

Using @testSetup is a great way to create reusable test data across multiple test methods, but it doesn't change how SOSL behaves in a test. Records inserted in @testSetup are still not returned by a FIND query, so FIND 'test' IN ALL FIELDS comes back empty and the assertion still fails unless Test.setFixedSearchResults() is called.

D) Test.loadData to set up expected data

Test.loadData() loads records from a static resource .csv file into the test context. Like @testSetup, it helps create test records, but those records are still not returned by SOSL. Even if you load an Account named "test", it will not appear in a FIND search during the test unless its ID is passed to Test.setFixedSearchResults().

🔗 Reference:

Trailhead – Apex Testing

Salesforce – SeeAllData=true

A developer is asked to replace the standard Case creation screen with a custom screen that takes users through a wizard before creating the Case. The org only has users running Lightning Experience. What should the developer override the Case New Action with to satisfy the requirements?

A. Lightning Page

B. Lightning Record Page

C. Lightning Component

D. Lightning Flow

Explanation:

In Lightning Experience, a standard action such as New can be overridden with a Visualforce page or a Lightning component; a Lightning page is offered as an override only for actions such as View. A custom wizard that replaces the New action therefore has to be delivered as a Lightning component. Typically this is an Aura component that implements the lightning:actionOverride interface and either renders the wizard steps itself or wraps a Lightning web component or a screen flow.

In this scenario:

You need to override the "New" button for the Case object.

The org is running Lightning Experience only, so a Visualforce override is not needed.

You build the component, then go to Object Manager → Case → Buttons, Links, and Actions → New → Edit and select the Lightning component as the Lightning Experience override.

This gives you full control over the Case creation process and satisfies the need for a custom, wizard-like interface.

❌ Incorrect Answers:

A) Lightning Page

A Lightning Page is a type of layout created using the Lightning App Builder. It defines where and how components appear on a record or app page, but it cannot be used to override buttons like the "New" action. It doesn't support user interaction logic or form flow on its own, so it can't function as a wizard.

B) Lightning Record Page

This is a more specific type of Lightning Page assigned to an object's record view. Like a standard Lightning Page, it's used for layout and design purposes but not for overriding the New action or implementing guided user interactions. It won't help create a custom wizard to replace the Case creation screen.

D) Lightning Flow

A screen flow is a good declarative tool for building multi-step wizards, but a flow cannot be selected directly as the override for a standard action. To use a flow here, it would have to be embedded in a Lightning component (for example with lightning:flow), and that component is what overrides the New action.

🔗 Reference:

Salesforce Help – Customize Standard Buttons

Salesforce Flow Builder Guide

Trailhead – Build Flows with Flow Builder

A company has a web page that needs to get Account record information, such as name, website, and employee number. The Salesforce record ID is known to the web page and it uses JavaScript to retrieve the account information. Which method of integration is optimal?

A. Apex SOAP web service

B. SOAP API

C. Apex REST web service

D. REST API

Explanation:

To determine the optimal method for a web page to retrieve Account record information (e.g., name, website, employee number) using JavaScript with a known Salesforce record ID, we need to consider integration options that are efficient, secure, and suitable for client-side JavaScript execution. The solution should allow the web page to interact with Salesforce data via a RESTful or SOAP-based interface, balancing ease of use, performance, and developer familiarity with JavaScript.

Correct Answer: D. REST API

Option D, REST API, is the optimal method for this scenario. The Salesforce REST API allows a web page to retrieve Account record information using JavaScript via HTTP requests (e.g., using fetch or axios) with a known record ID. It provides a lightweight, stateless interface with JSON responses, which is ideal for client-side integration. The API supports standard objects like Account and can be secured with OAuth, ensuring data access is controlled. This approach aligns with modern web development practices and is well-documented, making it a practical choice for the Platform Developer II exam context, especially given JavaScript's compatibility with REST.
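As a rough illustration of the REST approach, the sketch below reads one Account through the standard sObject Rows resource. The API version, instance URL, and access token are placeholders, and the function names are hypothetical; how the page obtains an OAuth token is outside the scope of the example.

```javascript
// Assumed API version; substitute whichever version the org supports.
const API_VERSION = 'v58.0';

// Build the sObject Rows URL for one Account, limited to the fields the page needs.
function buildAccountUrl(instanceUrl, recordId) {
  const fields = ['Name', 'Website', 'NumberOfEmployees'].join(',');
  return `${instanceUrl}/services/data/${API_VERSION}/sobjects/Account/` +
    `${encodeURIComponent(recordId)}?fields=${fields}`;
}

// Fetch the record as JSON. The caller supplies an OAuth access token obtained elsewhere.
async function getAccount(instanceUrl, recordId, accessToken) {
  const response = await fetch(buildAccountUrl(instanceUrl, recordId), {
    headers: { Authorization: `Bearer ${accessToken}` }
  });
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  return response.json();
}
```

When the call is made from a browser, the page's origin also has to be allowed in the org's CORS settings.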

Incorrect Answer:

Option A: Apex SOAP web service

Option A, Apex SOAP web service, involves creating a custom SOAP-based service in Apex to expose Account data. While feasible, it requires generating WSDL files and handling XML responses, which are less convenient for JavaScript developers compared to JSON. The complexity of SOAP (e.g., strict typing, larger payloads) and the need for additional client-side libraries make it less efficient for a web page. This method is better suited for enterprise integrations rather than simple client-side JavaScript calls, making it suboptimal here.

Option B: SOAP API

Option B, SOAP API, is Salesforce's native SOAP-based API for accessing data, including Account records. However, it relies on XML and requires a WSDL client, which adds overhead for JavaScript integration on a web page. Parsing XML responses and managing the API's complexity can be challenging without additional libraries, and it is less intuitive than REST for modern web development. While secure and robust, its lack of native JavaScript compatibility makes it a less optimal choice compared to REST API for this use case.

Option C: Apex REST web service

Option C, Apex REST web service, involves creating a custom REST endpoint in Apex to retrieve Account data. This allows tailored responses and business logic but requires server-side development, deployment, and maintenance within Salesforce. While it can return JSON (suitable for JavaScript), it introduces additional complexity and overhead compared to using the standard REST API, which already provides Account access. For a simple retrieval with a known ID, the native REST API is more efficient, making this option less optimal unless custom logic is strictly needed.

Reference:

Salesforce REST API Developer Guide

Salesforce SOAP API Guide

Which two best practices should the developer implement to optimize this code?

Choose 2 answers

A. Change the trigger context to after update, after insert

B. Remove the DML statement.

C. Use a collection for the DML statement.

D. Query the Driving_Structure__c records outside of the loop

Explanation:

✅ C) Use a collection for the DML statement

One of the core Apex best practices is to avoid performing repeated DML operations inside a loop. Instead, accumulate the records into a collection (e.g., List<>) and perform a single DML operation after the loop completes. This improves performance and avoids exceeding governor limits (especially the 150 DML statements per transaction limit).

Before:

for(Account acc : Trigger.new) {

update acc;

}

After:

List<Account> accountsToUpdate = new List<Account>();

for(Account acc : Trigger.new) {

accountsToUpdate.add(acc);

}

update accountsToUpdate;

✅ D) Query the Driving_Structure__c records outside of the loop

Running a query inside a loop issues one SOQL query per record and can quickly hit the governor limit of 100 SOQL queries per synchronous transaction. Instead, gather all needed IDs first, then perform one SOQL query outside the loop to fetch the related records.

Before:

for(Account acc : Trigger.new) {

Driving_Structure__c ds = [SELECT Id FROM Driving_Structure__c WHERE Account__c = :acc.Id];

}

After:

Set<Id> accountIds = new Set<Id>();

for(Account acc : Trigger.new) {

accountIds.add(acc.Id);

}

Map<Id, Driving_Structure__c> structuresById = new Map<Id, Driving_Structure__c>(

[SELECT Id, Account__c FROM Driving_Structure__c WHERE Account__c IN :accountIds]

);

This bulkifies the code and keeps it governor-limit safe.

❌ Incorrect Answers:

A) Change the trigger context to after update, after insert

The trigger context (before vs after insert/update) depends on what you need to do in the logic:

Use before triggers when you need to modify field values before they’re saved.

Use after triggers when you need to access record IDs or make related object updates.

Changing the context without understanding the business logic could break functionality. This option is not a general best practice unless there's a clear reason based on trigger requirements.

B) Remove the DML statement

Completely removing the DML statement would likely prevent the necessary changes to the records from being saved to the database. The goal is to optimize the DML (by using collections and avoiding repetition), not eliminate it unless it’s truly unnecessary — which is unlikely in a trigger context designed to update data.

🔗 Reference:

Apex Developer Guide – Bulk Trigger Best Practices

Trailhead – Apex Triggers

SOQL Best Practices

An environment has two Apex triggers: an after-update trigger on Account and an after-update trigger on Contact.

The Account after-update trigger fires whenever an Account's address is updated, and it updates every associated Contact with that address. The Contact after-update trigger fires on every edit, and it updates every Campaign Member record related to the Contact with the Contact's state.

Consider the following: A mass update of 200 Account records' addresses, where each Account has 50 Contacts. Each Contact has one Campaign Member. This means there are 10,000 Contact records across the Accounts and 10,000 Campaign Member records across the Contacts.

What will happen when the mass update occurs?

A. There will be no error and all updates will succeed, since the limit on the number of records processed by DML statements was not exceeded.

B. The mass update of Account address will succeed, but the Contact address updates will fail due to exceeding number of records processed by DML statements.

C. There will be no error, since each trigger fires within its own context and each trigger does not exceed the limit of the number of records processed by DML statements.

D. The mass update will fail, since the two triggers fire in the same context, thus exceeding the number of records processed by DML statements.

Explanation:

To determine the outcome of a mass update of 200 Account records' addresses, where each Account has 50 Contacts (totaling 10,000 Contacts) and each Contact has one Campaign Member (totaling 10,000 Campaign Members), we need to analyze the behavior of the two Apex triggers in the context of Salesforce governor limits. The Account after-update trigger updates all associated Contacts with the Account's address, and the Contact after-update trigger updates all related Campaign Members with the Contact's state. Key governor limits to consider include the maximum number of records processed by DML statements (10,000) and the context in which triggers execute.
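The row counts in this scenario can be tallied with a few lines of arithmetic. The sketch below is only a calculation, not Salesforce code; it assumes, as the Apex governor limit documentation describes, that records processed by DML statements are counted cumulatively across a single transaction, including DML issued by cascading triggers.

```javascript
// Counts taken from the scenario.
const accounts = 200;
const contactsPerAccount = 50;
const campaignMembersPerContact = 1;

// Governor limit on total records processed by DML statements in one transaction.
const DML_ROW_LIMIT = 10000;

// Rows updated by the two triggers within the same transaction.
function triggerDmlRows() {
  const contacts = accounts * contactsPerAccount;               // updated by the Account trigger
  const campaignMembers = contacts * campaignMembersPerContact; // updated by the Contact trigger
  return contacts + campaignMembers;
}

const rows = triggerDmlRows();
console.log(rows, rows > DML_ROW_LIMIT); // 20000 true
```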

Correct Answer: D. The mass update will fail, since the two triggers fire in the same context, thus exceeding the number of records processed by DML statements

Option D is correct because governor limits are enforced per transaction, not per trigger. The Account update, the Contact updates issued by the Account trigger, and the Campaign Member updates issued by the Contact trigger all run in one transaction. That transaction attempts to update 10,000 Contacts and then 10,000 Campaign Members, 20,000 rows in total, against a limit of 10,000 records processed by DML statements. A LimitException is thrown once the limit is crossed. It cannot be caught, and the entire transaction, including the Account changes, is rolled back.

Incorrect Answer:

Option A: There will be no error and all updates will succeed, since the limit on the number of records processed by DML statements was not exceeded

Option A is incorrect because the limit is exceeded. The Contact updates alone consume the full 10,000-row allowance, so the first Campaign Member update pushes the transaction over the limit.

Option B: The mass update of Account address will succeed, but the Contact address updates will fail due to exceeding number of records processed by DML statements

Option B is incorrect because a governor limit violation does not produce a partial success. The Account update, the Contact updates, and the Campaign Member updates share one transaction, so when the limit is exceeded everything is rolled back, including the Account address changes.

Option C: There will be no error, since each trigger fires within its own context and each trigger does not exceed the limit of the number of records processed by DML statements

Option C is incorrect because cascading triggers do not get their own governor limits. A trigger fired by DML inside another trigger runs in the same transaction and draws on the same limits, so the 10,000 Contact rows and the 10,000 Campaign Member rows are counted together.

Reference:

Salesforce Apex Developer Guide: "Triggers" - Section on Trigger Context.

A developer is writing a Jest test for a Lightning web component that conditionally displays child components based on a user's checkbox selections. What should the developer do to properly test that the correct components display and hide for each scenario?

A. Create a new describe block for each test.

B. Reset the DOM after each test with the afterEach() method.

C. Add a teardown block to reset the DOM after each test.

D. Create a new jsdom instance for each test.

Explanation:

When testing Lightning Web Components (LWC) with Jest, it's essential to ensure that each test case runs in isolation. If the DOM isn't reset between tests, leftover elements or mutated state from a previous test can cause false positives, unpredictable failures, or test flakiness.

The recommended and correct way to reset the DOM is to use Jest’s afterEach() hook to clear the DOM and component state after each test:

afterEach(() => {

// clean up DOM

while (document.body.firstChild) {

document.body.removeChild(document.body.firstChild);

}

});

This ensures that each test starts with a clean DOM, which is especially critical when testing conditional rendering (like showing/hiding child components based on checkbox selection). Failing to do this can cause multiple test cases to interfere with each other.

❌ Incorrect Answers:

A) Create a new describe block for each test

Using a separate describe block for each test is not required and not the correct way to isolate DOM behavior. describe blocks are used to group tests and apply shared beforeEach() or afterEach() logic, but they don’t themselves clean up the DOM. This approach adds unnecessary complexity and doesn't address the core problem of DOM state persistence.

C) Add a teardown block to reset the DOM after each test

There is no teardown block in Jest. Jest uses beforeEach() and afterEach() for setup and teardown, respectively. "Teardown block" is an incorrect or non-existent terminology in Jest's lifecycle methods. This option may sound valid but is not syntactically or conceptually correct for Jest.

D) Create a new jsdom instance for each test

While it's theoretically possible to spin up a new jsdom instance for each test, it's overkill and not necessary in typical Jest testing for LWC. Jest automatically runs within a single shared jsdom environment, and you can reset the DOM simply by clearing document.body. Creating a new jsdom would complicate your tests and go against Salesforce's recommended Jest testing practices.

🔗 Reference:

Salesforce LWC Jest Test Guide

Jest Documentation – Setup and Teardown

Trailhead – LWC Testing with Jest

A company accepts orders for customers in their enterprise resource planning (ERP) system that must be integrated into Salesforce as Order__c records with a lookup field to Account. The Account object has an external ID field, ERP_Customer_ID__c.

What should the integration use to create new Order__c records that will automatically be related to the correct Account?

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

To integrate order data from an enterprise resource planning (ERP) system into Salesforce as Order__c records with a lookup field to the Account object, the integration must efficiently relate the new records to the correct Account using the external ID field ERP_Customer_ID__c. The solution should minimize manual mapping, ensure data integrity, and leverage Salesforce's data manipulation language (DML) operations that support external ID-based relationships. Let's evaluate each option based on these requirements.

Correct Answer: D. Upsert on the Order__c object and specify the ERP_Customer_ID__c for the Account relationship

Option D, "Upsert on the Order__c object and specify the ERP_Customer_ID__c for the Account relationship," is the optimal choice. The upsert operation creates or updates Order__c records, and the Account lookup on each record can be populated by external ID instead of by Salesforce record ID. By specifying ERP_Customer_ID__c through the lookup's relationship on the Order__c record, Salesforce matches the corresponding Account and establishes the relationship without the integration having to query for the Account ID first. This method is efficient, supports bulk operations, and aligns with integration best practices.

Incorrect Answer:

Option A: Upsert on the Account and specify the ERP_Customer_ID__c for the relationship

Option A suggests performing the upsert on the Account object. An upsert on Account creates or updates Account records matched on ERP_Customer_ID__c; it does not create Order__c records. The orders are the records being integrated, so the DML has to target Order__c, with the Account external ID used only to resolve the lookup.

Option B: Insert on the Order__c object followed by an update on the related Account object

Option B proposes inserting Order__c records and then updating the related Account object. This two-step process is inefficient and impractical. The Order__c insert would lack the correct Account ID in the lookup field, so a separate query and a second DML operation would be needed to establish the relationship using ERP_Customer_ID__c. Updating the Account does not populate the lookup on Order__c in any case. The approach adds complexity, risks data inconsistencies, and consumes extra DML operations that count against governor limits in bulk scenarios. It is not a streamlined solution for this integration.

Option C: Merge on the Order__c object and specify the ERP_Customer_ID__c for the Account relationship

Option C suggests using a merge operation on Order__c with ERP_Customer_ID__c for the Account relationship. However, the merge operation in Salesforce is used to combine duplicate records within the same object (e.g., merging duplicate Accounts), not to establish relationships or create new records with lookups. Applying merge to Order__c with an Account external ID is invalid and does not support the creation of new Order__c records related to Account. This option is incorrect for the given requirement.
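The external ID relationship described in the correct answer can be sketched as a REST API upsert request. This is an illustration under assumptions: Account__r is taken to be the relationship name of the lookup, ERP_Order_ID__c is a hypothetical external ID field on Order__c, and the API version and function name are placeholders.

```javascript
// Build a REST upsert request for one Order__c record.
// Hypothetical names: Account__r, ERP_Order_ID__c, and the v58.0 API version.
function buildOrderUpsert(erpOrderId, erpCustomerId, fields) {
  return {
    method: 'PATCH',
    path: '/services/data/v58.0/sobjects/Order__c/ERP_Order_ID__c/' +
      encodeURIComponent(erpOrderId),
    body: {
      ...fields,
      // Reference the parent Account by its external ID instead of its record ID.
      Account__r: { ERP_Customer_ID__c: erpCustomerId }
    }
  };
}

const request = buildOrderUpsert('SO-1001', 'CUST-42', { Name: 'Order SO-1001' });
console.log(JSON.stringify(request.body));
```

The nested Account__r object is what lets Salesforce resolve the lookup from the ERP customer number, so the integration never needs to know the Account's record ID.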

Reference:

Salesforce Apex Developer Guide: "Upsert" - Section on External ID Relationships.

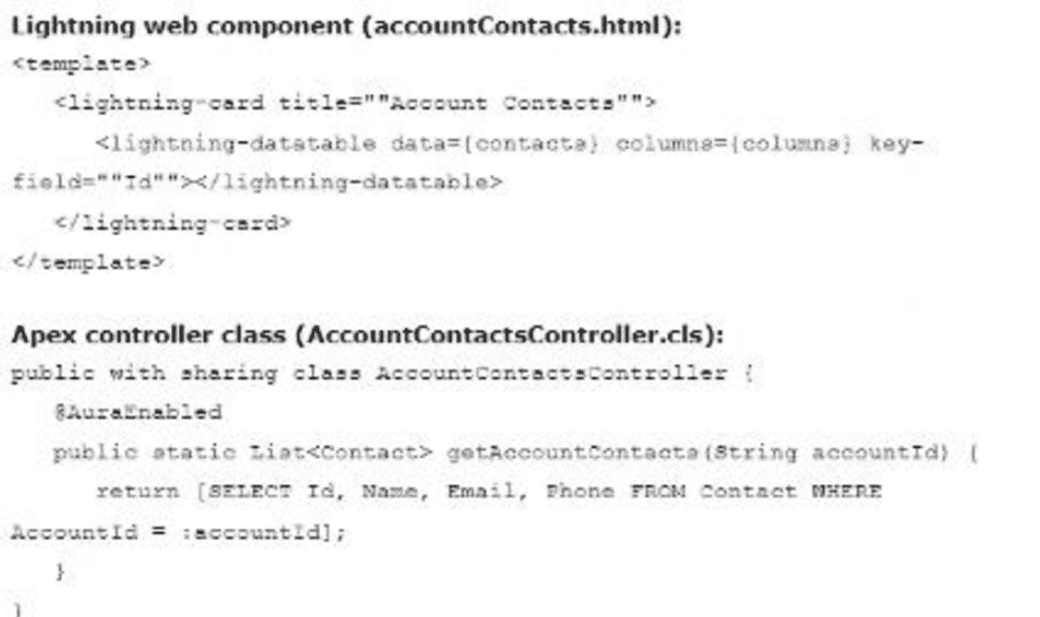

Universal Containers analyzes a Lightning web component and its Apex controller class that retrieves a list of contacts associated with an account. The code snippets are as follows:

Based on the code snippets, what change should be made to display the contacts' mailing addresses in the Lightning web component?

A. Add a new method in the Apex controller class to retrieve the mailing addresses separately and modify the Lightning web component to invoke this method.

B. Extend the lightning-datatable component in the Lightning web component to include a column for the MailingAddress field.

C. Modify the SOQL query in the getAccountContacts method to include the MailingAddress field.

D. Modify the SOQL query in the getAccountContacts method to include the MailingAddress field and update the columns attribute in javascript file to add Mailing address fields.

Explanation:

To display the contacts' mailing addresses in the Lightning web component, the developer needs to ensure that the MailingAddress field data is retrieved from Salesforce and properly rendered in the lightning-datatable. The current code retrieves Id, Name, Email, and Phone fields for Contacts via the Apex controller method getAccountContacts, but it does not include MailingAddress. The solution must update the data retrieval and configure the datatable to display this additional field effectively.

Correct Answer: D. Modify the SOQL query in the getAccountContacts method to include the MailingAddress field and update the columns attribute in JavaScript file to add Mailing address fields

Option D is the correct approach. The MailingAddress field must be added to the SOQL query in the getAccountContacts method (e.g., SELECT Id, Name, Email, Phone, MailingAddress FROM Contact WHERE AccountId = :accountId) to retrieve the data from Salesforce. Additionally, the columns attribute in the JavaScript file of the Lightning web component must be updated to include a new column definition for MailingAddress (e.g., { label: 'Mailing Address', fieldName: 'MailingAddress' }). This ensures the datatable displays the mailing addresses alongside other fields, aligning with LWC best practices and the Platform Developer II exam requirements for data presentation.

Incorrect Answer:

Option A: Add a new method in the Apex controller class to retrieve the mailing addresses separately and modify the Lightning web component to invoke this method

Option A suggests adding a separate Apex method to retrieve MailingAddress and updating the component to call it. While technically possible, this approach is inefficient, as it requires an additional server call, increasing latency and complexity. The existing getAccountContacts method can be modified to include MailingAddress in a single query, avoiding the need for a new method. This overcomplicates the solution and is unnecessary when a single SOQL update suffices, making it less optimal.

Option B: Extend the lightning-datatable component in the Lightning web component to include a column for the MailingAddress field

Option B proposes extending the lightning-datatable to include a MailingAddress column. However, lightning-datatable is a base component that cannot be extended directly; its behavior is configured via the columns and data attributes. Without updating the SOQL query to include MailingAddress, the data won't be available, and adding a column without data will result in errors or empty cells. This approach lacks the necessary backend change, rendering it incomplete and incorrect.

Option C: Modify the SOQL query in the getAccountContacts method to include the MailingAddress field

Option C correctly identifies the need to modify the SOQL query to include MailingAddress (e.g., SELECT Id, Name, Email, Phone, MailingAddress FROM Contact WHERE AccountId = :accountId), which retrieves the data. However, it does not address the frontend requirement to display this field in the lightning-datatable. The columns attribute in the JavaScript file must also be updated to define a MailingAddress column. Without this step, the retrieved data remains unused, making this option partially correct but insufficient for the full solution.
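The front-end half of the change can be sketched as a columns definition for lightning-datatable. The original component code is not reproduced here, so the base columns are assumed from the fields the explanation lists. The sketch also assumes the query returns the individual address fields (MailingStreet, MailingCity, and so on) rather than only the compound MailingAddress value, since a plain text column expects a scalar value.

```javascript
// Assumed existing columns, based on the fields already queried.
const baseColumns = [
  { label: 'Name', fieldName: 'Name' },
  { label: 'Email', fieldName: 'Email', type: 'email' },
  { label: 'Phone', fieldName: 'Phone', type: 'phone' }
];

// New columns for the mailing address parts; each fieldName must also appear in the SOQL query.
const addressColumns = [
  { label: 'Mailing Street', fieldName: 'MailingStreet' },
  { label: 'Mailing City', fieldName: 'MailingCity' },
  { label: 'Mailing State', fieldName: 'MailingState' },
  { label: 'Mailing Postal Code', fieldName: 'MailingPostalCode' },
  { label: 'Mailing Country', fieldName: 'MailingCountry' }
];

// Value assigned to the component's columns property.
const columns = [...baseColumns, ...addressColumns];
```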

Reference:

Salesforce LWC Developer Guide: "lightning-datatable".

Salesforce Apex Developer Guide: "SOQL Queries".

Universal Containers develops a Salesforce application that requires frequent interaction with an external REST API.

To avoid duplicating code and improve maintainability, how should they implement the API integration for code reuse?

A. Use a separate Apex class for each API endpoint to encapsulate the integration logic.

B. Include the API integration code directly in each Apex class that requires it.

C. Create a reusable Apex class for the API integration and invoke it from the relevant Apex classes.

D. Store the API integration code as a static resource and reference it in each Apex class.

Explanation:

To implement an external REST API integration for a Salesforce application that requires frequent interaction, while avoiding code duplication and improving maintainability, the solution must centralize the integration logic in a reusable manner. The approach should align with Apex best practices, support scalability, and allow multiple classes to leverage the integration without redundant code. Let's evaluate each option based on these principles.

Correct Answer: C. Create a reusable Apex class for the API integration and invoke it from the relevant Apex classes

Option C is the optimal solution. By creating a reusable Apex class (e.g., ApiIntegrationService) to handle the REST API calls, including methods for authentication, request construction, and response parsing, Universal Containers can centralize the integration logic. Other Apex classes can then invoke this class's methods as needed, reducing duplication and ensuring consistent behavior. This approach enhances maintainability, as updates to the API logic are made in one place, and it aligns with object-oriented design principles. For the Platform Developer II exam, this demonstrates proficiency in reusable code design.

Incorrect Answer:

Option A: Use a separate Apex class for each API endpoint to encapsulate the integration logic

Option A suggests creating a separate Apex class for each API endpoint, which encapsulates the integration logic but leads to code duplication if common functionality (e.g., authentication, error handling) is repeated across classes. This approach increases maintenance overhead, as changes to the API structure require updates to multiple classes. While it provides encapsulation, it lacks the reusability needed for frequent interactions, making it less efficient and not ideal for a scalable Salesforce application.

Option B: Include the API integration code directly in each Apex class that requires it

Option B involves embedding the API integration code directly into each Apex class that needs it. This results in significant code duplication, as the same REST call logic (e.g., HTTP requests, error handling) is replicated across classes. Such an approach complicates maintenance, as any API change requires updating every class, increasing the risk of errors and violating DRY (Don't Repeat Yourself) principles. This is highly inefficient and unsuitable for a maintainable Salesforce solution.

Option D: Store the API integration code as a static resource and reference it in each Apex class

Option D proposes storing the API integration code as a static resource (e.g., JavaScript or a script) and referencing it in Apex classes. However, Apex cannot directly execute JavaScript from static resources, and this approach is impractical for server-side logic. Static resources are better suited for client-side code or assets, not for reusable Apex logic. This method would require complex workarounds (e.g., Visualforce bridges), making it inefficient and incompatible with the requirement for Apex-based integration.

Reference:

Salesforce Apex Developer Guide: "Calling External Objects Using REST API".

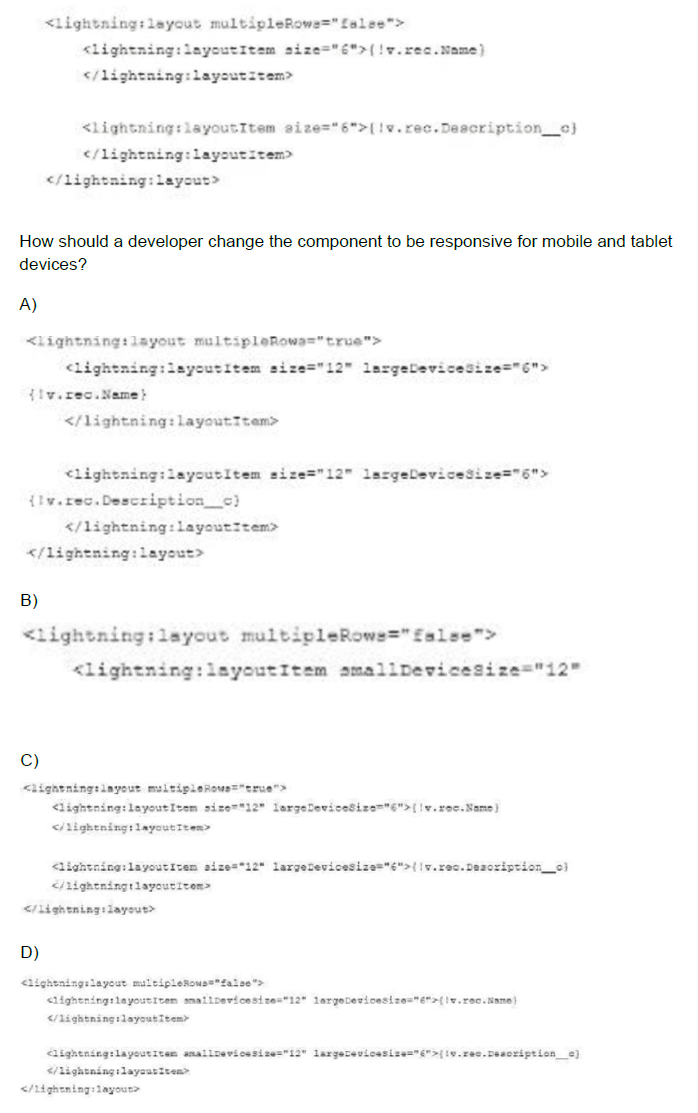

An Aura component has a section that displays some information about an Account and it works well on the desktop, but users have to scroll horizontally to see the description field output on their mobile devices and tablets.

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

The Aura component uses a lightning:layout to display Account information, including Name and Description, within lightning:layoutItem components. The current setup with multipleRows="false" and fixed size="12" for each item causes the layout to span a single row, which works on desktops but requires horizontal scrolling on mobile and tablet devices due to limited screen width. To make the component responsive, the layout must adapt to smaller screen sizes by adjusting the size attribute based on device type, ensuring content fits without scrolling.

Correct Answer: Option D

Option D is the correct solution. By setting multipleRows="false" and using smallDeviceSize="12" and largeDeviceSize="6" on each lightning:layoutItem, the layout adjusts dynamically. On mobile and tablet devices (classified as small devices), each item takes the full 12-column width, stacking them vertically to avoid horizontal scrolling. On larger devices (e.g., desktops), each item uses 6 columns, fitting both Name and Description side by side. This responsive design leverages Salesforce's built-in breakpoints, ensuring usability across devices, which is critical for the Platform Developer II exam.

Incorrect Answers:

Option A:

Option A uses a fixed size="12" for the Name item and size="6" for the Description item with multipleRows="false". This configuration forces both items into a single row, but the total size (12 + 6 = 18) exceeds the 12-column grid, causing overflow and horizontal scrolling on smaller screens. The lack of device-specific sizing (smallDeviceSize or largeDeviceSize) prevents responsiveness, making it ineffective for mobile and tablet devices, thus unsuitable for the requirement.

Option B:

Option B sets smallDeviceSize="12" for the Name item but leaves the Description item without a specified size, defaulting to the parent layout size. With multipleRows="false", both items are forced into one row, and the unspecified size for Description can lead to inconsistent rendering, potentially causing overflow or truncation on small devices. This approach lacks a comprehensive device-specific strategy, failing to ensure the layout is fully responsive for mobile and tablet users.

Option C:

Option C uses multipleRows="true" with size="12" and largeDeviceSize="6". On small devices, size="12" stacks the items vertically, which is responsive, but multipleRows="true" can lead to unpredictable wrapping behavior depending on content. On large devices, largeDeviceSize="6" fits both items side by side, but the initial size="12" overrides responsiveness on smaller screens if not consistently applied. This hybrid approach lacks clarity and precision, making it less reliable than Option D for consistent mobile and tablet responsiveness.

Reference:

Salesforce Aura Components Developer Guide: "lightning:layout".

Universal Containers wants to notify an external system, in the event that an unhandled exception occurs, by publishing a custom event using Apex. What is the appropriate publish/subscribe logic to meet this requirement?

A. Publish the error event using the EventBus.publish() method and have the external system subscribe to the event using CometD.

B. Publish the error event using the addError() method and write a trigger to subscribe to the event and notify the external system.

C. Have the external system subscribe to the event channel. No publishing is necessary.

D. Publish the error event using the addError() method and have the external system subscribe to the event using CometD.

Explanation:

To notify an external system when an unhandled exception occurs by publishing a custom event using Apex, the solution must leverage Salesforce's publish/subscribe model, which supports real-time event messaging. This requires publishing the event from Apex and enabling the external system to subscribe to it, typically using a mechanism like CometD, a popular library for real-time communication. The approach should align with Salesforce's event-driven architecture and ensure the external system can react to the exception.

Correct Answer: A. Publish the error event using the EventBus.publish() method and have the external system subscribe to the event using CometD

Option A is the correct approach. The EventBus.publish() method in Apex publishes Platform Events, whose custom fields can carry details of the exception (e.g., error message, stack trace) as the payload. The external system can subscribe to the event channel using CometD, a messaging library that implements the Bayeux protocol, which Salesforce's Streaming API uses to deliver events over long polling. This setup enables asynchronous notification, adheres to Salesforce's event-driven model, and is ideal for integrating with external systems, making it suitable for the Platform Developer II exam context.
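The publish step described above can be sketched in Apex as follows. This is a minimal illustration, not the exam's reference code: the platform event `Error_Notification__e`, its fields `Message__c` and `Stack_Trace__c`, and the class name `ErrorEventPublisher` are all hypothetical and would have to be defined in the org first.

```apex
// Minimal sketch. Error_Notification__e, Message__c and Stack_Trace__c are
// assumed names for a custom platform event and its text fields.
public with sharing class ErrorEventPublisher {

    // Builds an error event from the exception and publishes it on the event bus.
    public static void publish(Exception ex) {
        Error_Notification__e evt = new Error_Notification__e(
            Message__c = ex.getMessage(),
            Stack_Trace__c = ex.getStackTraceString()
        );

        // EventBus.publish returns a SaveResult that reports whether the
        // event was queued for publishing.
        Database.SaveResult result = EventBus.publish(evt);
        if (!result.isSuccess()) {
            for (Database.Error err : result.getErrors()) {
                System.debug(LoggingLevel.ERROR,
                    'Publish failed: ' + err.getStatusCode() + ' - ' + err.getMessage());
            }
        }
    }
}
```

A caller would typically invoke `ErrorEventPublisher.publish(ex)` from a `catch` block. Under these assumed names, the external CometD client would subscribe to the channel `/event/Error_Notification__e`. If the exception is rethrown and the transaction rolls back, the event is only delivered when its publish behavior is set to Publish Immediately rather than Publish After Commit.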

Incorrect Answers:

Option B: Publish the error event using the addError() method and write a trigger to subscribe to the event and notify the external system

Option B is incorrect because the addError() method marks a record with a custom error message and blocks the DML operation on it, surfacing the message to the user; it does not publish events for external consumption. Apex triggers can subscribe to platform events, but addError() never publishes one, so the trigger would have nothing to subscribe to. This approach misunderstands the publish/subscribe model and lacks a mechanism for external integration, rendering it invalid.

Option C: Have the external system subscribe to the event channel. No publishing is necessary

Option C suggests that the external system can subscribe to an event channel without any publishing from Apex. However, the publish/subscribe model requires an event to be published (e.g., via EventBus.publish()) before a subscriber can receive it. Without Apex publishing the unhandled exception event, the external system has nothing to subscribe to, making this approach ineffective. This option overlooks the necessity of event generation, which is critical for the requirement.

Option D: Publish the error event using the addError() method and have the external system subscribe to the event using CometD

Option D is incorrect because addError() does not publish events; it only sets an error state within Salesforce for user feedback. Even with CometD, the external system cannot subscribe to an event that isn’t published via a mechanism like Platform Events. This approach confuses error handling with event publishing, and without a valid publish step (e.g., EventBus.publish()), the external system cannot receive notifications. This makes it an unsuitable solution for the given requirement.

Reference:

Platform Events Developer Guide

Salesforce CometD Documentation

Universal Containers is using a custom Salesforce application to manage customer support cases. The support team needs to collaborate with external partners to resolve certain cases. However, they want to control the visibility and access to the cases shared with the external partners. Which Salesforce feature can help achieve this requirement?

A. Role hierarchy

B. Criteria-based sharing rules

C. Apex managed sharing

D. Sharing sets

Explanation:

To manage customer support cases in a custom Salesforce application where the support team collaborates with external partners while controlling visibility and access, the solution must allow selective sharing with external users (e.g., partners) based on specific conditions. The feature should integrate with Salesforce's security model, support external access (e.g., via Community or Partner portals), and provide granular control over case sharing.

Correct Answer: C. Apex managed sharing

Option C, Apex managed sharing, is the appropriate feature. Apex managed sharing allows developers to programmatically share records, such as cases, with specific users or groups, including external partners, using custom logic. This is ideal for controlling visibility and access based on complex criteria (e.g., case type, priority, or partner role) that standard sharing rules might not handle. By writing Apex triggers or classes that insert share records (for cases, rows in the CaseShare object), Universal Containers can dynamically grant access to external partners, ensuring security and collaboration. This aligns with the Platform Developer II exam's focus on advanced security customization.
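As a rough illustration of the share-record approach, the sketch below grants one partner user read access to one case. The class and method names are hypothetical, and it assumes the org-wide default for Case is Private or Public Read Only, since otherwise there is nothing for a share row to add.

```apex
// Minimal sketch. CasePartnerSharing and shareWithPartner are assumed names.
public without sharing class CasePartnerSharing {

    // Grants read access on a single case to a single partner user.
    // Returns true if the share row was inserted successfully.
    public static Boolean shareWithPartner(Id targetCaseId, Id partnerUserId) {
        CaseShare share = new CaseShare(
            CaseId = targetCaseId,
            UserOrGroupId = partnerUserId,
            CaseAccessLevel = 'Read'
            // RowCause is left unset: shares created through Apex on
            // standard objects use the manual row cause.
        );

        // allOrNone = false so a failure is reported in the result
        // instead of throwing a DmlException.
        Database.SaveResult result = Database.insert(share, false);
        return result.isSuccess();
    }
}
```

A trigger or service class could call `CasePartnerSharing.shareWithPartner(caseId, userId)` only for the cases that meet the collaboration criteria, and delete the corresponding `CaseShare` row when the partner's access should end.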

Incorrect Answers:

Option A: Role hierarchy

Option A, Role hierarchy, defines access based on an organization's internal user roles (e.g., managers vs. subordinates) and automatically grants access to records owned by users below in the hierarchy. However, it is designed for internal users and does not natively support sharing with external partners, such as those in a Community or Partner portal. While it can influence visibility, it lacks the flexibility to control access for external collaborators, making it unsuitable for this requirement.

Option B: Criteria-based sharing rules

Option B, Criteria-based sharing rules, allow sharing records with users or groups based on field values (e.g., case status or priority). While useful for internal sharing, they are limited to predefined criteria and do not directly support sharing with external partners unless those partners are part of a Community with specific profiles or roles. This feature lacks the dynamic, programmatic control needed to manage external partner access on a case-by-case basis, rendering it less effective here.

Option D: Sharing sets

Option D, Sharing sets, are used in Communities to grant access to records (e.g., cases) for Community users based on their profile and a common field (e.g., Account ID). While this can share cases with external partners in a Community, it provides broad access based on predefined rules rather than granular control per case or partner. It is less flexible for managing visibility and access dynamically, making it less suitable than Apex managed sharing for Universal Containers' specific collaboration needs.

Reference:

Salesforce Security and Sharing Guide: "Apex Managed Sharing".
