A company is planning a disaster recovery site and needs to ensure that a single natural disaster would not result in the complete loss of regulated backup data. Which of the following should the company consider?

A. Geographic dispersion

B. Platform diversity

C. Hot site

D. Load balancing

Explanation

The core requirement is to protect regulated backup data from a "single natural disaster." This implies that the company's primary data center and its backup location could both be vulnerable if they are located close to each other (e.g., both in the same city or seismic zone). The goal is to create a strategy where the backup is immune to the same regional disaster that affects the primary site.

Why A. Geographic Dispersion is the Correct Answer

Geographic dispersion (or geographic diversity) is the practice of physically separating IT resources, such as data centers and backup vaults, across different geographic regions.

How it addresses the requirement:

By storing backup data at a site located a significant distance away from the primary site, the company ensures that a single natural disaster (e.g., hurricane, earthquake, flood, wildfire) is highly unlikely to impact both locations simultaneously. A hurricane striking the East Coast of the United States will not affect a backup facility on the West Coast or in a different country.

Regulatory Compliance:

The question specifically mentions "regulated backup data." Many regulations (e.g., financial, healthcare) explicitly require or strongly recommend geographic dispersion of backups as a control to ensure data availability and integrity in the face of a major disaster. This makes it not just a best practice, but often a compliance necessity.
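As a rough illustration of the separation described above, the following Python sketch computes the great-circle distance between two sites and compares it with a minimum separation. The site coordinates, the `is_dispersed` helper, and the 500 km threshold are assumptions chosen for illustration. No regulation mentioned here prescribes that figure, and distance alone does not prove that two sites sit in different hazard zones.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_dispersed(primary, backup, min_km=500.0):
    """True if the backup site is at least min_km away (assumed threshold)."""
    return haversine_km(*primary, *backup) >= min_km


# Hypothetical site coordinates as (latitude, longitude) in degrees.
NEW_YORK = (40.71, -74.01)
NEWARK = (40.74, -74.17)
LOS_ANGELES = (34.05, -118.24)

print(is_dispersed(NEW_YORK, NEWARK))       # nearby site, same region
print(is_dispersed(NEW_YORK, LOS_ANGELES))  # opposite coast
```

A check like this is only a first filter; a real site-selection review would also look at flood plains, fault lines, and shared utilities.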

Why the Other Options Are Incorrect

B. Platform diversity

What it is:

Platform diversity involves using different types of hardware, operating systems, or software vendors to avoid a single point of failure caused by a vendor-specific vulnerability or outage.

Why it's incorrect:

While valuable for mitigating risks like software zero-day exploits or hardware failures, platform diversity does not protect against a large-scale physical threat like a natural disaster. If both the primary and backup systems are in the same geographic area, a flood will destroy them regardless of whether one runs on Windows and the other on Linux.

C. Hot site

What it is:

A hot site is a fully operational, ready-to-use disaster recovery facility that has all the necessary hardware, software, and network connectivity to quickly take over operations. It is a type of recovery site, not a strategy for protecting the backups themselves.

Why it's incorrect:

The question is about preventing the loss of backup data. A hot site is a consumer of backups; it needs recent backups to restore services. If the backup data itself is stored at or near the hot site, and that site is hit by the same disaster, the data is lost. The company must first use geographic dispersion to protect the backup data, and then they can decide to use a hot site (which should also be geographically dispersed) for recovery.

D. Load balancing

What it is:

Load balancing distributes network traffic or application requests across multiple servers to optimize resource use, maximize throughput, and prevent any single server from becoming a bottleneck.

Why it's incorrect:

Load balancing is a technique for enhancing performance and availability during normal operations. It is not a disaster recovery or data protection mechanism. It does nothing to protect the actual data from loss in a disaster. If the data center is destroyed, the load balancer and all the servers behind it are gone.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 3.4: Explain the importance of resilience and recovery in security architecture.

Specifically, it tests knowledge of Recovery Sites and the strategies that make them effective. A core principle of disaster recovery planning is ensuring that recovery sites are geographically distant from primary operations to mitigate the risk of large-scale regional disasters. This is a fundamental concept in ensuring business continuity.

A company would like to provide employees with computers that do not have access to the internet in order to prevent information from being leaked to an online forum. Which of the following would be best for the systems administrator to implement?

A. Air gap

B. Jump server

C. Logical segmentation

D. Virtualization

Explanation

The company's goal is absolute: to ensure certain computers "do not have access to the internet" to prevent data exfiltration to online forums. This requires a solution that creates a total, physical barrier to external networks.

Why A. Air Gap is the Correct Answer

An air gap is a security measure that involves physically isolating a computer or network from any other network that could pose a threat, especially from unsecured networks like the internet.

How it works:

An air-gapped system has no physical wired connections (like Ethernet cables) and no wireless interfaces (like Wi-Fi or Bluetooth cards) enabled or installed. It is a standalone system with no network connectivity whatsoever.

Meeting the Requirement:

Of the options listed, this is the only one that removes every network path to the internet. With no route to an external network, information cannot be leaked directly from that machine to an online forum. Data can leave only on physical media (e.g., USB drives), which must be controlled with strict policies.

Why the Other Options Are Incorrect

B. Jump server

What it is:

A jump server (or bastion host) is a heavily fortified computer that provides a single, controlled point of access into a secure network segment from a less secure network (like the internet). Administrators first connect to the jump server and then "jump" from it to other internal systems.

Why it's incorrect:

A jump server is reachable from a less trusted network by design. Its purpose is to manage access to segregated networks, not to prevent internet access. The computers behind the jump server remain networked, so a path to other networks still exists, even if it is controlled. This does not meet the requirement of having "no access."

C. Logical segmentation

What it is:

Logical segmentation (often achieved through VLANs or firewall rules) involves dividing a network into smaller subnetworks to control traffic flow and improve security. It restricts communication between segments.

Why it's incorrect:

Logical segmentation is a software-based control. It relies on configurations that can be changed, misconfigured, or bypassed through hacking techniques like VLAN hopping. While it can be used to block internet access with a firewall rule, it does not physically remove the capability. The network interface is still active and presents a potential attack surface. It does not provide the absolute, physical guarantee required by the scenario.

D. Virtualization

What it is:

Virtualization involves creating a software-based (virtual) version of a computer, server, storage device, or network resource.

Why it's incorrect:

A virtual machine (VM) is entirely dependent on the host system's network configuration. If the host computer has internet access, the VM can also be given internet access through virtual networking. Virtualization is a method of organizing compute resources, not a method of enforcing network isolation. An administrator could easily create a VM with no internet access, but that would be achieved through logical segmentation (virtual network settings), not virtualization itself.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 3.1: Compare and contrast security implications of different architecture models.

Part of secure network design is understanding isolation techniques. Network Segmentation (logical) and Air Gapping (physical) are key concepts. The exam requires you to know the difference and apply the correct one based on the security requirement. An air gap is the most secure form of isolation and is used for highly sensitive systems (e.g., military networks, industrial control systems, critical infrastructure).

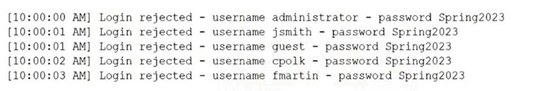

A security analyst is reviewing the following logs:

Which of the following attacks is most likely occurring?

A. Password spraying

B. Account forgery

C. Pass-the-hash

D. Brute-force

Explanation

To identify the attack, we must analyze the pattern of the login attempts shown in the log:

Multiple Usernames:

The attacker is trying many different usernames (administrator, jsmith, guest, cpolk, fmartin).

Single Password:

Every single login attempt is using the exact same password: Spring2023.

Rapid Pace:

The attempts are happening in rapid succession, one per second.

This pattern is the definitive signature of a password spraying attack.

Why A. Password Spraying is the Correct Answer

Password spraying is a type of brute-force attack that avoids account lockouts by using a single common password against a large number of usernames before moving on to try a second common password.

How it works:

Instead of trying many passwords against one username (which quickly triggers lockout policies), the attacker takes one password (e.g., Spring2023, Password1, Welcome123) and "sprays" it across a long list of usernames. This technique flies under the radar of lockout policies that are triggered by excessive failed attempts on a single account.

Matching the Log:

The log shows the attacker using one password (Spring2023) against five different user accounts in just a few seconds. This is a classic example of a password spray in progress.
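The pattern described above (one password, many usernames) can be sketched as a simple grouping check. This is a minimal illustration in Python, not a production detector: the `find_sprayed_passwords` name, the `(username, password)` event shape, and the threshold of three accounts are assumptions. Most real authentication logs do not record attempted passwords, so a practical detector would usually group failures by source address and time window instead.

```python
from collections import defaultdict


def find_sprayed_passwords(failed_logins, min_accounts=3):
    """Map each password tried against >= min_accounts usernames to those usernames."""
    accounts_by_password = defaultdict(set)
    for username, password in failed_logins:
        accounts_by_password[password].add(username)
    return {
        password: sorted(users)
        for password, users in accounts_by_password.items()
        if len(users) >= min_accounts
    }


# Hypothetical parsed events: (username, attempted password).
events = [
    ("administrator", "Spring2023"),
    ("jsmith", "Spring2023"),
    ("guest", "Spring2023"),
    ("jsmith", "hunter2"),
]

print(find_sprayed_passwords(events))
```

A traditional brute-force attack would show the opposite shape: many passwords grouped under a single username.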

Why the Other Options Are Incorrect

B. Account forgery

What it is:

This is not a standard term for a specific attack technique. It could loosely refer to creating a fake user account or spoofing an identity, but it does not describe a pattern of automated authentication attempts. It is a distractor.

C. Pass-the-hash

What it is:

Pass-the-hash is a lateral movement technique used after an initial compromise. An attacker who has already compromised a system steals the hashed version of a user's password from memory. They then use that hash to authenticate to other systems on the network without needing to crack the plaintext password.

Why it's incorrect:

This attack does not involve repeated login attempts with a plaintext password like Spring2023. It uses stolen cryptographic hashes and would not generate a log of "Login rejected - username - password" events.

D. Brute-force

What it is:

A brute-force attack is a general term for trying all possible combinations of characters to guess a password.

Why it's mostly incorrect:

While password spraying is technically a type of brute-force attack, it is a very specific subset. In standard security terminology, a "brute-force attack" typically implies trying many passwords against a single username (e.g., trying password1, password2, password3... against jsmith).

The Key Difference:

The pattern in the log shows one password against many usernames, which is the definition of spraying. A traditional brute-force would show many passwords against one username. Therefore, Password spraying (A) is the most accurate and specific description of the attack pattern observed.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 2.4: Given a scenario, analyze indicators of malicious activity.

Specifically, it tests your ability to analyze authentication logs to identify the TTP (Tactic, Technique, and Procedure) of an attacker. Recognizing the pattern of a password spraying attack is a critical skill for a security analyst, as it requires a different mitigation strategy (e.g., banning common passwords, monitoring for single passwords used across multiple accounts) than a traditional brute-force attack (which is best mitigated by account lockout policies).

A systems administrator would like to deploy a change to a production system. Which of the following must the administrator submit to demonstrate that the system can be restored to a working state in the event of a performance issue?

A. Backout plan

B. Impact analysis

C. Test procedure

D. Approval procedure

Explanation

The question centers on a key principle of change management: ensuring that any modification to a critical system can be safely reversed if it causes unexpected problems, such as performance degradation or system failure.

Why A. Backout Plan is the Correct Answer

A backout plan (also known as a rollback plan) is a predefined set of steps designed to restore a system to its previous, known-good configuration if a change fails or causes unacceptable disruption.

Purpose:

Its sole purpose is to provide a clear, documented path to reverse the change and return the system to a fully functional state as quickly as possible. This minimizes downtime and business impact.

Directly Addressing the Requirement:

The question asks for what demonstrates the system "can be restored to a working state in the event of a performance issue." A backout plan is explicitly this document. It details:

The specific steps to undo the change (e.g., run a specific script, restore from a backup, reboot with a previous configuration).

The personnel responsible for executing the rollback.

The conditions that trigger the backout procedure (e.g., "if CPU usage remains above 95% for more than 15 minutes").

The estimated time to complete the rollback.

Submitting a backout plan as part of a change request provides the Change Advisory Board (CAB) with the confidence that the administrator has considered the risks and has a concrete strategy for mitigation.
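The example trigger condition above ("CPU usage remains above 95% for more than 15 minutes") can be expressed as a small check. The sketch below is a Python illustration under stated assumptions: `should_back_out` is a hypothetical helper, and the input is assumed to be one CPU reading per minute, oldest first.

```python
def should_back_out(cpu_samples, threshold=95.0, window_minutes=15):
    """Return True if CPU stayed above threshold for more than window_minutes.

    cpu_samples: per-minute CPU utilisation percentages, oldest first
    (an assumed sampling scheme; each sample stands for one minute).
    """
    consecutive = 0
    for pct in cpu_samples:
        # Extend the streak while above threshold, otherwise reset it.
        consecutive = consecutive + 1 if pct > threshold else 0
        if consecutive > window_minutes:
            return True
    return False


print(should_back_out([97.0] * 16))                    # sustained overload
print(should_back_out([97.0] * 10 + [40.0] + [97.0] * 10))  # streak interrupted
```

Writing the trigger down this precisely in the change request removes ambiguity about when the rollback starts.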

Why the Other Options Are Incorrect

B. Impact analysis

What it is:

An impact analysis is a document that assesses the potential consequences of the proposed change. It identifies which systems, services, and business processes might be affected, and to what degree (e.g., "This change will cause a 5-minute outage for the billing department").

Why it's incorrect:

While an impact analysis is a critical component of a change request, it does not, by itself, demonstrate how to fix a problem. It explains what might break, but not how to restore service. The backout plan is the actionable procedure that follows from a well-done impact analysis.

C. Test procedure

What it is:

A test procedure outlines the steps that will be taken to validate that the change works correctly before it is deployed to production. This is often done in a staging or development environment that mimics production.

Why it's incorrect:

The test procedure is about proving the change works as intended. The question, however, is about what to do when it doesn't work. The test procedure helps reduce the likelihood of needing a backout plan but does not serve as the plan to restore the system if the change fails.

D. Approval procedure

What it is:

The approval procedure is the workflow that a change request must follow to be authorized. It defines who has the authority to approve different types of changes (e.g., a major change may require CIO approval, while a minor one may only need a team lead's approval).

Why it's incorrect:

This is the process for getting permission to make the change. It is unrelated to the technical steps required to restore the system to a working state if the change causes an issue.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 1.3: Explain the importance of change management processes and the impact to security.

A formal change management process is a key operational security control. The backout plan is a mandatory element of any well-structured change request for a production system. It is a fundamental risk mitigation tool that ensures the organization can maintain availability (a core tenet of the CIA triad) even when implementing necessary changes.

Which of the following scenarios describes a possible business email compromise attack?

A. An employee receives a gift card request in an email that has an executive's name in the display field of the email.

B. Employees who open an email attachment receive messages demanding payment in order to access files.

C. A service desk employee receives an email from the HR director asking for log-in credentials to a cloud administrator account.

D. An employee receives an email with a link to a phishing site that is designed to look like the company's email portal.

Explanation

A Business Email Compromise (BEC) is a sophisticated scam targeting businesses that conduct wire transfers and have suppliers with foreign origins. It is also known as "CEO Fraud" or "Executive Whaling." The goal is to trick an employee into transferring money or sensitive data to a fraudulent account.

Why A. is the Correct Answer

This scenario is a near-textbook example of a common BEC tactic:

Spoofing Authority:

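The attacker impersonates a high-level executive (e.g., CEO, CFO) by spoofing the display name in the email. The message appears to come from the executive because the display field shows the executive's name, even though the underlying sender address does not belong to the executive.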

Urgent, Seemingly Benign Request:

The request is for gift cards, a common BEC theme. The attacker often provides a plausible reason ("need them for employee rewards," "client gifts") and creates a sense of urgency ("I'm in a meeting, need this done now"). This pressures the employee to bypass normal verification procedures and comply quickly.

Goal:

The ultimate goal is financial fraud. The employee is instructed to purchase the gift cards and send the codes to the attacker, resulting in an untraceable financial loss for the company.

This option perfectly captures the social engineering and impersonation elements that define a BEC attack.
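The display-name spoofing described above can be illustrated with a short check on the `From` header. This Python sketch uses the standard library's `email.utils.parseaddr`; the `looks_like_display_name_spoof` helper, the executive list, and the `example.com` domain are hypothetical, and a real mail filter would also evaluate SPF, DKIM, and DMARC results.

```python
from email.utils import parseaddr


def looks_like_display_name_spoof(from_header, executives, company_domain):
    """Flag mail whose display name is an executive but whose address is external.

    executives: set of lowercase executive names (assumed to be maintained elsewhere).
    """
    display_name, address = parseaddr(from_header)
    sender_domain = address.rpartition("@")[2].lower()
    name_matches = display_name.strip().lower() in executives
    return name_matches and sender_domain != company_domain.lower()


executives = {"jane doe"}  # hypothetical executive

# Executive's name in the display field, but an outside address.
print(looks_like_display_name_spoof(
    "Jane Doe <ceo.office@mail.example.net>", executives, "example.com"))

# Same name sent from the company's own domain.
print(looks_like_display_name_spoof(
    "Jane Doe <jane.doe@example.com>", executives, "example.com"))
```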

Why the Other Options Are Incorrect

B. Employees who open an email attachment receive messages demanding payment in order to access files.

What this describes:

This is a classic ransomware attack. The malicious attachment (e.g., a macro-laden Word document) executes code that encrypts the user's files. The demand for payment (usually in cryptocurrency) to decrypt them is the hallmark of ransomware. While delivered via email, the objective and method are different from BEC.

C. A service desk employee receives an email from the HR director asking for log-in credentials to a cloud administrator account.

What this describes:

This is a standard phishing or spear-phishing attack aimed at credential theft. The attacker is impersonating a trusted figure to steal login information. While this shares the impersonation element with BEC, the ultimate goal is different. BEC is primarily focused on financial fraud (e.g., wire transfers, gift cards), not credential theft. Credential theft can be a step in a BEC campaign, but the scenario described is more directly a credential phishing attempt.

D. An employee receives an email with a link to a phishing site that is designed to look like the company's email portal.

What this describes:

This is another clear example of a general phishing attack. The goal is to steal the employee's username and password by tricking them into entering their credentials on a fake login page. Again, while credential theft can enable further attacks (including BEC), this scenario itself describes the mechanics of a standard phishing operation, not the specific financial fraud objective of a BEC.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 2.2: Explain common threat vectors and attack surfaces.

BEC is a high-impact form of social engineering that relies heavily on pretexting (creating a fabricated scenario) and impersonation (spoofing a high-authority figure). Understanding the nuances between different email-based threats like phishing, ransomware, and BEC is a critical skill for the exam and for real-world security analysis.

Which of the following vulnerabilities is associated with installing software outside of a manufacturer’s approved software repository?

A. Jailbreaking

B. Memory injection

C. Resource reuse

D. Side loading

Explanation

The question describes the act of bypassing the official, curated source for applications (like the Apple App Store or Google Play Store) to install software from a third-party or unofficial source.

Why D. Side loading is the Correct Answer

Side loading is the specific term for installing an application on a device without using the manufacturer's official app store or approved distribution mechanism.

How it's done:

On Android, this typically involves allowing installs from "Unknown sources" (labeled "Install unknown apps" on newer versions) in the security settings. On iOS, it is much more difficult without first jailbreaking the device.

Associated Vulnerability:

Side loading bypasses the security review processes (like sandboxing, code signing, and malware scanning) that official app stores enforce. This creates a significant vulnerability, as users can inadvertently install:

Malware:

Malicious software disguised as a legitimate app.

Spyware:

Software that steals personal information.

Poorly secured apps:

Applications that have not been vetted for security best practices, potentially introducing vulnerabilities to the device.

The vulnerability is the introduction of unvetted, potentially malicious code onto the system.

Why the Other Options Are Incorrect

A. Jailbreaking

What it is:

Jailbreaking (on iOS) or rooting (on Android) is the process of removing software restrictions imposed by the operating system manufacturer to gain privileged control (root access) over the device.

Relationship to Side loading:

Jailbreaking is often a prerequisite for side loading on heavily restricted devices like iPhones. However, they are not the same thing. Jailbreaking is the act of removing restrictions; side loading is one of the actions you can perform after those restrictions are removed. The question is about the vulnerability associated with installing the software itself, not the act of unlocking the device to allow it.

B. Memory injection

What it is:

Memory injection (e.g., DLL injection, process hollowing) is a class of attacks in which malicious code is inserted into the memory space of a running process, often a legitimate one, so that it executes with that process's privileges.

Why it's incorrect:

This is a low-level technical software vulnerability. It is not directly related to the method of software installation (official store vs. third-party). An app from an official store could have a memory injection vulnerability if it was poorly coded.

C. Resource reuse

What it is:

Resource reuse refers to vulnerabilities that can occur when a system fails to properly clear sensitive information from memory, storage, or other resources before reallocating them to a new process or user. An attacker could potentially access the residual data.

Why it's incorrect:

This is another low-level technical vulnerability related to how a system manages its memory and resources. Like memory injection, it is unrelated to the source of an application's installation.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 2.3: Explain various types of vulnerabilities.

A key mitigation technique for endpoint security, especially on mobile devices, is to restrict software installation sources. Enforcing policies that prevent side loading is a fundamental security control to reduce the risk of malware infection and data compromise. Understanding the term "side loading" and its associated risks is essential for managing modern device security.

A security manager is implementing MFA and patch management. Which of the following would best describe the control type and category? (Select two).

A. Physical

B. Managerial

C. Detective

D. Administrator

E. Preventative

F. Technical

Explanation

To answer this, we need to understand the two common ways security controls are classified:

Control Category:

This describes what the control is and how it is implemented. The categories are Technical, Managerial, Operational, and Physical.

Control Type (Function):

This describes what the control does. The types are Preventative, Deterrent, Detective, Corrective, Compensating, and Directive.

Let's analyze the two security measures being implemented:

1. Multi-Factor Authentication (MFA)

What it is:

A technical system that requires a user to provide two or more verification factors to gain access to a resource.

Control Category (What it is):

MFA is implemented through software, hardware, or protocols. Therefore, it is a Technical control.

Control Function (What it does):

The primary purpose of MFA is to stop unauthorized access before it happens. It prevents an attacker from gaining access even if they have a username and password. Therefore, its function is Preventative.

2. Patch Management

What it is:

The process of acquiring, testing, and installing patches (code changes) on systems to fix vulnerabilities.

Control Category (What it is):

While the policy that mandates patching is a Managerial control, the act of implementing the patches involves deploying software updates to systems, servers, and applications. This is a hands-on, technical process. Therefore, the implementation is a Technical control.

Control Function (What it does):

The primary purpose of patching is to fix known software vulnerabilities. By fixing these holes, it prevents attackers from being able to exploit them. Therefore, its function is Preventative.

Why the Other Options Are Incorrect

A. Physical:

Physical controls are tangible, real-world objects like fences, locks, security guards, and CCTV cameras. Neither MFA nor patch management is a physical item; both are digital processes.

B. Managerial:

Managerial controls are administrative in nature. They are the policies, procedures, and guidelines that govern how security is implemented. The policy requiring MFA or a patching schedule would be managerial. However, the question specifies the security manager is "implementing" them, which is the technical execution of those policies.

C. Detective:

Detective controls are designed to identify and alert on security events as they are happening or after they have occurred. Examples include intrusion detection systems (IDS) and log monitoring. MFA and patching do not detect incidents; they work to stop them from happening in the first place.

D. Administrator:

This is a distractor. "Administrator" is a job role, not a standard category or function for classifying security controls.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 1.1: Compare and contrast various types of security controls.

A core security concept is understanding and applying security controls. You must be able to classify controls by their category (Technical, Managerial, Operational, Physical) and by their type, or function (Preventative, Detective, Corrective, etc.). MFA and patch management are two of the most critical technical, preventative controls in any security program.

A security engineer is installing an IPS to block signature-based attacks in the environment. Which of the following modes will best accomplish this task?

A. Monitor

B. Sensor

C. Audit

D. Active

Explanation

An Intrusion Prevention System (IPS) is a network security technology that examines network traffic to identify and respond to malicious activity. A key feature that distinguishes it from its cousin, the Intrusion Detection System (IDS), is its ability to actively block threats.

Why D. Active is the Correct Answer

The question explicitly states the goal is to "block" signature-based attacks. This requires a mode of operation where the IPS can take automated, corrective action.

Active Mode:

In this mode, the IPS is placed inline with the network traffic (meaning all traffic must pass through it). When it detects a packet that matches the signature of a known attack (like a specific exploit or malware), it can immediately take actions to block the threat. These actions include:

Dropping the malicious packets.

Resetting the connection (sending a TCP RST packet to both ends).

Blocking all future traffic from the offending source IP address for a period of time.

"Best Accomplish This Task":

Because the requirement is to block attacks, the Active mode is the only one designed to perform this function automatically and in real-time.
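The difference between blocking and merely alerting can be sketched in a few lines. The Python example below is a toy model, not how any particular IPS product works: the `SIGNATURES` patterns, the `handle_packet` function, and the mode names are assumptions for illustration.

```python
# Hypothetical byte patterns standing in for attack signatures.
SIGNATURES = {b"/etc/passwd", b"' OR 1=1"}


def handle_packet(payload, mode):
    """Return (forwarded, alert) for a payload under 'active' or 'monitor' mode."""
    if mode not in ("active", "monitor"):
        raise ValueError(f"unknown mode: {mode}")
    if not any(signature in payload for signature in SIGNATURES):
        return True, None              # clean traffic passes silently
    alert = "signature match"
    if mode == "active":
        return False, alert            # inline device drops the packet
    return True, alert                 # passive device only raises an alert


print(handle_packet(b"GET /../../etc/passwd", "active"))
print(handle_packet(b"GET /../../etc/passwd", "monitor"))
```

In both modes the signature is detected; only the active mode prevents the payload from being forwarded.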

Why the Other Options Are Incorrect

A. Monitor

What it is:

Monitor mode is typically used by an Intrusion Detection System (IDS). The sensor is connected to a network port configured for port mirroring (SPAN) and sees a copy of all traffic. It analyzes this traffic and generates alerts for suspicious activity.

Why it's incorrect:

An IDS in monitor mode is a passive, detective control. It "monitors" and "alerts" but does not block traffic. It cannot stop an attack; it can only tell you that one is happening. This does not meet the requirement to "block."

B. Sensor

What it is:

A "sensor" is not an operational mode; it is the physical or virtual appliance itself that performs the monitoring or prevention. You install a sensor and then configure it to operate in a specific mode (e.g., Active or Monitor).

Why it's incorrect:

This is a distractor. The question asks for the mode the sensor should be configured for, not what is being installed.

C. Audit

What it is:

Audit mode is similar to monitor mode. It is a passive mode where the IPS/IDS records events and generates alerts for later analysis and auditing. Its primary function is logging and alerting, not blocking.

Why it's incorrect:

Like monitor mode, audit mode is passive. It might write to a log or send an email to a security analyst, but it will not actively block the malicious traffic. It does not fulfill the requirement to "block."

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 3.2: Given a scenario, apply security principles to secure enterprise infrastructure.

Part of secure network design is understanding the placement and function of security appliances like firewalls, IPS, and IDS. A key distinction is between preventative controls (IPS in Active mode) and detective controls (IDS in Monitor/Audit mode). The exam requires you to know that an IPS must be inline and in an Active mode to block threats.

Which of the following alert types is the most likely to be ignored over time?

A. True positive

B. True negative

C. False positive

D. False negative

Explanation

This question tests your understanding of security alert fatigue and the real-world implications of different types of alerts generated by security systems like IDS, IPS, or SIEM.

Let's first define the terms:

True Positive:

A legitimate attack that is correctly detected and alerted. (Good)

True Negative:

Normal, legitimate activity that is correctly ignored. (Good)

False Positive:

Normal, legitimate activity that is incorrectly flagged as an attack. (Bad)

False Negative:

A legitimate attack that is not detected. (Very Bad)
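The four definitions above reduce to two yes/no questions: was the activity actually an attack, and did the system raise an alert? A minimal Python sketch, with `classify` as a hypothetical helper name:

```python
def classify(is_attack, alert_raised):
    """Name the outcome for one event given ground truth and system behaviour."""
    if is_attack and alert_raised:
        return "true positive"
    if is_attack and not alert_raised:
        return "false negative"
    if not is_attack and alert_raised:
        return "false positive"
    return "true negative"


# Benign activity that still triggered an alert.
print(classify(is_attack=False, alert_raised=True))
```

Only the two outcomes with `alert_raised=True` ever reach an analyst's queue, which matters for the question of which alerts get ignored.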

Why C. False Positive is the Correct Answer

False positives are the primary cause of alert fatigue.

What is Alert Fatigue?

This is a phenomenon where security analysts become desensitized to security alerts due to the high volume of notifications, many of which turn out to be harmless.

Why False Positives Cause It:

When a security tool constantly generates alerts for benign activity (e.g., alerting on a user's legitimate remote login, flagging a safe application as malware), analysts waste time and resources investigating non-issues.

The Result of Alert Fatigue:

Over time, analysts begin to subconsciously assume that new alerts are also false alarms. This leads to them:

Prioritizing alerts lower.

Investigating them more slowly.

Ignoring them altogether.

The Ultimate Danger:

This complacency creates an environment where a true positive (an actual attack) could easily be missed because it looks identical to the hundreds of false positives that came before it.

Why the Other Options Are Incorrect

A. True positive

A true positive is a correctly identified attack. This is what security teams are paid to find. Analysts will not ignore a confirmed attack; they will investigate and remediate it. These alerts validate the purpose of the security team.

B. True negative

This is normal activity that the system correctly does not generate an alert for. Since no alert is produced, there is nothing for an analyst to see or ignore. This is the ideal, silent operation of a well-tuned system.

D. False negative

A false negative is the most dangerous type of alert failure, but it is not "ignored" by analysts—it is completely unseen. The security system failed to generate any alert at all, so the analyst has no opportunity to investigate or ignore it. The attack proceeds undetected.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 4.4: Explain security alerting and monitoring concepts and tools.

A key part of incident response is the analysis phase. Alert fatigue, caused predominantly by false positives, directly hinders effective analysis. The exam expects you to understand the operational challenges of managing security tools and the importance of tuning them (adjusting rules and sensitivity) to reduce false positives and make the alert queue manageable and actionable.
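One way to decide which rules to tune first is to measure, per rule, what fraction of past alerts turned out to be real. The following is a minimal sketch under the assumption that analysts record a verdict for each triaged alert; the rule names, the `history` data, and the 0.5 threshold are hypothetical.

```python
from collections import defaultdict


def rule_precision(triaged):
    """triaged: iterable of (rule_id, was_real_attack) verdicts from analysts."""
    totals = defaultdict(lambda: [0, 0])  # rule_id -> [real attacks, total alerts]
    for rule_id, was_real in triaged:
        totals[rule_id][1] += 1
        if was_real:
            totals[rule_id][0] += 1
    return {rule: real / total for rule, (real, total) in totals.items()}


def rules_to_tune(triaged, min_precision=0.5):
    """Return rules whose alerts are mostly false positives, sorted by name."""
    precision = rule_precision(triaged)
    return sorted(rule for rule, p in precision.items() if p < min_precision)


# Hypothetical triage history.
history = (
    [("remote-login", False)] * 4
    + [("remote-login", True)]
    + [("malware-hash", True)] * 3
    + [("malware-hash", False)]
)
noisy = rules_to_tune(history)
```

Here the `remote-login` rule is right only one time in five, so it is the first candidate for adjusting thresholds or adding exclusions.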

A U.S.-based cloud-hosting provider wants to expand its data centers to new international locations. Which of the following should the hosting provider consider first?

A. Local data protection regulations

B. Risks from hackers residing in other countries

C. Impacts to existing contractual obligations

D. Time zone differences in log correlation

Explanation

Expanding a cloud business internationally is a complex legal and operational undertaking. While all the options are important considerations, one is a fundamental prerequisite that governs all others and carries significant legal and financial consequences if not addressed first.

Why A. Local Data Protection Regulations is the Correct Answer

Data sovereignty and data protection laws are the most critical and immediate concerns when establishing a physical data center presence in a new country.

Legal Compliance:

Every country (and sometimes regions within countries) has its own unique set of laws governing how data must be stored, processed, and protected. The most famous example is the European Union's General Data Protection Regulation (GDPR), which imposes strict rules on data transfer outside the EU and severe fines for non-compliance. Other countries have similar regulations (e.g., Brazil's LGPD, China's PIPL).

Impact on Business Model:

These regulations can dictate:

Where data can be stored:

Some countries mandate that certain types of data (e.g., citizen data, financial records, government data) must reside on servers physically located within the country's borders.

How data can be transferred:

Regulations may prohibit or restrict moving data out of the country.

Data subject rights:

Laws grant individuals specific rights (e.g., right to be forgotten, right to access) that the provider must be able to operationally support.

First Consideration:

Understanding these local laws is the first and most important step because they will determine:

If the provider can even offer services in that location.

What architectural and security controls must be in place from the start.

Who they can sell to and what data they can handle.

Failure to consider this first can lead to massive fines, legal battles, and an inability to operate in that market.
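Residency and transfer rules of this kind often end up encoded as a placement policy that is checked before data is written. The sketch below is purely illustrative: the country codes "AA", "BB", and "CC" are placeholders, and the rules do not represent any actual law.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResidencyRule:
    must_stay_in_country: bool
    allowed_transfer_targets: frozenset = frozenset()


# Hypothetical rules keyed by (country of origin, data category).
RULES = {
    ("AA", "citizen_data"): ResidencyRule(must_stay_in_country=True),
    ("BB", "citizen_data"): ResidencyRule(False, frozenset({"AA"})),
}


def may_store(origin: str, category: str, target: str) -> bool:
    """Decide whether data from `origin` may be stored in `target`."""
    rule = RULES.get((origin, category))
    if rule is None:
        return False  # no reviewed rule yet: deny until legal has assessed it
    if target == origin:
        return True
    if rule.must_stay_in_country:
        return False
    return target in rule.allowed_transfer_targets
```

Denying by default when no rule exists reflects the point above: the regulations have to be understood before the provider can safely place data in a new location.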

Why the Other Options Are Incorrect (or Secondary)

B. Risks from hackers residing in other countries

This is a general cybersecurity threat that exists everywhere. While the threat landscape may vary by region, it is a constant. The provider will need to implement a robust security framework regardless of location. This is an operational security concern that is addressed after the legal and regulatory framework is established.

C. Impacts to existing contractual obligations

This is a very important secondary consideration. The provider must review existing Service Level Agreements (SLAs) and contracts to ensure that moving data or services to a new international location doesn't violate terms promised to current customers (e.g., regarding data jurisdiction, performance, or availability). However, you cannot even begin to assess this impact until you first understand the local regulations that will shape your new offerings.

D. Time zone differences in log correlation

This is an operational and technical challenge for the Security Operations Center (SOC). While correlating logs across multiple time zones requires careful planning and tool configuration, it is a solvable engineering problem. It is a tactical concern that is addressed long after the strategic legal and business decisions have been made.
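The usual engineering answer is to normalize every timestamp to UTC before correlating. A minimal sketch, assuming each log source emits ISO 8601 timestamps with an explicit UTC offset; the sample log entries are invented for illustration.

```python
from datetime import datetime, timezone


def to_utc(timestamp: str) -> datetime:
    """Parse an ISO 8601 timestamp with an offset and convert it to UTC."""
    parsed = datetime.fromisoformat(timestamp)
    if parsed.tzinfo is None:
        # Without an offset the local time is ambiguous, so refuse to guess.
        raise ValueError(f"timestamp has no UTC offset: {timestamp!r}")
    return parsed.astimezone(timezone.utc)


def merge_logs(*sources):
    """Combine (timestamp, message) entries from all sources in UTC order."""
    events = [(to_utc(ts), msg) for source in sources for ts, msg in source]
    return sorted(events, key=lambda event: event[0])


# Hypothetical entries from two data centers.
tokyo = [("2024-03-01T09:00:05+09:00", "login success user=alice")]
new_york = [("2024-02-29T19:00:01-05:00", "login failure user=alice")]
timeline = merge_logs(tokyo, new_york)
```

Read as local wall-clock times, the two events appear to be fourteen hours and a calendar day apart; in UTC they are four seconds apart, with the failure first.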

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 5.4: Summarize elements of effective security compliance.

This objective covers compliance and legal considerations. Understanding the impact of jurisdictional laws and regulations on data is a fundamental aspect of organizational security policy. A company's security policies must be designed to ensure compliance with all applicable local laws, making this the paramount concern when expanding internationally.

A company relies on open-source software libraries to build the software used by its customers. Which of the following vulnerability types would be the most difficult to remediate due to the company's reliance on open-source libraries?

A. Buffer overflow

B. SQL injection

C. Cross-site scripting

D. Zero day

Explanation

The company's development model introduces a specific challenge: it does not have full control over the entire codebase it uses. It depends on external, third-party open-source libraries. The difficulty in remediating a vulnerability depends heavily on where that vulnerability exists and who is responsible for fixing it.

Why D. Zero Day is the Correct Answer

A Zero-day vulnerability is a flaw in software that is unknown to the vendor or developer who should be mitigating it. There are "zero days" of advance warning or time to prepare a patch.

Why it's the most difficult to remediate in this scenario:

Dependency on Upstream Fix:

If a zero-day is discovered in one of the open-source libraries the company uses, the company is completely dependent on the external maintainers of that library to:

Become aware of the issue.

Develop a patch.

Release a fixed version.

No Control Over Timeline:

The company has no control over how quickly this happens. The library maintainers might be volunteers with limited time, or the vulnerability might be complex and require a long time to fix properly.

Immediate Exploitation Risk:

Once the zero-day becomes public knowledge (or is discovered by attackers), it can be exploited immediately. During the window of time between public disclosure and the library maintainers releasing a patch, the company's software is critically vulnerable. Its developers can apply temporary mitigations, but fixing the root cause themselves would mean patching or forking third-party code they did not write. In practice they are forced to wait, making remediation extremely difficult.

This lack of control and the unpredictable timeline for an external fix make a zero-day in a dependency the most difficult scenario to remediate.

Why the Other Options Are Incorrect

A. Buffer overflow, B. SQL injection, C. Cross-site scripting

These are all common vulnerability types (e.g., CWE-120, CWE-89, CWE-79). The key differentiator is that these are typically introduced by the company's own developers in the custom code they write.

Why they are easier to remediate:

Because the vulnerability exists in code the company controls, its developers can:

Immediately diagnose the problem.

Write a patch.

Test the fix.

Deploy an update to their software.

The remediation timeline is entirely within the company's control. They don't have to wait for an external party. While fixing any bug takes effort, the process is straightforward and manageable compared to the helpless waiting involved in a third-party zero-day scenario.

Reference to Exam Objectives

This question aligns with the CompTIA Security+ (SY0-701) Exam Objective 2.3: Explain various types of vulnerabilities.

Zero-day vulnerabilities are a premier threat vector. The exam requires you to understand the unique challenge they pose, especially in modern software development which heavily relies on software supply chains (like open-source libraries). Managing this risk involves processes like Software Composition Analysis (SCA) to inventory dependencies and vigilant monitoring of sources for new vulnerability disclosures.
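At its core, Software Composition Analysis compares an inventory of dependencies against published advisories. The sketch below shows that matching step only. The package names, advisory IDs, and data layout are hypothetical, and real tools handle far richer version schemes than the dotted integers assumed here.

```python
def parse_version(text: str) -> tuple:
    """Turn '2.4.1' into (2, 4, 1); assumes plain dotted integers."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical dependency inventory and advisory feed.
INVENTORY = {"examplelib": "2.4.1", "otherlib": "1.0.0"}
ADVISORIES = [
    {"id": "ADV-0001", "package": "examplelib", "fixed_in": "2.4.3"},
    {"id": "ADV-0002", "package": "otherlib", "fixed_in": None},  # no patch yet
    {"id": "ADV-0003", "package": "unusedlib", "fixed_in": "9.9.9"},
]


def affected(inventory, advisories):
    """Return (advisory id, package, action) for each dependency at risk."""
    findings = []
    for advisory in advisories:
        installed = inventory.get(advisory["package"])
        if installed is None:
            continue  # the company does not use this package
        fixed_in = advisory["fixed_in"]
        if fixed_in is None:
            action = "no fix available; apply compensating controls"
            findings.append((advisory["id"], advisory["package"], action))
        elif parse_version(installed) < parse_version(fixed_in):
            action = f"upgrade to {fixed_in}"
            findings.append((advisory["id"], advisory["package"], action))
    return findings


findings = affected(INVENTORY, ADVISORIES)
```

The entry with no fixed version models the zero-day window described earlier: the inventory tells the company it is exposed, but the upstream patch does not exist yet.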

A spoofed identity was detected for a digital certificate. Which of the following are the type of unidentified key and the certificate that could be in use on the company domain?

A. Private key and root certificate

B. Public key and expired certificate

C. Private key and self-signed certificate

D. Public key and wildcard certificate

Explanation:

A "spoofed identity" in the context of a digital certificate means an attacker has created a certificate that fraudulently claims to represent a legitimate domain or entity (e.g., yourcompany.com).

C. Private key and self-signed certificate is correct.

This is the most likely scenario for a spoofed identity.

Self-Signed Certificate:

Anyone can create a self-signed certificate for any domain name without any validation or approval from a Certificate Authority (CA). This makes it trivial for an attacker to generate a certificate that spoofs a company's identity.

Private Key:

The attacker would possess the private key that corresponds to the public key in the fraudulent, self-signed certificate. This allows them to complete the TLS handshake and present the spoofed certificate to a user's browser. Because the certificate is not signed by a trusted CA, the browser displays a trust error, and the spoof succeeds only if the user clicks through the warning.
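A self-signed certificate names itself as its own issuer, which gives a quick first check. The sketch below assumes certificates are represented as dictionaries in the nested-tuple shape that Python's `ssl.SSLSocket.getpeercert()` returns; the sample certificates and the `trusted_roots` set are invented. Comparing names is only a heuristic, since real validation also verifies the signature chain.

```python
def name_key(rdns) -> frozenset:
    """Flatten a nested-tuple distinguished name into a comparable set."""
    return frozenset(pair for rdn in rdns for pair in rdn)


def classify_certificate(cert: dict, trusted_roots: set) -> str:
    subject = name_key(cert["subject"])
    issuer = name_key(cert["issuer"])
    if subject != issuer:
        return "CA-issued"  # the chain still has to be validated separately
    # Root CA certificates are self-signed too; trust-store membership
    # is what separates them from a self-signed spoof.
    return "trusted root" if subject in trusted_roots else "untrusted self-signed"


# Hypothetical certificates.
root_name = ((("organizationName", "Example Trust"),), (("commonName", "Example Root CA"),))
company = ((("commonName", "yourcompany.com"),),)

root_cert = {"subject": root_name, "issuer": root_name}
leaf_cert = {"subject": company, "issuer": root_name}
spoofed_cert = {"subject": company, "issuer": company}

trusted_roots = {name_key(root_name)}
verdict = classify_certificate(spoofed_cert, trusted_roots)
```

The spoofed certificate carries the company's name but vouches for itself, which is exactly why a browser refuses to trust it.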

A. Private key and root certificate is incorrect.

A root certificate is the top-most, highly protected certificate in a PKI hierarchy that belongs to a trusted Certificate Authority (CA). If an attacker had the private key for a legitimate root certificate, they could sign any certificate and it would be trusted by every browser and operating system. This is called a "compromised CA" and is an extremely severe, but much rarer, event than simple self-signed certificate spoofing.

B. Public key and expired certificate is incorrect.

The public key is not a secret; it is designed to be publicly shared. An expired certificate would cause a browser warning but would not inherently indicate a spoofed identity—it would just be an old, legitimate certificate.

D. Public key and wildcard certificate is incorrect.

A wildcard certificate (e.g., for *.yourcompany.com) is a legitimate type of certificate issued by a trusted CA. Like Option B, the public key is not secret. While an attacker might try to steal the private key for a wildcard certificate, the certificate itself is not inherently spoofed; it's a valid certificate that has been misused.

Reference:

CompTIA Security+ SY0-701 Objective 1.4: "Explain the importance of using appropriate cryptographic solutions." This objective covers public key infrastructure (PKI) concepts, including certificate types (like root, wildcard, and self-signed), the role of public/private keys, and common certificate issues, which include trust errors and identity spoofing.
