A company wants to track modifications to the code used to build new virtual servers. Which of the following will the company most likely deploy?

A. Change management ticketing system

B. Behavioral analyzer

C. Collaboration platform

D. Version control tool

Explanation:

The requirement is to track modifications to the code used to build servers. This is specifically about managing changes to source code or configuration scripts (e.g., Infrastructure as Code scripts like Terraform, Ansible, or Puppet).

D. Version control tool is correct.

A version control tool (e.g., Git, Subversion) is specifically designed to track every change made to code files. It records who made the change, when it was made, and what exactly was modified. This provides a complete history and audit trail of all modifications, which is essential for troubleshooting, rollback, and understanding the evolution of the codebase used to build infrastructure.
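The who/when/what record described above can be illustrated with a minimal sketch. This is not how Git or Subversion work internally; `Revision` and `TrackedFile` are hypothetical names for a toy in-memory history, and the file contents are made-up sample data.

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Revision:
    author: str          # who made the change
    timestamp: datetime  # when it was made
    content: str         # full file content after the change


@dataclass
class TrackedFile:
    """Hypothetical in-memory history for a single file."""
    revisions: list = field(default_factory=list)

    def commit(self, author: str, content: str) -> None:
        # Append a new revision instead of overwriting the old one.
        self.revisions.append(
            Revision(author, datetime.now(timezone.utc), content)
        )

    def diff(self, old: int, new: int) -> list:
        # Report exactly which lines changed between two revisions.
        a = self.revisions[old].content.splitlines()
        b = self.revisions[new].content.splitlines()
        return list(difflib.unified_diff(a, b, lineterm=""))


history = TrackedFile()
history.commit("alice", "instance_type = small\ncount = 2")
history.commit("bob", "instance_type = large\ncount = 2")
changes = history.diff(0, 1)
```

Because every revision is kept, an earlier version of the server build script can be inspected or restored, which is what makes rollback and auditing possible.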

A. Change management ticketing system is incorrect.

A change management system (e.g., Jira Service Desk, ServiceNow) is used to request, approve, and document changes to IT systems from a process perspective. While it might track that a code change was approved, it does not track the actual content of the code modifications themselves. That is the job of a version control system.

B. Behavioral analyzer is incorrect.

A behavioral analyzer is a security tool that monitors system or user behavior to detect anomalies that might indicate a threat. It is used for security monitoring and incident response, not for tracking code changes.

C. Collaboration platform is incorrect.

A collaboration platform (e.g., Microsoft Teams, Slack) is used for communication and file sharing. While teams might discuss code changes on these platforms, the platforms themselves do not version, track, or manage the code changes.

Reference:

CompTIA Security+ SY0-701 Objective 1.3: "Explain the importance of change management processes and the impact to security." Version control is listed under this objective, and using it to manage and track changes to Infrastructure as Code (IaC) configuration scripts is a key part of modern, secure development and operations practices.

Which of the following would be the most appropriate way to protect data in transit?

A. SHA-256

B. SSL 3.0

C. TLS 1.3

D. AES-256

Explanation:

Why C is Correct:

TLS (Transport Layer Security) is a cryptographic protocol designed specifically to provide security for data in transit over a network. TLS 1.3 is the latest and most secure version, offering improvements such as the removal of weak legacy algorithms, forward secrecy through ephemeral key exchange, and a shorter handshake that reduces latency compared to older versions. It is the standard protocol used to secure web traffic (HTTPS), email, VPNs, and other communications.
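As a small illustration, a client can refuse anything older than TLS 1.3. The sketch below uses Python's standard `ssl` module; the function name `make_tls13_client_context` is an assumption made for this example, and it presumes the local OpenSSL build supports TLS 1.3.

```python
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    # Start from the library defaults, which enable certificate
    # verification and hostname checking for client connections.
    ctx = ssl.create_default_context()
    # Reject SSL 3.0 and every TLS version older than 1.3.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


context = make_tls13_client_context()
```

A socket wrapped with this context fails the handshake if the server cannot negotiate TLS 1.3, rather than silently falling back to an older protocol.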

Why A is Incorrect:

SHA-256 is a cryptographic hashing algorithm. Its primary purpose is to verify data integrity (ensuring data has not been altered), not to protect data confidentiality in transit. It is often used within TLS for certificate signatures and integrity checks, but it does not encrypt data on its own.
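A short sketch of the integrity-checking role, using Python's standard `hashlib`. The messages are made-up sample data. Note that the digest reveals whether the data changed but does nothing to hide the data itself.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    # Return the SHA-256 digest as a 64-character hex string.
    return hashlib.sha256(data).hexdigest()


original = b"transfer 100 to account 42"
tampered = b"transfer 900 to account 42"

digest_original = sha256_hex(original)
digest_tampered = sha256_hex(tampered)

# Changing a single character produces a different digest,
# so the receiver can detect the alteration.
integrity_ok = digest_original == digest_tampered
```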

Why B is Incorrect:

SSL (Secure Sockets Layer) is the predecessor to TLS. SSL 3.0 is now considered obsolete and insecure due to known vulnerabilities (e.g., POODLE attack). It should never be used to protect data in transit.

Why D is Incorrect:

AES-256 is a symmetric encryption algorithm used to protect data at rest (e.g., encrypting files on a disk) or as part of securing data in transit (within protocols like TLS). However, AES-256 by itself is not a protocol for data in transit; it is a building block. TLS uses AES-256 (among other ciphers) to encrypt the data during transmission, but the protocol itself (TLS) is what provides the full suite of security services (encryption, authentication, integrity).

Reference:

This question falls under Domain 3.0: Security Architecture, specifically Objective 3.3 ("Compare and contrast concepts and strategies to protect data"), which covers data states and methods to secure data. Understanding the distinction between protocols (TLS), algorithms (AES, SHA), and their specific applications (data in transit vs. at rest) is critical for the SY0-701 exam. TLS 1.3 is the current best practice for securing data in transit.

Which of the following is prevented by proper data sanitization?

A. Hackers' ability to obtain data from used hard drives

B. Devices reaching end-of-life and losing support

C. Disclosure of sensitive data through incorrect classification

D. Incorrect inventory data leading to a laptop shortage

Explanation:

A) Hackers' ability to obtain data from used hard drives is the correct answer.

Proper data sanitization involves completely and irreversibly destroying or erasing data from storage devices (like hard drives, SSDs, etc.) before they are disposed of, recycled, or repurposed. This process ensures that any sensitive information previously stored on the device cannot be recovered by unauthorized individuals, including hackers who might acquire the used hardware. Techniques include physical destruction (e.g., shredding), cryptographic erasure, or using specialized software tools to overwrite data multiple times.

Why the others are incorrect:

B) Devices reaching end-of-life and losing support:

This is a lifecycle management issue related to patch management and vendor support cycles. Data sanitization deals with the data on the device, not the device's operational status or support eligibility.

C) Disclosure of sensitive data through incorrect classification:

Data classification is a process of categorizing data based on its sensitivity (e.g., public, internal, confidential). Incorrect classification is a procedural or human error that data sanitization cannot prevent. Sanitization removes data but does not address how data is labeled or handled during its active use.

D) Incorrect inventory data leading to a laptop shortage:

This is an operational or logistical issue related to asset management and inventory tracking. Data sanitization focuses on securing data at the end of a device's life, not on maintaining accurate counts of hardware assets.

Reference:

This question tests knowledge of SY0-701 Objective 4.2 ("Explain the security implications of proper hardware, software, and data asset management"), which covers disposal and decommissioning. Data sanitization is a critical practice for ensuring that sensitive data is not exposed when storage media are retired or reused. It aligns with data retention and disposal policies, which are key components of data protection strategies.

An organization is building a new backup data center with cost-benefit as the primary requirement and RTO and RPO values around two days. Which of the following types of sites is the best for this scenario?

A. Real-time recovery

B. Hot

C. Cold

D. Warm

Explanation:

A Cold Site is the most cost-effective type of backup facility. It is a physical location with basic infrastructure like power, cooling, and raised flooring, but it typically lacks pre-configured hardware, software, or current data. Because the Recovery Time Objective (RTO) and Recovery Point Objective (RPO) are both around two days, there is sufficient time to procure, deliver, and set up the necessary equipment and restore data from backups. This aligns perfectly with the primary requirement of cost-benefit, as a cold site has the lowest ongoing operational cost.

Why the others are incorrect:

A) Real-time recovery:

This is not a standard term for a site type. It describes a technology (like synchronous replication) that would support an RPO and RTO of zero, which is far more expensive and exceeds the requirements of this scenario.

B) Hot Site:

A hot site is a fully operational, mirrored duplicate of the primary data center with live, synchronized data and redundant systems. It allows for recovery within minutes or hours (very low RTO/RPO). This is the most expensive option and is not justified for a two-day recovery objective.

D) Warm Site:

A warm site is a middle-ground option. It has the hardware and software pre-installed and configured, but data is not actively synchronized; it is restored from recent backups. This offers a faster recovery than a cold site (often hours to a day) but at a higher cost. Since a two-day RTO/RPO is acceptable, the organization can choose the more cost-effective cold site.
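The trade-off between recovery time and cost can be sketched as a simple lookup. The thresholds below (4 hours and 24 hours) are illustrative assumptions loosely based on the descriptions above, not values from any standard, and `choose_site` is a hypothetical helper.

```python
def choose_site(rto_hours: float) -> str:
    """Pick the cheapest site type that can still meet the RTO.

    Thresholds are assumptions for illustration only.
    """
    if rto_hours < 4:
        # Minutes to a few hours: only a fully mirrored site can recover in time.
        return "hot"
    if rto_hours < 24:
        # Hours to a day: pre-installed hardware, data restored from backups.
        return "warm"
    # A day or more: enough time to procure and set up equipment.
    return "cold"


site_for_scenario = choose_site(48)  # RTO of around two days
```

With a two-day objective the function falls through to the cold site, matching the reasoning in the explanation.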

Reference:

This maps to SY0-701 Objective 5.2 ("Explain elements of the risk management process"), specifically business impact analysis, together with the site considerations covered under Objective 3.4 ("Explain the importance of resilience and recovery in security architecture"). Understanding the relationship between RTO/RPO, cost, and the types of recovery sites (cold, warm, hot) is a key part of developing a cost-effective business continuity strategy.

A group of developers has a shared backup account to access the source code repository. Which of the following is the best way to secure the backup account if there is an SSO failure?

A. RAS

B. EAP

C. SAML

D. PAM

Privileged Access Management (PAM) solutions enhance security by enforcing strong authentication, rotation of credentials, and access control for shared accounts. This is especially critical in scenarios like SSO failures.
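The check-out, audit, and rotation behavior can be sketched as follows. This is a toy model, not the API of any PAM product; `SharedAccountVault` and its methods are hypothetical names, and the credential is generated with Python's standard `secrets` module.

```python
import secrets


class SharedAccountVault:
    """Hypothetical vault guarding one shared backup account."""

    def __init__(self, authorized_users):
        self._authorized = set(authorized_users)
        self._password = secrets.token_urlsafe(24)
        self.audit_log = []

    def check_out(self, user: str) -> str:
        # Access control: only approved users may obtain the credential.
        if user not in self._authorized:
            self.audit_log.append((user, "denied"))
            raise PermissionError(f"{user} may not use the shared account")
        self.audit_log.append((user, "checked_out"))
        return self._password

    def check_in(self, user: str) -> None:
        # Rotation: the old credential stops working after each use.
        self._password = secrets.token_urlsafe(24)
        self.audit_log.append((user, "rotated"))


vault = SharedAccountVault(["dev1", "dev2"])
first = vault.check_out("dev1")
vault.check_in("dev1")
second = vault.check_out("dev2")
```

Each use of the shared account is attributed to a named user in the audit log, which is what a plain shared password cannot provide.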

An organization has a new regulatory requirement to implement corrective controls on a financial system. Which of the following is the most likely reason for the new requirement?

A. To defend against insider threats altering banking details

B. To ensure that errors are not passed to other systems

C. To allow for business insurance to be purchased

D. To prevent unauthorized changes to financial data

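Corrective controls act after a problem has been detected: they remediate the error and limit its effects, for example by restoring data from a known-good version. On a financial system, this keeps errors from being passed to other systems, which points to option B. Preventing unauthorized changes to financial data (option D) is the role of preventive controls, and auditing is a detective control.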

Which of the following aspects of the data management life cycle is most directly impacted by local and international regulations?

A. Destruction

B. Certification

C. Retention

D. Sanitization

Explanation:

Data retention is the practice of keeping data for a specific period of time. This aspect of the data lifecycle is most directly and comprehensively governed by a complex web of local, national, and international regulations. These laws mandate how long an organization must retain certain types of data (e.g., financial records, healthcare information, customer transaction data) for compliance, auditing, and legal discovery purposes. Failure to adhere to these mandated retention periods can result in severe legal penalties, fines, and loss of compliance status.
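A retention rule can be expressed as a date calculation. The periods in `RETENTION_DAYS` are hypothetical placeholders; real values come from the regulations and policies that apply to a given organization, and the function names are assumptions for this sketch.

```python
from datetime import date, timedelta

# Hypothetical retention periods in days (illustrative only).
RETENTION_DAYS = {
    "financial_record": 7 * 365,
    "access_log": 90,
}


def retention_expiry(record_type: str, created: date) -> date:
    # The date on which the mandated retention period ends.
    return created + timedelta(days=RETENTION_DAYS[record_type])


def may_be_destroyed(record_type: str, created: date, today: date) -> bool:
    # Data must be kept until the retention period has fully elapsed.
    return today >= retention_expiry(record_type, created)


expiry = retention_expiry("access_log", date(2024, 1, 1))
too_early = may_be_destroyed("access_log", date(2024, 1, 1), date(2024, 3, 1))
allowed = may_be_destroyed("access_log", date(2024, 1, 1), date(2024, 4, 1))
```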

Analysis of Incorrect Options:

A. Destruction & D. Sanitization:

While destruction and sanitization are critically important for security and privacy, they typically take place at the end point of a retention policy. Regulations often specify that data must be securely destroyed after its mandated retention period expires, but the primary regulatory driver is first defining how long the data must be kept. Retention determines when destruction or sanitization may occur.

B. Certification:

Certification refers to the process of validating that a system, product, or process meets a specific set of standards (e.g., ISO 27001 certification, FIPS 140-2 certification). While achieving certification may be a regulatory or contractual requirement in some industries, it is not a core phase of the data management life cycle itself. Regulations directly dictate the retention phase, and adherence to those regulations may be audited as part of a certification.

Reference:

This question falls under Domain 5.0: Security Program Management and Oversight, specifically concerning compliance and data governance. Key regulations like GDPR (EU), HIPAA (US), SOX (US), and various financial industry rules have explicit and detailed data retention requirements that directly shape an organization's data management policies.

A security professional discovers a folder containing an employee's personal information on the enterprise's shared drive. Which of the following best describes the data type the security professional should use to identify organizational policies and standards concerning the storage of employees' personal information?

A. Legal

B. Financial

C. Privacy

D. Intellectual property

Explanation:

Privacy data and policies specifically address the handling, storage, and protection of personal information (e.g., employee data, customer data). This includes guidelines on where such data should be stored, who can access it, and how it must be secured to comply with regulations like GDPR, HIPAA, or CCPA. The security professional should refer to the organization's privacy policies to determine the proper protocols for storing employees' personal information and to identify any violations.

Why the others are incorrect:

A. Legal:

While legal considerations may underlie privacy policies (e.g., compliance with laws), the term "legal" is too broad. Privacy is the specific domain governing personal data.

B. Financial:

This pertains to monetary matters, such as accounting records or financial transactions, not personal information storage.

D. Intellectual property:

This refers to creations of the mind (e.g., patents, trademarks, trade secrets), not personal data about employees.

Reference:

This aligns with SY0-701 Objective 5.1 ("Summarize elements of effective security governance"). Privacy policies are a key component of data governance, as emphasized in frameworks like ISO/IEC 27701 (Privacy Information Management) and regulations such as GDPR. They define how organizations must protect personal data to avoid breaches and ensure compliance.

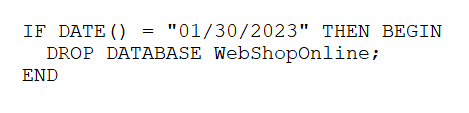

A company's online shopping website became unusable shortly after midnight on January

30, 2023. When a security analyst reviewed the database server, the analyst noticed the

following code used for backing up data:

Which of the following should the analyst do next?

A. Check for recently terminated DBAs

B. Review WAF logs for evidence of command injection

C. Scan the database server for malware

While conducting a business continuity tabletop exercise, the security team becomes concerned by potential impacts if a generator fails during failover. Which of the following is the team most likely to consider in regard to risk management activities?

A. RPO

B. ARO

C. BIA

D. MTTR

Explanation:

MTTR (Mean Time To Repair) is the most appropriate metric in this scenario. The team's concern is about the generator failing during a critical failover event. The immediate risk management question is: "If this generator fails, how long will it take us to repair or replace it to restore power?" MTTR directly measures this average repair time, which is crucial for understanding the potential duration of a power outage and its impact on business operations.
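MTTR is simply the average of observed repair durations. The sketch below uses made-up repair times for a generator; `mean_time_to_repair` is a hypothetical helper built on Python's standard `statistics` module.

```python
from statistics import mean


def mean_time_to_repair(repair_hours):
    # MTTR = total repair time / number of repairs.
    if not repair_hours:
        raise ValueError("at least one repair duration is required")
    return mean(repair_hours)


# Hypothetical durations (in hours) of three past generator repairs.
generator_repairs = [4.0, 6.0, 8.0]
mttr = mean_time_to_repair(generator_repairs)
```

For these sample values the team would plan for an average outage of six hours if the generator fails during failover.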

Why the other options are incorrect:

A. RPO (Recovery Point Objective):

RPO concerns data loss, defining the maximum acceptable amount of data measured in time that can be lost. The scenario is about a power system failure (a hardware availability issue), not data backup or restoration points.

B. ARO (Annualized Rate of Occurrence):

ARO is a risk assessment metric that estimates how often a specific threat (e.g., generator failure) is expected to happen in a single year. While calculating the ARO for generator failure would be part of a broader risk analysis, the team in the exercise is specifically concerned with the impact (consequence) of the failure if it happens, not its statistical likelihood.

C. BIA (Business Impact Analysis):

A BIA is the overarching process used to identify and evaluate the potential effects of an interruption to critical business operations. The tabletop exercise itself is an activity based on the BIA. The team's specific concern about the generator is a finding that would be fed back into the BIA and risk management process to be quantified (e.g., using MTTR to calculate downtime costs).

Reference:

This question tests the practical application of risk management metrics during a business continuity exercise.

It draws on SY0-701 Objective 5.2 ("Explain elements of the risk management process"), which covers BIA, RPO, ARO, and MTTR, and Objective 3.4 ("Explain the importance of resilience and recovery in security architecture"), which covers tabletop exercises.

MTTR is a key operational metric for any critical system. Understanding the repair time for a failed component (like a generator) is essential for accurately assessing the risk and impact of its failure.

Which of the following is the stage in an investigation when forensic images are obtained?

A. Acquisition

B. Preservation

C. Reporting

D. E-discovery

Explanation:

In digital forensics, the process of creating a forensic image (a bit-for-bit copy) of a storage device is specifically known as the Acquisition stage. This is a critical step where investigators obtain the data from the original evidence in a forensically sound manner to ensure its integrity for analysis.
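One common way to show that an acquired image is a faithful copy is to compare cryptographic hashes of the source and the image. The sketch below is an illustration only: it copies an ordinary temporary file rather than imaging a device, and real acquisitions rely on write blockers and dedicated imaging tools. The function names are assumptions for this example.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1024 * 1024) -> str:
    # Hash the file in chunks so large images do not need to fit in memory.
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def image_matches_source(source: Path, image: Path) -> bool:
    # Identical hashes indicate a bit-for-bit identical copy.
    return sha256_of_file(source) == sha256_of_file(image)


with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "source.bin"
    image = Path(tmp) / "image.bin"
    source.write_bytes(b"evidence" * 1000)  # stand-in for the original media
    shutil.copyfile(source, image)          # stand-in for the imaging step
    verified = image_matches_source(source, image)
    image.write_bytes(b"altered")           # simulate tampering with the copy
    verified_after_change = image_matches_source(source, image)
```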

Why not B?

Preservation: The Preservation stage involves securing the original evidence to prevent tampering, alteration, or damage. This includes documenting the state of the device, bagging it, and storing it securely. While preservation ensures the evidence is intact for acquisition, the actual act of creating the forensic image is acquisition.

Why not C?

Reporting: The Reporting stage occurs after analysis, where findings are compiled into a formal document or report for legal or administrative purposes. This is the final output, not the data gathering step.

Why not D?

E-discovery: E-discovery (electronic discovery) is a legal process focused on identifying, collecting, and producing electronically stored information (ESI) in response to a litigation request. While it may involve acquiring data, it is a broader legal procedure, not the specific forensic term for the technical process of obtaining a forensic image.

Reference:

SY0-701 Objective 4.8: "Explain appropriate incident response activities," which covers digital forensics. The forensic process is commonly described with the following steps:

Identification

Acquisition (Creating a forensic image)

Analysis

Reporting

Which of the following describes the procedures a penetration tester must follow while conducting a test?

A. Rules of engagement

B. Rules of acceptance

C. Rules of understanding

D. Rules of execution

Explanation:

Rules of Engagement (RoE) is the formal document that defines the scope, parameters, and guidelines for a penetration test. It is a critical document agreed upon by the penetration testing team and the client organization before any testing begins. The RoE specifies precisely what is allowed and what is off-limits, including:

Target Systems & Networks: Which IP addresses, domains, and systems can be tested.

Testing Methods: Which techniques are permitted (e.g., social engineering, phishing, denial-of-service simulations).

Timing: The specific dates and times when testing can occur (e.g., only during business hours or only after hours).

Data Handling: How any sensitive data discovered during the test must be handled and destroyed.

Communication & Escalation: Points of contact and procedures for reporting critical findings immediately.
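The target-scope portion of an RoE lends itself to an automated check before any test traffic is sent. The sketch below uses Python's standard `ipaddress` module; `in_scope` is a hypothetical helper, and the addresses come from ranges reserved for documentation, not from any real engagement.

```python
import ipaddress


def in_scope(target: str, allowed_networks, excluded_hosts=()) -> bool:
    """Return True only if the RoE permits testing this address."""
    addr = ipaddress.ip_address(target)
    # Explicit exclusions always win over allowed ranges.
    if any(addr == ipaddress.ip_address(host) for host in excluded_hosts):
        return False
    return any(addr in ipaddress.ip_network(net) for net in allowed_networks)


# Hypothetical scope taken from an RoE document.
allowed = ["192.0.2.0/24"]
excluded = ["192.0.2.50"]  # e.g., a fragile production system

ok = in_scope("192.0.2.10", allowed, excluded)
off_limits = in_scope("192.0.2.50", allowed, excluded)
outside = in_scope("198.51.100.5", allowed, excluded)
```

A tester could run such a check against every target before launching a tool, so that out-of-scope systems are never touched by accident.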

Why the others are incorrect:

B) Rules of acceptance:

This is not a standard term in penetration testing or cybersecurity. It may be confused with "Acceptable Use Policy (AUP)" or "Terms of Acceptance," but it does not describe the procedures for a test.

C) Rules of understanding:

This is not a standard industry term for governing a penetration test. While mutual understanding is crucial, the specific, agreed-upon procedures are formally documented in the Rules of Engagement.

D) Rules of execution:

This is not a standard term. The phase where testing activities are carried out is simply called the "execution phase," but it is governed by the pre-established Rules of Engagement.

Reference:

This aligns with SY0-701 Objective 5.5 ("Explain types and purposes of audits and assessments"), which covers penetration testing; rules of engagement are also listed under Objective 5.3 ("Explain the processes associated with third-party risk assessment and management"). The concept of Rules of Engagement is a foundational element in professional penetration testing frameworks and standards, such as those from NIST (e.g., SP 800-115) and the Penetration Testing Execution Standard (PTES), ensuring tests are conducted legally, safely, and with clear authorization.
