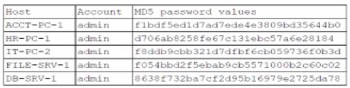

A security administrator recently reset local passwords and the following values were recorded in the system:

Which of the following is the security administrator most likely protecting against?

A. Account sharing

B. Weak password complexity

C. Pass-the-hash attacks

D. Password compromise

Explanation:

The correct answer is C. Pass-the-hash attacks.

A pass-the-hash (PtH) attack is a technique where an attacker steals a hashed user credential (the NTLM or LAN Manager hash in Windows environments) and then uses it to authenticate to a remote system or service without needing to know the plaintext password. The attack exploits the authentication protocol itself, not the strength of the password.

The image shows that after the password was reset, the system recorded a new hash for the user's password and also updated the LMHistory and NTHistory values.

These history values store the hashes of previous passwords. Resetting the local passwords replaces the stored hashes, so the old hashes no longer match the credentials the system checks at logon, and the recorded history lets the system block any attempt to set those old passwords again.

This action directly invalidates any hashes an attacker may have previously captured. Even if an attacker stole the old hash before the reset, it no longer matches the current credential, so it cannot be used in a pass-the-hash attack, and the password history prevents the old password (and its hash) from being restored later.
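The core of the attack can be modeled in a few lines. This is a minimal sketch, not NTLM itself: it assumes SHA-256 as a stand-in for the MD4-based NT hash and HMAC-SHA256 as a stand-in for the NTLM challenge-response computation, and the names `password_hash`, `respond`, and `Server` are hypothetical. It shows that a client only needs the stored hash (not the plaintext) to answer a challenge, and that a password reset makes a previously captured hash useless.

```python
import hashlib
import hmac
import os
from typing import Callable


def password_hash(password: str) -> bytes:
    # Stand-in for the Windows NT hash (real NTLM uses MD4 over UTF-16LE;
    # SHA-256 is used here only as an assumption for portability).
    return hashlib.sha256(password.encode("utf-16-le")).digest()


def respond(credential_hash: bytes, challenge: bytes) -> bytes:
    # The response is keyed on the HASH, so whoever holds the hash can answer.
    return hmac.new(credential_hash, challenge, hashlib.sha256).digest()


class Server:
    def __init__(self, password: str) -> None:
        self.stored_hash = password_hash(password)

    def reset_password(self, new_password: str) -> None:
        # A reset replaces the stored hash; older hashes no longer match.
        self.stored_hash = password_hash(new_password)

    def authenticate(self, client: Callable[[bytes], bytes]) -> bool:
        challenge = os.urandom(16)  # fresh random challenge per logon attempt
        expected = respond(self.stored_hash, challenge)
        return hmac.compare_digest(expected, client(challenge))


server = Server("Example-Old-Passw0rd")  # example value
captured = server.stored_hash  # attacker dumps the hash, never the plaintext

works_before_reset = server.authenticate(lambda c: respond(captured, c))
server.reset_password("Example-New-Passw0rd")
works_after_reset = server.authenticate(lambda c: respond(captured, c))

print(works_before_reset, works_after_reset)  # True False
```

The attacker's client never sees the password, only the hash, which is exactly why the protocol, not password strength, is what pass-the-hash exploits.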

Why the other options are incorrect:

A. Account sharing:

Account sharing is a policy violation where multiple people use the same login credentials. Resetting a password and recording its hash does nothing to prevent multiple individuals from learning and using the new password. Preventing account sharing requires policy enforcement, user training, and technical controls like multi-factor authentication (MFA), not password resets with hash history.

B. Weak password complexity:

Password complexity rules (e.g., requiring uppercase, lowercase, numbers, and symbols) are enforced by password policy settings at the time a new password is created. The act of resetting a password and recording the hash is an action taken after the password has already been created. It does not affect the complexity of the new or old password.

D. Password compromise:

While resetting a password is a standard response to a suspected or confirmed password compromise, the key detail in the question is the recording of the values in the history. A simple password reset would just change the current hash. The specific action of managing the password history hashes is a targeted measure against PtH attacks, not just a general response to a compromised plaintext password.

Reference:

Pass-the-hash is catalogued in the MITRE ATT&CK framework as technique T1550.002, Use Alternate Authentication Material: Pass the Hash. Commonly recommended mitigation strategies include:

Password Reset:

Regularly resetting passwords, especially for privileged accounts.

Password History:

Enforcing password history policies to prevent the reuse of old passwords, so that an old (and possibly captured) password hash cannot become valid again.
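A password-history policy can be sketched as follows, assuming salted PBKDF2-SHA256 storage and a history depth of 5 (both are illustrative choices, not how Windows stores LMHistory/NTHistory). The check rejects any new password that matches a remembered hash, which is what keeps an old, possibly captured hash from becoming valid again. `Account`, `HISTORY_DEPTH`, and `PBKDF2_ITERATIONS` are hypothetical names.

```python
import hashlib
import hmac
import os

HISTORY_DEPTH = 5            # assumed policy: remember the last 5 passwords
PBKDF2_ITERATIONS = 100_000  # assumed work factor


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, PBKDF2_ITERATIONS)


class Account:
    def __init__(self, password: str) -> None:
        # (salt, hash) pairs, oldest first; the last entry is the current password.
        self.history: list[tuple[bytes, bytes]] = []
        self._store(password)

    def _store(self, password: str) -> None:
        salt = os.urandom(16)
        self.history.append((salt, hash_password(password, salt)))
        self.history = self.history[-HISTORY_DEPTH:]  # forget entries beyond the depth

    def in_history(self, password: str) -> bool:
        return any(
            hmac.compare_digest(stored, hash_password(password, salt))
            for salt, stored in self.history
        )

    def change_password(self, new_password: str) -> bool:
        if self.in_history(new_password):
            return False  # reuse blocked by the history policy
        self._store(new_password)
        return True


acct = Account("Example-Old-Passw0rd")
changed = acct.change_password("Example-New-Passw0rd")
reused = acct.change_password("Example-Old-Passw0rd")
print(changed, reused)  # True False
```

Because each entry is salted, the check must rehash the candidate with every stored salt; that cost is intentional and bounded by the history depth.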

A systems administrator receives the following alert from a file integrity monitoring tool:

The hash of the cmd.exe file has changed.

The systems administrator checks the OS logs and notices that no patches were applied in the last two months. Which of the following most likely occurred?

A. The end user changed the file permissions.

B. A cryptographic collision was detected.

C. A snapshot of the file system was taken.

D. A rootkit was deployed.

Explanation:

A File Integrity Monitoring (FIM) tool alerts when a system file has been altered, which is a significant event, especially for a critical system file like cmd.exe.

D. A rootkit was deployed is correct.

A rootkit is a type of malware designed to gain privileged access to a system while actively hiding its presence. A common technique for maintaining persistence and avoiding detection is to replace or modify core system files (like cmd.exe) with malicious versions. The fact that no patches were applied rules out a legitimate change from an OS update, making unauthorized modification by a rootkit the most likely cause of the altered hash.

A. The end user changed the file permissions is incorrect.

Changing file permissions (e.g., read, write, execute) does not alter the actual contents of the file. The FIM tool is alerting that the hash has changed, which means the binary data of the file itself is different. Permissions are metadata, not file content.
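The difference between metadata and content is easy to demonstrate. The sketch below is a minimal FIM-style check that assumes SHA-256 as the hash and uses a temporary file as a hypothetical stand-in for cmd.exe: it records a baseline, changes only the permissions, then changes the bytes.

```python
import hashlib
import os
import stat
import tempfile


def sha256_of(path: str) -> str:
    # Hash the file contents in chunks, as a FIM agent would.
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "monitored.bin")  # hypothetical stand-in for cmd.exe
    with open(path, "wb") as f:
        f.write(b"original binary contents")
    baseline = sha256_of(path)

    os.chmod(path, stat.S_IRUSR)  # permission change: metadata only
    after_chmod = sha256_of(path)

    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)  # restore write access
    with open(path, "ab") as f:
        f.write(b" + injected code")  # simulate tampering with the binary
    after_edit = sha256_of(path)

print(baseline == after_chmod)  # True: permissions are not part of the hash
print(baseline == after_edit)   # False: content changed, so the hash changed
```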

B. A cryptographic collision was detected is incorrect.

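A cryptographic collision occurs when two different inputs produce the same hash output. A collision would therefore hide a change (a modified file producing the same hash as the original), not cause the hash to change, so a changed hash is not evidence of a collision. Collisions are practical to produce with broken algorithms like MD5 but computationally infeasible with modern hashing algorithms (like SHA-256) used by FIM tools. This is an extremely unlikely event and would not be the assumed cause without evidence.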

C. A snapshot of the file system was taken is incorrect.

Taking a snapshot (a point-in-time copy of a filesystem) is a read-only operation. It does not modify the original file or change its hash.

Reference:

CompTIA Security+ SY0-701 Objective 2.4: "Given a scenario, analyze indicators of malicious activity." The alteration of a core OS file is a primary indicator of compromise (IOC), often associated with malware like rootkits that attempt to hide their activity by subverting the operating system.

A company's marketing department collects, modifies, and stores sensitive customer data. The infrastructure team is responsible for securing the data while in transit and at rest. Which of the following data roles describes the customer?

A. Processor

B. Custodian

C. Subject

D. Owner

Explanation:

C) Subject is the correct answer.

In data protection and privacy contexts, the data subject is the individual whose personal data is being collected, processed, or stored. In this scenario, the customer is the data subject because their sensitive data is being handled by the company.

Why the others are incorrect:

A) Processor:

A data processor is an entity that processes data on behalf of the data controller. Here, the marketing department (or the company) acts as the processor or controller, not the customer.

B) Custodian:

The data custodian (or steward) is responsible for the technical handling and security of the data. In this scenario that role belongs to the infrastructure team, which secures the data in transit and at rest, not to the customer.

D) Owner:

The data owner is typically the entity (or individual) that determines the purposes and means of processing the data. In this case, the company (or its marketing department) is the data owner, not the customer.

Reference:

This question tests knowledge of data roles, covered in SY0-701 Objective 5.1 (Summarize elements of effective security governance), and of the data subject concept from privacy regulations such as GDPR, covered in Objective 5.4 (Summarize elements of effective security compliance). Understanding the distinctions between data subject, owner, controller, processor, and custodian is essential for compliance and security responsibilities.

An administrator has identified and fingerprinted specific files that will generate an alert if an attempt is made to email these files outside of the organization. Which of the following best describes the tool the administrator is using?

A. DLP

B. SNMP traps

C. SCAP

D. IPS

Explanation:

The scenario describes a system that monitors for the transmission of specific, sensitive files (identified via fingerprinting) and generates an alert if anyone attempts to email those files outside the organization. This is a classic function of Data Loss Prevention (DLP) software.

A. DLP (Data Loss Prevention) (Correct):

DLP tools are designed to detect and prevent the unauthorized exfiltration or transmission of sensitive data. They work by:

Fingerprinting Files:

Creating a unique digital signature (a "fingerprint") for sensitive files.

Content Awareness:

Inspecting content in motion (e.g., network traffic like email), at rest (e.g., on file servers), and in use (e.g., on endpoints).

Policy Enforcement:

Generating alerts, blocking emails, or taking other actions based on predefined policies (e.g., "block any outbound email containing a file that matches the fingerprint of our proprietary design documents").
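The fingerprint-and-match step can be sketched with exact SHA-256 fingerprints. This is a simplification: commercial DLP products typically use partial or similarity-based fingerprints so that excerpts and edited copies still match, whereas this sketch only catches byte-identical files. `PROTECTED`, `check_outbound`, and the `example.com` internal domain are hypothetical.

```python
import hashlib

INTERNAL_DOMAIN = "example.com"  # hypothetical organization domain


def fingerprint(data: bytes) -> str:
    # Exact-match fingerprint: any change to the file produces a different value.
    return hashlib.sha256(data).hexdigest()


# Hypothetical registry built when the administrator fingerprints sensitive files.
PROTECTED = {
    fingerprint(b"Proprietary design document v3"): "design-doc-v3",
}


def check_outbound(recipient: str, attachments: list[bytes]) -> list[str]:
    """Return the names of protected files attached to an email leaving the organization."""
    if recipient.lower().endswith("@" + INTERNAL_DOMAIN):
        return []  # internal mail is outside this policy's scope
    return [PROTECTED[fp] for fp in map(fingerprint, attachments) if fp in PROTECTED]


alerts = check_outbound("partner@external.example", [b"Proprietary design document v3", b"Lunch menu"])
internal = check_outbound("colleague@example.com", [b"Proprietary design document v3"])
print(alerts, internal)  # ['design-doc-v3'] []
```

The sketch only reports matches; a real policy could equally block or quarantine the message, per the policy-enforcement point above.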

Why the other options are incorrect:

B. SNMP traps (Incorrect):

SNMP (Simple Network Management Protocol) traps are messages sent from network devices (like routers or switches) to a central monitoring system to alert about specific events or conditions (e.g., a port going down, high CPU usage). They are used for network performance and fault monitoring, not for inspecting email content for sensitive data.

C. SCAP (Incorrect):

SCAP (Security Content Automation Protocol) is a suite of standards used to automate vulnerability management, measurement, and policy compliance (e.g., checking if systems are configured according to a security baseline like DISA STIGs). It is not used for monitoring outbound email traffic for specific files.

D. IPS (Incorrect):

An Intrusion Prevention System (IPS) is a network security tool that monitors network traffic for signatures of known attacks or patterns of malicious behavior (e.g., exploit attempts, malware communication). Its primary focus is on stopping threats coming into the network or moving laterally, not on preventing sensitive data from leaving the network. While some next-generation IPS systems may have DLP features, the core function described is the specialty of a dedicated DLP solution.

Reference:

This question falls under Domain 2.0: Threats, Vulnerabilities, and Mitigations and Domain 4.0: Security Operations. It specifically tests knowledge of data security controls, with DLP being the primary technology for preventing data exfiltration.

Which of the following describes the understanding between a company and a client about what will be provided and the accepted time needed to provide the company with the resources?

A. SLA

B. MOU

C. MOA

D. BPA

Explanation:

A Service Level Agreement (SLA) is a formal agreement between a service provider and a client that defines the expected level of service, including what resources will be provided and the agreed-upon time frames for providing them. It typically includes performance metrics, uptime guarantees, and response times.

Why the other options are incorrect:

MOU (Memorandum of Understanding) and MOA (Memorandum of Agreement) document the intentions and responsibilities of the parties, but they are generally less specific and do not usually define measurable service levels or delivery times. BPA (Business Partners Agreement) focuses on the broader, long-term relationship between business partners rather than on specific service levels.
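Uptime guarantees, one of the typical SLA metrics, translate directly into a downtime budget. A minimal sketch of the arithmetic, assuming a 30-day measurement period (actual SLAs define their own period and exclusions); the function names are hypothetical:

```python
def allowed_downtime_minutes(uptime_pct: float, period_days: int = 30) -> float:
    """Maximum downtime permitted by an uptime guarantee over one period."""
    total_minutes = period_days * 24 * 60
    return round(total_minutes * (100 - uptime_pct) / 100, 2)


def meets_sla(observed_downtime_minutes: float, uptime_pct: float, period_days: int = 30) -> bool:
    return observed_downtime_minutes <= allowed_downtime_minutes(uptime_pct, period_days)


print(allowed_downtime_minutes(99.9))   # 43.2
print(allowed_downtime_minutes(99.99))  # 4.32
print(meets_sla(50, 99.9))              # False
```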

Which of the following control types is AUP an example of?

A. Physical

B. Managerial

C. Technical

D. Operational

Explanation:

An Acceptable Use Policy (AUP) is a managerial control. Managerial controls are high-level policies, plans, and guidelines that form the foundation of an organization's security program. They are focused on governance and defining rules for behavior. The AUP is a policy document that outlines the rules and guidelines for the appropriate use of an organization's IT resources, making it a classic example of a managerial control.

Why the other options are incorrect:

A. Physical:

Physical controls are tangible measures to protect facilities and hardware (e.g., locks, security guards, fences). An AUP is a document, not a physical barrier.

C. Technical:

Technical controls (also called logical controls) are implemented through technology (e.g., firewalls, encryption, access control lists). While technical controls may enforce aspects of the AUP (e.g., blocking unauthorized websites), the AUP itself is a policy, not a technical tool.

D. Operational:

Operational controls are day-to-day procedures and practices executed by people (e.g., user access reviews, backup procedures, incident response). The AUP sets the rules, but the operational controls are the actions taken to implement and enforce those rules.

Reference:

This question tests the understanding of security control types and their examples.

This falls under Objective 1.1: Compare and contrast various types of security controls, of the CompTIA Security+ SY0-701 exam objectives.

The classification of controls into managerial, operational, and technical (with physical as a subset) is a standard model in frameworks like NIST SP 800-53 (Security and Privacy Controls for Information Systems and Organizations). Policies like the AUP are clearly defined as managerial controls that guide the implementation of operational and technical controls.

Which of the following describes the category of data that is most impacted when it is lost?

A. Confidential

B. Public

C. Private

D. Critical

Explanation:

Critical data refers to information that is essential for the immediate operation and survival of an organization. The loss of critical data (e.g., core financial records, key operational data, intellectual property) would have the most severe impact, potentially causing significant financial harm, operational disruption, or even business failure. This category is defined by the impact of loss rather than just sensitivity or confidentiality.

Why the other options are incorrect:

A. Confidential:

Confidential data requires protection from unauthorized access (e.g., trade secrets, PII), but its loss might not always be immediately catastrophic if backups exist. The impact depends on the context—some confidential data may also be critical, but "critical" specifically denotes the highest level of operational impact.

B. Public:

Public data is intentionally made available to everyone (e.g., marketing materials). Its loss would have minimal to no impact, as it can easily be reproduced or redistributed.

C. Private:

This is often used interchangeably with "confidential" (e.g., private data = PII). Like confidential data, it is sensitive but may not always be critical to immediate operations.

Reference:

This question tests understanding of data classification based on impact.

This falls under Objective 3.3: Compare and contrast concepts and strategies to protect data, which lists data classifications including sensitive, confidential, public, restricted, private, and critical, in the SY0-701 exam objectives.

Data classification schemes (e.g., NIST FIPS 199) define categories based on the impact of loss (e.g., low, moderate, high). "Critical" aligns with high-impact data where loss could result in severe operational, financial, or reputational damage. Organizations prioritize protecting critical data above all else.
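FIPS 199 rates the potential impact of a loss of confidentiality, integrity, and availability as low, moderate, or high, and the overall level is commonly taken as the highest of the three (the "high-water mark"). A minimal sketch, with hypothetical example ratings and illustrative names:

```python
from enum import IntEnum


class Impact(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


def overall_impact(confidentiality: Impact, integrity: Impact, availability: Impact) -> Impact:
    # High-water mark: the most severe of the three impact ratings wins.
    return max(confidentiality, integrity, availability)


# Hypothetical ratings for two kinds of data.
marketing_brochure = overall_impact(Impact.LOW, Impact.LOW, Impact.LOW)
core_financial_records = overall_impact(Impact.MODERATE, Impact.HIGH, Impact.HIGH)

print(marketing_brochure.name, core_financial_records.name)  # LOW HIGH
```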

Which of the following can a security director use to prioritize vulnerability patching within a company's IT environment?

A. SOAR

B. CVSS

C. SIEM

D. CVE

Explanation:

Why B is Correct:

The Common Vulnerability Scoring System (CVSS) provides a standardized method for rating the severity of security vulnerabilities. It generates a numerical score (from 0.0 to 10.0) that reflects the characteristics and potential impact of a vulnerability (e.g., exploitability, impact on confidentiality, integrity, and availability). A security director can use these scores to prioritize patching efforts, focusing resources on remediating the most severe vulnerabilities (those with the highest CVSS scores) first. This is a fundamental practice in vulnerability management.
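Prioritizing by score is essentially a sort. The sketch below orders findings by CVSS base score and labels them with the CVSS v3.x qualitative severity bands (None 0.0, Low 0.1-3.9, Medium 4.0-6.9, High 7.0-8.9, Critical 9.0-10.0). The findings and `CVE-EXAMPLE-*` identifiers are hypothetical, and real programs often also weigh asset criticality and exploit availability.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cve_id: str
    host: str
    cvss: float  # CVSS base score, 0.0-10.0


def severity(score: float) -> str:
    """Map a CVSS v3.x score to its qualitative severity rating."""
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def patch_order(findings: list[Finding]) -> list[Finding]:
    # Highest score first; ties keep their original order (sorted() is stable).
    return sorted(findings, key=lambda f: f.cvss, reverse=True)


findings = [
    Finding("CVE-EXAMPLE-1", "web01", 5.3),
    Finding("CVE-EXAMPLE-2", "db01", 9.8),
    Finding("CVE-EXAMPLE-3", "hr-laptop", 7.5),
]
for f in patch_order(findings):
    print(f.cve_id, f.host, f.cvss, severity(f.cvss))
```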

Why A is Incorrect:

SOAR (Security Orchestration, Automation, and Response) is a platform that helps automate and streamline security operations workflows (e.g., incident response, vulnerability management). While a SOAR platform can automate the prioritization process by ingesting CVSS scores and other data, the platform itself is not the scoring framework used to determine priority. It uses tools like CVSS to make decisions.

Why C is Incorrect:

A SIEM (Security Information and Event Management) system aggregates and correlates log data to identify potential security incidents. It is crucial for detection and monitoring but is not a scoring system used to assign a severity rating to a software vulnerability for patching prioritization.

Why D is Incorrect:

CVE (Common Vulnerabilities and Exposures) is a list of common identifiers for publicly known cybersecurity vulnerabilities. A CVE entry gives a vulnerability a standard name and number (e.g., CVE-2024-12345) but does not provide a severity score. The CVSS score is often provided alongside a CVE ID to quantify its severity.

Reference:

This question falls under Domain 4.0: Operations and Incident Response, specifically covering vulnerability management processes. Understanding the roles of CVE (identification) and CVSS (scoring/prioritization) is essential for the SY0-701 exam.

Which of the following should a systems administrator use to ensure an easy deployment of resources within the cloud provider?

A. Software as a service

B. Infrastructure as code

C. Internet of Things

D. Software-defined networking

Explanation:

B) Infrastructure as code (IaC) is the correct answer.

IaC is a key practice in cloud computing that allows systems administrators to define and manage infrastructure (e.g., virtual machines, networks, storage) using machine-readable configuration files (e.g., YAML, JSON) rather than manual processes. This enables:

Easy deployment:

Resources can be provisioned consistently and rapidly through automated scripts.

Version control:

Infrastructure configurations can be tracked, reviewed, and rolled back like code.

Reproducibility:

Environments (e.g., development, testing, production) can be replicated exactly.

Scalability:

Changes can be applied across the infrastructure with minimal effort.
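The declarative idea behind IaC tools can be sketched as "compare desired state with actual state, then act on the difference." This is a hypothetical, tool-agnostic model: the resource names and schema are invented, and real tools such as Terraform also track dependencies and call provider APIs. It shows why re-running the same definition is repeatable: once the environment matches, the plan is empty.

```python
# Desired state, as it might be declared in a version-controlled file (hypothetical schema).
DESIRED: dict[str, dict] = {
    "vm-web-01": {"type": "vm", "size": "small"},
    "vm-web-02": {"type": "vm", "size": "small"},
    "bucket-logs": {"type": "storage", "versioning": True},
}


def plan(desired: dict[str, dict], actual: dict[str, dict]) -> list[tuple[str, str]]:
    """List the actions needed to make the actual environment match the definition."""
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))  # drift from the declared configuration
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))  # resource no longer declared
    return actions


actual = {
    "vm-web-01": {"type": "vm", "size": "large"},
    "vm-old": {"type": "vm", "size": "small"},
}
print(plan(DESIRED, actual))
print(plan(DESIRED, DESIRED))  # [] once the environment matches
```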

Why the others are incorrect:

A) Software as a service (SaaS):

This is a cloud service model where applications are hosted and managed by a third party (e.g., email, CRM). It does not help in deploying or managing underlying infrastructure resources.

C) Internet of Things (IoT):

Refers to interconnected devices collecting and exchanging data. It is unrelated to cloud resource deployment.

D) Software-defined networking (SDN):

This is an approach to network management that simplifies configuration and operation through programmability. While useful in cloud environments, it is specific to networking and not the broader scope of resource deployment (e.g., compute, storage).

Reference:

This question tests knowledge of SY0-701 Objective 3.1: Compare and contrast security implications of different architecture models, which includes infrastructure as code (IaC). IaC is a fundamental DevOps practice for automating cloud deployments, and tools like Terraform, AWS CloudFormation, or Azure Resource Manager exemplify it.

A security analyst needs to propose a remediation plan for each item in a risk register. The item with the highest priority requires employees to have separate logins for SaaS solutions and different password complexity requirements for each solution. Which of the following implementation plans will most likely resolve this security issue?

A. Creating a unified password complexity standard

B. Integrating each SaaS solution with the Identity provider

C. Securing access to each SaaS by using a single wildcard certificate

D. Configuring geofencing on each SaaS solution

Explanation:

The core security issue described is password fatigue and weak password management caused by employees managing multiple, separate sets of credentials (usernames and passwords) with different complexity rules for each SaaS application. This leads to users writing down passwords, reusing them, or creating weak ones to meet varying requirements.

Integrating each SaaS solution with the Identity Provider (IdP) via a standard like SAML (Security Assertion Markup Language) or OpenID Connect (OIDC) resolves this. This implementation of Single Sign-On (SSO) allows employees to use a single, strong set of corporate credentials (enforced by a unified complexity policy) to access all integrated applications. The identity provider handles authentication, eliminating the need for separate logins and passwords for each SaaS solution.
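The trust relationship can be sketched in miniature: the IdP authenticates the user once and issues a signed, short-lived assertion, and each SaaS application verifies the signature instead of storing its own password. This uses a simplified, JWT-like format with an HMAC shared key, an assumption made for brevity; real SAML assertions use XML signatures, and OIDC ID tokens are commonly signed with the IdP's private key. All names are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Assumption: a symmetric key shared by IdP and SaaS; production IdPs usually sign asymmetrically.
SIGNING_KEY = b"demo-key-shared-by-idp-and-saas"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(payload: str) -> str:
    return _b64(hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).digest())


def idp_issue_token(user: str, audience: str, ttl_seconds: int = 300) -> str:
    """IdP side: called after the user has authenticated with corporate credentials."""
    claims = {"sub": user, "aud": audience, "exp": int(time.time()) + ttl_seconds}
    payload = _b64(json.dumps(claims).encode())
    return f"{payload}.{_sign(payload)}"


def saas_verify(token: str, audience: str) -> Optional[str]:
    """SaaS side: accept the user only if the IdP's signature and claims check out."""
    payload, _, signature = token.partition(".")
    if not hmac.compare_digest(signature.encode(), _sign(payload).encode()):
        return None  # forged or tampered token
    claims = json.loads(_unb64(payload))
    if claims["aud"] != audience or claims["exp"] < time.time():
        return None  # token meant for another app, or expired
    return claims["sub"]


token = idp_issue_token("alice", audience="crm")
print(saas_verify(token, "crm"))        # alice
print(saas_verify(token, "hr-portal"))  # None
```

The SaaS application never handles the user's password, so there is only one credential, and one complexity policy, to manage.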

Why the others are incorrect:

A) Creating a unified password complexity standard:

While this is a good practice, it does not solve the problem of having separate logins. Employees would still have to create and remember a unique, complex password for every single SaaS application, which is the root cause of the issue.

C) Securing access to each SaaS by using a single wildcard certificate:

Certificates are used for encrypting traffic (TLS/SSL) and authenticating servers, not users. A wildcard certificate might secure the connections to various subdomains but does nothing to simplify or secure user authentication and password management.

D) Configuring geofencing on each SaaS solution:

Geofencing restricts access based on geographic location. It is a useful access control for preventing unauthorized logins from strange locations, but it does not address the fundamental problem of credential management and multiple passwords.

Reference:

This aligns with SY0-701 Objective 4.6 ("Given a scenario, implement and maintain identity and access management"). The solution describes implementing federated identity management, a key concept where an identity provider (like Microsoft Entra ID, formerly Azure AD, or Okta) is used to manage authentication across multiple systems, greatly improving security and user experience.

An employee in the accounting department receives an email containing a demand for payment for services performed by a vendor. However, the vendor is not in the vendor management database. Which of the following is this scenario an example of?

A. Pretexting

B. Impersonation

C. Ransomware

D. Invoice scam

Explanation:

Why D is Correct:

An invoice scam is a specific type of business email compromise (BEC) where a threat actor sends a fraudulent invoice for goods or services that were never rendered, hoping that an employee will process the payment without proper verification. The key indicators in this scenario are:

The email contains a demand for payment.

The vendor is unknown and "not in the vendor management database."

This is a classic social engineering attack that preys on the routine processes of accounting departments, relying on haste or a lack of rigorous verification to succeed.
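The missing control in this scenario is a verification step before payment. A minimal sketch of such a gate, assuming a hypothetical in-memory extract of the vendor management database (`APPROVED_VENDORS`) and hypothetical vendor IDs:

```python
from dataclasses import dataclass

# Hypothetical extract of the vendor management database: vendor ID -> registered name.
APPROVED_VENDORS = {"V-1001": "Acme Office Supply", "V-1002": "Northwind Logistics"}


@dataclass
class Invoice:
    vendor_id: str
    vendor_name: str
    amount: float


def review_invoice(invoice: Invoice) -> str:
    if invoice.vendor_id not in APPROVED_VENDORS:
        # Unknown vendor: the red flag in this question. Verify through a known,
        # independent contact channel, not the details in the email itself.
        return "HOLD: unknown vendor"
    if APPROVED_VENDORS[invoice.vendor_id] != invoice.vendor_name:
        return "HOLD: vendor details do not match records"
    return "OK: route for normal approval"


print(review_invoice(Invoice("V-9999", "Prompt Services LLC", 8450.00)))  # HOLD: unknown vendor
```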

Why A is Incorrect:

Pretexting is a broader social engineering technique where an attacker creates a fabricated scenario (a pretext) to steal information. For example, an attacker might pretend to be from IT support to trick an employee into revealing their password. While an invoice scam might use a pretext (the false pretext of being a legitimate vendor), the specific attack described is more precisely defined as an invoice scam.

Why B is Incorrect:

Impersonation is a technique used within many types of attacks, including this one. The attacker is impersonating a vendor. However, "impersonation" is too general a term. It describes the method of the attack (spoofing an identity), but not the specific goal or type of attack, which is financial fraud via a fake invoice.

Why C is Incorrect:

Ransomware is a type of malware that encrypts files and demands a ransom payment to decrypt them. This scenario describes a phishing email with a fraudulent payment demand, not a malware infection that holds data hostage. There is no indication of system encryption or a ransom note.

Reference:

This question falls under Domain 2.0: Threats, Vulnerabilities, and Mitigations. It tests the ability to identify different types of social engineering attacks and business email compromise (BEC), specifically invoice scams, which are a common threat vector targeting financial departments.

Which of the following risk management strategies should an enterprise adopt first if a legacy application is critical to business operations and there are preventative controls that are not yet implemented?

A. Mitigate

B. Accept

C. Transfer

D. Avoid

Explanation:

The correct answer is A. Mitigate.

The scenario describes a legacy application that is critical to business operations but lacks preventative controls. This presents a clear risk.

Mitigation is the risk management strategy that involves implementing controls to reduce either the likelihood or the impact of a risk.

The question explicitly states that there are preventative controls "not yet implemented." The most logical and proactive strategy is to implement those controls to reduce the vulnerability of the critical system. This directly addresses the risk by strengthening the system's defenses.

Mitigation is often the preferred strategy for critical systems because it actively reduces the risk to an acceptable level, allowing the business to continue using the essential application without undue danger.
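The effect of mitigation can be shown with a simple qualitative risk calculation. The 1-5 likelihood and impact scales, the example ratings, and the risk-appetite threshold below are all assumptions chosen for illustration: implementing the missing preventive controls lowers likelihood, while the impact stays high because the application remains business-critical.

```python
RISK_APPETITE = 10  # hypothetical maximum acceptable score on a 1-25 scale


def risk_score(likelihood: int, impact: int) -> int:
    """Qualitative risk score: likelihood x impact, each rated 1 (lowest) to 5 (highest)."""
    if not (1 <= likelihood <= 5 and 1 <= impact <= 5):
        raise ValueError("ratings must be between 1 and 5")
    return likelihood * impact


inherent = risk_score(likelihood=4, impact=5)  # legacy app, preventive controls missing
residual = risk_score(likelihood=2, impact=5)  # after implementing the preventive controls

print(inherent, residual, residual <= RISK_APPETITE)  # 20 10 True
```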

Why the other options are incorrect:

B. Accept:

Acceptance means understanding the risk and consciously deciding to take no action because the cost of addressing the risk outweighs the potential loss. For a critical application with known, unimplemented controls, simply accepting the risk would be negligent. The business would be knowingly leaving a vital asset unprotected.

C. Transfer:

Transfer shifts the financial burden of a risk to a third party, typically through insurance or outsourcing. While you could purchase cyber insurance (transfer), it does not reduce the technical vulnerability of the application itself. The system would still be unprotected, and a successful attack would disrupt critical operations even if insurance covered the financial cost. Insurance is a form of financial mitigation for impact, but the question focuses on the lack of preventative controls, making technical mitigation the primary and most direct strategy.

D. Avoid:

Avoidance involves eliminating the risk entirely by discontinuing the risky activity. For a critical legacy application, avoidance is likely not a viable option. Avoiding the risk would mean shutting down the application, which would directly halt business operations. This strategy is used for risks with extreme consequences, but it is not practical when the system is essential to the business.

Reference:

This question tests the understanding of the four core risk response strategies, a fundamental concept in risk management covered in the CompTIA Security+ SY0-701 objectives under Objective 5.2: Explain elements of the risk management process.

The decision of which strategy to use is based on factors like criticality of the asset and feasibility of controls. For a critical asset with available controls that are not yet implemented, mitigation is the most responsible and effective first course of action.
