A leading healthcare provider must improve its network infrastructure to secure sensitive patient data. You are evaluating a Next-Generation Firewall (NGFW), which will play a key role in protecting the network from attack. What feature of a Next-Generation Firewall (NGFW) will help protect sensitive patient data in the healthcare organization's network?

A. High Availability (HA) modes

B. Bandwidth management

C. Application-level inspection

D. Virtual Private Network (VPN) support

Explanation

Application-level inspection (often called deep packet inspection) is a core feature of a Next-Generation Firewall (NGFW) that goes beyond traditional firewalls. It allows the firewall to:

Identify Applications:

It can discern the specific application generating network traffic (e.g., Facebook, Salesforce, a custom medical application), regardless of the port or protocol being used. A traditional firewall would only see the port (e.g., TCP 443) and allow the traffic.

Enforce Granular Policies:

Based on this identification, the NGFW can create and enforce highly specific security policies. For example, it could:

Block unauthorized file-sharing applications that could exfiltrate patient data.

Allow electronic health record (EHR) applications but block any transfer of data to unauthorized cloud storage apps.

Scan the content of allowed traffic for sensitive data patterns (like Social Security numbers or patient IDs) to prevent data loss.

This granular control over what applications can do on the network is the key feature that directly protects the confidentiality and integrity of sensitive patient data.
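The difference between a port-only rule and application-level inspection can be sketched in a few lines of Python. This is a toy model, not how any real NGFW works internally. It assumes the application has already been identified; real products do that with signatures, protocol decoding, and TLS metadata. The application names, the `ALLOWED_APPS` set, and the simplified SSN regex are hypothetical placeholders.

```python
import re

# Hypothetical application names; real NGFWs ship their own application signatures.
ALLOWED_APPS = {"ehr-client"}

# Simplified DLP pattern for US Social Security numbers (NNN-NN-NNNN).
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def port_only_decision(port: int) -> str:
    """A traditional rule sees only the port, so any app on TCP 443 passes."""
    return "allow" if port in (80, 443) else "deny"


def ngfw_decision(app: str, payload: str) -> str:
    """Allow only identified, approved apps, then scan their content."""
    if app not in ALLOWED_APPS:
        return "deny"  # Default deny: file sharing, personal cloud storage, etc.
    if SSN_PATTERN.search(payload):
        return "deny"  # Possible patient data leaving the network.
    return "allow"


# The same HTTPS flow, judged two ways.
print(port_only_decision(443))                          # allow
print(ngfw_decision("p2p-filesharing", "records.zip"))  # deny
print(ngfw_decision("ehr-client", "SSN 123-45-6789"))   # deny
```

The allowlist is a design choice. Denying every application that is not explicitly approved covers file-sharing tools the policy author never thought to list.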

Why the Other Options Are Incorrect

A. High Availability (HA) modes:

HA is a feature that provides fault tolerance by allowing a secondary firewall to take over if the primary one fails. This is crucial for availability and business continuity but does not directly protect the confidentiality of sensitive data from attacks or exfiltration.

B. Bandwidth management:

This feature (often called Quality of Service or QoS) prioritizes certain types of traffic to ensure performance. For example, it could prioritize video conferencing for telehealth. While important for performance, it does not directly inspect or secure the data itself from threats.

D. Virtual Private Network (VPN) support:

VPNs create encrypted tunnels for secure remote access. This protects data in transit from eavesdropping, which is important for remote workers. However, it is an access control and transmission security feature. It does not inspect the content of the traffic once it's inside the network to prevent data exfiltration or enforce application-level policies, which is the primary defensive role of the NGFW at the network perimeter.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

3.2 Given a scenario, apply security principles to secure enterprise infrastructure.

2.5 Explain the purpose of mitigation techniques used to secure the enterprise.

You are the security analyst overseeing a Security Information and Event Management (SIEM) system deployment. The CISO has concerns about negatively impacting the system resources on individual computer systems. Which would minimize the resource usage on individual computer systems while maintaining effective data collection?

A. Deploying additional SIEM systems to distribute the data collection load

B. Using a sensor-based collection method on the computer systems

C. Implementing an agentless collection method on the computer systems

D. Running regular vulnerability scans on the computer systems to optimize their performance

Explanation

An agentless collection method retrieves log and event data from systems without requiring a permanent software agent (a small program) to be installed and running on each endpoint.

How it works:

The SIEM server either pulls data from target systems over standard protocols (such as WMI or SNMP) or receives data that the systems are configured to send with built-in services (such as Syslog).

Minimizing Resource Usage:

Because no additional software runs constantly on the endpoint, this method generally uses less CPU, memory, and disk I/O on the individual computer systems. The resource burden shifts to the SIEM server itself, which is built to handle the processing load.

This approach directly addresses the CISO's concern about impacting system resources while still allowing for effective centralized data collection.
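As a rough illustration of push-based agentless collection, the Python sketch below receives one syslog message over UDP and decodes its priority field. It is a toy under stated assumptions, not a SIEM component. Port 5514 is an arbitrary unprivileged choice (standard syslog uses UDP 514), and the endpoint is assumed to forward logs with its built-in syslog service.

```python
import socket


def parse_syslog_priority(message: str) -> tuple[int, int, str]:
    """Split '<PRI>rest' into (facility, severity, rest).

    Syslog encodes PRI as facility * 8 + severity (RFC 3164 and RFC 5424).
    """
    if not message.startswith("<") or ">" not in message:
        raise ValueError("missing PRI field")
    end = message.index(">")
    pri = int(message[1:end])
    return pri // 8, pri % 8, message[end + 1:]


def collect_once(host: str = "0.0.0.0", port: int = 5514) -> tuple[int, int, str]:
    """Wait for one datagram pushed by an endpoint; nothing is installed there."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        data, _sender = sock.recvfrom(8192)
    return parse_syslog_priority(data.decode("utf-8", errors="replace"))


# Based on the RFC 3164 example: PRI 34 is facility 4 (auth), severity 2 (critical).
print(parse_syslog_priority("<34>Oct 11 22:14:15 mymachine su: 'su root' failed"))
```

All the parsing work happens on the collector. The endpoint only runs the logging service it already had.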

Why the Other Options Are Incorrect

A. Deploying additional SIEM systems to distribute the data collection load:

This would increase the complexity and cost of the deployment but would not reduce the resource usage on the individual endpoints. Each endpoint would simply report to one of several SIEM instances; collecting the data on the endpoint (e.g., via an agent) would still consume local resources.

B. Using a sensor-based collection method on the computer systems:

A "sensor" typically refers to a software agent installed on a host. This agent constantly monitors the system for events, collects data, and forwards it to the SIEM. This method increases resource usage on the endpoint (CPU to process events, memory to run, network to transmit), which is the opposite of what the CISO wants.

D. Running regular vulnerability scans on the computer systems to optimize their performance:

Vulnerability scans are a separate security activity. They proactively assess systems for known weaknesses. While a well-tuned system might perform better, running scans actually consumes significant system resources (CPU, network) during the scan window and does not optimize the ongoing, constant resource consumption of SIEM data collection.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

4.4 Explain security alerting and monitoring concepts and tools.

This includes understanding the operation and data sources of a SIEM system, including the methods of log collection (agent vs. agentless).

A client asked a security company to provide a document outlining the project, the cost, and the completion time frame. Which of the following documents should the company provide to the client?

A. MSA

B. SLA

C. BPA

D. SOW

Explanation

A Statement of Work (SOW) is a formal document that captures and defines all aspects of a project. It clearly outlines the work to be performed (the project), the timeline (completion time frame), deliverables, costs, and any other specific requirements.

The client's request for a document that details the "project, the cost, and the completion time frame" is a direct description of the core components of an SOW.

Why the Other Options Are Incorrect

A. MSA (Master Service Agreement):

An MSA is an overarching agreement that establishes the general terms and conditions (e.g., confidentiality, intellectual property rights, liability) under which future projects or services will be performed. It does not include specific details about a particular project's scope, cost, or timeline. An SOW is often created as an addendum to an MSA to define a specific project.

B. SLA (Service Level Agreement):

An SLA is an agreement that specifically defines the level of service a client can expect, focusing on measurable metrics like uptime, performance, and response times. For example, an SLA might guarantee "99.9% network availability." It does not outline a project's scope, cost, or overall timeline.

C. BPA (Business Partners Agreement):

A BPA defines how two businesses will operate as partners, for example how they share resources, responsibilities, and profits, or refer clients. It is not the document used to propose the specifics of a single project to a client.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

5.3 Explain the processes associated with third-party risk assessment and management.

This includes understanding the purpose and components of various agreements like an SOW, MSA, and SLA in the context of third-party and vendor relationships.

Which of the following vulnerabilities is exploited when an attacker overwrites a register with a malicious address?

A. VM escape

B. SQL injection

C. Buffer overflow

D. Race condition

Explanation

A buffer overflow vulnerability occurs when a program writes more data to a fixed-length block of memory (a buffer) than it can hold. The excess data overflows into adjacent memory locations.

Exploitation:

A skilled attacker can carefully craft this excess data to overwrite specific adjacent memory, such as the saved return address in the function's stack frame. That value is loaded into the instruction pointer register (EIP on x86, RIP on x86-64) when the function returns. Instead of pointing back to the legitimate calling code, the attacker sets this address to point to their own malicious code, which they often insert into the overflowed buffer itself.

Result:

When the function finishes and tries to return, it jumps to the malicious address the attacker provided, executing their code and granting them control of the application or system.

This process of overwriting a saved address that ends up in a CPU register (the instruction pointer) to redirect program flow is the classic exploitation of a buffer overflow vulnerability.
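Real exploitation depends on the CPU architecture, the compiler, and protections such as stack canaries and ASLR. The sketch below is therefore only a conceptual model in Python. A `bytearray` stands in for a stack frame holding an 8-byte buffer followed by an 8-byte saved return address. The frame layout and both addresses are hypothetical. The model contrasts an unchecked copy (like C's `strcpy`) with a bounded copy.

```python
import struct

BUF_SIZE = 8           # Size of the local buffer in the toy frame.
RET_OFFSET = BUF_SIZE  # The saved return address sits right after the buffer.


def make_frame(return_address: int) -> bytearray:
    """Toy frame: [8-byte buffer][8-byte little-endian saved return address]."""
    return bytearray(BUF_SIZE) + struct.pack("<Q", return_address)


def unchecked_copy(frame: bytearray, data: bytes) -> None:
    """Like strcpy: writes all of data, even past the end of the buffer."""
    frame[0:len(data)] = data


def bounded_copy(frame: bytearray, data: bytes) -> None:
    """Length-checked copy: never writes more than BUF_SIZE bytes."""
    n = min(len(data), BUF_SIZE)
    frame[0:n] = data[:n]


def saved_return_address(frame: bytearray) -> int:
    """The value a real CPU would load into the instruction pointer on return."""
    return struct.unpack_from("<Q", frame, RET_OFFSET)[0]


LEGIT = 0x401000   # Hypothetical address of the calling code.
EVIL = 0xDEADBEEF  # Hypothetical address of attacker-controlled code.
payload = b"A" * BUF_SIZE + struct.pack("<Q", EVIL)

frame = make_frame(LEGIT)
unchecked_copy(frame, payload)
print(hex(saved_return_address(frame)))  # 0xdeadbeef

frame = make_frame(LEGIT)
bounded_copy(frame, payload)
print(hex(saved_return_address(frame)))  # 0x401000
```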

Why the Other Options Are Incorrect

A. VM escape:

This is a vulnerability in a virtualized environment where an attacker breaks out of a virtual machine's isolation to interact with and attack the host operating system or other VMs on the same host. A VM escape may itself be achieved through a memory-corruption bug in the hypervisor, but the term describes the outcome (breaking isolation), not the technique of overwriting a register with a malicious address.

B. SQL injection:

This vulnerability exploits improper input validation in web applications. It allows an attacker to inject malicious SQL code into a query, which is then executed by the database. It manipulates database commands, not CPU registers or memory addresses.

D. Race condition:

This is a vulnerability where the outcome of a process depends on the sequence or timing of uncontrollable events. Exploiting a race condition involves manipulating the timing between two operations (e.g., between a privilege check and a file access), not overwriting memory addresses directly.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

2.3 Explain various types of vulnerabilities.

Application Vulnerabilities: Buffer overflows are a core application vulnerability listed in the objectives.

A company is concerned about weather events causing damage to the server room and downtime. Which of the following should the company consider?

A. Clustering servers

B. Geographic dispersion

C. Load balancers

D. Off-site backups

Explanation

Geographic dispersion (or geographic distribution) involves physically locating critical infrastructure, such as servers and data centers, in multiple, geographically separate areas.

Mitigates Weather Risks:

This strategy directly addresses the concern about local weather events. If a hurricane, flood, or tornado damages the primary server room, systems located in a different geographic region (outside the affected weather pattern) can continue to operate, preventing downtime.

Provides Redundancy:

It is a form of infrastructure redundancy that ensures business continuity even when an entire site is lost due to a natural disaster.

While other options provide elements of high availability or data recovery, geographic dispersion is the most comprehensive solution for protecting against large-scale physical threats like weather.
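The contrast between clustering in one room and dispersing sites across regions can be modeled in a few lines of Python. This is a hypothetical sketch: the site and region names are made up. The actual failover mechanism (DNS changes, global load balancing, replication) is abstracted into "use the first healthy site".

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    name: str
    region: str
    healthy: bool = True


def apply_weather_event(sites: List[Site], region: str) -> None:
    """Mark every site in the affected region as down."""
    for site in sites:
        if site.region == region:
            site.healthy = False


def active_site(sites: List[Site]) -> Optional[Site]:
    """Return the first healthy site in priority order, or None if all are down."""
    for site in sites:
        if site.healthy:
            return site
    return None


# Clustered: both nodes in one server room, so one storm takes out both.
clustered = [Site("node-a", "gulf-coast"), Site("node-b", "gulf-coast")]
apply_weather_event(clustered, "gulf-coast")
print(active_site(clustered))  # None

# Geographically dispersed: the secondary site is outside the storm's path.
dispersed = [Site("primary", "gulf-coast"), Site("secondary", "midwest")]
apply_weather_event(dispersed, "gulf-coast")
print(active_site(dispersed).name)  # secondary
```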

Why the Other Options Are Incorrect

A. Clustering servers:

Server clustering (e.g., a failover cluster) connects multiple servers to work as a single system for high availability. If one server fails, another in the cluster takes over. However, if all servers are in the same server room, a single weather event could damage the entire cluster and still cause downtime. It does not protect against a site-level failure.

C. Load balancers:

A load balancer distributes network traffic across multiple servers to optimize resource use and prevent any single server from becoming a bottleneck. Like clustering, it is designed for performance and availability within a single location but offers no protection if the entire location is taken offline by a disaster.

D. Off-site backups:

Off-site backups are crucial for data recovery after a disaster. They protect the data itself but do not directly prevent downtime. Restoring from backups to a new environment can take hours or days, during which the company would still be experiencing downtime. Geographic dispersion aims to avoid downtime altogether by having systems already running in another location.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

3.4 Explain the importance of resilience and recovery in security architecture.

Disaster Recovery: Geographic dispersion is a key strategy for disaster recovery sites (e.g., hot sites, cold sites).

Site resilience: The same objective covers how geographic location affects site resilience and the ability to maintain operations during a disaster.

An organization would like to store customer data on a separate part of the network that is not accessible to users on the main corporate network. Which of the following should the administrator use to accomplish this goal?

A. Segmentation

B. Isolation

C. Patching

D. Encryption

Explanation

Segmentation is the practice of dividing a network into smaller, distinct subnetworks (subnets or segments). This is accomplished using switches, routers, and firewalls to control traffic between segments.

How it accomplishes the goal:

The administrator can place the customer data on a dedicated, isolated network segment. Firewall rules and access control lists (ACLs) can then be configured to deny all traffic from the main corporate network to this segment, making it inaccessible to general users. Only authorized systems or administrators (e.g., a specific application server) would be granted access.

This approach enhances security by limiting lateral movement and ensuring that only necessary systems can communicate with the sensitive data store.
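The ACL logic described above can be sketched with Python's standard `ipaddress` module. All addresses, the database port 5432, and the two-rule policy are hypothetical. Real firewalls and switches use their own rule syntax, but most share the first-match-wins, implicit-deny evaluation shown here.

```python
import ipaddress
from typing import Iterable, NamedTuple, Optional


class Rule(NamedTuple):
    action: str                  # "allow" or "deny"
    src: ipaddress.IPv4Network
    dst: ipaddress.IPv4Network
    port: Optional[int]          # None matches any port


def evaluate(rules: Iterable[Rule], src: str, dst: str, port: int) -> str:
    """First matching rule wins; anything unmatched is denied (implicit deny)."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for rule in rules:
        if s in rule.src and d in rule.dst and rule.port in (None, port):
            return rule.action
    return "deny"


net = ipaddress.ip_network
CUSTOMER_DATA = net("10.50.0.0/24")  # Hypothetical customer-data segment.
CORPORATE = net("10.10.0.0/16")      # Hypothetical main corporate network.
APP_SERVER = net("10.10.5.20/32")    # Hypothetical authorized application server.

RULES = [
    Rule("allow", APP_SERVER, CUSTOMER_DATA, 5432),  # App server to database only.
    Rule("deny", CORPORATE, CUSTOMER_DATA, None),    # Explicit deny for everyone else.
]

print(evaluate(RULES, "10.10.5.20", "10.50.0.7", 5432))  # allow
print(evaluate(RULES, "10.10.8.15", "10.50.0.7", 5432))  # deny
```

The explicit deny rule is technically redundant with the implicit deny. Many administrators still add it so the intent is visible and denied attempts can be logged.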

Why the Other Options Are Incorrect

B. Isolation:

While isolation (completely disconnecting a system from the network) would technically make the data inaccessible, it would also make it unusable for any legitimate purpose. The goal is to store data that presumably needs to be accessed by some authorized systems or processes, not to completely air-gap it. Segmentation is the practical implementation of logical isolation for specific network resources.

C. Patching:

Patching is the process of applying updates to software to fix vulnerabilities. While crucial for security, it does not control network access or make data inaccessible to users on the network. It protects systems from exploitation but does not hide them.

D. Encryption:

Encryption protects the confidentiality of data by making it unreadable without a key. However, it does not prevent access to the storage location. A user on the corporate network could still potentially access the encrypted data files; they just wouldn't be able to read them. The requirement is to prevent access entirely, which is achieved through network controls, not cryptographic ones.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

2.5 Explain the purpose of mitigation techniques used to secure the enterprise.

Segmentation: The objectives cover network segmentation as a primary control for limiting the scope of incidents and protecting critical assets.

A company has begun labeling all laptops with asset inventory stickers and associating them with employee IDs. Which of the following security benefits do these actions provide? (Choose two.)

A. If a security incident occurs on the device, the correct employee can be notified.

B. The security team will be able to send user awareness training to the appropriate device.

C. Users can be mapped to their devices when configuring software MFA tokens.

D. User-based firewall policies can be correctly targeted to the appropriate laptops.

E. When conducting penetration testing, the security team will be able to target the desired laptops.

F. Company data can be accounted for when the employee leaves the organization.

Explanation

Labeling assets and associating them with employee IDs is a fundamental practice of IT asset management and accountability. The two primary security benefits are:

A. If a security incident occurs on the device, the correct employee can be notified.

When a security tool (like EDR or antivirus) generates an alert from a specific device, the asset tag and employee association allow the security team to immediately identify who is responsible for that device. This enables rapid communication with the employee to investigate the incident (e.g., "Did you just click that link?") and contain potential threats.

F. Company data can be accounted for when the employee leaves the organization.

This practice is crucial for the offboarding process. When an employee leaves, the company can quickly identify all hardware assigned to that individual (laptop, phone, etc.) and ensure it is returned. This allows the company to secure the device, wipe it if necessary, and prevent the loss of sensitive company data.
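In practice this data lives in an asset management tool or CMDB. The Python dictionary below is a hypothetical stand-in, and the tag and employee ID formats are made up. It shows the two lookups that benefits A and F depend on: device to owner for incident response, and owner to devices for offboarding.

```python
from typing import Dict, List, Optional

# Hypothetical inventory: asset tag on the sticker -> assigned employee ID.
INVENTORY: Dict[str, str] = {
    "LT-0042": "E1001",
    "LT-0043": "E1002",
    "PH-0107": "E1001",
}


def owner_of(asset_tag: str) -> Optional[str]:
    """Incident response: which employee should be contacted about this device?"""
    return INVENTORY.get(asset_tag)


def assets_for(employee_id: str) -> List[str]:
    """Offboarding: every asset that must be returned and wiped."""
    return sorted(tag for tag, emp in INVENTORY.items() if emp == employee_id)


print(owner_of("LT-0043"))  # E1002
print(assets_for("E1001"))  # ['LT-0042', 'PH-0107']
```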

Why the Other Options Are Incorrect

B. The security team will be able to send user awareness training to the appropriate device.

Security awareness training is targeted at users, not devices. It is sent via email or assigned through a learning management system to an employee's account, not to a specific hardware asset.

C. Users can be mapped to their devices when configuring software MFA tokens.

Multi-Factor Authentication (MFA) tokens are tied to a user's identity in an identity provider (e.g., Azure AD, Okta), not to a specific physical device. A user can use their software MFA token on multiple devices (phone, laptop, tablet).

D. User-based firewall policies can be correctly targeted to the appropriate laptops.

Modern firewalls and network access control (NAC) systems apply policies based on user authentication or the device's certificate, not based on a physical asset sticker. The sticker itself does not help configure these policies.

E. When conducting penetration testing, the security team will be able to target the desired laptops.

Penetration testers target systems based on IP addresses, hostnames, or network ranges discovered during reconnaissance. They do not use physical asset inventory stickers to choose their targets.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

4.2 Explain the security implications of proper hardware, software, and data asset management.

This objective covers the importance of asset inventory, including tracking assigned users, for security purposes such as incident response and data accounting during offboarding.

A security engineer is working to address the growing risks that shadow IT services are introducing to the organization. The organization has taken a cloud-first approach and does not have an on-premises IT infrastructure. Which of the following would best secure the organization?

A. Upgrading to a next-generation firewall

B. Deploying an appropriate in-line CASB solution

C. Conducting user training on software policies

D. Configuring double key encryption in SaaS platforms

Explanation

A Cloud Access Security Broker (CASB) is a security policy enforcement point that sits between an organization's users and its cloud services. It is purpose-built for addressing the risks of shadow IT in a cloud-first environment.

Visibility:

A CASB provides discovery capabilities to identify all cloud services being used across the organization (shadow IT), categorizing them by risk.

Enforcement:

An in-line (or proxy) CASB can actively intercept and inspect traffic in real time. This allows it to enforce security policies, such as blocking access to unauthorized or high-risk cloud services, preventing data exfiltration, and ensuring compliance.

Data Security:

It can enforce data loss prevention (DLP) policies, encryption, and other controls on data being uploaded to or downloaded from cloud services.

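Since the organization has no on-premises infrastructure, an in-line CASB delivered from the cloud is the most effective technical control to directly manage and secure cloud application usage. For shadow IT it typically runs as a forward proxy on user traffic, because a reverse proxy only covers applications the organization has already integrated with it.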
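Real CASBs rely on large cloud-application risk catalogs, API integrations, and DLP engines. The Python sketch below only illustrates the two roles described above, discovery (visibility) and in-line blocking (enforcement), against a hypothetical list of sanctioned services. All hostnames are made-up examples.

```python
from collections import Counter
from typing import Iterable
from urllib.parse import urlsplit

# Hypothetical list of sanctioned cloud services.
SANCTIONED = {"mail.example-saas.com", "crm.example-saas.com"}


def discover_shadow_it(urls: Iterable[str]) -> Counter:
    """Visibility: count requests to cloud hosts that are not sanctioned."""
    hosts = (urlsplit(u).hostname for u in urls)
    return Counter(h for h in hosts if h and h not in SANCTIONED)


def inline_decision(url: str) -> str:
    """Enforcement: an in-line proxy blocks unsanctioned services as requests pass."""
    return "allow" if urlsplit(url).hostname in SANCTIONED else "block"


traffic = [
    "https://crm.example-saas.com/accounts",
    "https://files.unapproved-share.example/upload",
    "https://files.unapproved-share.example/list",
]
print(discover_shadow_it(traffic))  # Counter({'files.unapproved-share.example': 2})
print(inline_decision(traffic[1]))  # block
```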

Why the Other Options Are Incorrect

A. Upgrading to a next-generation firewall (NGFW):

An NGFW is excellent for securing network perimeters and enforcing policy for known traffic. However, much shadow IT traffic uses common, encrypted web protocols (HTTPS) that are difficult to distinguish from legitimate traffic without specialized cloud-focused inspection. A CASB is specifically designed for this cloud application context.

C. Conducting user training on software policies:

User training is a critical administrative control to raise awareness about the dangers of shadow IT and the organization's approved software list. However, training alone is not enforceable and will not prevent all users from circumventing policy. It is an important complementary measure but not the best technical solution for securing the environment.

D. Configuring double key encryption in SaaS platforms:

Double key encryption is a powerful data protection method where two keys are needed to decrypt data. While it enhances data security within known SaaS platforms, it does nothing to discover or block the use of unauthorized (shadow IT) SaaS platforms in the first place. It's a data control, not a usage control.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

2.1 Compare and contrast common threat actors and motivations.

The objectives list shadow IT as a threat actor.

2.5 Explain the purpose of mitigation techniques used to secure the enterprise.

This includes the use of cloud security solutions like CASB to enforce security policies for cloud services.

An administrator at a small business notices an increase in support calls from employees who receive a blocked page message after trying to navigate to a spoofed website. Which of the following should the administrator do?

A. Deploy multifactor authentication.

B. Decrease the level of the web filter settings

C. Implement security awareness training.

D. Update the acceptable use policy

Explanation

The scenario describes users attempting to navigate to spoofed websites (fraudulent sites designed to look legitimate) and being blocked by a web filter. This indicates that a technical control (the filter) is working correctly to prevent the threat.

The Root Cause:

The problem is that employees are clicking on links that lead to these malicious sites. This is a human factor issue, not a technical failure.

The Solution:

The most effective and appropriate response is to address this human factor through security awareness training. This training should educate employees on how to:

Identify phishing emails and suspicious links.

Recognize the signs of a spoofed website (e.g., checking the URL for slight misspellings and verifying certificate details, keeping in mind that HTTPS alone does not prove a site is legitimate).

Follow proper procedures for reporting suspected phishing attempts.

This proactive measure aims to reduce the number of times users click on malicious links in the first place.
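Training teaches people to perform this check by eye. The Python sketch below shows what "slight misspellings" means using edit (Levenshtein) distance. The trusted domain and the threshold of 2 are hypothetical. This is not a complete phishing detector: it misses tricks such as Unicode homoglyphs or a trusted name placed in a subdomain of an attacker's domain.

```python
from typing import Iterable


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if equal)
            ))
        prev = curr
    return prev[-1]


def looks_spoofed(host: str, trusted: Iterable[str], max_distance: int = 2) -> bool:
    """Flag hosts that are close to, but not exactly, a trusted domain."""
    host = host.lower()
    return any(0 < edit_distance(host, t) <= max_distance for t in trusted)


TRUSTED = ["examplebank.com"]  # Hypothetical legitimate domain.
print(looks_spoofed("examp1ebank.com", TRUSTED))  # True  ("l" replaced by "1")
print(looks_spoofed("examplebank.com", TRUSTED))  # False (exact match)
print(looks_spoofed("weather.org", TRUSTED))      # False (not similar)
```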

Why the Other Options Are Incorrect

A. Deploy multifactor authentication (MFA):

MFA is a critical security control for protecting accounts from unauthorized access, even if credentials are stolen. However, it does not prevent users from clicking links and attempting to visit spoofed websites. It protects against the consequences of a successful phishing attack but does not address the initial user behavior described.

B. Decrease the level of the web filter settings:

This would be a dangerous and incorrect action. The web filter is successfully doing its job by blocking access to known malicious sites. Lowering its security settings would allow employees to access these spoofed websites, potentially leading to malware infections or credential theft.

D. Update the acceptable use policy (AUP):

An AUP defines proper and improper use of company resources. While it might prohibit visiting malicious sites, updating it is a passive administrative control. It sets rules but does not actively train users to recognize the threat. It also does nothing to address the immediate behavior of clicking links.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

5.6 Given a scenario, implement security awareness practices.

This includes the critical role of security awareness training in creating a human firewall against social engineering and phishing attacks.

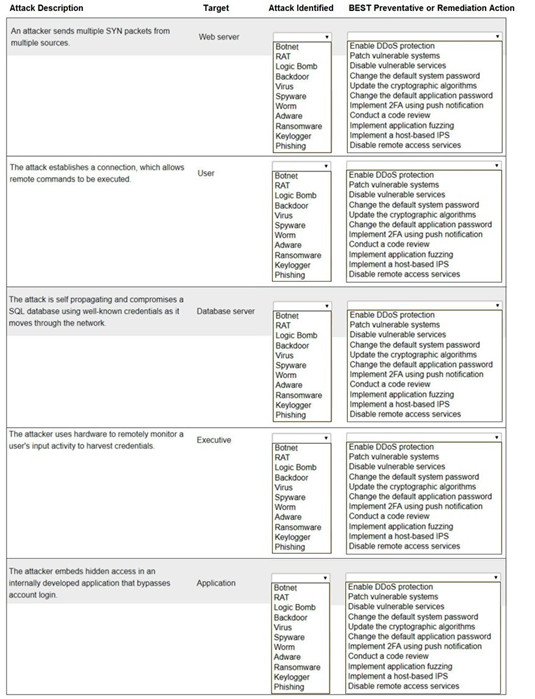

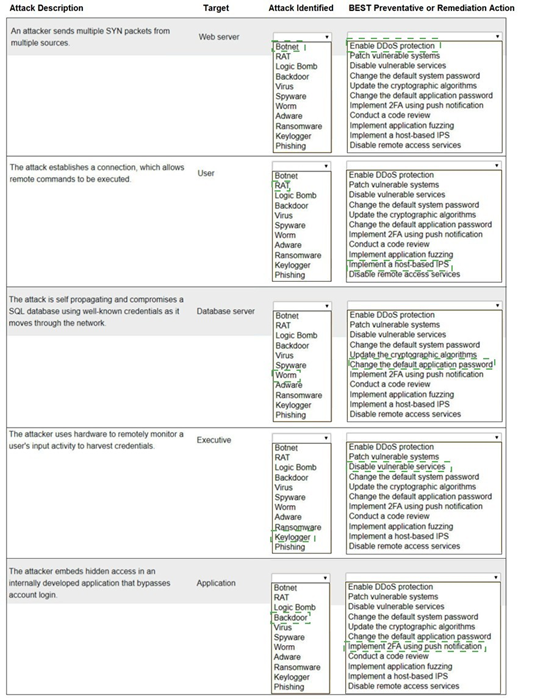

Select the appropriate attack and remediation from each drop-down list to label the corresponding attack with its remediation.

INSTRUCTIONS

Not all attacks and remediation actions will be used.

If at any time you would like to bring back the initial state of the simulation, please click the Reset All button.

1. An attacker sends multiple SYN packets from multiple sources.

Target: Web server

Attack Identified: DDoS (Distributed Denial of Service), specifically a SYN flood. The sources send SYN packets but never complete the TCP handshake, filling the server's table of half-open connections. The drop-down may not use the exact term "SYN flood," but the multiple sources and the matching remediation identify it as a DDoS.

BEST Preventative or Remediation Action: Enable DDoS protection (This action is specifically designed to mitigate large-scale volumetric attacks like SYN floods from multiple sources.)

2. The attack establishes a connection, which allows remote commands to be executed.

Target: User

Attack Identified: RAT (Remote Access Trojan). This malware provides the attacker with remote control of the victim's machine, allowing them to execute commands.

BEST Preventative or Remediation Action: Implement a host-based IPS (A host-based Intrusion Prevention System can detect and block the malicious network traffic and behavior associated with a RAT calling home and receiving commands.)

3. The attack is self propagating and compromises a SQL database using well-known credentials as it moves through the network.

Target: Database server

Attack Identified: Worm (A worm is a self-replicating, self-propagating piece of malware that spreads across a network without user interaction.)

BEST Preventative or Remediation Action: Change the default application password (The attack specifically uses "well-known credentials." The most direct remediation is to change those default passwords on the database service to strong, unique ones, preventing the worm from authenticating.)

4. The attacker uses hardware to remotely monitor a user's input activity to harvest credentials.

Target: Executive

Attack Identified: Keylogger (hardware or software that records keystrokes to harvest credentials; if the drop-down shows "Keyfogger," treat it as a typo for keylogger).

BEST Preventative or Remediation Action: Implement MFA using push notification (Multi-factor authentication using push notifications is the best remediation. Even if credentials are harvested by the keylogger, the attacker would still need to bypass the second factor to gain access.)

5. The attacker embeds hidden access in an internally developed application that bypasses account login.

Target: Application

Attack Identified: Backdoor (A backdoor is a piece of code intentionally hidden in an application to bypass normal authentication mechanisms and provide covert access.)

BEST Preventative or Remediation Action: Conduct a code review (The most effective way to find a maliciously planted backdoor in custom-developed code is through a thorough manual or automated code review by a security-aware developer.)
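DDoS protection services combine several techniques, such as upstream traffic scrubbing, rate limiting, and SYN cookies. The toy Python model below focuses on just one of them, SYN cookies, to show why the SYN flood in item 1 locks out real users and how avoiding per-SYN state helps. The table capacity is hypothetical, and the addresses come from documentation-only ranges.

```python
class HalfOpenTable:
    """Toy model of a server's table of half-open (SYN received) connections."""

    def __init__(self, capacity: int, syn_cookies: bool = False):
        self.capacity = capacity
        self.syn_cookies = syn_cookies
        self.pending = set()  # Keyed by source address for simplicity.

    def on_syn(self, source: str) -> bool:
        """Return True if the server can reply to this SYN with a SYN-ACK."""
        if self.syn_cookies:
            # SYN cookies encode connection state in the SYN-ACK sequence number,
            # so no table slot is used until the client's final ACK arrives.
            return True
        if len(self.pending) >= self.capacity:
            return False  # Table full: legitimate clients are turned away.
        self.pending.add(source)  # Spoofed sources never ACK, so the slot stays used.
        return True


server = HalfOpenTable(capacity=3)
for i in range(3):                     # Flood from several spoofed sources.
    server.on_syn(f"203.0.113.{i}")
print(server.on_syn("198.51.100.7"))   # False: the real user is refused

protected = HalfOpenTable(capacity=3, syn_cookies=True)
for i in range(3):
    protected.on_syn(f"203.0.113.{i}")
print(protected.on_syn("198.51.100.7"))  # True
```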

A software developer would like to ensure that the source code cannot be reverse engineered or debugged. Which of the following should the developer consider?

A. Version control

B. Obfuscation toolkit

C. Code reuse

D. Continuous integration

E. Stored procedures

Explanation

Obfuscation is a technique specifically designed to make source code difficult for humans to understand and for automated tools to reverse-engineer or debug.

How it works:

An obfuscation toolkit will transform the original, readable source code into a functional equivalent that is deliberately complex, confusing, and unstructured. It does this by renaming variables to nonsensical characters, inserting dead code, encrypting parts of the code, and breaking up logical flows.

Purpose:

The primary goal is to protect intellectual property and prevent malicious actors from easily analyzing the code to find vulnerabilities, bypass licensing checks, or create exploits. Obfuscation cannot make reverse engineering impossible, but it makes reverse engineering and debugging significantly harder, which is the closest match to the developer's goal.
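Commercial obfuscation toolkits chain many transformations. The Python sketch below shows only the simplest one mentioned above, renaming identifiers to meaningless names, using the standard `ast` module (Python 3.9+ for `ast.unparse`). It is a teaching toy, not a toolkit. It keeps function names so callers still work, and it does not handle keyword arguments at call sites, attributes, or names shared across modules.

```python
import ast


def obfuscate_names(source: str) -> str:
    """Rename function arguments and assigned variables to meaningless names."""
    tree = ast.parse(source)

    # Pass 1: collect every name bound by a parameter or an assignment target.
    bound = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.arg):
            bound.add(node.arg)
        elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
            bound.add(node.id)
    mapping = {name: f"_{i}" for i, name in enumerate(sorted(bound))}

    # Pass 2: rewrite every definition and use of those names.
    for node in ast.walk(tree):
        if isinstance(node, ast.arg) and node.arg in mapping:
            node.arg = mapping[node.arg]
        elif isinstance(node, ast.Name) and node.id in mapping:
            node.id = mapping[node.id]
    return ast.unparse(tree)


original = """
def monthly_payment(principal, rate, months):
    factor = (1 + rate) ** months
    return principal * rate * factor / (factor - 1)
"""
print(obfuscate_names(original))
```

The output behaves identically but no longer reveals what each value means. This is why even simple renaming slows down a human analyst.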

Why the Other Options Are Incorrect

A. Version control:

Systems like Git are used to track changes in source code over time, allowing developers to collaborate and revert to previous states. They do not protect the code from being reverse-engineered once it is compiled and distributed.

C. Code reuse:

This is the practice of using existing code to build new functionality. It improves development efficiency but has no effect on the ability to reverse engineer the final application.

D. Continuous integration (CI):

CI is a development practice where developers frequently merge code changes into a central repository, after which automated builds and tests are run. It is a process for improving software quality and speed of development, not for securing code against analysis.

E. Stored procedures:

These are precompiled SQL routines stored within a database server. They are used to encapsulate database logic for performance and security but are unrelated to protecting application source code from reverse engineering.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

2.1 Explain the importance of security concepts in an enterprise environment.

The concept of obfuscation falls under application security and protecting intellectual property.

Which of the following should a security administrator adhere to when setting up a new set of firewall rules?

A. Disaster recovery plan

B. Incident response procedure

C. Business continuity plan

D. Change management procedure

Explanation

A change management procedure is a formal process used to ensure that modifications to IT systems, such as implementing a new set of firewall rules, are reviewed, approved, tested, and documented before being implemented.

Why it's essential for firewall rules:

Firewall rules are a critical security control. Making changes without a formal process can inadvertently create security holes, cause service outages, or violate compliance requirements.

The Process:

The change management procedure would require the security administrator to:

Submit a request detailing the change, its purpose, and its potential impact.

Get approval from relevant stakeholders (e.g., network team, system owners, management).

Test the changes in a non-production environment.

Schedule the implementation for a specific maintenance window.

Document the new rules for future auditing and reference.

Adhering to this process minimizes risk and ensures stability and security.
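Organizations usually enforce this workflow in an ITSM or ticketing tool. The hypothetical Python sketch below only illustrates the key property: a firewall change cannot skip a stage. The stage names follow the steps listed above, with an "implemented" stage added between scheduling and documentation. The request summary is a made-up example.

```python
from enum import Enum


class Stage(Enum):
    SUBMITTED = 1
    APPROVED = 2
    TESTED = 3
    SCHEDULED = 4
    IMPLEMENTED = 5
    DOCUMENTED = 6


class ChangeRequest:
    """A firewall change that must pass through every stage in order."""

    def __init__(self, summary: str):
        self.summary = summary
        self.stage = Stage.SUBMITTED
        self.history = [Stage.SUBMITTED]

    def advance(self, to: Stage) -> None:
        """Move to the next stage; skipping a stage (e.g. testing) is refused."""
        if to.value != self.stage.value + 1:
            raise ValueError(f"cannot move from {self.stage.name} to {to.name}")
        self.stage = to
        self.history.append(to)


cr = ChangeRequest("Allow TCP 443 from DMZ web tier to app tier")
cr.advance(Stage.APPROVED)
try:
    cr.advance(Stage.IMPLEMENTED)  # Attempt to skip testing and scheduling.
except ValueError as err:
    print(err)  # cannot move from APPROVED to IMPLEMENTED
```

The `history` list doubles as a simple audit trail, which supports the documentation step.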

Why the Other Options Are Incorrect

A. Disaster recovery plan (DRP):

A DRP focuses on restoring IT systems and operations after a major disaster or outage has occurred. It is a reactive plan, not a procedural guide for making routine configuration changes.

B. Incident response procedure:

This procedure outlines the steps to take when responding to an active security incident (e.g., a breach, malware infection). It is used to contain, eradicate, and recover from an attack. Setting up new firewall rules is a proactive maintenance task, not an incident response action.

C. Business continuity plan (BCP):

A BCP is a broader plan that focuses on maintaining essential business functions during and after a disaster. While related to DRP, it is more about keeping the business running (e.g., using manual processes) and is not a guideline for IT change control.

Reference

This aligns with the CompTIA Security+ (SY0-701) Exam Objectives, specifically under:

1.3 Explain the importance of change management processes and the impact to security.

Change Management: The objectives emphasize the importance of a formal change management process to maintain security and avoid unintended outages when modifying systems.
