Topic 1: Exam Pool A

A company has purchased appliances from different vendors. The appliances all have IoT

sensors. The sensors send status information in the vendors' proprietary formats to a

legacy application that parses the information into JSON. The parsing is simple, but each

vendor has a unique format. Once daily, the application parses all the JSON records and

stores the records in a relational database for analysis.

The company needs to design a new data analysis solution that can deliver results faster and

optimize costs.

Which solution will meet these requirements?

A. Connect the IoT sensors to AWS IoT Core. Set a rule to invoke an AWS Lambda function to parse the information and save a .csv file to Amazon S3. Use AWS Glue to catalog the files. Use Amazon Athena and Amazon QuickSight for analysis.

B. Migrate the application server to AWS Fargate, which will receive the information from IoT sensors and parse the information into a relational format. Save the parsed information to Amazon Redshift for analysis.

C. Create an AWS Transfer for SFTP server. Update the IoT sensor code to send the information as a .csv file through SFTP to the server. Use AWS Glue to catalog the files. Use Amazon Athena for analysis.

D. Use AWS Snowball Edge to collect data from the IoT sensors directly to perform local analysis. Periodically collect the data into Amazon Redshift to perform global analysis.

Explanation:

Connect the IoT sensors to AWS IoT Core. Set a rule to invoke an AWS Lambda

function to parse the information and save a .csv file to Amazon S3. Use AWS

Glue to catalog the files. Use Amazon Athena and Amazon QuickSight for

analysis. This solution meets the requirement of faster analysis and cost

optimization by using AWS IoT Core to collect data from the IoT sensors in real time

and then using AWS Glue and Amazon Athena for efficient data analysis.

This solution involves connecting the IoT sensors to AWS IoT Core, setting a rule to

invoke an AWS Lambda function to parse the information, and saving a .csv file to Amazon

S3. AWS Glue can be used to catalog the files and Amazon Athena and Amazon

QuickSight can be used for analysis. This solution will enable faster and more cost-effective

data analysis.

This solution is in line with the official Amazon Textbook and Resources for the AWS

Certified Solutions Architect - Professional certification. In particular, the book states that:

“AWS IoT Core can be used to ingest and process the data, AWS Lambda can be used to

process and transform the data, and Amazon S3 can be used to store the data. AWS Glue can be used to catalog and access the data, Amazon Athena can be used to query the

data, and Amazon QuickSight can be used to visualize the data.”
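
As a rough illustration of the parsing step in option A, the sketch below normalizes two made-up vendor payloads into CSV text inside a Lambda-style handler. The vendor names, payload layouts, event shape, and helper names are all assumptions for illustration; the question does not describe the real formats, and the upload to Amazon S3 is left out.

```python
import csv
import io

# Hypothetical vendor formats (assumptions, not taken from the question):
#   vendor_a: "device42;temp=21.5;status=OK"
#   vendor_b: "device7|OK|19.0"
FIELDS = ["device_id", "temperature", "status"]


def parse_vendor_a(raw: str) -> dict:
    """Parse 'id;key=value;key=value' into a normalized record."""
    device_id, *pairs = raw.split(";")
    values = dict(pair.split("=", 1) for pair in pairs)
    return {
        "device_id": device_id,
        "temperature": float(values["temp"]),
        "status": values["status"],
    }


def parse_vendor_b(raw: str) -> dict:
    """Parse 'id|status|temperature' into a normalized record."""
    device_id, status, temperature = raw.split("|")
    return {
        "device_id": device_id,
        "temperature": float(temperature),
        "status": status,
    }


# One parser per vendor, because each vendor has a unique format.
PARSERS = {"vendor_a": parse_vendor_a, "vendor_b": parse_vendor_b}


def to_csv(messages) -> str:
    """Convert a list of {'vendor', 'payload'} messages into CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for message in messages:
        writer.writerow(PARSERS[message["vendor"]](message["payload"]))
    return buffer.getvalue()


def handler(event, context=None):
    # Assumed event shape: {"messages": [{"vendor": ..., "payload": ...}]}.
    # A real function would write the CSV text to Amazon S3 here.
    return {"csv": to_csv(event["messages"])}
```

Keeping one small parser per vendor mirrors the statement that the parsing is simple but vendor-specific; adding a vendor would only mean registering another function in `PARSERS`.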

A solutions architect needs to implement a client-side encryption mechanism for objects

that will be stored in a new Amazon S3 bucket. The solutions architect created a CMK that is stored in AWS Key Management Service (AWS KMS) for this purpose.

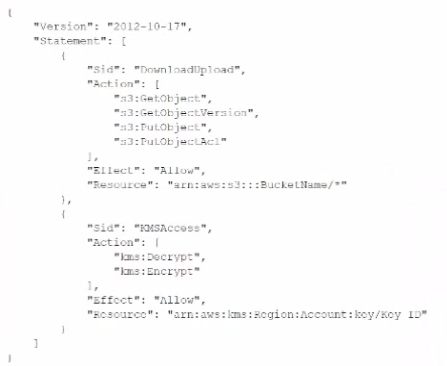

The solutions architect created the following IAM policy and attached it to an IAM role:

During tests, the solutions architect was able to successfully get existing test objects in the

S3 bucket. However, attempts to upload a new object resulted in an error message. The

error message stated that the action was forbidden.

Which action must the solutions architect add to the IAM policy to meet all the

requirements?

A. kms:GenerateDataKey

B. kms:GetKeyPolicy

C. kms:GetPublicKey

D. kms:Sign

A company needs to establish a connection from its on-premises data center to AWS. The

company needs to connect all of its VPCs that are located in different AWS Regions with

transitive routing capabilities between VPC networks. The company also must reduce

network outbound traffic costs, increase bandwidth throughput, and provide a consistent

network experience for end users.

Which solution will meet these requirements?

A. Create an AWS Site-to-Site VPN connection between the on-premises data center and a new central VPC. Create VPC peering connections that initiate from the central VPC to all other VPCs.

B. Create an AWS Direct Connect connection between the on-premises data center and AWS. Provision a transit VIF, and connect it to a Direct Connect gateway. Connect the Direct Connect gateway to all the other VPCs by using a transit gateway in each Region.

C. Create an AWS Site-to-Site VPN connection between the on-premises data center and a new central VPC. Use a transit gateway with dynamic routing. Connect the transit gateway to all other VPCs.

D. Create an AWS Direct Connect connection between the on-premises data center and AWS. Establish an AWS Site-to-Site VPN connection between all VPCs in each Region. Create VPC peering connections that initiate from the central VPC to all other VPCs.

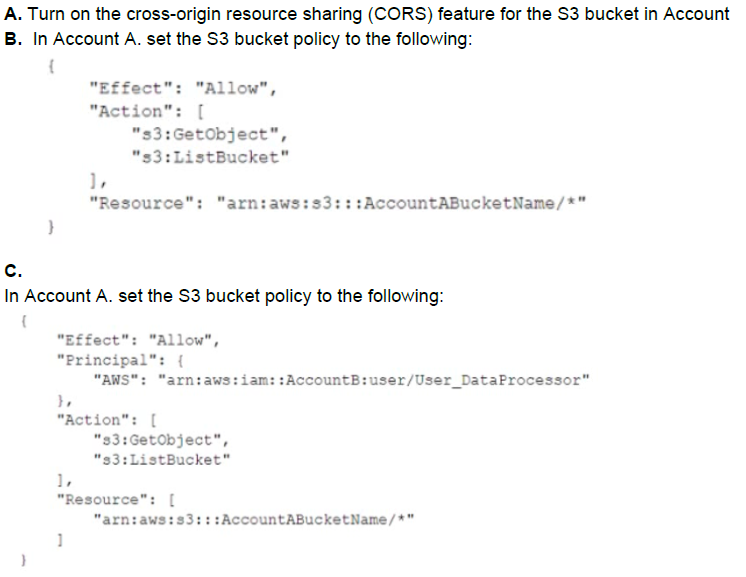

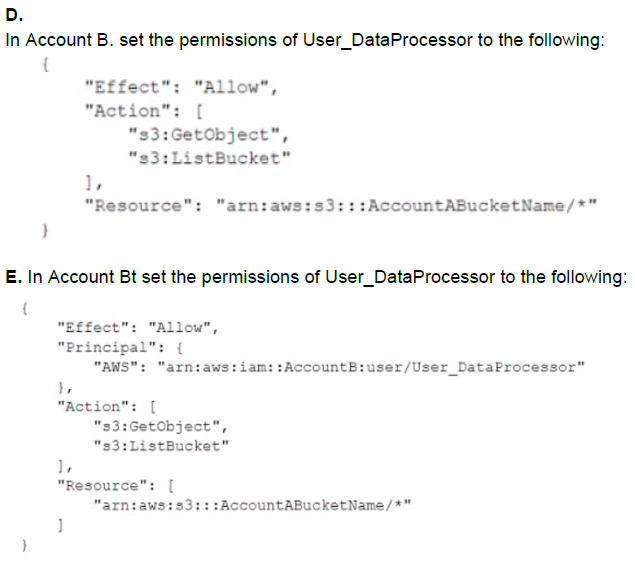

A retail company needs to provide a series of data files to another company, which is its

business partner. These files are saved in an Amazon S3 bucket under Account A, which

belongs to the retail company. The business partner company wants one of its IAM users,

User_DataProcessor, to access the files from its own AWS account (Account B).

Which combination of steps must the companies take so that User_DataProcessor can

access the S3 bucket successfully? (Select TWO.)

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E

A company runs a processing engine in the AWS Cloud. The engine processes

environmental data from logistics centers to calculate a sustainability index. The company

has millions of devices in logistics centers that are spread across Europe. The devices send

information to the processing engine through a RESTful API.

The API experiences unpredictable bursts of traffic. The company must implement a

solution to process all data that the devices send to the processing engine. Data loss is

unacceptable.

Which solution will meet these requirements?

A. Create an Application Load Balancer (ALB) for the RESTful API. Create an Amazon Simple Queue Service (Amazon SQS) queue. Create a listener and a target group for the ALB. Add the SQS queue as the target. Use a container that runs in Amazon Elastic Container Service (Amazon ECS) with the Fargate launch type to process messages in the queue.

B. Create an Amazon API Gateway HTTP API that implements the RESTful API. Create an Amazon Simple Queue Service (Amazon SQS) queue. Create an API Gateway service integration with the SQS queue. Create an AWS Lambda function to process messages in the SQS queue.

C. Create an Amazon API Gateway REST API that implements the RESTful API. Create a fleet of Amazon EC2 instances in an Auto Scaling group. Create an API Gateway Auto Scaling group proxy integration. Use the EC2 instances to process incoming data.

D. Create an Amazon CloudFront distribution for the RESTful API. Create a data stream in Amazon Kinesis Data Streams. Set the data stream as the origin for the distribution. Create an AWS Lambda function to consume and process data in the data stream.

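Explanation: An ALB target group accepts only instances, IP addresses, and Lambda functions, so an SQS queue cannot be added as an ALB target and option A cannot be built as described. Option B keeps the same buffering idea in a form that works: the API Gateway HTTP API absorbs the unpredictable bursts of traffic and writes each request to the SQS queue through a service integration. The SQS queue acts as a durable buffer for incoming data, and the AWS Lambda function processes messages from the queue. This approach ensures that all data is processed and prevents data loss.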
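
To make the "no data loss" point concrete, here is a minimal sketch of a queue consumer written as a Lambda handler for an SQS event source. It assumes that partial batch responses (`ReportBatchItemFailures`) are enabled on the event source mapping, and `process_record` with its `device_id` check is a hypothetical stand-in for the real processing logic.

```python
import json


def process_record(body: dict) -> None:
    """Hypothetical processing step; raises if the payload is unusable."""
    if "device_id" not in body:
        raise ValueError("missing device_id")


def handler(event, context=None):
    """Process an SQS batch and report only the messages that failed.

    Messages listed in batchItemFailures stay in the queue and are retried;
    the rest of the batch is deleted, so one bad message does not force the
    whole batch to be reprocessed.
    """
    failures = []
    for record in event.get("Records", []):
        try:
            process_record(json.loads(record["body"]))
        except Exception:
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Pairing a consumer like this with a dead-letter queue on the source queue is one common way to keep repeatedly failing messages for later inspection instead of dropping them.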

A company is building a call center by using Amazon Connect. The company’s operations

team is defining a disaster recovery (DR) strategy across AWS Regions. The contact

center has dozens of contact flows, hundreds of users, and dozens of claimed phone

numbers.

Which solution will provide DR with the LOWEST RTO?

A. Create an AWS Lambda function to check the availability of the Amazon Connect instance and to send a notification to the operations team in case of unavailability. Create an Amazon EventBridge rule to invoke the Lambda function every 5 minutes. After notification, instruct the operations team to use the AWS Management Console to provision a new Amazon Connect instance in a second Region. Deploy the contact flows, users, and claimed phone numbers by using an AWS CloudFormation template.

B. Provision a new Amazon Connect instance with all existing users in a second Region. Create an AWS Lambda function to check the availability of the Amazon Connect instance. Create an Amazon EventBridge rule to invoke the Lambda function every 5 minutes. In the event of an issue, configure the Lambda function to deploy an AWS CloudFormation template that provisions contact flows and claimed numbers in the second Region.

C. Provision a new Amazon Connect instance with all existing contact flows and claimed phone numbers in a second Region. Create an Amazon Route 53 health check for the URL of the Amazon Connect instance. Create an Amazon CloudWatch alarm for failed health checks. Create an AWS Lambda function to deploy an AWS CloudFormation template that provisions all users. Configure the alarm to invoke the Lambda function.

D. Provision a new Amazon Connect instance with all existing users and contact flows in a second Region. Create an Amazon Route 53 health check for the URL of the Amazon Connect instance. Create an Amazon CloudWatch alarm for failed health checks. Create an AWS Lambda function to deploy an AWS CloudFormation template that provisions claimed phone numbers. Configure the alarm to invoke the Lambda function.

Explanation: Option D provisions a new Amazon Connect instance with all existing users and contact flows in a second Region. It also sets up an Amazon Route 53 health check for the URL of the Amazon Connect instance, an Amazon CloudWatch alarm for failed health checks, and an AWS Lambda function to deploy an AWS CloudFormation template that provisions claimed phone numbers. This option allows for the fastest recovery time because all the necessary components are already provisioned and ready to go in the second Region. In the event of a disaster, the failed health check will trigger the AWS Lambda function to deploy the CloudFormation template to provision the claimed phone numbers, which is the only missing component.

A company operates a proxy server on a fleet of Amazon EC2 instances. Partners in

different countries use the proxy server to test the company's functionality. The EC2

instances are running in a VPC, and the instances have access to the internet.

The company's security policy requires that partners can access resources only from

domains that the company owns.

Which solution will meet these requirements?

A. Create an Amazon Route 53 Resolver DNS Firewall domain list that contains the allowed domains. Configure a DNS Firewall rule group with a rule that has a high numeric value that blocks all requests. Configure a rule that has a low numeric value that allows requests for domains in the allowed list. Associate the rule group with the VPC.

B. Create an Amazon Route 53 Resolver DNS Firewall domain list that contains the allowed domains. Configure a Route 53 outbound endpoint. Associate the outbound endpoint with the VPC. Associate the domain list with the outbound endpoint.

C. Create an Amazon Route 53 traffic flow policy to match the allowed domains. Configure the traffic flow policy to forward requests that match to the Route 53 Resolver. Associate the traffic flow policy with the VPC.

D. Create an Amazon Route 53 outbound endpoint. Associate the outbound endpoint with the VPC. Configure a Route 53 traffic flow policy to forward requests for allowed domains to the outbound endpoint. Associate the traffic flow policy with the VPC.

Explanation: The company should create an Amazon Route 53 Resolver DNS Firewall domain list that

contains the allowed domains. The company should configure a DNS Firewall rule group

with a rule that has a high numeric value that blocks all requests. The company should

configure a rule that has a low numeric value that allows requests for domains in the

allowed list. The company should associate the rule group with the VPC. This solution will

meet the requirements because Amazon Route 53 Resolver DNS Firewall is a feature that

enables you to filter and regulate outbound DNS traffic for your VPC. You can create

reusable collections of filtering rules in DNS Firewall rule groups and associate them with

your VPCs. You can specify lists of domain names to allow or block, and you can

customize the responses for the DNS queries that you block1. By creating a domain list

with the allowed domains and a rule group with rules to allow or block requests based on

the domain list, the company can enforce its security policy and control access to sites.

The other options are not correct because:

Configuring a Route 53 outbound endpoint and associating it with the VPC would

not help with filtering outbound DNS traffic. A Route 53 outbound endpoint is a

resource that enables you to forward DNS queries from your VPC to your network

over AWS Direct Connect or VPN connections2. It does not provide any filtering

capabilities.

Creating a Route 53 traffic flow policy to match the allowed domains would not

help with filtering outbound DNS traffic. A Route 53 traffic flow policy is a resource

that enables you to route traffic based on multiple criteria, such as endpoint health,

geographic location, and latency3. It does not provide any filtering capabilities.

Creating a Gateway Load Balancer (GWLB) would not help with filtering outbound

DNS traffic. A GWLB is a service that enables you to deploy, scale, and manage

third-party virtual appliances such as firewalls, intrusion detection and prevention

systems, and deep packet inspection systems in the cloud4. It does not provide

any filtering capabilities.
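
The allow-then-block ordering described above can be sketched as a toy model. This is not the DNS Firewall API; it only imitates the idea that rules are evaluated from the lowest numeric priority upward and that the first match decides the action. The priorities, domain names, and the simplified wildcard matching are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    priority: int    # lower numbers are evaluated first
    action: str      # "ALLOW" or "BLOCK"
    domains: tuple   # exact names, "*.suffix" wildcards, or "*" for everything


def matches(pattern: str, name: str) -> bool:
    """Simplified domain matching: exact, leading-wildcard, or match-all."""
    name = name.rstrip(".").lower()
    pattern = pattern.rstrip(".").lower()
    if pattern == "*":
        return True
    if pattern.startswith("*."):
        return name.endswith(pattern[1:])  # "*.example.com" -> ".example.com"
    return name == pattern


def evaluate(rules, name: str) -> str:
    """Return the action of the first matching rule in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if any(matches(pattern, name) for pattern in rule.domains):
            return rule.action
    return "ALLOW"  # no rule matched, so the query is not filtered


RULES = [
    Rule(priority=100, action="ALLOW", domains=("example.com", "*.example.com")),
    Rule(priority=200, action="BLOCK", domains=("*",)),
]
```

Because the allow rule carries the lower number, queries for the company's own domains are answered before the catch-all block rule is ever reached.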

A solutions architect is designing an AWS account structure for a company that consists of

multiple teams. All the teams will work in the same AWS Region. The company needs a

VPC that is connected to the on-premises network. The company expects less than 50

Mbps of total traffic to and from the on-premises network.

Which combination of steps will meet these requirements MOST cost-effectively? (Select

TWO.)

A. Create an AWS CloudFormation template that provisions a VPC and the required subnets. Deploy the template to each AWS account.

B. Create an AWS CloudFormation template that provisions a VPC and the required subnets. Deploy the template to a shared services account. Share the subnets by using AWS Resource Access Manager.

C. Use AWS Transit Gateway along with an AWS Site-to-Site VPN for connectivity to the on-premises network. Share the transit gateway by using AWS Resource Access Manager.

D. Use AWS Site-to-Site VPN for connectivity to the on-premises network.

E. Use AWS Direct Connect for connectivity to the on-premises network.

A company that provides image storage services wants to deploy a customer-facing

solution to AWS. Millions of individual customers will use the solution. The solution will

receive batches of large image files, resize the files, and store the files in an Amazon S3

bucket for up to 6 months.

The solution must handle significant variance in demand. The solution must also be reliable

at enterprise scale and have the ability to rerun processing jobs in the event of failure.

Which solution will meet these requirements MOST cost-effectively?

A. Use AWS Step Functions to process the S3 event that occurs when a user stores an image. Run an AWS Lambda function that resizes the image in place and replaces the original file in the S3 bucket. Create an S3 Lifecycle expiration policy to expire all stored images after 6 months.

B. Use Amazon EventBridge to process the S3 event that occurs when a user uploads an image. Run an AWS Lambda function that resizes the image in place and replaces the original file in the S3 bucket. Create an S3 Lifecycle expiration policy to expire all stored images after 6 months.

C. Use S3 Event Notifications to invoke an AWS Lambda function when a user stores an image. Use the Lambda function to resize the image in place and to store the original file in the S3 bucket. Create an S3 Lifecycle policy to move all stored images to S3 Standard-Infrequent Access (S3 Standard-IA) after 6 months.

D. Use Amazon Simple Queue Service (Amazon SQS) to process the S3 event that occurs when a user stores an image. Run an AWS Lambda function that resizes the image and stores the resized file in an S3 bucket that uses S3 Standard-Infrequent Access (S3 Standard-IA). Create an S3 Lifecycle policy to move all stored images to S3 Glacier Deep Archive after 6 months.

Explanation:

S3 Event Notifications is a feature that allows users to receive notifications when certain

events happen in an S3 bucket, such as object creation or deletion1. Users can configure

S3 Event Notifications to invoke an AWS Lambda function when a user stores an image in

the bucket. Lambda is a serverless compute service that runs code in response to events

and automatically manages the underlying compute resources2. The Lambda function can

resize the image in place and store the original file in the same S3 bucket. This way, the

solution can handle significant variance in demand and be reliable at enterprise scale. The

solution can also rerun processing jobs in the event of failure by using the retry and dead-letter

queue features of Lambda2.

S3 Lifecycle is a feature that allows users to manage their objects so that they are stored

cost-effectively throughout their lifecycle3. Users can create an S3 Lifecycle policy to move

all stored images to S3 Standard-Infrequent Access (S3 Standard-IA) after 6 months. S3

Standard-IA is a storage class designed for data that is accessed less frequently, but

requires rapid access when needed4. It offers a lower storage cost than S3 Standard, but

charges a retrieval fee. Therefore, moving the images to S3 Standard-IA after 6 months

can reduce the storage cost for the solution.

Option A is incorrect because using AWS Step Functions to process the S3 event that

occurs when a user stores an image is not necessary or cost-effective. AWS Step

Functions is a service that lets users coordinate multiple AWS services into serverless

workflows. However, for this use case, a single Lambda function can handle the image

resizing task without needing Step Functions.

Option B is incorrect because using Amazon EventBridge to process the S3 event that

occurs when a user uploads an image is not necessary or cost-effective. Amazon

EventBridge is a serverless event bus service that makes it easy to connect applications

with data from a variety of sources. However, for this use case, S3 Event Notifications can

directly invoke the Lambda function without needing EventBridge.

Option D is incorrect because using Amazon Simple Queue Service (Amazon SQS) to

process the S3 event that occurs when a user stores an image is not necessary or cost-effective.

Amazon SQS is a fully managed message queuing service that enables users to

decouple and scale microservices, distributed systems, and serverless applications.

However, for this use case, S3 Event Notifications can directly invoke the Lambda function

without needing SQS. Moreover, storing the resized file in an S3 bucket that uses S3

Standard-IA will incur a retrieval fee every time the file is accessed, which may not be cost-effective for frequently accessed files.
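
For readers who have not seen the event that S3 Event Notifications hands to Lambda, the following sketch pulls the bucket and object key out of each record. The resize step itself is only a placeholder, and the bucket and key names used to exercise it are made up.

```python
from urllib.parse import unquote_plus


def extract_objects(event) -> list:
    """Return (bucket, key) pairs for every object-created record."""
    objects = []
    for record in event.get("Records", []):
        # Skip anything that is not an upload (for example, deletions).
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded, with spaces sent as "+".
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context=None):
    objects = extract_objects(event)
    for bucket, key in objects:
        # Placeholder: a real function would download, resize, and store here.
        print(f"would resize s3://{bucket}/{key}")
    return {"processed": len(objects)}
```

One design point worth checking in any "resize in place" variant: writing the result back to the same bucket and prefix can fire the notification again, so a prefix or suffix filter on the notification is usually needed to avoid a loop.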

A company runs an application in an on-premises data center. The application gives users

the ability to upload media files. The files persist in a file server. The web application has

many users. The application server is overutilized, which causes data uploads to fail

occasionally. The company frequently adds new storage to the file server. The company

wants to resolve these challenges by migrating the application to AWS.

Users from across the United States and Canada access the application. Only

authenticated users should have the ability to access the application to upload files. The

company will consider a solution that refactors the application, and the company needs to

accelerate application development.

Which solution will meet these requirements with the LEAST operational overhead?

A. Use AWS Application Migration Service to migrate the application server to Amazon EC2 instances. Create an Auto Scaling group for the EC2 instances. Use an Application Load Balancer to distribute the requests. Modify the application to use Amazon S3 to persist the files. Use Amazon Cognito to authenticate users.

B. Use AWS Application Migration Service to migrate the application server to Amazon EC2 instances. Create an Auto Scaling group for the EC2 instances. Use an Application Load Balancer to distribute the requests. Set up AWS IAM Identity Center (AWS Single Sign-On) to give users the ability to sign in to the application. Modify the application to use Amazon S3 to persist the files.

C. Create a static website for uploads of media files. Store the static assets in Amazon S3. Use AWS AppSync to create an API. Use AWS Lambda resolvers to upload the media files to Amazon S3. Use Amazon Cognito to authenticate users.

D. Use AWS Amplify to create a static website for uploads of media files. Use Amplify Hosting to serve the website through Amazon CloudFront. Use Amazon S3 to store the uploaded media files. Use Amazon Cognito to authenticate users.

Explanation:

The company should use AWS Amplify to create a static website for uploads of media files.

The company should use Amplify Hosting to serve the website through Amazon

CloudFront. The company should use Amazon S3 to store the uploaded media files. The

company should use Amazon Cognito to authenticate users. This solution will meet the

requirements with the least operational overhead because AWS Amplify is a complete

solution that lets frontend web and mobile developers easily build, ship, and host full-stack

applications on AWS, with the flexibility to leverage the breadth of AWS services as use

cases evolve. No cloud expertise needed1. By using AWS Amplify, the company can

refactor the application to a serverless architecture that reduces operational complexity and

costs. AWS Amplify offers the following features and benefits:

Amplify Studio: A visual interface that enables you to build and deploy a full-stack

app quickly, including frontend UI and backend.

Amplify CLI: A local toolchain that enables you to configure and manage an app

backend with just a few commands.

Amplify Libraries: Open-source client libraries that enable you to build cloud-powered

mobile and web apps.

Amplify UI Components: Open-source design system with cloud-connected

components for building feature-rich apps fast.

Amplify Hosting: Fully managed CI/CD and hosting for fast, secure, and reliable

static and server-side rendered apps.

By using AWS Amplify to create a static website for uploads of media files, the company

can leverage Amplify Studio to visually build a pixel-perfect UI and connect it to a cloud

backend in clicks. By using Amplify Hosting to serve the website through Amazon

CloudFront, the company can easily deploy its web app or website to the fast, secure, and

reliable AWS content delivery network (CDN), with hundreds of points of presence

globally. By using Amazon S3 to store the uploaded media files, the company can benefit

from a highly scalable, durable, and cost-effective object storage service that can handle

any amount of data2. By using Amazon Cognito to authenticate users, the company can

add user sign-up, sign-in, and access control to its web app with a fully managed service

that scales to support millions of users3.

The other options are not correct because:

Using AWS Application Migration Service to migrate the application server to

Amazon EC2 instances would not refactor the application or accelerate

development. AWS Application Migration Service (AWS MGN) is a service that

enables you to migrate physical servers, virtual machines (VMs), or cloud servers

from any source infrastructure to AWS without requiring agents or specialized

tools. However, this would not address the challenges of overutilization and data

upload failures. It would also not reduce operational overhead or costs compared

to a serverless architecture.

Creating a static website for uploads of media files and using AWS AppSync to

create an API would not be as simple or fast as using AWS Amplify. AWS

AppSync is a service that enables you to create flexible APIs for securely

accessing, manipulating, and combining data from one or more data sources.

However, this would require more configuration and management than using

Amplify Studio and Amplify Hosting. It would also not provide authentication

features like Amazon Cognito.

Setting up AWS IAM Identity Center (AWS Single Sign-On) to give users the ability

to sign in to the application would not be as suitable as using Amazon Cognito.

AWS Single Sign-On (AWS SSO) is a service that enables you to centrally

manage SSO access and user permissions across multiple AWS accounts and

business applications. However, this service is designed for enterprise customers

who need to manage access for employees or partners across multiple resources.

It is not intended for authenticating end users of web or mobile apps.

A company runs an IoT application in the AWS Cloud. The company has millions of

sensors that collect data from houses in the United States. The sensors use the MQTT

protocol to connect and send data to a custom MQTT broker. The MQTT broker stores the

data on a single Amazon EC2 instance. The sensors connect to the broker through the

domain named iot.example.com. The company uses Amazon Route 53 as its DNS service.

The company stores the data in Amazon DynamoDB.

On several occasions, the amount of data has overloaded the MQTT broker and has

resulted in lost sensor data. The company must improve the reliability of the solution.

Which solution will meet these requirements?

A. Create an Application Load Balancer (ALB) and an Auto Scaling group for the MQTT broker. Use the Auto Scaling group as the target for the ALB. Update the DNS record in Route 53 to an alias record. Point the alias record to the ALB. Use the MQTT broker to store the data.

B. Set up AWS IoT Core to receive the sensor data. Create and configure a custom domain to connect to AWS IoT Core. Update the DNS record in Route 53 to point to the AWS IoT Core Data-ATS endpoint. Configure an AWS IoT rule to store the data.

C. Create a Network Load Balancer (NLB). Set the MQTT broker as the target. Create an AWS Global Accelerator accelerator. Set the NLB as the endpoint for the accelerator. Update the DNS record in Route 53 to a multivalue answer record. Set the Global Accelerator IP addresses as values. Use the MQTT broker to store the data.

D. Set up AWS IoT Greengrass to receive the sensor data. Update the DNS record in Route 53 to point to the AWS IoT Greengrass endpoint. Configure an AWS IoT rule to invoke an AWS Lambda function to store the data.

Explanation: An Application Load Balancer handles only HTTP and HTTPS traffic, so it cannot front a broker that the sensors reach over plain MQTT, and option A would still leave the company operating and scaling its own broker fleet. Option B replaces the single-instance broker with AWS IoT Core, a managed MQTT message broker that scales with the number of connected devices. Configuring a custom domain and pointing the iot.example.com record in Route 53 at the AWS IoT Core Data-ATS endpoint lets the sensors keep their existing hostname, and an AWS IoT rule stores the incoming data. This removes the overloaded broker as a single point of failure and reduces the likelihood of lost sensor data.
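
The "Configure an AWS IoT rule to store the data" step in option B can be pictured as a rule definition like the one below. It is a sketch of the payload shape that a call such as boto3's `iot.create_topic_rule(ruleName=..., topicRulePayload=...)` would typically receive; the topic filter, table name, and role ARN are placeholders, since the question names none of them.

```python
def build_topic_rule_payload(topic_filter: str, table_name: str, role_arn: str) -> dict:
    """Build an AWS IoT topic rule payload that writes messages to DynamoDB."""
    return {
        # Select every field of every message published on matching topics.
        "sql": f"SELECT * FROM '{topic_filter}'",
        "awsIotSqlVersion": "2016-03-23",
        "ruleDisabled": False,
        "actions": [
            {
                # The dynamoDBv2 action maps message attributes to table columns.
                "dynamoDBv2": {
                    "roleArn": role_arn,
                    "putItem": {"tableName": table_name},
                }
            }
        ],
    }


# Placeholder values (assumptions, not from the question).
payload = build_topic_rule_payload(
    topic_filter="sensors/+/data",
    table_name="SensorData",
    role_arn="arn:aws:iam::123456789012:role/iot-dynamodb-writer",
)
```

With a rule like this, the data lands in the table without any broker or instance sitting in the write path.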

A company needs to audit the security posture of a newly acquired AWS account. The

company’s data security team requires a notification only when an Amazon S3 bucket

becomes publicly exposed. The company has already established an Amazon Simple

Notification Service (Amazon SNS) topic that has the data security team's email address

subscribed.

Which solution will meet these requirements?

A. Create an S3 event notification on all S3 buckets for the isPublic event. Select the SNS topic as the target for the event notifications.

B. Create an analyzer in AWS Identity and Access Management Access Analyzer. Create an Amazon EventBridge rule for the event type “Access Analyzer Finding” with a filter for “isPublic: true.” Select the SNS topic as the EventBridge rule target.

C. Create an Amazon EventBridge rule for the event type “Bucket-Level API Call via CloudTrail” with a filter for “PutBucketPolicy.” Select the SNS topic as the EventBridge rule target.

D. Activate AWS Config and add the cloudtrail-s3-dataevents-enabled rule. Create an Amazon EventBridge rule for the event type “Config Rules Re-evaluation Status” with a filter for “NON_COMPLIANT.” Select the SNS topic as the EventBridge rule target.
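
The filter mentioned in option B can be written as an EventBridge event pattern. The sketch below shows one plausible pattern together with a deliberately simplified matcher (exact values only, no prefix or numeric operators) so that the effect of the filter can be tried locally. The extra `resourceType` condition and the sample events are assumptions added for illustration.

```python
# Event pattern for public-access findings on S3 buckets.
PATTERN = {
    "source": ["aws.access-analyzer"],
    "detail-type": ["Access Analyzer Finding"],
    "detail": {
        "isPublic": [True],
        "resourceType": ["AWS::S3::Bucket"],  # assumption: limit to S3 buckets
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: every pattern key must be present in the event,
    and the event value must be one of the listed values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the nested event object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True
```

Only findings that report `isPublic` as true pass the filter, which lines up with the requirement that the team be notified only when a bucket becomes publicly exposed.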
