Topic 1: Exam Pool A

A financial services company receives a regular data feed from its credit card servicing

partner. Approximately 5,000 records are sent every 15 minutes in plaintext, delivered over

HTTPS directly into an Amazon S3 bucket with server-side encryption. This feed contains

sensitive credit card primary account number (PAN) data. The company needs to

automatically mask the PAN before sending the data to another S3 bucket for additional

internal processing. The company also needs to remove and merge specific fields, and

then transform the record into JSON format. Additionally, extra feeds are likely to be added

in the future, so any design needs to be easily expandable.

Which solution will meet these requirements?

A. Trigger an AWS Lambda function on file delivery that extracts each record and writes it to an Amazon SQS queue. Trigger another Lambda function when new messages arrive in the SQS queue to process the records, writing the results to a temporary location in Amazon S3. Trigger a final Lambda function once the SQS queue is empty to transform the records into JSON format and send the results to another S3 bucket for internal processing.

B. Trigger an AWS Lambda function on file delivery that extracts each record and writes it to an Amazon SQS queue. Configure an AWS Fargate container application to automatically scale to a single instance when the SQS queue contains messages. Have the application process each record, and transform the record into JSON format. When the queue is empty, send the results to another S3 bucket for internal processing and scale down the AWS Fargate instance.

C. Create an AWS Glue crawler and custom classifier based on the data feed formats and build a table definition to match. Trigger an AWS Lambda function on file delivery to start an AWS Glue ETL job to transform the entire record according to the processing and transformation requirements. Define the output format as JSON. Once complete, have the ETL job send the results to another S3 bucket for internal processing.

D. Create an AWS Glue crawler and custom classifier based upon the data feed formats and build a table definition to match. Perform an Amazon Athena query on file delivery to start an Amazon EMR ETL job to transform the entire record according to the processing and transformation requirements. Define the output format as JSON. Once complete, send the results to another S3 bucket for internal processing and scale down the EMR cluster.

Explanation: You can use a Glue crawler to populate the AWS Glue Data Catalog with tables. The Lambda function can be triggered using S3 event notifications when object create events occur. The Lambda function will then trigger the Glue ETL job to transform the records masking the sensitive data and modifying the output format to JSON. This solution meets all requirements.
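The trigger step described in the explanation can be sketched as a small Lambda handler. This is a minimal illustration, not the exam's reference implementation: the job name `mask-pan-and-convert-to-json` and the argument names `--source_bucket` and `--source_key` are assumptions, and the masking and JSON conversion are assumed to live inside the Glue ETL job itself.

```python
import urllib.parse

# Hypothetical job name; the source does not name the Glue ETL job.
GLUE_JOB_NAME = "mask-pan-and-convert-to-json"


def build_job_arguments(event):
    """Extract the bucket and key of each delivered object from an S3 event."""
    runs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        # Argument names are assumptions; the Glue job would read them at start-up.
        runs.append({"--source_bucket": bucket, "--source_key": key})
    return runs


def handler(event, context, glue_client=None):
    """Start one Glue job run per delivered object and return the run IDs."""
    if glue_client is None:
        import boto3  # imported lazily so the helper above works without AWS access

        glue_client = boto3.client("glue")
    run_ids = []
    for arguments in build_job_arguments(event):
        response = glue_client.start_job_run(
            JobName=GLUE_JOB_NAME, Arguments=arguments
        )
        run_ids.append(response["JobRunId"])
    return {"started": run_ids}
```

Passing the object location as job arguments keeps the handler feed-agnostic, so an additional feed would only need its own crawler, classifier, and job script.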

A global media company is planning a multi-Region deployment of an application. Amazon

DynamoDB global tables will back the deployment to keep the user experience consistent

across the two continents where users are concentrated. Each deployment will have a

public Application Load Balancer (ALB). The company manages public DNS internally. The

company wants to make the application available through an apex domain.

Which solution will meet these requirements with the LEAST effort?

A. Migrate public DNS to Amazon Route 53. Create CNAME records for the apex domain to point to the ALB. Use a geolocation routing policy to route traffic based on user location.

B. Place a Network Load Balancer (NLB) in front of the ALB. Migrate public DNS to Amazon Route 53. Create a CNAME record for the apex domain to point to the NLB's static IP address. Use a geolocation routing policy to route traffic based on user location.

C. Create an AWS Global Accelerator accelerator with multiple endpoint groups that target endpoints in appropriate AWS Regions. Use the accelerator's static IP address to create a record in public DNS for the apex domain.

D. Create an Amazon API Gateway API that is backed by AWS Lambda in one of the AWS Regions. Configure a Lambda function to route traffic to application deployments by using the round robin method. Create CNAME records for the apex domain to point to the API's URL.

Explanation: AWS Global Accelerator is a service that directs traffic to optimal endpoints (in this case, the Application Load Balancer) based on the health of the endpoints and network routing. It allows you to create an accelerator that directs traffic to multiple endpoint groups, one for each Region where the application is deployed. The accelerator uses the AWS global network to optimize the traffic routing to the healthy endpoint. By using Global Accelerator, the company can use a single static IP address for the apex domain, and traffic will be directed to the optimal endpoint based on the user's location, without the need for additional load balancers or routing policies.

A company wants to migrate to AWS. The company wants to use a multi-account structure

with centrally managed access to all accounts and applications. The company also wants

to keep the traffic on a private network. Multi-factor authentication (MFA) is required at

login, and specific roles are assigned to user groups.

The company must create separate accounts for development, staging, production, and

shared network. The production account and the shared network account must have

connectivity to all accounts. The development account and the staging account must have

access only to each other.

Which combination of steps should a solutions architect take to meet these requirements?

(Choose three.)

A. Deploy a landing zone environment by using AWS Control Tower. Enroll accounts and invite existing accounts into the resulting organization in AWS Organizations.

B. Enable AWS Security Hub in all accounts to manage cross-account access. Collect findings through AWS CloudTrail to force MFA login.

C. Create transit gateways and transit gateway VPC attachments in each account. Configure appropriate route tables.

D. Set up and enable AWS IAM Identity Center (AWS Single Sign-On). Create appropriate permission sets with required MFA for existing accounts.

E. Enable AWS Control Tower in all accounts to manage routing between accounts. Collect findings through AWS CloudTrail to force MFA login.

F. Create IAM users and groups. Configure MFA for all users. Set up Amazon Cognito user pools and identity pools to manage access to accounts and between accounts.

Explanation: The correct answer would be options A, C and D, because they address the requirements outlined in the question. A. Deploying a landing zone environment using AWS Control Tower and enrolling accounts in an organization in AWS Organizations allows for a centralized management of access to all accounts and applications. C. Creating transit gateways and transit gateway VPC attachments in each account and configuring appropriate route tables allows for private network traffic, and ensures that the production account and shared network account have connectivity to all accounts, while the development and staging accounts have access only to each other. D. Setting up and enabling AWS IAM Identity Center (AWS Single Sign-On) and creating appropriate permission sets with required MFA for existing accounts allows for multi-factor authentication at login and specific roles to be assigned to user groups.

A solutions architect is designing the data storage and retrieval architecture for a new

application that a company will be launching soon. The application is designed to ingest

millions of small records per minute from devices all around the world. Each record is less

than 4 KB in size and needs to be stored in a durable location where it can be retrieved

with low latency. The data is ephemeral and the company is required to store the data for

120 days only, after which the data can be deleted.

The solutions architect calculates that, during the course of a year, the storage

requirements would be about 10-15 TB.

Which storage strategy is the MOST cost-effective and meets the design requirements?

A. Design the application to store each incoming record as a single .csv file in an Amazon S3 bucket to allow for indexed retrieval. Configure a lifecycle policy to delete data older than 120 days.

B. Design the application to store each incoming record in an Amazon DynamoDB table properly configured for the scale. Configure the DynamoDB Time to Live (TTL) feature to delete records older than 120 days.

C. Design the application to store each incoming record in a single table in an Amazon RDS MySQL database. Run a nightly cron job that executes a query to delete any records older than 120 days.

D. Design the application to batch incoming records before writing them to an Amazon S3 bucket. Update the metadata for the object to contain the list of records in the batch and use the Amazon S3 metadata search feature to retrieve the data. Configure a lifecycle policy to delete the data after 120 days.

Explanation: DynamoDB with TTL is cheaper for a sustained throughput of small items and is suited to low-latency retrieval. Amazon S3 is cheaper for storage alone but incurs much higher costs for this volume of writes. Amazon RDS is not designed for this use case.
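As a rough sketch of how the 120-day expiry could be expressed, the item below carries a TTL attribute holding a Unix epoch time in seconds, which is the format DynamoDB TTL expects. The attribute names `device_id`, `created_at`, `payload`, and `expires_at` are hypothetical; the source does not define a table schema.

```python
import time

RETENTION_DAYS = 120  # retention period stated in the question
SECONDS_PER_DAY = 24 * 60 * 60


def build_item(device_id, payload, now=None):
    """Return an item dict with a TTL attribute set 120 days after creation."""
    created_at = int(time.time()) if now is None else int(now)
    return {
        "device_id": device_id,    # hypothetical partition key
        "created_at": created_at,  # hypothetical sort key
        "payload": payload,
        # DynamoDB TTL reads a Number attribute containing epoch seconds.
        "expires_at": created_at + RETENTION_DAYS * SECONDS_PER_DAY,
    }
```

The table's TTL setting would then point at `expires_at`, so expired items are removed without a scheduled cleanup job.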

A company built an application based on AWS Lambda deployed in an AWS

CloudFormation stack. The last production release of the web application introduced an

issue that resulted in an outage lasting several minutes. A solutions architect must adjust

the deployment process to support a canary release.

Which solution will meet these requirements?

A. Create an alias for every new deployed version of the Lambda function. Use the AWS CLI update-alias command with the routing-config parameter to distribute the load.

B. Deploy the application into a new CloudFormation stack. Use an Amazon Route 53 weighted routing policy to distribute the load.

C. Create a version for every new deployed Lambda function. Use the AWS CLI update-function-configuration command with the routing-config parameter to distribute the load.

D. Configure AWS CodeDeploy and use CodeDeployDefault.OneAtATime in the Deployment configuration to distribute the load.
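For reference, the routing configuration that the options mention is a property of a Lambda alias: it sends a fraction of the alias's traffic to a second version. The sketch below only builds the request parameters for the `UpdateAlias` API; the helper name `canary_alias_update` and all values passed to it are illustrative assumptions.

```python
def canary_alias_update(function_name, alias, stable_version, canary_version, canary_weight):
    """Build keyword arguments for a Lambda UpdateAlias call with weighted routing."""
    if not 0.0 <= canary_weight <= 1.0:
        raise ValueError("canary_weight must be between 0 and 1")
    return {
        "FunctionName": function_name,
        "Name": alias,
        # The alias keeps pointing at the stable version...
        "FunctionVersion": stable_version,
        # ...while this fraction of invocations goes to the canary version.
        "RoutingConfig": {"AdditionalVersionWeights": {canary_version: canary_weight}},
    }
```

With boto3 available, the result could be passed as `boto3.client("lambda").update_alias(**kwargs)`; for example, `canary_alias_update("web-app", "live", "1", "2", 0.1)` would describe sending 10% of traffic to version 2.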

A company's solutions architect is reviewing a web application that runs on AWS. The

application references static assets in an Amazon S3 bucket in the us-east-1 Region. The

company needs resiliency across multiple AWS Regions. The company already has

created an S3 bucket in a second Region.

Which solution will meet these requirements with the LEAST operational overhead?

A. Configure the application to write each object to both S3 buckets. Set up an Amazon Route 53 public hosted zone with a record set by using a weighted routing policy for each S3 bucket. Configure the application to reference the objects by using the Route 53 DNS name.

B. Create an AWS Lambda function to copy objects from the S3 bucket in us-east-1 to the S3 bucket in the second Region. Invoke the Lambda function each time an object is written to the S3 bucket in us-east-1. Set up an Amazon CloudFront distribution with an origin group that contains the two S3 buckets as origins.

C. Configure replication on the S3 bucket in us-east-1 to replicate objects to the S3 bucket in the second Region. Set up an Amazon CloudFront distribution with an origin group that contains the two S3 buckets as origins.

D. Configure replication on the S3 bucket in us-east-1 to replicate objects to the S3 bucket in the second Region. If failover is required, update the application code to load S3 objects from the S3 bucket in the second Region.

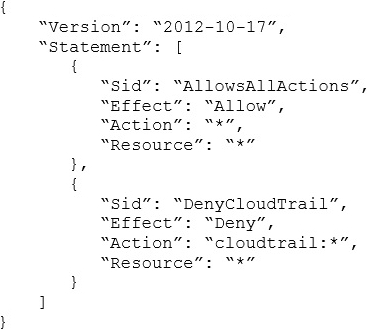

A company with several AWS accounts is using AWS Organizations and service control

policies (SCPs). An Administrator created the following SCP and has attached it to an

organizational unit (OU) that contains AWS account 1111-1111-1111:

Developers working in account 1111-1111-1111 complain that they cannot create Amazon

S3 buckets. How should the Administrator address this problem?

A. Add s3:CreateBucket with "Allow" effect to the SCP.

B. Remove the account from the OU, and attach the SCP directly to account 1111-1111-1111.

C. Instruct the Developers to add Amazon S3 permissions to their IAM entities.

D. Remove the SCP from account 1111-1111-1111.

A company uses AWS Organizations for a multi-account setup in the AWS Cloud. The

company uses AWS Control Tower for governance and uses AWS Transit Gateway for

VPC connectivity across accounts.

In an AWS application account, the company's application team has deployed a web

application that uses AWS Lambda and Amazon RDS. The company's database

administrators have a separate DBA account and use the account to centrally manage all

the databases across the organization. The database administrators use an Amazon EC2

instance that is deployed in the DBA account to access an RDS database that is deployed

in the application account.

The application team has stored the database credentials as secrets in AWS Secrets

Manager in the application account. The application team is manually sharing the secrets

with the database administrators. The secrets are encrypted by the default AWS managed

key for Secrets Manager in the application account. A solutions architect needs to

implement a solution that gives the database administrators access to the database and

eliminates the need to manually share the secrets.

Which solution will meet these requirements?

A. Use AWS Resource Access Manager (AWS RAM) to share the secrets from the application account with the DBA account. In the DBA account, create an IAM role that is named DBA-Admin. Grant the role the required permissions to access the shared secrets. Attach the DBA-Admin role to the EC2 instance for access to the cross-account secrets.

B. In the application account, create an IAM role that is named DBA-Secret. Grant the role the required permissions to access the secrets. In the DBA account, create an IAM role that is named DBA-Admin. Grant the DBA-Admin role the required permissions to assume the DBA-Secret role in the application account. Attach the DBA-Admin role to the EC2 instance for access to the cross-account secrets.

C. In the DBA account, create an IAM role that is named DBA-Admin. Grant the role the required permissions to access the secrets and the default AWS managed key in the application account. In the application account, attach resource-based policies to the key to allow access from the DBA account. Attach the DBA-Admin role to the EC2 instance for access to the cross-account secrets.

D. In the DBA account, create an IAM role that is named DBA-Admin. Grant the role the required permissions to access the secrets in the application account. Attach an SCP to the application account to allow access to the secrets from the DBA account. Attach the DBA-Admin role to the EC2 instance for access to the cross-account secrets.

A company has registered 10 new domain names. The company uses the domains for

online marketing. The company needs a solution that will redirect online visitors to a

specific URL for each domain. All domains and target URLs are defined in a JSON

document. All DNS records are managed by Amazon Route 53.

A solutions architect must implement a redirect service that accepts HTTP and HTTPS

requests.

Which combination of steps should the solutions architect take to meet these requirements

with the LEAST amount of operational effort? (Choose three.)

A. Create a dynamic webpage that runs on an Amazon EC2 instance. Configure the webpage to use the JSON document in combination with the event message to look up and respond with a redirect URL.

B. Create an Application Load Balancer that includes HTTP and HTTPS listeners.

C. Create an AWS Lambda function that uses the JSON document in combination with the event message to look up and respond with a redirect URL.

D. Use an Amazon API Gateway API with a custom domain to publish an AWS Lambda function.

E. Create an Amazon CloudFront distribution. Deploy a Lambda@Edge function.

F. Create an SSL certificate by using AWS Certificate Manager (ACM). Include the domains as Subject Alternative Names.
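The lookup-and-redirect function described in the options could look roughly like the sketch below. It assumes an event with a `headers` map that includes the request host, as delivered by an Application Load Balancer target or a similar HTTP integration; the `REDIRECTS_JSON` content is a hypothetical stand-in for the company's JSON document.

```python
import json

# Hypothetical stand-in for the JSON document of domains and target URLs.
REDIRECTS_JSON = '{"promo.example.com": "https://www.example.com/promo"}'
REDIRECTS = json.loads(REDIRECTS_JSON)


def handler(event, context):
    """Look up the request host and answer with a permanent redirect."""
    # Header names can vary in case, so normalize them before the lookup.
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    # Drop any port suffix and compare host names case-insensitively.
    host = headers.get("host", "").split(":")[0].lower()
    target = REDIRECTS.get(host)
    if target is None:
        return {
            "statusCode": 404,
            "statusDescription": "404 Not Found",
            "isBase64Encoded": False,
            "headers": {"Content-Type": "text/plain"},
            "body": "Unknown domain",
        }
    return {
        "statusCode": 301,
        "statusDescription": "301 Moved Permanently",
        "isBase64Encoded": False,
        "headers": {"Location": target},
        "body": "",
    }
```

Keeping the mapping in one JSON document means that adding a domain is a data change rather than a code change.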

A large mobile gaming company has successfully migrated all of its on-premises

infrastructure to the AWS Cloud. A solutions architect is reviewing the environment to

ensure that it was built according to the design and that it is running in alignment with the

Well-Architected Framework.

While reviewing previous monthly costs in Cost Explorer, the solutions architect notices

that the creation and subsequent termination of several large instance types account for a

high proportion of the costs. The solutions architect finds out that the company's

developers are launching new Amazon EC2 instances as part of their testing and that the

developers are not using the appropriate instance types.

The solutions architect must implement a control mechanism to limit the instance types that

only the developers can launch.

Which solution will meet these requirements?

A. Create a desired-instance-type managed rule in AWS Config. Configure the rule with the instance types that are allowed. Attach the rule to an event to run each time a new EC2 instance is launched.

B. In the EC2 console, create a launch template that specifies the instance types that are allowed. Assign the launch template to the developers' IAM accounts.

C. Create a new IAM policy. Specify the instance types that are allowed. Attach the policy to an IAM group that contains the IAM accounts for the developers.

D. Use EC2 Image Builder to create an image pipeline for the developers and assist them in the creation of a golden image.
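One common way to express an instance-type restriction in an IAM policy is a Deny statement conditioned on the `ec2:InstanceType` key. The sketch below generates such a policy document; the helper name and the instance types used in the example are assumptions, and the developers would still need a separate Allow for `ec2:RunInstances`.

```python
import json


def instance_type_policy(allowed_types):
    """Build an IAM policy that denies launching any instance type not listed."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOtherInstanceTypes",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                # The instance resource is where the ec2:InstanceType key applies.
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": {
                    "StringNotEquals": {"ec2:InstanceType": sorted(allowed_types)}
                },
            }
        ],
    }


def policy_json(allowed_types):
    """Serialize the policy so it can be pasted into the IAM console or an API call."""
    return json.dumps(instance_type_policy(allowed_types), indent=2)
```

For example, `policy_json(["t3.small", "t3.micro"])` yields a document that could be attached to the developers' IAM group so that the limit applies only to them.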

A company is using multiple AWS accounts. The DNS records are stored in a private

hosted zone for Amazon Route 53 in Account A. The company's applications and

databases are running in Account B.

A solutions architect will deploy a two-tier application in a new VPC. To simplify the

configuration, the db.example.com CNAME record set for the Amazon RDS endpoint was

created in a private hosted zone for Amazon Route 53.

During deployment, the application failed to start. Troubleshooting revealed that

db.example.com is not resolvable on the Amazon EC2 instance. The solutions architect

confirmed that the record set was created correctly in Route 53.

Which combination of steps should the solutions architect take to resolve this issue?

(Select TWO.)

A. Deploy the database on a separate EC2 instance in the new VPC. Create a record set for the instance's private IP in the private hosted zone.

B. Use SSH to connect to the application tier EC2 instance. Add an RDS endpoint IP address to the /etc/resolv.conf file.

C. Create an authorization to associate the private hosted zone in Account A with the new VPC in Account B.

D. Create a private hosted zone for the example.com domain in Account B. Configure Route 53 replication between AWS accounts.

E. Associate a new VPC in Account B with a hosted zone in Account A. Delete the association authorization in Account A.
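The authorize, associate, and clean-up sequence that the options refer to can be sketched with the Route 53 API as follows. This is an illustration under assumptions: the two client arguments stand for boto3 Route 53 clients created with credentials for Account A and Account B respectively, and the function name and identifiers are hypothetical.

```python
def associate_cross_account(zone_owner_r53, vpc_owner_r53, hosted_zone_id, vpc_id, vpc_region):
    """Associate a VPC in one account with a private hosted zone in another."""
    vpc = {"VPCRegion": vpc_region, "VPCId": vpc_id}

    # Step 1 (Account A, owner of the hosted zone): authorize the association.
    zone_owner_r53.create_vpc_association_authorization(
        HostedZoneId=hosted_zone_id, VPC=vpc
    )

    # Step 2 (Account B, owner of the VPC): associate the VPC with the zone.
    vpc_owner_r53.associate_vpc_with_hosted_zone(
        HostedZoneId=hosted_zone_id, VPC=vpc
    )

    # Step 3 (Account A): remove the authorization; the association itself remains.
    zone_owner_r53.delete_vpc_association_authorization(
        HostedZoneId=hosted_zone_id, VPC=vpc
    )
```

Taking the clients as parameters keeps the credential handling for each account outside the function, which also makes the call order easy to check with stand-in objects.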

A solutions architect is auditing the security setup of an AWS Lambda function for a

company. The Lambda function retrieves the latest changes from an Amazon Aurora

database. The Lambda function and the database run in the same VPC. Lambda

environment variables are providing the database credentials to the Lambda function.

The Lambda function aggregates data and makes the data available in an Amazon S3

bucket that is configured for server-side encryption with AWS KMS managed encryption

keys (SSE-KMS). The data must not travel across the internet. If any database credentials

become compromised, the company needs a solution that minimizes the impact of the

compromise.

What should the solutions architect recommend to meet these requirements?

A. Enable IAM database authentication on the Aurora DB cluster. Change the IAM role for the Lambda function to allow the function to access the database by using IAM database authentication. Deploy a gateway VPC endpoint for Amazon S3 in the VPC.

B. Enable IAM database authentication on the Aurora DB cluster. Change the IAM role for the Lambda function to allow the function to access the database by using IAM database authentication. Enforce HTTPS on the connection to Amazon S3 during data transfers.

C. Save the database credentials in AWS Systems Manager Parameter Store. Set up password rotation on the credentials in Parameter Store. Change the IAM role for the Lambda function to allow the function to access Parameter Store. Modify the Lambda function to retrieve the credentials from Parameter Store. Deploy a gateway VPC endpoint for Amazon S3 in the VPC.

D. Save the database credentials in AWS Secrets Manager. Set up password rotation on the credentials in Secrets Manager. Change the IAM role for the Lambda function to allow the function to access Secrets Manager. Modify the Lambda function to retrieve the credentials from Secrets Manager. Enforce HTTPS on the connection to Amazon S3 during data transfers.
