Ursa Major Solar has a custom object, ServiceJob__c, with an optional Lookup field to Account called Partner_Service_Provider__c. The TotalJobs__c field on Account tracks the total number of ServiceJob__c records to which a partner service provider Account is related. What is the most efficient way to ensure that the TotalJobs__c field is kept up to date?

A. Change TotalJobs__c to a roll-up summary field.

B. Create a record-triggered flow on ServiceJob__c.

C. Create an Apex trigger on ServiceJob__c.

D. Create a schedule-triggered flow on ServiceJob__c.

Explanation:

Ursa Major Solar needs to keep the TotalJobs__c field on Account up to date with the number of ServiceJob__c records related via an optional Lookup field (Partner_Service_Provider__c).

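Because this is an optional lookup, not a master-detail relationship, you cannot use a roll-up summary field. The lookup also can't simply be converted to master-detail, because a master-detail field is required on every child record. Instead, you need to calculate and update the total using automation.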

✔️ Why B. Record-Triggered Flow is the best option:

✅ Declarative, no code required

✅ Supports logic based on lookup relationships

✅ Can handle create, update, and delete events

✅ Easier to maintain than Apex for simple logic

✅ Most efficient non-code approach that satisfies the requirement

❌ Why other options are not ideal:

A. Change TotalJobs__c to a roll-up summary field

❌ Not possible: roll-up summary fields only work with master-detail relationships, and Partner_Service_Provider__c is a lookup.

C. Create an Apex trigger on ServiceJob__c

❌ Functional, but unnecessary unless the logic is complex.

More maintenance-heavy and requires unit tests.
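For comparison, here is a minimal sketch of the Apex that Option C would need, assuming the API names from the question. The class and method names are hypothetical. It recounts the jobs for every affected Account with a single aggregate query:

```apex
// Hypothetical handler for Option C. API names come from the question;
// the class and method names are illustrative only.
public with sharing class ServiceJobRollup {
    // Recalculates TotalJobs__c for the given partner Accounts.
    public static void recount(Set<Id> accountIds) {
        accountIds.remove(null); // the lookup is optional, so skip blank values
        if (accountIds.isEmpty()) {
            return;
        }
        // Start every Account at zero so Accounts that lost their last job are reset.
        Map<Id, Account> updates = new Map<Id, Account>();
        for (Id accountId : accountIds) {
            updates.put(accountId, new Account(Id = accountId, TotalJobs__c = 0));
        }
        // One aggregate query counts the remaining jobs per Account.
        List<AggregateResult> counts = [
            SELECT Partner_Service_Provider__c accId, COUNT(Id) total
            FROM ServiceJob__c
            WHERE Partner_Service_Provider__c IN :accountIds
            GROUP BY Partner_Service_Provider__c
        ];
        for (AggregateResult ar : counts) {
            Id accId = (Id) ar.get('accId');
            updates.get(accId).TotalJobs__c = (Integer) ar.get('total');
        }
        update updates.values();
    }
}
```

A trigger on ServiceJob__c (after insert, after update, after delete, after undelete) would collect Partner_Service_Provider__c from both Trigger.new and Trigger.old, so a job moved between Accounts updates both, and then pass the Ids to recount(). The trigger, this class, and their unit tests are all code that the record-triggered flow avoids.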

D. Create a schedule-triggered flow on ServiceJob__c

❌ Doesn't update in real time: TotalJobs__c is stale between scheduled runs.

Recounting jobs on every run is also more resource-intensive than updating only the affected Accounts.

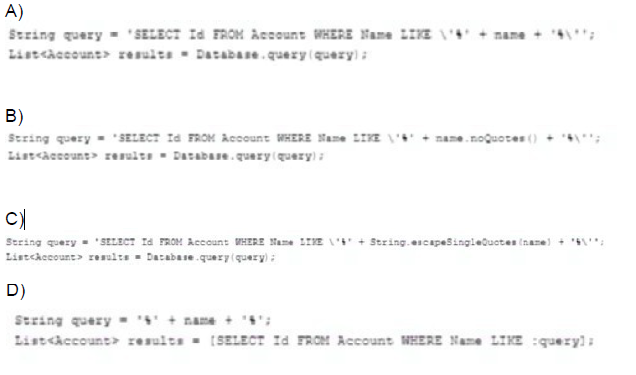

Assuming that name is a String obtained by an

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

Option C: String.escapeSingleQuotes()

String query = 'SELECT Id FROM Account WHERE Name LIKE \'%' + String.escapeSingleQuotes(name) + '%\'';

List<Account> accounts = Database.query(query);

Why Safe?

String.escapeSingleQuotes() sanitizes the input by escaping single quotes (' → \').

Prevents attackers from breaking out of the string literal with malicious input (e.g., name = "test%' OR Name LIKE '"); the escaped quotes are treated as part of the search text.
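A quick anonymous Apex check of what String.escapeSingleQuotes() actually does to a quote in the input (the sample name is made up for illustration):

```apex
// Anonymous Apex: shows what String.escapeSingleQuotes does to a quote in user input.
String name = 'O\'Brien';                         // user input containing a single quote
String escaped = String.escapeSingleQuotes(name); // the quote is now preceded by a backslash
System.assertEquals('O\\\'Brien', escaped);        // the actual value is O\'Brien

String query = 'SELECT Id FROM Account WHERE Name LIKE \'%' + escaped + '%\'';
System.debug(query); // SELECT Id FROM Account WHERE Name LIKE '%O\'Brien%'
```

SOQL reads \' inside the literal as a plain quote character, so the search still matches O'Brien while the input can no longer close the literal early.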

Option D: Bind Variable

String query = '%' + name + '%';

List<Account> accounts = [SELECT Id FROM Account WHERE Name LIKE :query];

Why Safe?

The bind variable (:query) is passed to the database as a value, not parsed as part of the SOQL text.

User input is never concatenated into the query string itself, so it cannot change the query's structure.

Why Not the Others?

Option A: Unsafe Concatenation

String query = 'SELECT Id FROM Account WHERE Name LIKE \'%' + name + '%\'';

Risk: Direct concatenation allows SOQL injection. For example, name = "test%' OR Name LIKE '" turns the filter into Name LIKE '%test%' OR Name LIKE '%', which returns every Account.

Option B: noQuotes() (Invalid Method)

String query = 'SELECT Id FROM Account WHERE Name LIKE \'%' + name.noQuotes() + '%\'';

Risk: noQuotes() is not a method of the Apex String class, so this code does not compile and provides no protection.

Universal Containers wants to back up all of the data and attachments in its Salesforce org once a month. Which approach should a developer use to meet this requirement?

A. Use the Data Loader command line.

B. Create a Schedulable Apex class.

C. Schedule a report.

D. Define a Data Export scheduled job.

Explanation: Scheduling a Data Export job is the best way to back up all the data and attachments in a Salesforce org once a month. The Data Export service in Setup can be scheduled to run at a regular interval, and its "Include images, documents, and attachments" option adds file data to the export, so the org always has a recent backup to recover data from if needed.

A developer must perform a complex SOQL query that joins two objects in a Lightning component. How can the Lightning component execute the query?

A. Create a flow to execute the query and invoke from the Lightning component.

B. Write the query in a custom Lightning web component wrapper and invoke from the Lightning component.

C. Invoke an Apex class with the method annotated as @AuraEnabled to perform the query.

D. Use the Salesforce Streaming API to perform the SOQL query.

Explanation:

In Lightning components (whether Aura or LWC), if you need to perform a complex SOQL query (such as one involving a relationship/join between two objects), the best practice is to:

✅ Write the query in an Apex class, and expose the method using the @AuraEnabled annotation.

This allows the Lightning component to invoke the method server-side, and retrieve the data for use in the component.
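A minimal sketch of such a method, assuming the two joined objects are Contact and its parent Account. The class and method names are hypothetical:

```apex
// Hypothetical Apex controller; the class, method, and choice of Account/Contact are illustrative.
public with sharing class AccountContactsController {
    // cacheable=true lets a Lightning web component call this method with @wire.
    @AuraEnabled(cacheable=true)
    public static List<Contact> getContactsWithAccount(Id accountId) {
        // The relationship fields (Account.Name, Account.Industry) join Contact to its parent Account.
        return [
            SELECT Id, FirstName, LastName, Account.Name, Account.Industry
            FROM Contact
            WHERE AccountId = :accountId
            ORDER BY LastName
            LIMIT 200
        ];
    }
}
```

A Lightning web component could then import it with `import getContactsWithAccount from '@salesforce/apex/AccountContactsController.getContactsWithAccount';` and call it either imperatively or through @wire.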

❌ Why the other options are incorrect:

A. Create a flow to execute the query and invoke from the Lightning component

❌ Flows are not well-suited for complex SOQL, and calling them from components requires additional setup (not ideal for querying).

B. Write the query in a custom Lightning web component wrapper and invoke from the Lightning component

❌ LWCs and Aura components cannot execute SOQL directly — they must go through Apex.

D. Use the Salesforce Streaming API to perform the SOQL query

❌ The Streaming API is used for event-based notifications, not querying data.

A developer is creating a Lightning web component to show a list of sales records. The Sales Representative user should be able to see the commission field on each record. The Sales Assistant user should be able to see all fields on the record except the commission field. How should this be enforced so that the component works for both users without showing any errors?

A. Use WITH SECURITY_ENFORCED in the SOQL that fetches the data for the component.

B. Use Security.stripInaccessible to remove fields inaccessible to the current user.

C. Use Lightning Data Service to get the collection of sales records.

D. Use Lightning Locker Service to enforce sharing rules and field-level security.

Explanation:

To ensure that the Lightning Web Component (LWC) displays only the fields the current user is allowed to see, based on field-level security (FLS), you should use:

✅ Security.stripInaccessible() in Apex

This method:

Removes fields from queried records that the user doesn’t have read access to

Ensures the component does not throw errors or expose restricted data

Allows a single Apex method to return different sets of data depending on user permissions

🔍 Example:

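// Sales__c and Commission__c are assumed API names.
List<Sales__c> salesRecords = [SELECT Id, Name, Commission__c FROM Sales__c];
SObjectAccessDecision decision = Security.stripInaccessible(AccessType.READABLE, salesRecords);
salesRecords = (List<Sales__c>) decision.getRecords();
return salesRecords;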
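Because the Sales Assistant still receives records with the commission field stripped, the component may also need to know whether to show that column at all. The following is a minimal sketch of a full @AuraEnabled method that reports this using SObjectAccessDecision.getRemovedFields(). Sales__c, Commission__c, and the class names are assumptions:

```apex
// Hypothetical controller: Sales__c and Commission__c are assumed API names.
public with sharing class SalesRecordsController {
    // Wrapper returned to the component; @AuraEnabled exposes each property to JavaScript.
    public class SalesResult {
        @AuraEnabled public List<Sales__c> records;
        @AuraEnabled public Boolean canSeeCommission;
    }

    @AuraEnabled(cacheable=true)
    public static SalesResult getSalesRecords() {
        List<Sales__c> queried = [SELECT Id, Name, Commission__c FROM Sales__c LIMIT 200];
        // Remove every field the running user cannot read instead of throwing an exception.
        SObjectAccessDecision decision =
            Security.stripInaccessible(AccessType.READABLE, queried);

        // getRemovedFields() maps each sObject type name to the fields that were stripped.
        Set<String> removed = decision.getRemovedFields().get('Sales__c');

        SalesResult result = new SalesResult();
        result.records = (List<Sales__c>) decision.getRecords();
        result.canSeeCommission = removed == null || !removed.contains('Commission__c');
        return result;
    }
}
```

Both users call the same method: the Sales Representative gets canSeeCommission = true, while the Sales Assistant gets records without Commission__c and canSeeCommission = false, so the component can hide the column instead of rendering an error.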

❌ Why other options are incorrect:

A. Use WITH SECURITY_ENFORCED

❌ This will throw a runtime exception if a user lacks access to a field in the query.

Not ideal when you want to show partial data based on user permissions.

C. Use Lightning Data Service

❌ LDS respects FLS but is typically used for single-record forms, not for querying lists of records.

D. Use Lightning Locker Service

❌ Locker Service is a security layer for JavaScript isolation, not for enforcing data visibility or FLS.

Universal Containers has a Visualforce page that displays a table of every Container__c being rented by a given Account. Recently, this page has been failing because it exceeds the view state limit, since some customers rent over 10,000 containers. What should a developer change about the Visualforce page to help with the page load errors?

A. Use JavaScript remoting with SOQL OFFSET.

B. Implement pagination with a StandardSetController.

C. Implement pagination with an OffsetController.

D. Use lazy loading and a transient List variable.

Explanation:

The issue here is that the Visualforce page is exceeding the View State limit because it's trying to display a very large dataset (10,000+ Container__c records). To fix this efficiently, the developer should:

✅ Use a StandardSetController to paginate the data.

This approach:

Loads and displays a subset of records at a time (e.g., 50 per page)

Keeps the view state small and manageable

Is the standard, built-in way to paginate record lists in Visualforce, with no custom paging logic required

🔍 Example:

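public with sharing class ContainerPaginationController {
    public ApexPages.StandardSetController setCon {
        get {
            if (setCon == null) {
                // Account Id passed to the page as the "id" URL parameter
                Id accountId = ApexPages.currentPage().getParameters().get('id');
                setCon = new ApexPages.StandardSetController(
                    [SELECT Id, Name FROM Container__c WHERE Account__c = :accountId]
                );
                setCon.setPageSize(50); // 50 records per page
            }
            return setCon;
        }
        set;
    }

    // Returns only the records on the current page (getContainers is an assumed method name).
    public List<Container__c> getContainers() {
        return (List<Container__c>) setCon.getRecords();
    }
}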
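The page still needs controls to move between pages. Here is a minimal sketch of members that could be added inside ContainerPaginationController, each wrapping a standard ApexPages.StandardSetController method. The property and method names are hypothetical:

```apex
// Hypothetical members to add inside ContainerPaginationController.
// Each one wraps a standard ApexPages.StandardSetController method.
public Boolean hasNext {
    get { return setCon.getHasNext(); }
}

public Boolean hasPrevious {
    get { return setCon.getHasPrevious(); }
}

public Integer pageNumber {
    get { return setCon.getPageNumber(); }
}

// Action methods for buttons such as <apex:commandButton action="{!next}">.
public void next() {
    setCon.next();
}

public void previous() {
    setCon.previous();
}
```

On the page, a button such as `<apex:commandButton action="{!next}" value="Next" rendered="{!hasNext}"/>` would call these methods to move forward and back through the pages.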

❌ Why other options are less appropriate:

A. JavaScript Remoting with SOQL OFFSET

❌ SOQL OFFSET is capped at 2,000 rows, so records beyond that point can't be reached for an Account with 10,000+ containers

Also requires custom JavaScript and Apex code

C. OffsetController

❌ OffsetController is not a standard Salesforce class; it would have to be a custom implementation, which is more complex and error-prone

D. Lazy loading and transient List

❌ transient helps reduce view state, but by itself won’t fix the issue

Lazy loading requires custom logic and still doesn't provide paging on its own

Which three statements are true regarding custom exceptions in Apex? (Choose three.)

A. A custom exception class must extend the system Exception class.

B. A custom exception class can implement one or many interfaces.

C. A custom exception class cannot contain member variables or methods.

D. A custom exception class name must end with “Exception”.

E. A custom exception class can extend other classes besides the Exception class.

Explanation:

✔️ A. A custom exception class must extend the system Exception class

✅ True

All custom exceptions in Apex must extend the built-in Exception class, either directly or indirectly.

This is required for the class to behave like an exception and be used in try/catch blocks.

✔️ B. A custom exception class can implement one or many interfaces

✅ True

Like any Apex class, a custom exception can implement interfaces to provide additional behavior (though this is rare in practice).

✔️ D. A custom exception class name must end with “Exception”

✅ True

This is enforced by the compiler, not just a naming convention: a class that extends Exception must have a name ending in Exception, or it fails to compile.

❌ Incorrect Statements:

C. A custom exception class cannot contain member variables or methods

❌ False

You can include member variables and methods in a custom exception class — for example, to store custom error details or logging information.

E. A custom exception class can extend other classes besides the Exception class

❌ False

In Apex, custom exception classes must extend Exception and cannot extend arbitrary classes.
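Here is a minimal sketch of a custom exception that satisfies A, B, and D and contradicts C. InventoryService, Loggable, and InsufficientStockException are hypothetical names:

```apex
// Hypothetical example: an outer class holding a custom interface and exception.
public class InventoryService {
    // Any interface works; this one exists only for the example.
    public interface Loggable {
        String toLogLine();
    }

    // Extends Exception (A), implements an interface (B), name ends in "Exception" (D).
    public class InsufficientStockException extends Exception implements Loggable {
        public Integer requested; // custom member variable, so C is false

        public String toLogLine() { // custom method
            return 'Requested ' + requested + ': ' + getMessage();
        }
    }

    public static void reserve(Integer requested, Integer available) {
        if (requested > available) {
            InsufficientStockException e = new InsufficientStockException('Not enough stock');
            e.requested = requested;
            throw e;
        }
    }
}
```

Calling InventoryService.reserve(5, 2) inside a try block and catching InventoryService.InsufficientStockException lets the caller read e.requested or log e.toLogLine(), which returns "Requested 5: Not enough stock".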

When importing and exporting data into Salesforce, which two statements are true? Choose 2 answers

A. Bulk API can be used to import large data volumes in development environments without bypassing the storage limits.

B. Bulk API can be used to bypass the storage limits when importing large data volumes in development environments.

C. Developer and Developer Pro sandboxes have different storage limits.

D. Data import wizard is a client application provided by Salesforce.

Explanation:

A. Bulk API & Storage Limits

True. Bulk API is optimized for large data volumes (a Bulk API 1.0 batch can hold up to 10,000 records) but does not bypass storage limits.

Storage limits (e.g., data, file storage) are enforced in all environments, including sandboxes.

C. Sandbox Storage Limits

True. Salesforce sandboxes have tiered storage:

Developer Sandbox: 200 MB data + 200 MB file storage.

Developer Pro Sandbox: 1 GB data + 1 GB file storage.

Why the Others Are False:

B. Bulk API Bypassing Limits

False. No tool (Bulk API, Data Loader, etc.) can bypass Salesforce storage limits.

D. Data Import Wizard as a Client App

False. The Data Import Wizard is a built-in Salesforce UI tool (accessible via Setup), not a separate client application.

A developer wants to import 500 Opportunity records into a sandbox. Why should the developer choose to use Data Loader instead of the Data Import Wizard?

A. Data Loader runs from the developer's browser.

B. Data Import Wizard does not support Opportunities.

C. Data Loader automatically relates Opportunities to Accounts.

D. Data Import Wizard cannot import all 500 records.

Explanation:

The Data Import Wizard is a user-friendly, web-based tool in Salesforce, but it has limited object support. Specifically:

🔹 The Data Import Wizard does not support importing Opportunity records.

Instead, it supports objects such as:

Leads

Contacts

Accounts

Campaign Members

Custom objects

To import Opportunities, a developer must use a tool like the Data Loader.

❌ Why the other options are incorrect:

A. Data Loader runs from the developer's browser

❌ False: Data Loader is a client application that runs on your desktop, not in a browser.

C. Data Loader automatically relates Opportunities to Accounts

❌ False: You must explicitly map the AccountId field or provide matching criteria — it doesn’t happen automatically.

D. Data Import Wizard cannot import all 500 records

❌ False: The Data Import Wizard can import up to 50,000 records at a time, so 500 is well within its capacity.

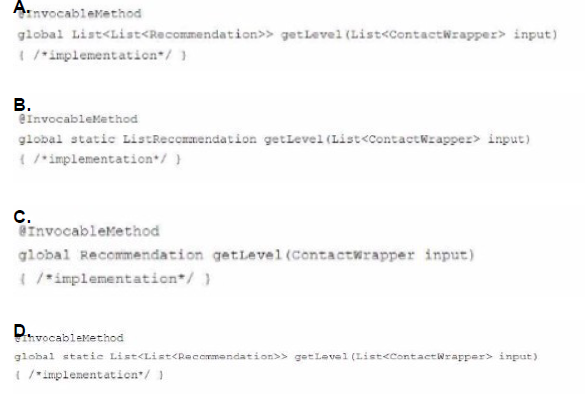

A Next Best Action strategy uses an Enhance element that invokes an Apex method to determine a discount level for a Contact, based on a number of factors. What is the correct definition of the Apex method?

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

In Next Best Action (NBA) with Einstein Recommendations, when the Enhance element calls an Apex method, the method must be exposed with the @InvocableMethod annotation and meet specific signature requirements.

🔒 Requirements for @InvocableMethod used with Enhance:

Must be static (and public or global)

Must accept a List of inputs (one element per request)

Must return a List<List<Recommendation>>

NBA expects a list of recommendations for each input, hence the list of lists

🔍 Why Option D is correct:

✅ Uses @InvocableMethod

✅ Declared as global static

✅ Input is a List

✅ Output is List<List<Recommendation>>

❌ Why the other options are incorrect:

A. Missing static keyword → ❌

B. Return type is not List<List<Recommendation>> → ❌

C. Input is not a list, return type is a single object → ❌
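Here is a sketch of a method with the shape described above. It assumes a wrapper class whose recommendations variable receives the strategy's recommendations. ContactDiscountEnhancer, ContactWrapper, and the fixed discount text are hypothetical, and the real discount logic is left as a placeholder:

```apex
// Hypothetical Enhance method; the class, wrapper, and discount rule are illustrative.
global with sharing class ContactDiscountEnhancer {

    // One wrapper per strategy request; Strategy Builder fills the variables.
    global class ContactWrapper {
        @InvocableVariable(label='Contact ID')
        global Id contactId;

        @InvocableVariable(label='Recommendations')
        global List<Recommendation> recommendations;
    }

    @InvocableMethod(label='Determine Discount Level')
    global static List<List<Recommendation>> getLevel(List<ContactWrapper> inputs) {
        List<List<Recommendation>> results = new List<List<Recommendation>>();
        for (ContactWrapper input : inputs) {
            List<Recommendation> enhanced = new List<Recommendation>();
            if (input.recommendations != null) {
                for (Recommendation rec : input.recommendations) {
                    // Placeholder rule: a real method would weigh Contact data here.
                    rec.Description = 'Discount level: 10%';
                    enhanced.add(rec);
                }
            }
            // One inner list per input keeps results aligned with requests.
            results.add(enhanced);
        }
        return results;
    }
}
```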

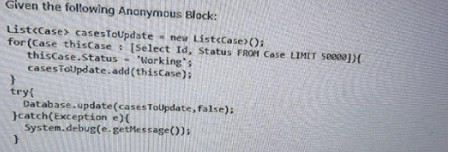

Given the following Anonymous Block:


What should a developer consider for an environment that has over 10,000 Case records?

A. The transaction will fail due to exceeding the governor limit.

B. The try/catch block will handle any DML exceptions thrown.

C. The transaction will succeed and changes will be committed.

D. The try/catch block will handle exceptions thrown by governor limits.

Explanation:

The anonymous block queries up to 50,000 Case records and sets the Status of each one to 'Working':

for (Case thisCase : [SELECT Id, Status FROM Case LIMIT 50000]) {
    thisCase.Status = 'Working';
    casesToUpdate.add(thisCase);
}

Then it performs a bulk update:

Database.update(casesToUpdate, false);

However, Salesforce enforces a strict governor limit:

❗ Maximum DML rows per transaction: 10,000

Because the org has more than 10,000 Case records, casesToUpdate holds more than 10,000 rows. The update therefore exceeds the limit, and the transaction fails with System.LimitException: Too many DML rows: 10001.

❌ Why the other options are incorrect:

B. The try/catch block will handle any DML exceptions thrown

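❌ Misleading: with allOrNone set to false, Database.update reports individual record failures in its results instead of throwing, and the LimitException raised for exceeding the DML row limit cannot be caught at all.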

C. The transaction will succeed and changes will be committed

❌ False — It will exceed the 10,000 DML rows limit and fail.

D. The try/catch block will handle exceptions thrown by governor limits

❌ False — Governor limit exceptions are not catchable. Once a limit is hit, the transaction is immediately halted.
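One way to process every Case without hitting the per-transaction DML row limit is Batch Apex, which splits the work into chunks that each run in their own transaction. Here is a minimal sketch; the class name is hypothetical:

```apex
// Hypothetical Batch Apex alternative; the class name is illustrative.
public with sharing class CaseStatusBatch implements Database.Batchable<SObject> {
    public Database.QueryLocator start(Database.BatchableContext bc) {
        // A QueryLocator can return up to 50 million records.
        return Database.getQueryLocator([SELECT Id, Status FROM Case]);
    }

    // Each execute() call runs in its own transaction with fresh governor limits.
    public void execute(Database.BatchableContext bc, List<Case> scope) {
        for (Case thisCase : scope) {
            thisCase.Status = 'Working';
        }
        Database.update(scope, false); // allOrNone = false, as in the original block
    }

    public void finish(Database.BatchableContext bc) {
    }
}
```

Run it with Database.executeBatch(new CaseStatusBatch(), 200); with a scope size of 200 (the default), each chunk stays far below 10,000 DML rows.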

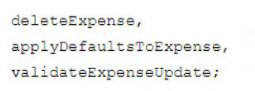

A developer identifies the following triggers on the Expense__c object:

The triggers process before delete, before insert, and before update events respectively.

Which two techniques should the developer implement to ensure trigger best practices are followed? Choose 2 answers

A. Create helper classes to execute the appropriate logic when a record is saved.

B. Unify the before insert and before update triggers and use Flow for the delete action.

C. Unify all three triggers in a single trigger on the Expense__c object that includes all events.

D. Maintain all three triggers on the Expense__c object, but move the Apex logic out of the trigger definition.

Explanation:

Salesforce trigger best practices encourage developers to reduce complexity, avoid duplicate logic, and maintain scalability. Let's apply that to the scenario shown in the image (multiple triggers on Expense__c).

✔️ A. Create helper classes to execute the appropriate logic when a record is saved

✅ True

You should always move logic out of the trigger body and into handler/helper classes.

This improves testability, reusability, and helps enforce separation of concerns.

✔️ C. Unify all three triggers in a single trigger on the Expense__c object that includes all events

✅ True

Salesforce allows multiple triggers on the same object, but the order in which they run is not guaranteed, so the best practice is one trigger per object.

All logic should be combined in a single trigger and routed using event-specific logic like:

if (Trigger.isInsert && Trigger.isBefore) { ... }

if (Trigger.isDelete && Trigger.isBefore) { ... }
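Here is a minimal sketch of the consolidated design that combines A and C: one trigger for all three events that delegates to a handler class. ExpenseTrigger and ExpenseTriggerHandler are hypothetical names, and the two parts would live in separate files:

```apex
// File: ExpenseTrigger.trigger (hypothetical name): one trigger for every event.
trigger ExpenseTrigger on Expense__c (before insert, before update, before delete) {
    ExpenseTriggerHandler.run();
}

// File: ExpenseTriggerHandler.cls (hypothetical name): logic lives outside the trigger.
public with sharing class ExpenseTriggerHandler {
    public static void run() {
        // Trigger context variables are available in classes called from a trigger.
        if (Trigger.isBefore && Trigger.isInsert) {
            beforeInsert((List<Expense__c>) Trigger.new);
        } else if (Trigger.isBefore && Trigger.isUpdate) {
            beforeUpdate((List<Expense__c>) Trigger.new, (Map<Id, Expense__c>) Trigger.oldMap);
        } else if (Trigger.isBefore && Trigger.isDelete) {
            beforeDelete((List<Expense__c>) Trigger.old);
        }
    }

    private static void beforeInsert(List<Expense__c> newExpenses) {
        // Logic moved from the former before insert trigger.
    }

    private static void beforeUpdate(List<Expense__c> newExpenses, Map<Id, Expense__c> oldMap) {
        // Logic moved from the former before update trigger.
    }

    private static void beforeDelete(List<Expense__c> deletedExpenses) {
        // Logic moved from the former before delete trigger.
    }
}
```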

❌ Incorrect Answers:

B. Unify the before insert and before update triggers and use Flow for the delete action

❌ Mixing code and Flow across trigger events leads to inconsistent behavior, harder maintenance, and violates consistency best practices.

D. Maintain all three triggers on the Expense__c object, but move the Apex logic out of the trigger definition

❌ Partially correct, but keeping multiple triggers per object is discouraged.

You should consolidate to a single trigger per object.
