Topic 1: Case Study Alpine Ski House

You need to access the RoomsAPI API from the canvas app.

What should you do?

A. Use the default API configuration in Business Central

B. Enable the APIs for the Business Central online environment.

C. Open the Web Services page and publish the RoomsAPI page as a web service.

D. Include in the extension a codeunit of type Install that publishes RoomsAPI.

Explanation:

To access the RoomsAPI from a Power Apps canvas app, you must expose it as a web service in Business Central. This is done by publishing the API page on the Web Services page. Once published, it becomes accessible via OData or REST endpoints, allowing external apps like Power Apps to consume it.

Publishing the API page registers it with a unique service name and URL, which can then be used in Power Platform connectors or HTTP requests. This is the standard and supported method for making custom APIs available externally.

✅ Why Option C is correct:

The Web Services page is the central interface in Business Central for exposing pages, reports, and queries as web services.

Publishing RoomsAPI here makes it discoverable and accessible to external systems.

Once published, you can retrieve the endpoint URL and use it in Power Apps via custom connectors or direct API calls.

❌ Why the other options are incorrect:

A. Use the default API configuration in Business Central

This only applies to standard APIs provided by Microsoft (e.g., customer, vendor, item APIs). Custom APIs like RoomsAPI are not automatically exposed and require manual publishing.

B. Enable the APIs for the Business Central online environment

This is a prerequisite for any API access, but it does not publish individual custom APIs. It simply allows API access at the environment level.

D. Include in the extension a codeunit of type Install that publishes RoomsAPI

While this is a valid automation technique, it is not required for accessing the API from Power Apps. Manual publishing via the Web Services page is sufficient and more straightforward for testing or one-off integrations.

📚 Valid References:

Microsoft Learn – Web Services Overview

Microsoft Learn – Integrate Business Central with Power Platform

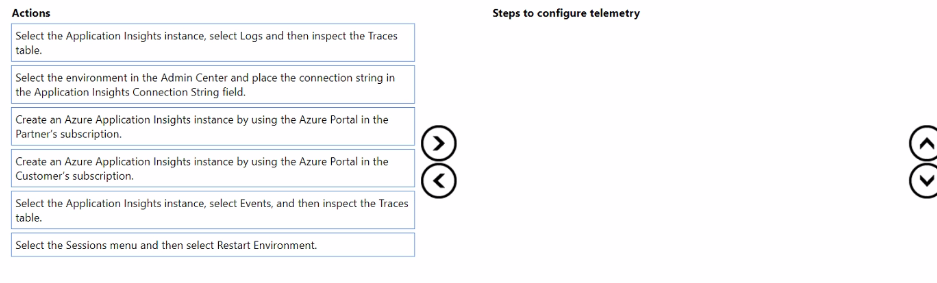

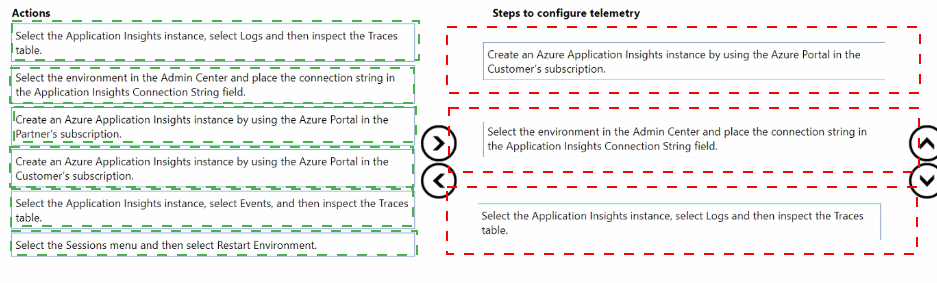

You need to configure telemetry for the SaaS tenant and test whether the ingested signals are displayed.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Explanation:

Configuring telemetry for a SaaS (Software-as-a-Service) Business Central tenant involves a specific sequence of steps across different administrative portals.

Step 1: Create an Azure Application Insights instance by using the Azure Portal in the Partner’s subscription.

Reasoning: The foundational step is to create the destination where telemetry data will be sent. For a SaaS tenant, the partner (the developer or ISV creating the extension) is responsible for providing the Application Insights resource. This is created in the partner's Azure subscription, not the customer's, because the partner typically analyzes the telemetry for their extension's performance and usage.

Step 2: Select the environment in the Admin Center and place the connection string in the Application Insights Connection String field.

Reasoning: After the Application Insights resource exists, you must configure the Business Central environment to send data to it. This is done in the Business Central Admin Center. You select the specific SaaS environment and paste the "Connection String" from the Application Insights resource into the designated field. This action links the environment to the telemetry data sink.

Step 3: Select the Application Insights instance, select Logs, and then inspect the Traces table.

Reasoning: This is the correct method to verify that telemetry is being ingested. After performing actions in Business Central (like using the extension), you go to the Application Insights resource in the Azure portal, open the Logs query interface (not the "Events" view), and run a query against the traces table. This table contains the detailed log traces sent from Business Central, confirming the configuration is working. The "Sessions menu" and "Restart Environment" are not part of this process.

Reference:

Microsoft Learn Documentation: Enabling Application Insights for an Environment

The official documentation outlines this exact process: creating an Application Insights resource and then configuring the environment in the admin center with the connection string. It also directs users to use the Logs experience in Application Insights to query data, specifically mentioning the traces table.

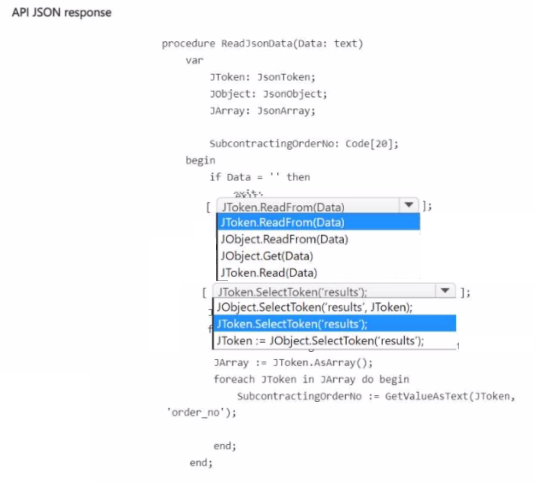

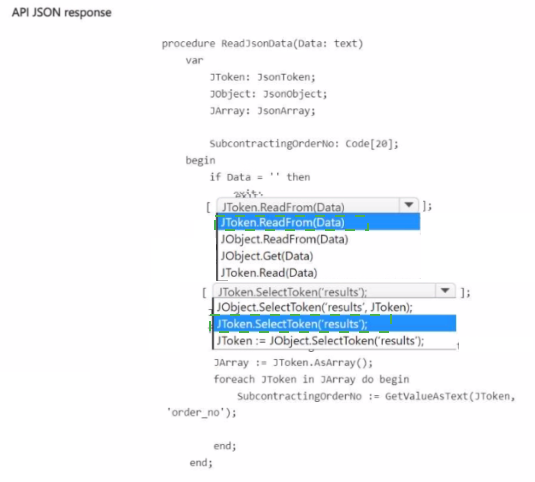

You need to parse the API JSON response and retrieve each order no. in the response body.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

Parsing JSON in AL follows a specific sequence: you read the raw text into a JSON structure, then navigate through the object hierarchy to extract the values you need.

First blank: JToken.ReadFrom(Data)

The JsonToken.ReadFrom method is the initial step that parses the raw JSON text (Data) into a hierarchical JSON structure represented by a JsonToken. This token becomes the root from which you can navigate the entire JSON document.

Why not the others?

JObject.ReadFrom(Data) would fail if the root of the JSON is not an object (e.g., if it's an array). ReadFrom for a specific type is less flexible than using a generic JsonToken.

JObject.Get(Data) and JToken.Read(Data) are not valid methods.

Second blank: JToken := JObject.SelectToken('results');

After reading the data into a JsonToken, you typically convert it to a JsonObject to access its properties. The SelectToken method is used to navigate the JSON path and retrieve a specific token. The path 'results' indicates we are looking for a property named "results" in the JSON object. This line correctly assigns the value of the "results" property (which is likely the array containing the orders) to the JToken variable for further processing.

Why not the others?

JToken.SelectToken('results'); is incorrect because SelectToken is a method of JsonObject and JsonArray, not JsonToken. You must first convert the root token to a JsonObject.

JObject.SelectToken('results'); JToken); is syntactically incorrect.

Note on the subsequent code: The line JArray := JToken.AsArray(); is correct after the second blank has been properly filled, as it converts the token retrieved by SelectToken('results') into a JsonArray so it can be iterated with the foreach loop.
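The parse, navigate, iterate sequence described above can be sketched in a language-neutral way. The following is a minimal Python illustration, not the AL answer itself: it assumes the response body is an object with a "results" array, and the property name "orderNo" is hypothetical because the actual field name appears only in the exhibit.

```python
import json


def extract_order_numbers(data: str) -> list[str]:
    """Return the order number of every entry in the "results" array."""
    # Step 1: parse the raw text into a JSON structure (the ReadFrom step).
    root = json.loads(data)
    if not isinstance(root, dict):
        raise ValueError("expected a JSON object at the root")

    # Step 2: navigate to the "results" property (the SelectToken step).
    results = root.get("results")
    if not isinstance(results, list):
        raise ValueError('expected "results" to be a JSON array')

    # Step 3: iterate the array (the AsArray + foreach step).
    # "orderNo" is a hypothetical property name used only for illustration.
    return [str(order["orderNo"]) for order in results]
```

Validating the type at each step mirrors the AL pattern of converting a generic token to an object or an array only after it has been located.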

Reference:

Microsoft Learn Documentation: JSON Data Type

The official documentation details the methods for working with JSON. It explains that JsonToken.ReadFrom is used to parse a text string into a token, and JsonObject.SelectToken is used to get a token based on a JSON Path. The AsArray method is then used to treat a token as an array.

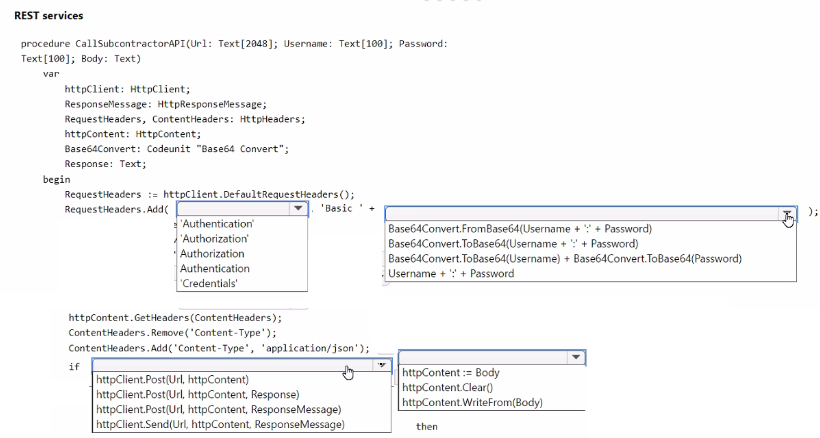

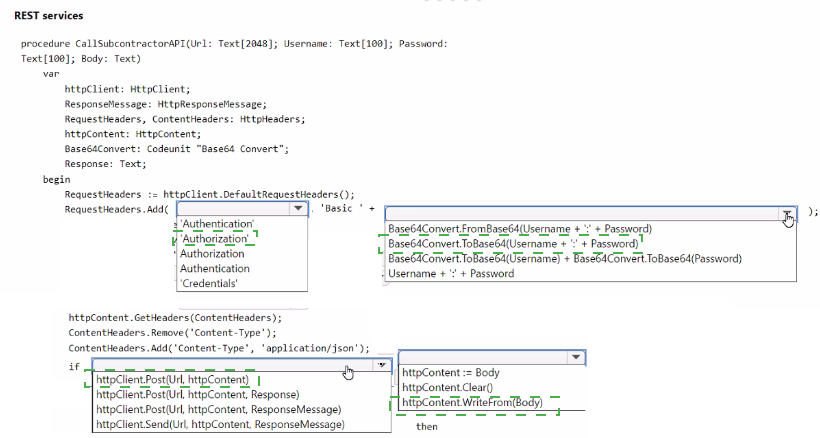

You need to write the code to call the subcontractor's REST API.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

This code sets up an HTTP call with Basic Authentication to a REST API.

First blank: 'Authorization'

The HTTP header for Basic Authentication is named Authorization. The value of this header will be the word Basic followed by the base64-encoded credentials.

Second blank: Base64Convert.ToBase64(Username + ':' + Password)

Basic Authentication requires the credentials to be in the format username:password and then base64 encoded. The Base64 Convert codeunit's ToBase64 method performs this encoding. Concatenating the username and password with a colon (Username + ':' + Password) before encoding is the correct format.

Third blank: httpContent.WriteFrom(Body)

This method writes the JSON string (the Body parameter) into the HttpContent object, which will be sent as the request body. httpContent := Body is invalid because you cannot assign text directly to an HttpContent object.

Fourth blank: httpClient.Post(Url, HttpContent, ResponseMessage)

The HttpClient.Post method is used to send a POST request. It takes the URL, the content (body), and an HttpResponseMessage variable to store the server's response. The other options are either invalid method names or incorrect parameters.
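The header construction described above is defined by the HTTP Basic authentication scheme and is independent of AL. As a minimal sketch in Python (the function name is hypothetical), the value is the word Basic, a space, and the Base64 encoding of username:password:

```python
import base64


def basic_auth_header(username: str, password: str) -> dict[str, str]:
    """Build the Authorization header for HTTP Basic authentication."""
    # Credentials are joined with a colon before they are encoded.
    credentials = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    # The scheme name "Basic" precedes the encoded credentials.
    return {"Authorization": f"Basic {token}"}
```

Base64 is an encoding, not encryption, so a header built this way should only be sent over HTTPS.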

Reference:

Microsoft Learn Documentation: HttpClient Data Type

The documentation for the HttpClient data type shows the Post method signature which requires the URL, content, and a response message variable. It also details how to use HttpContent.WriteFrom to set the request body. The use of the Authorization header with a base64-encoded string for Basic Auth is standard HTTP protocol.

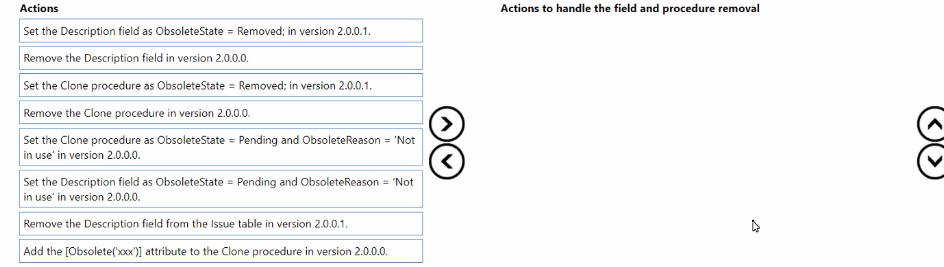

You need to handle the removal of the Description field and the Clone procedure without breaking other extensions.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

Explanation:

The correct approach follows a two-phase deprecation process to avoid breaking dependent extensions: the ObsoleteState property for the field and the Obsolete attribute for the procedure. This gives other developers time to update their code.

Step 1: Mark as Pending (Version 2.0.0.0)

In the first version, you set ObsoleteState = Pending with a clear ObsoleteReason on the Description field and add the [Obsolete] attribute to the Clone procedure. Both cause compile-time warnings (not errors) for any other extensions that still use these elements. This alerts other developers that the elements will be removed in the future, giving them a chance to update their code, but it does not break their existing extensions.

Step 2: Mark as Removed (Version 2.0.0.1)

In the next version, you change the field to ObsoleteState = Removed. This now causes compile-time errors for any extensions that haven't yet removed their references to the field, which forces the necessary update before the dependent extensions can be compiled and published. The field stays in the table schema, because deleting a field is a destructive schema change, but it can no longer be referenced by new compilations.

Note:

A procedure has no Removed state. Once the warning period for the [Obsolete] attribute has passed, the Clone procedure is deleted from the code. A full sequence would be:

v2.0.0.0: Mark Description as Pending and add [Obsolete] to Clone.

v2.0.0.1: Mark Description as Removed and delete Clone.

Why the other actions are incorrect:

Removing the field/procedure immediately (e.g., in version 2.0.0.0): This is a breaking change. Any extension that uses the field or procedure will fail to compile and will break at runtime.

Using only the [Obsolete('xxx')] attribute: This defaults to ObsoleteState = Pending. It is the first step but is incomplete without a subsequent move to the Removed state to fully enforce the deprecation.

Setting Removed in the first version (2.0.0.0): This immediately breaks all dependent extensions, which violates the requirement to avoid breaking other extensions.

Reference:

Microsoft Learn Documentation: Obsolete Attribute

The official documentation explains the Obsolete attribute and its states. It states that Pending "Specifies that the element will be removed in a future release. It will generate a compilation warning," while Removed "Specifies that the element was removed. It will generate a compilation error." The documented best practice is to first use Pending to warn developers and then use Removed in a later version.

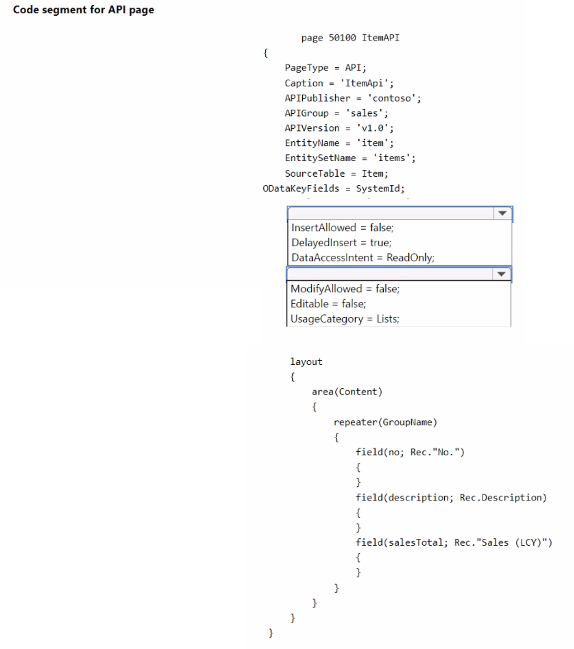

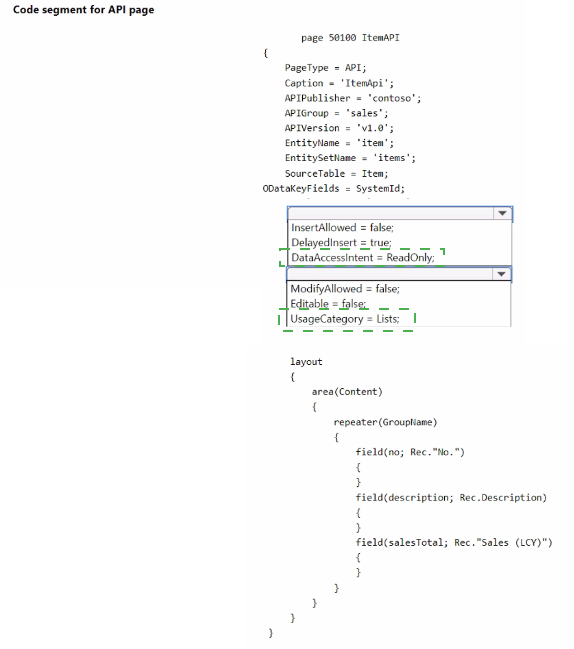

You need to create the API page according to the requirements.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

Creating an API page requires specific properties to define its OData endpoint behavior and performance characteristics.

First blank: EntitySetName = 'items';

The EntitySetName property defines the name of the OData entity set for the collection of entities. This is the plural form used in the URL (e.g., /companies(...)/items). It is a required property for API pages. The standard practice is to use the plural form of the EntityName.
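To show where the entity set name ends up, the following Python sketch composes the endpoint URL of a custom API page in Business Central online. It assumes the documented route shape api/{APIPublisher}/{APIGroup}/{APIVersion}/companies({id})/{EntitySetName}; the publisher, group, version, and company ID used in the usage note are hypothetical placeholders, not values from the case study.

```python
from urllib.parse import quote

# Base address of the Business Central online API (v2.0 route).
BASE_URL = "https://api.businesscentral.dynamics.com/v2.0"


def entity_set_url(environment: str, publisher: str, group: str,
                   version: str, company_id: str, entity_set_name: str) -> str:
    """Compose the collection URL for a custom API page."""
    # Each route segment is percent-encoded so that spaces or slashes in a
    # name cannot change the structure of the path.
    route = "/".join(
        quote(part, safe="")
        for part in (environment, "api", publisher, group, version)
    )
    # EntitySetName is the last segment: the plural collection name.
    return f"{BASE_URL}/{route}/companies({company_id})/{quote(entity_set_name, safe='')}"
```

For example, calling entity_set_url("production", "contoso", "app1", "v1.0", company_id, "items") yields a URL that ends in /companies(...)/items, the collection named by EntitySetName.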

Second blank: DelayedInsert = true;

This property is crucial for API pages that allow inserting data. When set to true, it ensures that a new record is not inserted into the database until after all fields have been set from the incoming JSON payload. This prevents errors where required fields might be validated before all data is available. For an API, this is the standard and expected behavior.

Third blank: DataAccessIntent = ReadOnly;

This property is a key performance optimization for API pages used primarily for reading data. It tells the server that the page will not perform any data modification, allowing it to use read-only database replicas (if available) and reduce locking overhead. This significantly improves performance for data consumption scenarios. Given that InsertAllowed and ModifyAllowed are both set to false, this page is clearly intended for read-only use, making this property essential.

Why the other properties are incorrect or less suitable:

Obt&KeyFields appears to be a typo for ODataKeyFields, which is used to define the key fields for the OData entity. While SystemId is a common key for API pages, the primary requirement here is to define the entity set and the insert/read behavior.

UsageCategory = List; is a property for organizing the page in the Business Central UI (like in search), but it is not relevant for the core functionality of an API page.

Editable = false; is redundant when ModifyAllowed is already set to false.

Reference:

Microsoft Learn Documentation: API Page Type

The official documentation details the properties specific to API pages, including the requirement for EntitySetName and the use of DelayedInsert for controlling the insert behavior. The DataAccessIntent property is documented as a performance optimization for read-heavy scenarios.

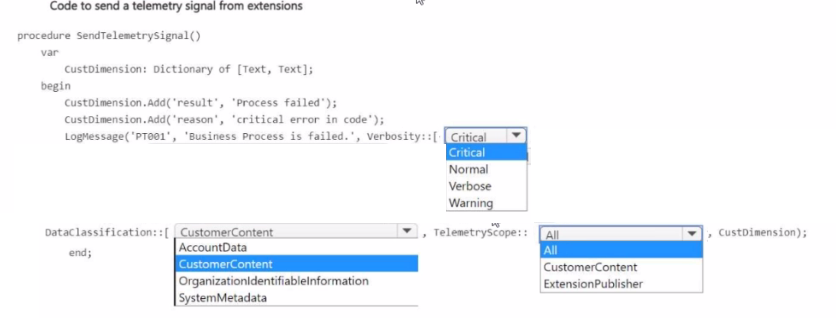

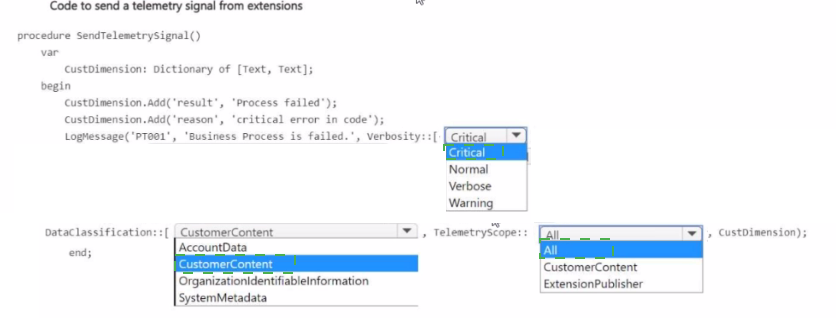

You need to log an error in telemetry when the business process defined in the custom application developed for Contoso, Ltd fails.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

Logging telemetry in AL requires specific parameters to categorize the message correctly and adhere to data handling policies.

First blank: Verbosity::Error

The Verbosity parameter defines the severity level of the telemetry signal. Since the scenario describes a business process failure with a "critical error," the appropriate level is Error. This ensures the signal is treated as a failure in monitoring systems.

Why not others? Critical is for system-level failures, Warning is for potential issues, Normal/Verbose are for informational tracing.

Second blank: DataClassification::SystemMetadata

The DataClassification parameter is mandatory and specifies how the data in the log message should be treated. For privacy reasons, custom telemetry signals are emitted to Application Insights only when they are classified as SystemMetadata, so the message "Process failed" and the custom dimensions must not contain customer or personal data.

Why not others? CustomerContent, AccountData, and OrganizationIdentifiableInformation all mark the signal as containing customer, account, or organization data, which prevents it from being emitted as telemetry.

Third blank: TelemetryScope::ExtensionPublisher

The TelemetryScope determines who can view the telemetry data. ExtensionPublisher means only the publisher of the extension (Contoso, Ltd.) can view this telemetry signal in their associated Azure Application Insights resource. This is the standard and correct scope for application-specific telemetry.

Why not others? All would also send the signal to the Application Insights resource configured for the environment, which isn't necessary here.

Fourth blank: CustomDimensions

The final parameter of the LogMessage method is for passing a dictionary of custom dimensions. The variable CustomDimensions (declared as a Dictionary of [Text, Text]) should be passed here to include the "result" and "reason" details with the telemetry signal.

Why not others? Customension appears to be a typo of the correct variable name CustomDimensions.

Reference:

Microsoft Learn Documentation: LogMessage Method

The official documentation for the LogMessage method (a method of the Session data type) details all the parameters: the event ID, message, verbosity, data classification, telemetry scope, and custom dimensions dictionary. It explains that ExtensionPublisher scope sends data to the publisher's Application Insights resource.

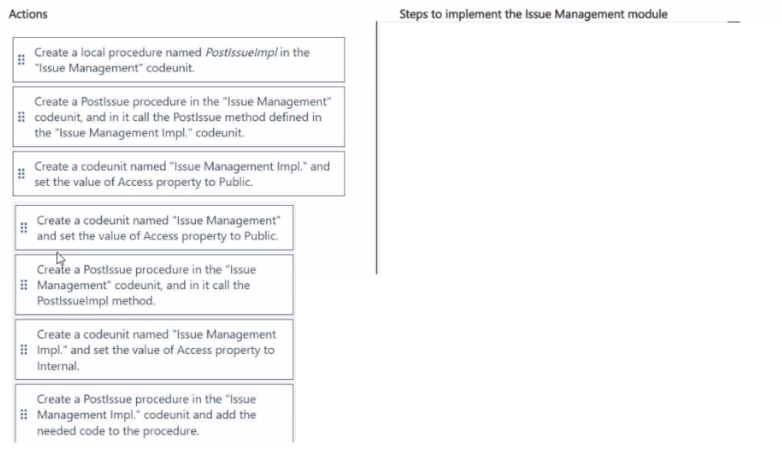

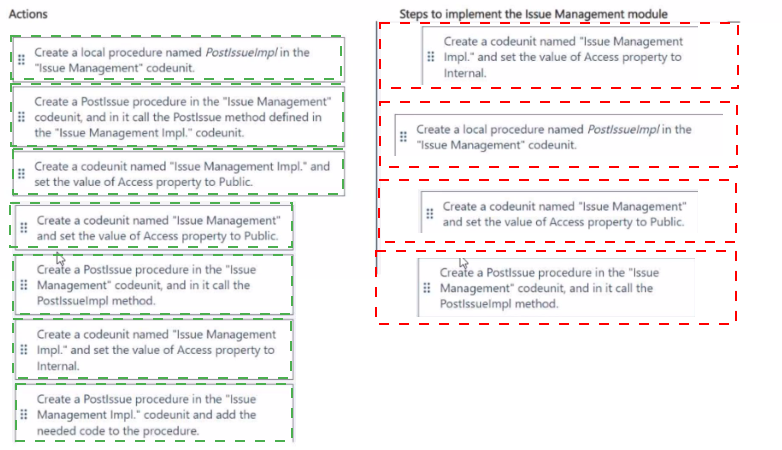

You need to implement the Issue Management module and expose the PostIssue method.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

Explanation:

This implements the Facade design pattern, which is a standard practice in Business Central for creating a clean, stable public API while encapsulating the complex implementation details.

Step 1: Create Public API Codeunit (iv)

The "Issue Management" codeunit with Access = Public serves as the public facade. This is the interface that other extensions or the base application will call. Its public procedures define the official, stable API for the module.

Step 2: Create Internal Implementation Codeunit (vi)

The "Issue Management Impl." codeunit with Access = Internal contains the actual business logic. Marking it Internal encapsulates the logic, preventing other extensions from calling it directly and ensuring all calls go through the public facade. This allows you to change the implementation without breaking consumers.

Step 3: Implement the Logic in the Internal Codeunit (vii)

The PostIssue procedure in the "Issue Management Impl." codeunit is where the complex business logic for posting an issue is written.

Step 4: Expose the Logic via the Public API (ii)

The PostIssue procedure in the public "Issue Management" codeunit acts as a thin wrapper. Its only job is to call the corresponding method in the internal implementation codeunit. This provides a clean separation of concerns.

Why this order is correct:

It separates the public interface from the private implementation.

It follows the principle of least privilege by making the implementation codeunit Internal.

It provides a stable API layer that can remain constant even if the underlying implementation changes.

Why other sequences are incorrect:

Creating the implementation codeunit as Public (iii) would break encapsulation.

Calling a method named PostIssueImpl (v) from the public codeunit is a non-standard naming convention compared to the clean facade pattern.

Creating a local procedure PostIssueImpl (i) in the public codeunit does not properly separate the API from the implementation.
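The facade structure described above is not specific to AL. As a rough, language-neutral sketch in Python: a public class exposes a stable post_issue method and delegates to a module-private implementation class. The posting logic shown is a hypothetical stand-in for the real business logic, and the leading underscore plays the role that Access = Internal plays in AL.

```python
class _IssueManagementImpl:
    """Internal implementation; not meant to be called by consumers."""

    def post_issue(self, issue: dict) -> dict:
        # Hypothetical business rule, for illustration only.
        if issue.get("posted"):
            raise ValueError("issue is already posted")
        posted = dict(issue)  # work on a copy, leave the input untouched
        posted["posted"] = True
        return posted


class IssueManagement:
    """Public facade: the only entry point consumers should use."""

    def __init__(self) -> None:
        self._impl = _IssueManagementImpl()

    def post_issue(self, issue: dict) -> dict:
        # Thin wrapper: no logic here, only delegation.
        return self._impl.post_issue(issue)
```

Because consumers depend only on IssueManagement.post_issue, the implementation class can be rewritten freely without breaking them.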

You need to create the access modifier for IssueTotal.

Which variable declaration should you use?

A. Protected var IssueTotal: Decimal

B. Internal var IssueTotal: Decimal

C. Public var IssueTotal: Decimal

D. Local var IssueTotal: Decimal

E. Var IssueTotal; Decimal

Explanation:

This question tests knowledge of access modifiers for global variables in AL. By default, a global variable declared in a var section is private to the object that declares it. AL supports exactly one access modifier on a var section, protected, which also makes the variable available to objects that extend the declaring object (for example, a page extension of the page that declares IssueTotal).

Correct Option:

A. Protected var IssueTotal: Decimal

The protected keyword keeps the variable hidden from unrelated objects while exposing it to extensions of the declaring object. It is the only declaration in the list that both compiles and assigns an access modifier to the variable.

Incorrect Options:

B. Internal var IssueTotal: Decimal:

The internal modifier applies to procedures and to the Access property of objects, not to var sections, so this declaration does not compile.

C. Public var IssueTotal: Decimal:

AL has no public modifier for var sections. Global variables cannot be exposed to arbitrary objects this way.

D. Local var IssueTotal: Decimal:

The local keyword is a procedure modifier. Variables declared inside a procedure are local by definition, and local is not valid in front of a var section.

E. Var IssueTotal; Decimal:

This syntax is incorrect because it uses a semicolon (;) instead of a colon (:) before the type. Even when written correctly, a plain var section declares no access modifier, so the variable stays private to the declaring object.

Reference:

Microsoft Learn, "Protected variables": describes the protected var syntax and how it makes global variables accessible to extension objects.

You need to determine why the debugger does not start correctly.

What is the cause of the problem?

A. The "userId" parameter must have the GUID of the user specified, not the username.

B. The "breakOnNext" parameter is not set to "WebServiceClient".

C. The "userId" parameter is specified, and the next user session that is specified in the "breakOnNext" parameter is snapshot debugged.

D. The "executionContext" parameter is not set to "Debug".

Explanation:

This question is about troubleshooting a snapshot debugging configuration in the launch.json file of an AL project for Business Central online. A common cause of the debugger not starting is an incorrect value in the userId setting, which identifies the user whose session is captured.

Correct Option:

A. The "userId" parameter must have the GUID of the user specified, not the username.

For Business Central online, the userId setting must contain the GUID of the user, not the user name or alias. If the user name is supplied, the service cannot match the configuration to a user, so the snapshot session is never started.

Incorrect Option:

B. The "breakOnNext" parameter is not set to "WebServiceClient".

WebServiceClient is one of the valid breakOnNext values, alongside WebClient and Background. It selects the type of session to capture and is needed only when a web service session is the target, so a different value does not prevent the debugger from starting.

C. The "userId" parameter is specified, and the next user session that is specified in the "breakOnNext" parameter is snapshot debugged.

This describes a correct, functional scenario. If a userId is specified, the debugger captures that user's next matching session, which is the intended behavior and not a cause of failure.

D. The "executionContext" parameter is not set to "Debug".

The executionContext setting controls whether the snapshot session collects debugging data, profiling data, or both (Debug, Profile, or DebugAndProfile). It is optional, and its value does not prevent the debugger from starting.

Reference:

Microsoft Learn, "Snapshot debugging" and "JSON files": the documentation describes the snapshot configuration settings in launch.json, including userId, breakOnNext, and executionContext.

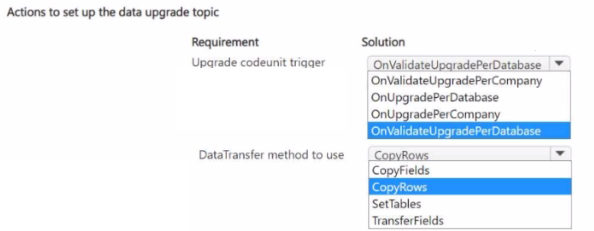

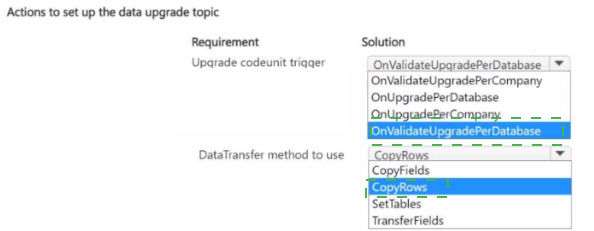

You need to write an Upgrade codeunit and use the DataTransfer object to handle the data upgrade.

Which solution should you use for each requirement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

This question tests knowledge of the correct pairing between upgrade codeunit triggers and the methods of the DataTransfer data type in Business Central AL. OnUpgradePerCompany runs once for each company and OnValidateUpgradePerCompany is the matching per-company validation trigger, while OnUpgradePerDatabase runs once for the whole database. DataTransfer moves data in bulk, set-based operations instead of record-by-record loops.

Correct Option:

OnValidateUpgradePerCompany -> CopyRows

The OnValidateUpgradePerCompany trigger is used to validate data migration for each company. The CopyRows method is appropriate here as it performs a fast, bulk copy of records from a source to a target table for validation before the actual upgrade, without transforming individual fields.

OnUpgradePerCompany -> CopyRows

The OnUpgradePerCompany trigger executes the actual data copy for each company. CopyRows is again the correct method for the live upgrade phase, as it efficiently transfers all validated rows in bulk from the old table structure to the new one.

OnUpgradePerDatabase -> TransferFields

The OnUpgradePerDatabase trigger is used for data upgrade tasks that apply across the entire database (not per-company), such as updating system tables. TransferFields is used when you need to map and transform specific fields from an old table to a new table where the schemas differ, which is a common cross-company task.

Incorrect Options (For Other Potential Selections in the Table):

OnValidateUpgradePerDatabase -> CopyFields: OnValidateUpgradePerDatabase is a valid upgrade trigger, but it exists to verify the result of the upgrade, so it is not where data is moved. CopyFields is a valid DataTransfer method that copies field values into existing rows of the destination table; it does not insert new rows.

SetTables: This DataTransfer method only defines the source and destination tables. It does not move any data by itself and must be followed by CopyRows or CopyFields.

Reference:

Microsoft Learn, "Perform data upgrade": The documentation specifies the upgrade triggers (OnUpgradePerCompany, OnUpgradePerDatabase) and details the DataTransfer methods, such as CopyRows for bulk row copy and CopyFields for copying field values into existing rows.

You need to determine why the extension does not appear in the tenant.

What are two possible reasons for the disappearance? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. The extension was published as a DEV extension.

B. The extension was not compatible with the new version within 60 days of the first notification.

C. The extension was published as PTE, and the Platform parameter was not updated in the application file.

D. The extension was published as PTE, and the Platform and Runtime parameters were not updated in the application file.

E. The extension was not compatible with the new version within 90 days of the first notification.

Explanation:

An extension disappearing from a production tenant is a critical deployment or lifecycle issue. The two most common causes relate to (1) the platform version compatibility and the specific parameters used for Per-Tenant Extensions (PTE), and (2) the mandatory compliance with new platform versions within a strict Microsoft-enforced timeframe.

Correct Option:

B. The extension was not compatible with the new version within 60 days of the first notification.

Microsoft mandates that all extensions be made compatible with new platform updates. If an extension's publisher does not update and resubmit a compatible version to AppSource (for ISV solutions) or redeploy it (for PTEs) within 60 days of the first compatibility notification, Microsoft will uninstall the extension from all tenants to ensure system stability.

D. The extension was published as PTE, and the Platform and Runtime parameters were not updated in the application file.

For a Per-Tenant Extension (PTE), the Platform and Runtime version numbers in the app.json/Application Descriptor file must be updated to match the target environment's version before deployment. If these are not updated, the extension is incompatible and will fail to deploy or become invisible on the tenant.

Incorrect Option:

A. The extension was published as a DEV extension.

Extensions are not categorized as "DEV" vs. "PROD" in this manner. A developer-built extension is deployed as a PTE or an AppSource package; a "DEV extension" is not a valid deployment state that would cause disappearance.

C. The extension was published as PTE, and the Platform parameter was not updated in the application file.

This is partially correct but incomplete. For PTEs, both the Platform and the Runtime version parameters must be updated to match the target environment's application and platform versions.

E. The extension was not compatible with the new version within 90 days of the first notification.

The timeframe enforced by Microsoft for extension compatibility updates is 60 days, not 90 days. After 60 days, non-compliant extensions are automatically uninstalled.

Reference:

Microsoft Learn, "Upgrade and update finance and operations apps": Details the 60-day compliance policy for extensions after a new version release.

Microsoft Learn, "Configure a finance and operations development environment": Specifies the requirement to update the Platform and Runtime version properties in the application descriptor file for PTEs.
