Topic 3: Misc. Questions

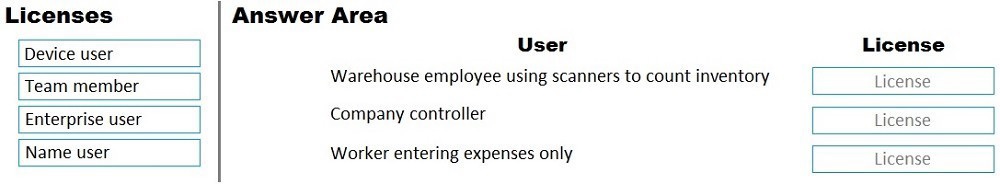

You need to determine user licensing options for Dynamics 365 Finance + Operations (on-premises).

Which type of license should you recommend? To answer, drag the appropriate licenses to the correct users. Each license may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Explanation:

This question tests knowledge of the Dynamics 365 on-premises licensing model, which is user-based and depends on the functional access required. The key is to match the user's job duties and system access level to the correct license tier, ensuring compliance and cost-effectiveness.

Correct Mappings:

For Dynamics 365 Finance + Operations (on-premises):

Warehouse employee using scanners to count inventory: Device user

This license is for users who access the system through a shared device, such as a warehouse scanner or a kiosk. The license is assigned to the device itself, not an individual named user. A warehouse worker using a shared handheld scanner is the classic example of a device user.

Company controller: Enterprise user

The company controller requires full, unrestricted access to all financial modules, reporting, security configuration, and administrative functions. The Enterprise user license is the highest tier, providing complete access to all Dynamics 365 Finance capabilities, which is necessary for this senior financial role.

Worker entering expenses only: Team member

The Team member license is designed for users with light, occasional access to a limited set of functions, typically through self-service workspaces or specific pages. A worker who only needs to enter expense reports and view their own data fits this limited-access profile perfectly.

Incorrect Mappings / Reasoning:

Named user: This is not a valid license type for Dynamics 365 on-premises. The standard user licenses are Enterprise, Activity, Device, and Team Member. "Named user" is a generic term but not a specific SKU in this context.

Using an Enterprise user license for the warehouse employee or the expense worker would add significant, unnecessary cost, because neither role requires full system access.

Using a Device user or Team member license for the Company Controller would be non-compliant, as it would not grant the full administrative and financial permissions their role requires.

Reference:

Microsoft Dynamics 365 Licensing Guide (for on-premises deployment). The guide defines Enterprise users as those needing full access, Team members for light/occasional use, and Device users for shared device scenarios, which directly maps to these user types.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

A company with multiple legal entities implements Dynamics 365 Finance.

You need to recommend options to ensure that you can provide customized financial reporting across the legal entities.

Solution: Create separate departments to manage functional areas. Does the solution meet the goal?

A. Yes

B. No

Explanation:

The goal requires customized financial reporting across legal entities. Creating departments is an organizational hierarchy tool used within a single legal entity to categorize costs and responsibilities by functional area (e.g., Sales, Marketing, Production). Departments do not inherently enable or structure reporting that consolidates or compares data across separate legal entities.

Correct Option:

B. No

The proposed solution of creating separate departments does not meet the goal.

Departments are a dimension within the chart of accounts for managerial accounting inside one company. They are not designed to aggregate transactions from multiple, distinct legal entities. To report across legal entities, you need a structure or tool that operates at a higher level, such as:

Consolidation companies for combining financial results.

Global General Ledger accounts with standardized account structures.

Financial reporting tools (like Management Reporter or Financial Reporting) that can pull and group data from multiple legal entities.

The solution addresses internal segmentation, not cross-entity aggregation.
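The difference between segmenting inside one entity and aggregating across entities can be sketched in a few lines of Python. This is an illustration only, not Dynamics 365 code: the rows, the entity codes (`USMF`, `DEMF`), the account numbers, and both function names are hypothetical, and the sketch assumes every entity uses the same main account numbers.

```python
from collections import defaultdict

# Hypothetical trial-balance rows: (legal_entity, main_account, department, amount).
ROWS = [
    ("USMF", "401100", "Sales", 1200.0),
    ("USMF", "401100", "Marketing", 300.0),
    ("DEMF", "401100", "Sales", 800.0),
    ("DEMF", "601500", "Sales", -150.0),
]

def by_department(rows, entity):
    """Sum amounts per department inside ONE legal entity (internal segmentation)."""
    totals = defaultdict(float)
    for row_entity, _account, department, amount in rows:
        if row_entity == entity:
            totals[department] += amount
    return dict(totals)

def consolidate(rows):
    """Sum amounts per main account across ALL legal entities (cross-entity view).

    Only meaningful when the entities share a standardized chart of accounts.
    """
    totals = defaultdict(float)
    for _entity, account, _department, amount in rows:
        totals[account] += amount
    return dict(totals)
```

Grouping by department never leaves the entity it is filtered to, while the consolidated view depends on shared account numbers rather than on departments.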

Incorrect Option:

A. Yes would be incorrect because configuring departments is unrelated to solving the challenge of multi-entity financial reporting. It misapplies an organizational feature to a consolidation requirement.

Reference:

Microsoft Learn documentation on financial reporting and organizational hierarchies clarifies that legal entities are the primary boundary for financial data, and specialized tools or configurations are required to report across them.

A trading company is concerned about the impact of General Data Protection Regulation (GDPR) on their business.

The company needs to define personal data for their business purposes. You need to define personal data as defined by GDPR.

Which three types of data are considered personal data? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. password

B. Social Security number

C. a portion of the address (region, street, postcode etc.)

D. International article (EAN) number

E. MAC address

Explanation:

The GDPR defines "personal data" very broadly as any information relating to an identified or identifiable natural person. The key test is whether the data, alone or combined with other data, can be used to identify a specific individual.

Correct Options:

A. Password

While not identifying by itself, a password is directly linked to a specific user account and, therefore, to an identifiable individual. It is a personal credential used for authentication and access to a person's private data, making it personal data protected under GDPR.

B. Social Security number

This is a classic, unique national identifier directly and permanently linked to a specific individual. It is a clear example of personal data, as its sole purpose is to identify a person for official records.

C. A portion of the address (region, street, postcode etc.)

Under GDPR, personal data includes "location data." Even partial address information can contribute to identifying a person, especially when combined with other information. For instance, a house number and postcode can pinpoint a specific household or individual, qualifying it as personal data.

Incorrect Options:

D. International article (EAN) number

An EAN (European Article Number) is a standardized barcode for identifying products (like a box of cereal or a book), not people. It is a product identifier and does not relate to an identifiable natural person, so it is not personal data under GDPR.

E. MAC address

A MAC (Media Access Control) address is a unique identifier assigned to a network interface controller (NIC) for a device (like a laptop or phone). The GDPR and related rulings (e.g., by the Court of Justice of the EU) generally consider device identifiers like MAC addresses to be personal data only if they can be linked to an identified individual. In isolation, for a company tracking warehouse devices, a MAC address may not be personal data. Since the question asks for data that is defined as personal data, and a MAC address is not inherently personal without that link, it is not a universally correct choice in this context.

Reference:

GDPR Article 4(1) defines personal data. The inclusion of "password" and "location data" as personal data is supported by guidelines from data protection authorities (like the European Data Protection Board) and the principle that any information relating to an identifiable person falls under the regulation.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

A company with multiple legal entities implements Dynamics 365 Finance.

You need to recommend options to ensure that you can provide customized financial reporting across the legal entities.

Solution: Create separate cost centers to manage operations. Does the solution meet the goal?

A. Yes

B. No

Explanation:

The goal is to enable customized financial reporting across multiple legal entities. Cost centers are a financial dimension used for internal managerial accounting to track expenses and revenues within a single legal entity based on operational units or functions (e.g., "Marketing," "North Region Sales"). They do not provide a mechanism to consolidate or report on financial data across separate, distinct legal entities.

Correct Option:

B. No

The solution of creating separate cost centers does not meet the goal.

Cost centers operate at a level below the legal entity. They are used to segment data within a company's ledger. Reporting across legal entities requires tools and configurations that operate at a higher organizational level, such as:

Using a consolidation company to combine financial results from multiple legal entities.

Leveraging Financial Reporting or Management Reporter with row definitions that can pull data from multiple company accounts.

Ensuring a standardized chart of accounts across entities to allow for comparable reporting.

Configuring cost centers manages internal operational reporting, not cross-entity financial reporting.

Incorrect Option:

A. Yes is incorrect because it confuses an internal cost-tracking dimension with a cross-entity consolidation and reporting capability. It addresses a different requirement.

Reference:

Microsoft Learn documentation on financial dimensions explains that dimensions like cost centers segment data within a legal entity. The documentation on consolidations and financial reporting outlines the methods for reporting across legal entities.

A company is implementing a new Dynamics 365 cloud deployment. The stakeholders need to confirm that performance testing meets their expectations. The single-user test procure-to-pay scenario is complete. You need to successfully complete the multi-user load test. Which tool should you recommend?

A. Microsoft Azure DevOps

B. Microsoft Dynamics Lifecycle Services

C. PerfSDK

D. Visual Studio

Explanation:

This scenario specifies that a multi-user load test is required following a completed single-user test. The tool must be capable of simulating concurrent users performing transactions to measure system performance, scalability, and response times under load, which is a specialized task beyond general test case management or development.

Correct Option:

C. PerfSDK

The Performance Software Development Kit (PerfSDK) is the Microsoft-provided, dedicated tool for creating and executing load and performance tests for Dynamics 365 Finance & Operations. It is built on top of Visual Studio Enterprise load testing capabilities but is specifically configured for D365 data and scenarios.

It allows testers to record user tasks (like the procure-to-pay scenario) and then run them with multiple virtual users simultaneously, generating detailed performance reports. This is the direct tool for achieving the "multi-user load test" goal.
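The idea of replaying one recorded scenario with many virtual users at once can be sketched generically in Python. This is not the PerfSDK and does not use its API; `run_scenario` and `run_load_test` are hypothetical names, and the placeholder arithmetic stands in for the server calls a real recording would make.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def run_scenario(user_id):
    """Stand-in for one replay of a recorded scenario; returns (user, seconds)."""
    start = time.perf_counter()
    sum(range(10_000))  # placeholder work instead of real server calls
    return user_id, time.perf_counter() - start

def run_load_test(virtual_users):
    """Run the scenario once per virtual user, concurrently, and collect timings."""
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        # pool.map returns results in the order of the inputs.
        return list(pool.map(run_scenario, range(virtual_users)))
```

Calling `run_load_test(5)` returns one `(user_id, elapsed_seconds)` pair per virtual user, which is the raw material a performance report would summarize.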

Incorrect Options:

A. Microsoft Azure DevOps

Azure DevOps is for test case management, source control, and build/release pipelines. While it can trigger automated tests and store results, its generic cloud-based load testing feature has been deprecated. It is not the specialized tool for executing D365-specific multi-user performance tests.

B. Microsoft Dynamics Lifecycle Services (LCS)

LCS is a project management and deployment portal. While you can view and monitor performance test results uploaded to LCS (from PerfSDK), and access performance tools like the Environment Monitoring tool, LCS itself does not execute load tests. It is a dashboard and repository, not the execution engine.

D. Visual Studio

While Visual Studio Enterprise edition contains a generic load testing component that the PerfSDK utilizes, recommending "Visual Studio" alone is too vague and incomplete. The standard installation does not include the D365-specific task recordings, data, and configurations required. The correct, specific tool that packages this capability for D365 is the PerfSDK.

Reference:

Microsoft Learn documentation on performance testing for Dynamics 365 explicitly states to use the PerfSDK for load and performance testing, detailing how to install it via the downloadable package and execute tests.

An organization has implemented the accounts payable module in Dynamics 365 Finance.

Corporate policy specifies the following:

Managers and directors are allowed to approve invoices for payment.

Users are allowed to delegate their signing authority to other users when they are out of the office.

Accounts payable workflow will be used to assist in automating the signing process.

Users whose job has signing authority can approve invoices for payment.

You need to meet the organization’s corporate policy. What should you recommend?

A. Create workflow rules.

B. Assign all jobs signing authority.

C. Create business event rules.

D. Email the delegation policy to all managers.

Explanation:

The policy centers on automating invoice approvals based on user roles (jobs) and enabling temporary delegation of authority. This is a classic workflow configuration requirement. The solution must enforce the approval hierarchy and rules within the system, not just communicate a policy.

Correct Option:

A. Create workflow rules.

The accounts payable workflow is the system functionality designed specifically for this purpose. Within the workflow configuration, you can:

Define approval steps based on job (e.g., Manager, Director).

Set amount thresholds.

Configure delegation rules so a user can assign their approval tasks to another user for a specified period when they are out of the office, directly meeting the delegation requirement.

This automates the signing process as specified, ensures only authorized jobs can approve, and embeds the policy into the system's operation.
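The routing logic described above (approve by job, hand off to a delegate while out of office) can be sketched as a small Python model. This is a conceptual illustration, not the Dynamics 365 workflow engine: the `User` class, its fields, `SIGNING_JOBS`, and `resolve_approver` are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Jobs with signing authority, per the corporate policy in the question.
SIGNING_JOBS = {"Manager", "Director"}

@dataclass
class User:
    name: str
    job: str
    delegate: Optional["User"] = None    # who covers while this user is away
    away_from: Optional[date] = None
    away_until: Optional[date] = None

def resolve_approver(user, today):
    """Return the user who should receive the approval task on `today`."""
    if user.job not in SIGNING_JOBS:
        raise ValueError(f"{user.name} has no signing authority")
    away = (
        user.away_from is not None
        and user.away_until is not None
        and user.away_from <= today <= user.away_until
    )
    # Delegation applies only inside the out-of-office window.
    if away and user.delegate is not None:
        return user.delegate
    return user
```

Keeping the delegation window on the delegating user means the rule expires on its own once the user returns, which mirrors the "for a specified period" wording of the policy.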

Incorrect Options:

B. Assign all jobs signing authority.

This violates the policy that only "Managers and directors" are allowed to approve. Assigning authority to all jobs (e.g., clerks, analysts) would grant excessive permissions, weakening financial controls and creating segregation of duties risks.

C. Create business event rules.

Business events are used to trigger integrations or external notifications when certain actions occur in the system (e.g., "invoice posted"). They are not used to configure internal approval processes, user hierarchies, or delegation rules for workflow tasks.

D. Email the delegation policy to all managers.

This is merely a communication method, not a system solution. It does not enforce the policy within Dynamics 365. Without configuring workflow delegation rules, managers cannot practically delegate their approval authority in the system when out of the office, and compliance cannot be automated or audited.

Reference:

Microsoft Learn documentation on configuring workflows details setting up approval hierarchies, conditions (like by job), and delegation rules, which are essential for automating and controlling processes like invoice approvals.

You are an architect implementing a Dynamics 365 Finance environment.

You need to analyze test results generated by the development team. Which tool should you use?

A. Trace parser

B. Task Recorder

C. Regression suite automation tool

D. SysTest

Explanation:

The question asks for a tool to analyze test results. The context implies analyzing detailed performance or execution diagnostics, likely from load or performance tests, to identify bottlenecks, errors, or system behavior. This requires a tool capable of parsing and interpreting detailed log/trace files, not just managing or executing tests.

Correct Option:

A. Trace parser

Trace Parser is a dedicated diagnostic tool used to analyze trace files generated during test executions (particularly performance tests using PerfSDK) or system operations. It processes these files to provide detailed, readable reports on SQL queries, method execution times, network calls, and errors.

This allows an architect to pinpoint performance issues, long-running queries, or failures within the test results, which is essential for optimization and troubleshooting.

Incorrect Options:

B. Task Recorder

Task Recorder is for creating step-by-step recordings of business processes for training or test script generation. It does not analyze or parse results from executed tests.

C. Regression suite automation tool

RSAT is for executing automated functional test scripts and generating pass/fail results. While it produces a result log, it is not a deep analysis tool for performance diagnostics or parsing detailed system traces. Its analysis is focused on test case success/failure, not system performance metrics.

D. SysTest

SysTest is a development framework for writing and running unit and integration tests within Visual Studio. It is used by developers to execute tests, not by an architect to analyze the resulting performance or diagnostic data from those tests.

Reference:

Microsoft Learn documentation on Trace Parser describes it as the tool for analyzing trace files collected during performance investigations and testing to identify performance bottlenecks.

An organization is implementing Dynamics 365 Supply Chain Management.

You need to create a plan to define performance test scenarios.

Which three actions should you recommend? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Define the performance testing scenarios.

B. Define whether users of the system will be adequately trained.

C. Determine the size of the production environment in the cloud.

D. Define the expected normal and peak volumes for the functional areas in-scope.

E. Define measured goals and constraints for response time and throughput for each scenario.

Explanation:

Creating a performance test plan requires defining the what, how much, and how well the system must perform. The plan must specify realistic scenarios based on business processes, quantify the expected transactional and user loads, and establish clear, measurable success criteria for system responsiveness and throughput.

Correct Options:

A. Define the performance testing scenarios.

This is the foundational step. You must identify the key business processes (e.g., sales order entry, warehouse picking, production order release) that will be simulated during the test. These scenarios form the specific workload to be measured.

D. Define the expected normal and peak volumes for the functional areas in-scope.

Performance tests must simulate realistic load. This requires quantifying the expected transaction volumes (e.g., 100 orders/hour normal, 500 orders/hour peak) and concurrent user counts for each functional area. This data defines the intensity of the load applied during testing.

E. Define measured goals and constraints for response time and throughput for each scenario.

A test is meaningless without clear, quantifiable success criteria. For each scenario, you must set performance goals, such as "95% of sales orders must be created in under 3 seconds" (response time) and "the system must sustain 120 order lines picked per hour" (throughput). These metrics are used to evaluate test results.
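As a rough illustration of how such goals become pass/fail checks, the Python sketch below evaluates the two example criteria quoted above. The function names and default thresholds are assumptions taken from those example figures, not from any Microsoft tool.

```python
def share_within(samples, limit_seconds):
    """Fraction of response-time samples strictly under the limit."""
    if not samples:
        raise ValueError("no samples")
    return sum(1 for s in samples if s < limit_seconds) / len(samples)

def meets_response_goal(samples, limit_seconds=3.0, required_share=0.95):
    """True when at least `required_share` of samples beat the limit."""
    return share_within(samples, limit_seconds) >= required_share

def throughput_per_hour(completed, elapsed_seconds):
    """Scale a completed-item count over a test window to an hourly rate."""
    return completed * 3600.0 / elapsed_seconds

def meets_throughput_goal(completed, elapsed_seconds, required_per_hour=120.0):
    """True when the measured hourly rate reaches the required rate."""
    return throughput_per_hour(completed, elapsed_seconds) >= required_per_hour
```

For example, 19 samples of 1.0 s and one of 4.0 s just meet the 95% goal, while 60 order lines picked in a 30-minute window correspond to exactly 120 per hour.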

Incorrect Options:

B. Define whether users of the system will be adequately trained.

While user training is critical for go-live success, it is part of change management and training plans, not performance test scenario planning. Performance testing measures system behavior under load, independent of user skill levels.

C. Determine the size of the production environment in the cloud.

The size of the production environment (e.g., Tier, instance type) is a prerequisite or outcome of capacity planning, which may be informed by performance test results. However, it is not an action within the plan to define the test scenarios themselves. Scenarios are defined based on business processes, not on an unknown environment size.

Reference:

Microsoft's performance testing methodology for Dynamics 365 emphasizes defining key scenarios, understanding volumes, and setting measurable goals. The Performance SDK (PerfSDK) guidance prescribes these steps to ensure tests are business-relevant and results are actionable for validating go-live readiness.

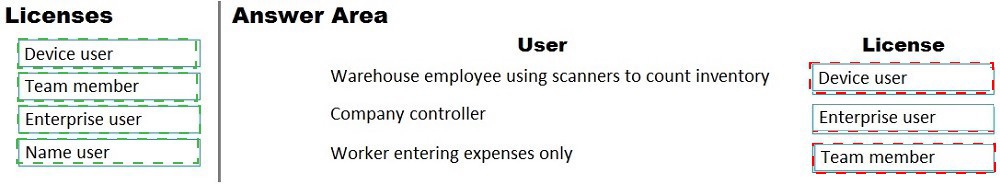

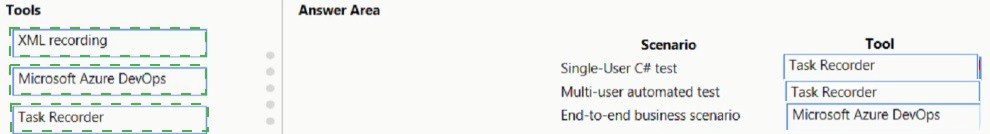

A company is planning a Dynamics 365 deployment.

The company needs to determine whether to implement an on-premises or a cloud deployment based on system performance.

You need to work with a developer to determine the proper tool from the Performance SDK to complete performance testing.

Which tool should you use? To answer, drag the appropriate tools to the correct scenarios.

Each tool may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Explanation:

This question tests understanding of the different recording mechanisms within the Performance SDK (PerfSDK) used to create load tests. The correct tool depends on the scope and purpose of the test: unit/component tests, load/performance tests, or business process documentation.

Correct Mappings:

Single-User C# test: XML recording

This is a low-level, developer-driven test. XML recording is a method that captures the underlying X++ metadata of user actions to generate C# code for unit or component tests. It's used by developers to create granular, single-user tests for specific code paths or integrations, not for simulating full user load.

Multi-user automated test: XML recording

The foundation of a multi-user load test in PerfSDK is built from XML recordings. These recordings capture the essential server calls and transaction sequences. The load testing framework then replays these XML-based sequences with multiple virtual users to simulate concurrent load. This is the core method for creating automated performance tests.

End-to-end business scenario: Task Recorder

Task Recorder is used to capture a complete, step-by-step business process from the user's perspective in the D365 UI (e.g., "Create sales order to invoice"). These recordings are high-level, understandable by business users, and are primarily used for training, documentation, and creating business process test cases (for RSAT). They define the end-to-end scenario that can later be broken down into the lower-level XML recordings for performance testing.

Incorrect Mappings / Reasoning:

Microsoft Azure DevOps: This is a project management, source control, and CI/CD platform. It is not a recording tool within the PerfSDK for creating performance tests. Test results or code may be stored/executed via Azure DevOps, but it is not the tool used to capture the test scenarios themselves.

Using Task Recorder for Single-User C# or Multi-user tests would be incorrect. Task Recorder outputs are not suitable for direct use in the low-level, code-driven PerfSDK load testing framework. They are for business process documentation.

Using XML recording for an End-to-end business scenario is technically possible but not the best-practice primary tool. Task Recorder is the superior, user-friendly tool for defining and validating the business scenario before developers translate it into the technical XML recordings for load testing.

Reference:

Microsoft Learn documentation on the Performance SDK explains the use of XML recordings to create load tests and the role of Task Recorder in the broader testing lifecycle.

A client has a third-party warehouse management system.

Data from the system must be integrated with Dynamics 365 Finance in near real-time. You need to determine an integration solution.

Which two solutions should you recommend? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. OData

B. Batch data API processed daily

C. Data management

D. Business events

Explanation:

This question asks for near real-time integration from a third-party system to Dynamics 365 Finance. "Near real-time" implies minimal latency, typically using event-driven or on-demand synchronous communication, not scheduled batches. The solution must be able to push or pull data immediately upon an event or request.

Correct Options:

A. OData

OData (Open Data Protocol) is a REST-based API that provides a standardized way to query and update D365 Finance data in near real-time.

The third-party system can call the OData endpoints to create, read, update, or delete (CRUD) records (e.g., inventory transactions, shipments) directly as events occur, satisfying the near real-time requirement with low latency.
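A minimal Python sketch of how an external system might compose such a request URL follows. It only builds the URL using standard OData query options (`$filter`, `$top`); it does not send anything or handle authentication. The host, the `/data/` path segment, the entity name `WarehouseShipments`, and the `Status` field are assumptions for illustration and should be checked against the actual environment.

```python
from urllib.parse import quote, urlencode

def build_odata_url(base_url, entity, filter_expr=None, top=None):
    """Compose an OData query URL for one entity set.

    The '/data/' path segment is an assumption about the service root.
    """
    params = {}
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    url = f"{base_url.rstrip('/')}/data/{quote(entity)}"
    if params:
        # Keep '$' readable in option names; percent-encode spaces and quotes.
        url += "?" + urlencode(params, safe="$", quote_via=quote)
    return url
```

A warehouse system could build one such URL per query as events occur, rather than accumulating changes for a scheduled batch.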

D. Business events

Business events are an outbound, event-driven integration mechanism. When a specific action occurs in D365 (e.g., a sales order is confirmed, a packing slip is posted), it can trigger a business event.

The external warehouse system can subscribe to these events (e.g., via Azure Service Bus, webhook) to receive near real-time notifications about changes in D365, enabling immediate synchronization.

Incorrect Options:

B. Batch data API processed daily

This explicitly contradicts the "near real-time" requirement. A daily batch process introduces a latency of up to 24 hours and is suitable for historical data syncs or reporting, not for operational integration where warehouse and financial systems must be continuously aligned.

C. Data management

The Data management framework (also known as DMF) is primarily designed for asynchronous, bulk data import/export operations (using data packages), typically run on a schedule (hourly, daily). While powerful for data migration and periodic updates, its batch-oriented nature does not support the low-latency, event-driven integration needed for near real-time scenarios.

Note:

The question asks for two solutions, but the answer key indicates the exam's correct answer was only A. OData. However, based on the requirement, A and D together present a complete solution for bidirectional near real-time integration (OData for inbound, Business Events for outbound). Since the exam expects a single correct option for this point, A is the primary answer, as OData is the most direct and common method for an external system to push/pull data in real-time.

Reference:

Microsoft Learn documentation on OData REST API for real-time data access and Business events for event-driven, real-time outbound integration.

A German company is implementing a cloud-based Dynamics 365 system.

The company needs to determine the best data storage solution for the following requirements:

Data must be stored in the European Union.

Data cannot be viewed by the United States government under the Patriot Act.

Data transfer exceptions can be made but must be authorized by the corporate legal team.

You need to determine which cloud services may have to transfer data to the United States.

Which three services should you review? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Lifecycle Services

B. Technical support

C. Usage data

D. Power BI dashboard

E. Data center failover

Explanation:

This question focuses on data sovereignty and legal compliance for a German company with strict EU data residency requirements. The core issue is identifying which Microsoft cloud services, even when the primary Dynamics 365 datacenter is in the EU, might involve data transfer to the United States for ancillary functions, requiring legal review.

Correct Options:

A. Lifecycle Services

LCS is a cloud-based project management portal hosted globally. While project data can be associated with a specific region, metadata, diagnostic logs, and support interactions within LCS may be processed or stored in global datacenters, potentially involving US-based services or personnel.

B. Technical support

When a support case is opened with Microsoft, diagnostic data, system logs, and case details may be accessed by global support engineers, including those in the United States, to troubleshoot issues. This constitutes a potential data transfer requiring authorized exceptions.

C. Usage data

Telemetry and usage data (system performance, feature usage analytics) collected by Microsoft for service improvement is often aggregated and processed in global analytics systems, which may involve data flows outside the EU, including to the US.

Incorrect Options:

D. Power BI dashboard

If the Power BI service and its underlying data storage are provisioned in the same EU region as the Dynamics 365 instance (e.g., West Europe), the data can remain entirely within the EU geographic boundary. This is a configurable, regional service, so it does not inherently require cross-border transfer.

E. Data center failover

Disaster recovery and failover for Dynamics 365 within the EU region (e.g., from Netherlands to Ireland) is managed within the same geographic boundary (the EU). Microsoft's failover architecture for EU customers is designed to keep data within the EU to comply with regulations like GDPR.

Reference:

Microsoft's documentation on Data residency and compliance for Dynamics 365 and Power Platform details how customer data is stored in the chosen region, but notes that service-level data (for support, telemetry, and global services like LCS) may be transferred globally. This necessitates legal review of these specific data flows under EU model clauses or other transfer mechanisms.

A company uses Dynamics 365 Supply Chain Management and has two legal entities that distribute the same items. One of the legal entities uses advanced warehouse management.

New items that are created must meet the business needs of both companies and allow for consolidated analytics.

You need to recommend a master data management strategy to standardize the item master and meet the business requirements.

What should you recommend?

A. Create the product record and release to each company where setup variations are completed.

B. Use Common Data Management to create the item templates for both legal entities.

C. Create an item in each company with different item numbers.

D. Use an advanced warehouse setup for the product and release to both companies.

E. Create an item in each company with the same item number.

Explanation:

This scenario requires a strategy to standardize items across two legal entities for consolidated analytics while respecting that one entity uses Advanced Warehouse Management (WHS) and has different setup needs. The solution must maintain a single source of truth for the product definition while allowing company-specific operational configurations.

Correct Option:

B. Use Common Data Management to create the item templates for both legal entities.

Reading "Common Data Management" as Common Data Service (CDS, now Dataverse), this option uses it as the central hub for master data management. You can create a standardized product template or shared product record in CDS that contains the core attributes (ID, name, dimensions).

This master record can then be synchronized to both legal entities in Dynamics 365 Supply Chain Management. Each company can then release the product to itself and perform its own company-specific setup (e.g., assigning different item groups, costing methods, and crucially, enabling WHS for the entity that needs it).

This ensures a single product definition for analytics while allowing operational variations.

Incorrect Options:

A. Create the product record and release to each company where setup variations are completed.

This describes the technical process within D365 SCM, but it is not a master data management strategy. It lacks the governance and centralized control layer. Without CDS or a similar MDM tool, there is no enforced single source of truth, and product creation could become inconsistent.

C. Create an item in each company with different item numbers. / E. Create an item in each company with the same item number.

Both options propose creating duplicate, independent items manually in each company. Using different numbers makes consolidated reporting impossible. Using the same number is risky and not a supported master data strategy—it relies on manual discipline and does not synchronize shared attributes. Neither approach provides a governed, centralized management process.

D. Use an advanced warehouse setup for the product and release to both companies.

This is incorrect because you cannot force the same warehouse setup on both companies if only one uses Advanced WHS. The product must be configured appropriately for each legal entity's operational mode. More importantly, this is a configuration detail, not a master data management strategy.

Reference:

Microsoft's guidance on product information management emphasizes using a centralized product definition. The integration with Common Data Service for master data management enables creating products in a hub and distributing them to multiple legal entities, which is the recommended strategy for standardization and analytics.
