Topic 3: Misc. Questions Set

You have a Fabric workspace named Workspace1 that contains a notebook named Notebook1.

In Workspace1, you create a new notebook named Notebook2.

You need to ensure that you can attach Notebook2 to the same Apache Spark session as Notebook1.

What should you do?

A. Enable high concurrency for notebooks.

B. Enable dynamic allocation for the Spark pool.

C. Change the runtime version.

D. Increase the number of executors.

Explanation: To ensure that Notebook2 can attach to the same Apache Spark session as Notebook1, you need to enable high concurrency for notebooks. High concurrency allows multiple notebooks to share a Spark session, enabling them to run within the same Spark context and thus share resources like cached data, session state, and compute capabilities. This is particularly useful when you need notebooks to run in sequence or together while leveraging shared resources.

You have a Fabric workspace.

You have semi-structured data.

You need to read the data by using T-SQL, KQL, and Apache Spark. The data will only be written by using Spark.

What should you use to store the data?

A. a lakehouse

B. an eventhouse

C. a datamart

D. a warehouse

Explanation: A lakehouse is the best option for storing semi-structured data that must be read by using T-SQL, KQL, and Apache Spark. A lakehouse combines the flexibility of a data lake (which can handle semi-structured and unstructured data) with the performance features of a data warehouse. Spark writes the data to the lakehouse as Delta tables, which can then be queried with T-SQL through the lakehouse's SQL analytics endpoint, with KQL through a OneLake shortcut in a KQL database, and with Spark directly.

You are implementing a medallion architecture in a Fabric lakehouse.

You plan to create a dimension table that will contain the following columns:

• ID

• CustomerCode

• CustomerName

• CustomerAddress

• CustomerLocation

• ValidFrom

• ValidTo

You need to ensure that the table supports the analysis of historical sales data by customer location at the time of each sale.

Which type of slowly changing dimension (SCD) should you use?

A. Type 2

B. Type 0

C. Type 1

D. Type 3
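The ValidFrom and ValidTo columns point to row versioning, which is how a Type 2 dimension keeps history: every change adds a new row with its own validity window instead of overwriting the old one. The following is a minimal Python sketch of a point-in-time lookup under that assumption. The sample rows, the `location_at` helper, and the use of `None` to mark the current row are all invented for illustration and are not part of the question.

```python
from datetime import date

# Hypothetical Type 2 rows for one customer: each change adds a new row
# with its own validity window instead of overwriting the previous one.
dim_customer = [
    {"ID": 1, "CustomerCode": "C001", "CustomerLocation": "Leeds",
     "ValidFrom": date(2022, 1, 1), "ValidTo": date(2023, 6, 30)},
    {"ID": 2, "CustomerCode": "C001", "CustomerLocation": "York",
     "ValidFrom": date(2023, 7, 1), "ValidTo": None},  # None marks the current row
]


def location_at(customer_code, sale_date):
    """Return the location that was valid for the customer on sale_date."""
    for row in dim_customer:
        if row["CustomerCode"] != customer_code:
            continue
        valid_to = row["ValidTo"] or date.max  # treat an open-ended row as valid forever
        if row["ValidFrom"] <= sale_date <= valid_to:
            return row["CustomerLocation"]
    return None  # no version of the customer covers that date


print(location_at("C001", date(2022, 12, 24)))  # Leeds
print(location_at("C001", date(2024, 2, 1)))    # York
```

A Type 1 dimension would hold only the latest row, so the first lookup could not return the earlier location.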

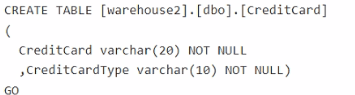

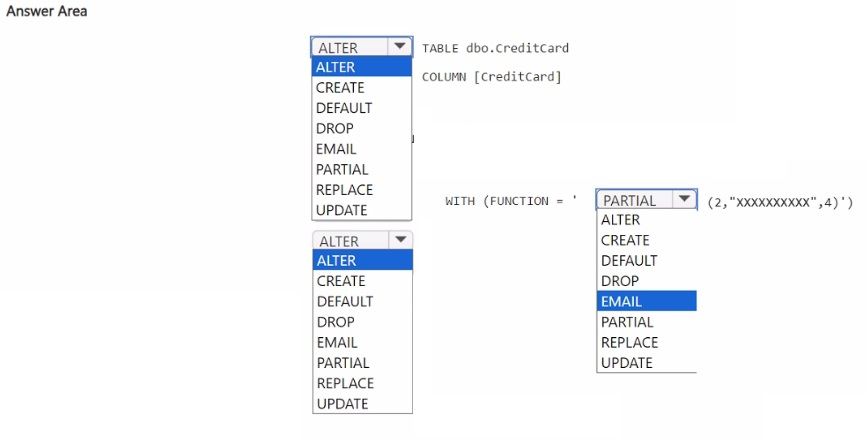

You have a Fabric workspace named Workspace1 that contains a warehouse named Warehouse2. A team of data analysts has Viewer role access to Workspace1. You create a table by running the following statement.

You need to ensure that the team can view only the first two characters and the last four characters of the Creditcard attribute.

How should you complete the statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
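Dynamic data masking in T-SQL offers a `partial(prefix, padding, suffix)` masking function that exposes the first `prefix` and last `suffix` characters and replaces the middle with a padding string. The Python sketch below only emulates that behaviour to show what a masked value would look like. The `partial_mask` helper, the `"XXXX"` padding, and the sample card number are assumptions, since the answer area is not reproduced here, and the handling of short values is a simplification.

```python
def partial_mask(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Emulate a partial mask: keep `prefix` leading and `suffix` trailing characters."""
    if len(value) <= prefix + suffix:
        # Simplification for this sketch: a value too short to mask safely
        # is replaced by the padding alone.
        return padding
    # Slice from an explicit index so that suffix=0 yields an empty tail.
    tail = value[len(value) - suffix:]
    return value[:prefix] + padding + tail


# Hypothetical card number; first two and last four characters stay visible.
print(partial_mask("4111-1111-1111-1234", 2, "XXXX", 4))  # 41XXXX1234
```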

HOTSPOT

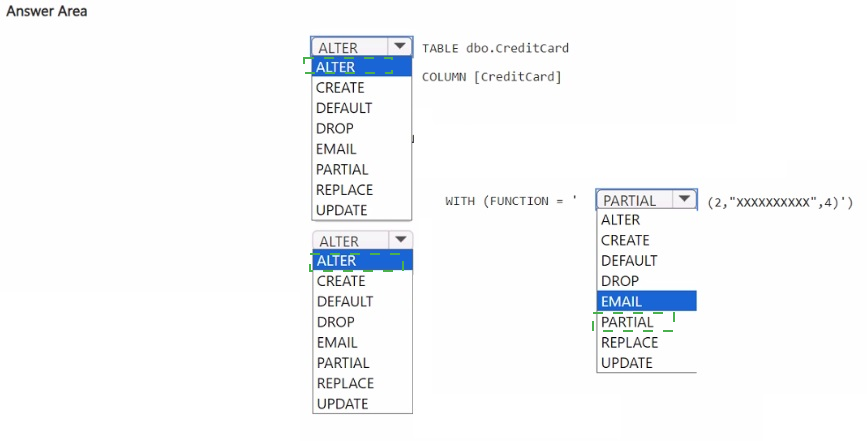

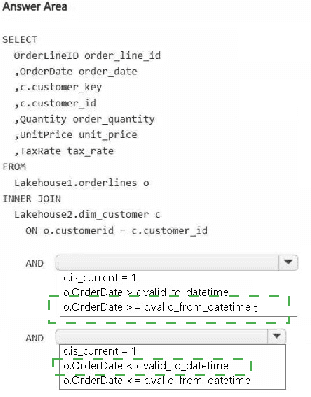

You have a Fabric workspace that contains two lakehouses named Lakehouse1 and Lakehouse2. Lakehouse1 contains staging data in a Delta table named Orderlines. Lakehouse2 contains a Type 2 slowly changing dimension (SCD) dimension table named Dim_Customer.

You need to build a query that will combine data from Orderlines and Dim_Customer to create a new fact table named Fact_Orders. The new table must meet the following requirements:

• Enable the analysis of customer orders based on historical attributes.

• Enable the analysis of customer orders based on the current attributes.

How should you complete the statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
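One way to satisfy both requirements is to store two dimension keys on each fact row: the key of the customer version that was valid on the order date, and the key of the customer's current version. The Python sketch below illustrates that idea only. The column names (`CustomerSK`, `CustomerCode`, `OrderDate`, `IsCurrent`), the sample rows, and the `build_fact_orders` helper are hypothetical, because the actual statement and table schemas are not shown.

```python
from datetime import date

# Hypothetical Type 2 dimension rows: two versions of the same customer.
dim_customer = [
    {"CustomerSK": 10, "CustomerCode": "C001", "ValidFrom": date(2022, 1, 1),
     "ValidTo": date(2023, 6, 30), "IsCurrent": False},
    {"CustomerSK": 11, "CustomerCode": "C001", "ValidFrom": date(2023, 7, 1),
     "ValidTo": date(9999, 12, 31), "IsCurrent": True},
]

# Hypothetical staging rows.
orderlines = [
    {"OrderID": 500, "CustomerCode": "C001", "OrderDate": date(2022, 9, 14)},
    {"OrderID": 501, "CustomerCode": "C001", "OrderDate": date(2024, 3, 2)},
]


def build_fact_orders(orderlines, dim_customer):
    """Attach both the historical and the current customer key to each order."""
    fact = []
    for line in orderlines:
        versions = [d for d in dim_customer
                    if d["CustomerCode"] == line["CustomerCode"]]
        # Version whose validity window contains the order date.
        historical = next(d for d in versions
                          if d["ValidFrom"] <= line["OrderDate"] <= d["ValidTo"])
        # Version flagged as the current one.
        current = next(d for d in versions if d["IsCurrent"])
        fact.append({
            "OrderID": line["OrderID"],
            "HistoricalCustomerSK": historical["CustomerSK"],
            "CurrentCustomerSK": current["CustomerSK"],
        })
    return fact


for row in build_fact_orders(orderlines, dim_customer):
    print(row)
```

Joining on the historical key answers "what did the customer look like when the order was placed", while the current key answers "what does the customer look like now".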

You have an Azure SQL database named DB1.

In a Fabric workspace, you deploy an eventstream named EventStreamDBI to stream record changes from DB1 into a lakehouse.

You discover that events are NOT being propagated to EventStreamDBI.

You need to ensure that the events are propagated to EventStreamDBI.

What should you do?

A. Create a read-only replica of DB1.

B. Create an Azure Stream Analytics job.

C. Enable Extended Events for DB1.

D. Enable change data capture (CDC) for DB1.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

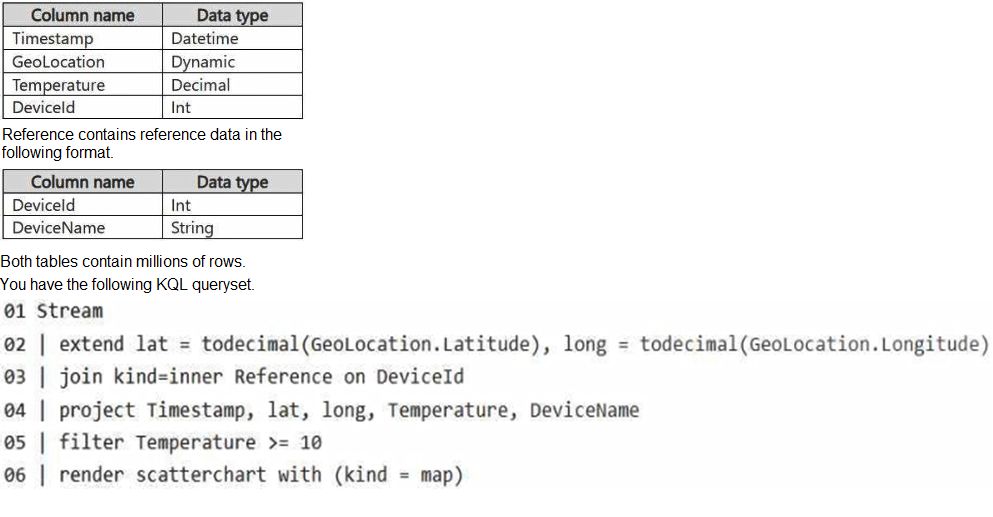

You have a KQL database that contains two tables named Stream and Reference. Stream contains streaming data in the following format.

You need to reduce how long it takes to run the KQL queryset.

Solution: You change the join type to kind=outer.

Does this meet the goal?

A. Yes

B. No

Explanation: An outer join will include unmatched rows from both tables, increasing the dataset size and processing time. It does not improve query performance.

You need to develop an orchestration solution in Fabric that will load each item one after the other. The solution must be scheduled to run every 15 minutes. Which type of item should you use?

A. warehouse

B. data pipeline

C. Dataflow Gen2 dataflow

D. notebook

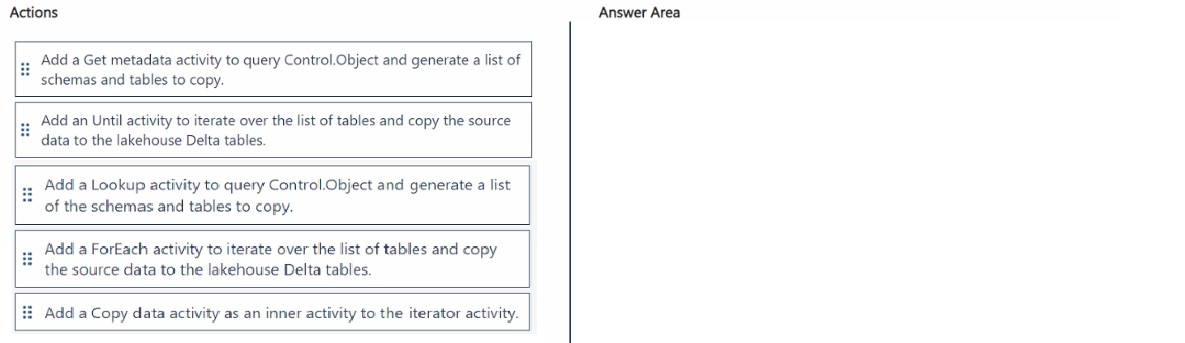

You are building a data loading pattern by using a Fabric data pipeline. The source is an Azure SQL database that contains 25 tables. The destination is a lakehouse.

In a warehouse, you create a control table named Control.Object as shown in the exhibit. (Click the Exhibit tab.)

You need to build a data pipeline that will support the dynamic ingestion of the tables listed in the control table by using a single execution.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.
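A common metadata-driven pattern for this kind of requirement is to read the control table once, loop over the returned rows, and run one parameterized copy per row. The Python sketch below mimics that flow in plain code and is not a pipeline definition. The control table columns (`SchemaName`, `TableName`), the sample rows, the destination path format, and all function names are assumptions, since the exhibit and the list of actions are not reproduced here.

```python
# Hypothetical contents of the control table (the real exhibit is not shown).
control_rows = [
    {"SchemaName": "dbo", "TableName": "Customers"},
    {"SchemaName": "dbo", "TableName": "Orders"},
    {"SchemaName": "sales", "TableName": "Invoices"},
]


def lookup_control_table():
    """Step 1: read every row of the control table in a single lookup."""
    return list(control_rows)


def copy_table(schema, table):
    """Step 3: copy one source table; here it only returns a made-up destination path."""
    return f"Tables/{schema}_{table}"


def run_pipeline():
    """Step 2: iterate over the lookup output and run one copy per row."""
    copied = []
    for row in lookup_control_table():
        copied.append(copy_table(row["SchemaName"], row["TableName"]))
    return copied


print(run_pipeline())
```

Adding a table to the control table is then enough to include it in the next run, with no change to the loop itself.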

HOTSPOT

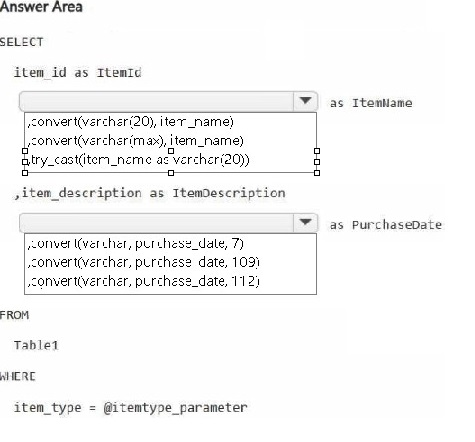

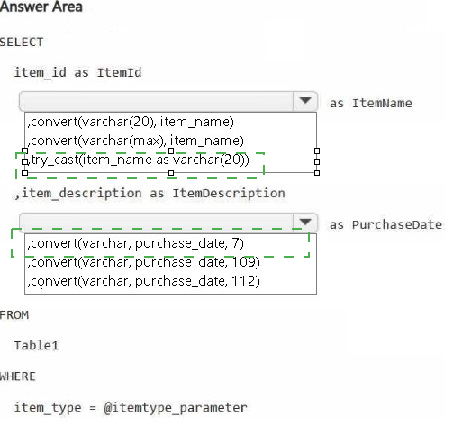

You have a Fabric workspace.

You are debugging a statement and discover the following issues:

• Sometimes, the statement fails to return all the expected rows.

• The PurchaseDate output column is NOT in the expected format of mmm dd, yy.

You need to resolve the issues. The solution must ensure that the data types of the results are retained. The results can contain blank cells.

How should you complete the statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

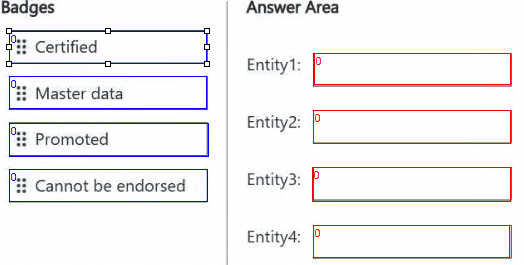

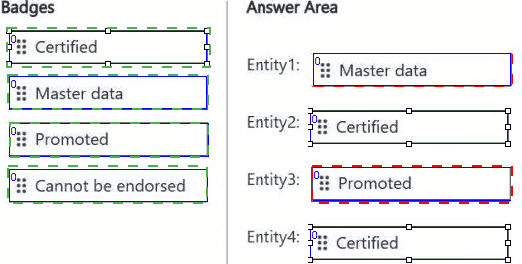

You are implementing the following data entities in a Fabric environment:

Entity1: Available in a lakehouse and contains data that will be used as a core organization entity

Entity2: Available in a semantic model and contains data that meets organizational standards

Entity3: Available in a Microsoft Power BI report and contains data that is ready for sharing and reuse

Entity4: Available in a Power BI dashboard and contains approved data for executive-level decision making

Your company requires that specific governance processes be implemented for the data.

You need to apply endorsement badges to the entities based on each entity’s use case.

Which badge should you apply to each entity? To answer, drag the appropriate badges to the correct entities. Each badge may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

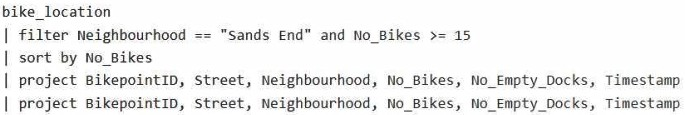

You have a Fabric eventstream that loads data into a table named Bike_Location in a KQL database. The table contains the following columns:

• BikepointID

• Street

• Neighbourhood

• No_Bikes

• No_Empty_Docks

• Timestamp

You need to apply transformation and filter logic to prepare the data for consumption. The solution must return data for a neighbourhood named Sands End when No_Bikes is at least 15. The results must be ordered by No_Bikes in ascending order.

Solution: You use the following code segment:

Does this meet the goal?

A. Yes

B. No

Explanation: This code does not meet the goal because it uses sort by without specifying an order. In KQL, sort by defaults to descending order, so asc must be specified explicitly to return the results in ascending order by No_Bikes.
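The required logic has three parts: keep rows for Sands End, keep rows where No_Bikes is at least 15, and order the result by No_Bikes ascending. The plain Python sketch below shows the expected shape of the result. The sample rows and the `sands_end_report` helper are invented for illustration, and the code segment from the question is not reproduced.

```python
# Hypothetical sample of Bike_Location rows (only the columns used here).
rows = [
    {"BikepointID": "BP1", "Neighbourhood": "Sands End", "No_Bikes": 22},
    {"BikepointID": "BP2", "Neighbourhood": "Sands End", "No_Bikes": 9},
    {"BikepointID": "BP3", "Neighbourhood": "Chelsea", "No_Bikes": 30},
    {"BikepointID": "BP4", "Neighbourhood": "Sands End", "No_Bikes": 15},
]


def sands_end_report(rows):
    """Filter to Sands End with at least 15 bikes, ordered by No_Bikes ascending."""
    selected = [r for r in rows
                if r["Neighbourhood"] == "Sands End" and r["No_Bikes"] >= 15]
    # sorted() orders ascending by default, which matches the stated requirement.
    return sorted(selected, key=lambda r: r["No_Bikes"])


for r in sands_end_report(rows):
    print(r["BikepointID"], r["No_Bikes"])
```

Note that "at least 15" is an inclusive bound, so the row with exactly 15 bikes belongs in the result.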
