Topic 1: Litware

Existing Environment Network Environment The manufacturing and research datacenters connect to the primary datacenter by using a VPN. The primary datacenter has an ExpressRoute connection that uses both Microsoft peering and private peering. The private peering connects to an Azure virtual network named HubVNet. Identity Environment Litware has a hybrid Azure Active Directory (Azure AD) deployment that uses a domain named litwareinc.com. All Azure subscriptions are associated to the litwareinc.com Azure AD tenant. Database Environment The sales department has the following database workload: An on-premises named SERVER1 hosts an instance of Microsoft SQL Server 2012 and two 1-TB databases. A logical server named SalesSrv01A contains a geo-replicated Azure SQL database named SalesSQLDb1. SalesSQLDb1 is in an elastic pool named SalesSQLDb1Pool. SalesSQLDb1 uses database firewall rules and contained database users. An application named SalesSQLDb1App1 uses SalesSQLDb1. The manufacturing office contains two on-premises SQL Server 2016 servers named SERVER2 and SERVER3. The servers are nodes in the same Always On availability group. The availability group contains a database named ManufacturingSQLDb1 Database administrators have two Azure virtual machines in HubVnet named VM1 and VM2 that run Windows Server 2019 and are used to manage all the Azure databases. Licensing Agreement Litware is a Microsoft Volume Licensing customer that has License Mobility through Software Assurance. Current Problems SalesSQLDb1 experiences performance issues that are likely due to out-of-date statistics and frequent blocking queries. Requirements Planned Changes Litware plans to implement the following changes: Implement 30 new databases in Azure, which will be used by time-sensitive manufacturing apps that have varying usage patterns. Each database will be approximately 20 GB. Create a new Azure SQL database named ResearchDB1 on a logical server named ResearchSrv01. ResearchDB1 will contain Personally Identifiable Information (PII) data. Develop an app named ResearchApp1 that will be used by the research department to populate and access ResearchDB1. Migrate ManufacturingSQLDb1 to the Azure virtual machine platform. Migrate the SERVER1 databases to the Azure SQL Database platform. Technical Requirements Litware identifies the following technical requirements: Maintenance tasks must be automated. The 30 new databases must scale automatically. The use of an on-premises infrastructure must be minimized. Azure Hybrid Use Benefits must be leveraged for Azure SQL Database deployments. All SQL Server and Azure SQL Database metrics related to CPU and storage usage and limits must be analyzed by using Azure built-in functionality. Security and Compliance Requirements Litware identifies the following security and compliance requirements: Store encryption keys in Azure Key Vault. Retain backups of the PII data for two months. Encrypt the PII data at rest, in transit, and in use. Use the principle of least privilege whenever possible. Authenticate database users by using Active Directory credentials. Protect Azure SQL Database instances by using database-level firewall rules. Ensure that all databases hosted in Azure are accessible from VM1 and VM2 without relying on public endpoints. Business Requirements Litware identifies the following business requirements: Meet an SLA of 99.99% availability for all Azure deployments. Minimize downtime during the migration of the SERVER1 databases. Use the Azure Hybrid Use Benefits when migrating workloads to Azure. Once all requirements are met, minimize costs whenever possible.

You need to provide an implementation plan to configure data retention for ResearchDB1.

The solution must meet the security and compliance requirements.

What should you include in the plan?

A.

Configure the Deleted databases settings for ResearchSrvOL

B.

Deploy and configure an Azure Backup server.

C.

Configure the Advanced Data Security settings for ResearchDBL

D.

Configure the Manage Backups settings for ResearchSrvOL

Configure the Manage Backups settings for ResearchSrvOL

https://docs.microsoft.com/en-us/azure/azure-sql/database/long-term-backup-retentionconfigure

You are evaluating the business goals.

Which feature should you use to provide customers with the required level of access based

on their service agreement?

A.

dynamic data masking

B.

Conditional Access in Azure

C.

service principals

D.

row-level security (RLS)

row-level security (RLS)

https://docs.microsoft.com/en-us/sql/relational-databases/security/row-levelsecurity?

view=sql-server-ver15

What should you use to migrate the PostgreSQL database?

A.

Azure Data Box

B.

AzCopy

C.

Azure Database Migration Service

D.

Azure Site Recovery

Azure Database Migration Service

https://docs.microsoft.com/en-us/azure/dms/dms-overview

You need to implement a solution to notify the administrators. The solution must meet the monitoring requirements.

What should you do?

A.

Create an Azure Monitor alert rule that has a static threshold and assign the alert rule to an action group.

B.

Add a diagnostic setting that logs QueryStoreRuntimeStatistics and streams to an Azure event hub.

C.

Add a diagnostic setting that logs Timeouts and streams to an Azure event hub.

D.

Create an Azure Monitor alert rule that has a dynamic threshold and assign the alert rule

to an action group.

Create an Azure Monitor alert rule that has a dynamic threshold and assign the alert rule

to an action group.

https://azure.microsoft.com/en-gb/blog/announcing-azure-monitor-aiops-alerts-withdynamic-

thresholds/

You need to design an analytical storage solution for the transactional data. The solution

must meet the sales transaction dataset requirements.

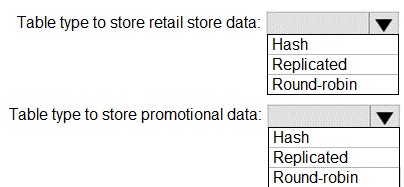

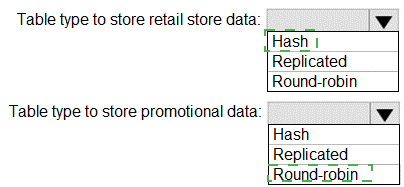

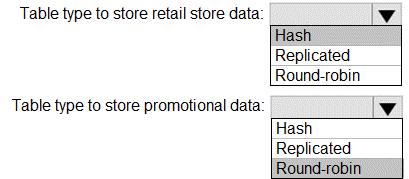

What should you include in the solution? To answer, select the appropriate options in the

answer area.

Each correct selection is worth one point.

Explanation:

Graphical user interface, text, application

Description automatically generated

Box 1: Hash

Scenario:

Ensure that queries joining and filtering sales transaction records based on product ID

complete as quickly as possible.

A hash distributed table can deliver the highest query performance for joins and

aggregations on large tables.

Box 2: Round-robin

Scenario:

You plan to create a promotional table that will contain a promotion ID. The promotion ID will be associated to a specific product. The product will be identified by a product ID. The

table will be approximately 5 GB.

A round-robin table is the most straightforward table to create and delivers fast

performance when used as a staging table for loads. These are some scenarios where you

should choose Round robin distribution:

When you cannot identify a single key to distribute your data.

If your data doesn’t frequently join with data

You need to implement the surrogate key for the retail store table. The solution must meet

the sales transaction dataset requirements

What should you create?

A.

a table that has a FOREIGN KEY constraint

B.

a table the has an IDENTITY property

C.

a user-defined SEQUENCE object

D.

a system-versioned temporal table

a table the has an IDENTITY property

Scenario: Contoso requirements for the sales transaction dataset include:

Implement a surrogate key to account for changes to the retail store addresses.

A surrogate key on a table is a column with a unique identifier for each row. The key is not

generated from the

table data. Data modelers like to create surrogate keys on their tables when they design

data warehouse models. You can use the IDENTITY property to achieve this goal simply

and effectively without affecting load performance.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-datawarehouse-

tablesidentity

You need to design a data retention solution for the Twitter feed data records. The solution

must meet the customer sentiment analytics requirements

Which Azure Storage functionality should you include in the solution?

A.

time-based retention

B.

change feed

C.

lifecycle management

D.

soft delete

lifecycle management

The lifecycle management policy lets you:

Delete blobs, blob versions, and blob snapshots at the end of their lifecycles

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-lifecycle-managementconcepts

You need to trigger an Azure Data Factory pipeline when a file arrives in an Azure Data

Lake Storage Gen2 container

Which resource provider should you enable?

A. Microsoft.EventHub

B. Microsoft.EventGrid

C. Microsoft.Sql

D. Microsoft.Automation

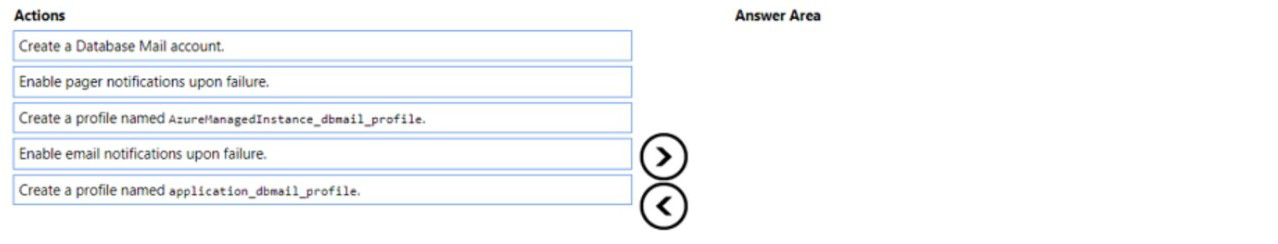

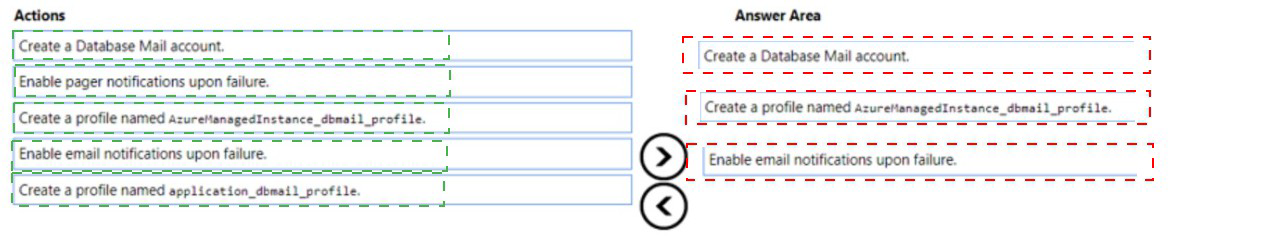

You create a new Azure SQL managed instance named SQL1 and enable Database Mail

extended stored

You need to ensure that SQ Server Agent jobs running on SQL 1 can notify when a failure

Occurs

Which three actions should you perform in sequence 7 TO answer. move the appropriate

actions from the list Of actions to answer area and arrange them in correct order.

Your on-premises network contains a server that hosts a 60-TB database named DB 1.

The network has a 10-Mbps internet connection.

You need to migrate DB 1 to Azure. The solution must minimize how long it takes to

migrate the database.

What should you use?

A. Azure Migrate

B. Data Migration Assistant (DMA)

C. Azure Data BOX

D. Azure Database Migration Service

You have an Azure SQL managed instance.

You need to gather the last execution of a query plan and its runtime statistics. The solution

must minimize the impact on currently running queries.

What should you do?

A. Generate an estimated execution plan.

B. Generate an actual execution plan.

C. Run sys.dm_exec_query_plan_scacs.

D. Generate Live Query Statistics.

Note: This question is part of a series of questions that present the same scenario.

Each question in the series contains a unique solution that might meet the stated

goals. Some question sets might have more than one correct solution, while others

might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a

result, these questions will not appear in the review screen.

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named

Table1.

You have files that are ingested and loaded into an Azure Data Lake Storage Gen2

container named container1.

You plan to insert data from the files into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1.

You need to ensure that when the source data files are loaded to container1, the DateTime

is stored as an additional column in Table1.

Solution: You use a dedicated SQL pool to create an external table that has an additional

DateTime column.

Does this meet the goal?

A. Yes

B. No

| Page 11 out of 28 Pages |

| Previous |