A DevOps engineer needs to apply a core set of security controls to an existing set of AWS accounts. The accounts are in an organization in AWS Organizations. Individual teams will

administer individual accounts by using the AdministratorAccess AWS managed policy. For

all accounts. AWS CloudTrail and AWS Config must be turned on in all available AWS

Regions. Individual account administrators must not be able to edit or delete any of the

baseline resources. However, individual account administrators must be able to edit or

delete their own CloudTrail trails and AWS Config rules.

Which solution will meet these requirements in the MOST operationally efficient way?

A. Create an AWS CloudFormation template that defines the standard account resources. Deploy the template to all accounts from the organization's management account by using CloudFormation StackSets. Set the stack policy to deny Update:Delete actions.

B. Enable AWS Control Tower. Enroll the existing accounts in AWS Control Tower. Grant the individual account administrators access to CloudTrail and AWS Config.

C. Designate an AWS Config management account. Create AWS Config recorders in all accounts by using AWS CloudFormation StackSets. Deploy AWS Config rules to the organization by using the AWS Config management account. Create a CloudTrail organization trail in the organization’s management account. Deny modification or deletion of the AWS Config recorders by using an SCP.

D. Create an AWS CloudFormation template that defines the standard account resources. Deploy the template to all accounts from the organization's management account by using Cloud Formation StackSets Create an SCP that prevents updates or deletions to CloudTrail resources or AWS Config resources unless the principal is an administrator of the organization's management account.

A company is refactoring applications to use AWS. The company identifies an internal web

application that needs to make Amazon S3 API calls in a specific AWS account.

The company wants to use its existing identity provider (IdP) auth.company.com for

authentication. The IdP supports only OpenID Connect (OIDC). A DevOps engineer needs

to secure the web application's access to the AWS account.

Which combination of steps will meet these requirements? (Select THREE.)

A. Configure AWS 1AM Identity Center. Configure an IdP. Upload the IdP metadata from the existing IdP.

B. Create an 1AM IdP by using the provider URL, audience, and signature from the existing IdP.

C. Create an 1AM role that has a policy that allows the necessary S3 actions. Configure the role's trust policy to allow the OIDC IdP to assume the role if the sts.amazon.conraud context key is appid from idp.

D. Create an 1AM role that has a policy that allows the necessary S3 actions. Configure the role's trust policy to allow the OIDC IdP to assume the role if the auth.company.com:aud context key is appid_from_idp.

E. Configure the web application lo use the AssumeRoleWith Web Identity API operation to retrieve temporary credentials. Use the temporary credentials to make the S3 API calls.

F. Configure the web application to use the GetFederationToken API operation to retrieve temporary credentials Use the temporary credentials to make the S3 API calls.

A company builds a container image in an AWS CodeBuild project by running Docker

commands. After the container image is built, the CodeBuild project uploads the container

image to an Amazon S3 bucket. The CodeBuild project has an IAM service role that has

permissions to access the S3 bucket.

A DevOps engineer needs to replace the S3 bucket with an Amazon Elastic Container

Registry (Amazon ECR) repository to store the container images.

The DevOps engineer creates an ECR private image repository in the same AWS Region of the

CodeBuild project. The DevOps engineer adjusts the IAM service role with the permissions

that are necessary to work with the new ECR repository. The DevOps engineer also places

new repository information into the docker build command and the docker push command

that are used in the buildspec.yml file.

When the CodeBuild project runs a build job, the job fails when the job tries to access the

ECR repository.

Which solution will resolve the issue of failed access to the ECR repository?

A. Update the buildspec.yml file to log in to the ECR repository by using the aws ecr getlogin- password AWS CLI command to obtain an authentication token. Update the docker login command to use the authentication token to access the ECR repository.

B. Add an environment variable of type SECRETS_MANAGER to the CodeBuild project. In

the environment variable, include the ARN of the CodeBuild project's IAM service role.

Update the buildspec.yml file to use the new environment variable to log in with the docker

login command to access the ECR repository.

C. Update the ECR repository to be a public image repository. Add an ECR repository policy that allows the IAM service role to have access.

D. Update the buildspec.yml file to use the AWS CLI to assume the IAM service role for ECR operations. Add an ECR repository policy that allows the IAM service role to have access.

Explanation:

Update the buildspec.yml file to log in to the ECR repository by using the aws ecr get-loginpassword

AWS CLI command to obtain an authentica-tion token. Update the docker login

command to use the authentication token to access the ECR repository.

This is the correct solution. The aws ecr get-login-password AWS CLI command retrieves

and displays an authentication token that can be used to log in to an ECR repository. The

docker login command can use this token as a password to authenticate with the ECR

repository. This way, the CodeBuild project can push and pullimages from the ECR

repository without any errors. For more information, see Using Amazon ECR with the AWS

CLI and get-login-password.

A company needs to implement failover for its application. The application includes an

Amazon CloudFront distribution and a public Application Load Balancer (ALB) in an AWS

Region. The company has configured the ALB as the default origin for the distribution.

After some recent application outages, the company wants a zero-second RTO. The

company deploys the application to a secondary Region in a warm standby configuration. A

DevOps engineer needs to automate the failover of the application to the secondary

Region so that HTTP GET requests meet the desired R TO.

Which solution will meet these requirements?

A. Create a second CloudFront distribution that has the secondary ALB as the default origin. Create Amazon Route 53 alias records that have a failover policy and Evaluate Target Health set to Yes for both CloudFront distributions. Update the application to use the new record set.

B. Create a new origin on the distribution for the secondary ALB. Create a new origin group. Set the original ALB as the primary origin. Configure the origin group to fail over for HTTP 5xx status codes. Update the default behavior to use the origin group.

C. Create Amazon Route 53 alias records that have a failover policy and Evaluate Target Health set to Yes for both ALBs. Set the TTL of both records to O. Update the distribution's origin to use the new record set.

D. Create a CloudFront function that detects HTTP 5xx status codes. Configure the function to return a 307 Temporary Redirect error response to the secondary ALB if the function detects 5xx status codes. Update the distribution's default behavior to send origin responses to the function.

Explanation:

To implement failover for the application to the secondary Region so that HTTP GET

requests meet the desired RTO, the DevOps engineer should use the following solution:

Create a new origin on the distribution for the secondary ALB. A CloudFront origin

is the source of the content that CloudFront delivers to viewers. By creating anew

origin for the secondary ALB, the DevOps engineer can configure CloudFront to

route traffic to the secondary Region when the primary Region is unavailable1

Create a new origin group. Set the original ALB as the primary origin. Configure

the origin group to fail over for HTTP 5xx status codes. An origin group is a logical

grouping of two origins: a primary origin and a secondary origin. By creating an

origin group, the DevOps engineer can specify which origin CloudFront should use

as a fallback when the primary origin fails. The DevOps engineer can also define

which HTTP status codes should trigger a failover from the primary origin to the

secondary origin. By setting the original ALB as the primary origin and configuring

the origin group to fail over for HTTP 5xx status codes, the DevOps engineer can

ensure that CloudFront will switch to the secondary ALB when the primary ALB

returns server errors2

Update the default behavior to use the origin group. A behavior is a set of rules that CloudFront applies when it receives requests for specific URLs or file

types.The default behavior applies to all requests that do not match any other

behaviors. By updating the default behavior to use the origin group, the DevOps

engineer can enable failover routing for all requests that are sent to the

distribution3

This solution will meet the requirements because it will automate the failover of the

application to the secondary Region with zero-second RTO. When CloudFront receives an

HTTP GET request, it will first try to route it to the primary ALB in the primary Region. If the

primary ALB is healthy and returns a successful response, CloudFront will deliver it to the

viewer. If the primary ALB is unhealthy or returns an HTTP 5xx status code, CloudFront will

automatically route the request to the secondary ALB in the secondary Region and deliver

its response to the viewer.

The other options are not correct because they either do not provide zero-second RTO or

do not work as expected. Creating a second CloudFront distribution that has the secondary

ALB as the default origin and creating Amazon Route 53 alias records that have a failover

policy is not a good option because it will introduce additional latency and complexity to the

solution. Route 53 health checks and DNS propagation can take several minutes or longer,

which means that viewers might experience delays or errors when accessing the

application during a failover event. Creating Amazon Route 53 alias records that have a

failover policy and Evaluate Target Health set to Yes for both ALBs and setting the TTL of

both records to O is not a valid option because it will not work with CloudFront distributions.

Route 53 does not support health checks for alias records that point to CloudFront

distributions, so it cannot detect if an ALB behind a distribution is healthy or not. Creating a

CloudFront function that detects HTTP 5xx status codes and returns a 307 Temporary

Redirect error response to the secondary ALB is not a valid option because it will not

provide zero-second RTO. A 307 Temporary Redirect error response tells viewers to retry

their requests with a different URL, which means that viewers will have to make an

additional request and wait for another response from CloudFront before reaching the

secondary ALB.

A company detects unusual login attempts in many of its AWS accounts. A DevOps

engineer must implement a solution that sends a notification to the company's security

team when multiple failed login attempts occur. The DevOps engineer has already created

an Amazon Simple Notification Service (Amazon SNS) topic and has subscribed the

security team to the SNS topic.

Which solution will provide the notification with the LEAST operational effort?

A. Configure AWS CloudTrail to send log management events to an Amazon CloudWatch Logs log group. Create a CloudWatch Logs metric filter to match failed ConsoleLogin events. Create a CloudWatch alarm that is based on the metric filter. Configure an alarm action to send messages to the SNS topic.

B. Configure AWS CloudTrail to send log management events to an Amazon S3 bucket. Create an Amazon Athena query that returns a failure if the query finds failed logins in the logs in the S3 bucket. Create an Amazon EventBridge rule to periodically run the query. Create a second EventBridge rule to detect when the query fails and to send a message to the SNS topic.

C. Configure AWS CloudTrail to send log data events to an Amazon CloudWatch Logs log group. Create a CloudWatch logs metric filter to match failed Consolel_ogin events. Create a CloudWatch alarm that is based on the metric filter. Configure an alarm action to send messages to the SNS topic.

D. Configure AWS CloudTrail to send log data events to an Amazon S3 bucket. Configure an Amazon S3 event notification for the s3:ObjectCreated event type. Filter the event type by ConsoleLogin failed events. Configure the event notification to forward to the SNS topic.

Explanation:

The correct answer is C. Configuring AWS CloudTrail to send log data events to an

Amazon CloudWatch Logs log group and creating a CloudWatch logs metric filter to match

failed ConsoleLogin events is the simplest and most efficient way to monitor and alert on

failed login attempts. Creating a CloudWatch alarm that is based on the metric filter and

configuring an alarm action to send messages to the SNS topic will ensure that the security

team is notified when multiple failed login attempts occur. This solution requires the least

operational effort compared to the other options.

Option A is incorrect because it involves configuring AWS CloudTrail to send log

management events instead of log data events. Log management events are used to track

changes to CloudTrail configuration, such as creating, updating, or deleting a trail. Log data

events are used to track API activity in AWS accounts, such as login attempts. Therefore,

option A will not capture the failed ConsoleLogin events.

Option B is incorrect because it involves creating an Amazon Athena query and two

Amazon EventBridge rules to monitor and alert on failed login attempts. This is a more

complex and costly solution than using CloudWatch logs and alarms. Moreover, option B

relies on the query returning a failure, which may not happen if the query is executed

successfully but does not find any failed logins.

Option D is incorrect because it involves configuring AWS CloudTrail to send log data events to an Amazon S3 bucket and configuring an Amazon S3 event notification for the

s3:ObjectCreated event type. This solution will not work because the s3:ObjectCreated

event type does not allow filtering by ConsoleLogin failed events. The event notification will

be triggered for any object created in the S3 bucket, regardless of the event type.

Therefore, option D will generate a lot of false positives and unnecessary notifications.

A company requires its developers to tag all Amazon Elastic Block Store (Amazon EBS)

volumes in an account to indicate a desired backup frequency. This requirement Includes

EBS volumes that do not require backups. The company uses custom tags named

Backup_Frequency that have values of none, dally, or weekly that correspond to the

desired backup frequency. An audit finds that developers are occasionally not tagging the

EBS volumes.

A DevOps engineer needs to ensure that all EBS volumes always have the

Backup_Frequency tag so that the company can perform backups at least weekly unless a

different value is specified.

Which solution will meet these requirements?

A. Set up AWS Config in the account. Create a custom rule that returns a compliance

failure for all Amazon EC2 resources that do not have a Backup Frequency tag applied.

Configure a remediation action that uses a custom AWS Systems Manager Automation

runbook to apply the Backup_Frequency tag with a value of weekly.

B. Set up AWS Config in the account. Use a managed rule that returns a compliance

failure for EC2::Volume resources that do not have a Backup Frequency tag applied.

Configure a remediation action that uses a custom AWS Systems Manager Automation

runbook to apply the Backup_Frequency tag with a value of weekly.

C. Turn on AWS CloudTrail in the account. Create an Amazon EventBridge rule that reacts to EBS CreateVolume events. Configure a custom AWS Systems Manager Automation runbook to apply the Backup_Frequency tag with a value of weekly. Specify the runbook as the target of the rule.

D. Turn on AWS CloudTrail in the account. Create an Amazon EventBridge rule that reacts to EBS CreateVolume events or EBS ModifyVolume events. Configure a custom AWS Systems Manager Automation runbook to apply the Backup_Frequency tag with a value of weekly. Specify the runbook as the target of the rule.

Explanation: The following are the steps that the DevOps engineer should take to ensure

that all EBS volumes always have the Backup_Frequency tag so that the company can

perform backups at least weekly unless a different value is specified:

Set up AWS Config in the account.

Use a managed rule that returns a compliance failure for EC2::Volume resources

that do not have a Backup Frequency tag applied.

Configure a remediation action that uses a custom AWS Systems Manager

Automation runbook to apply the Backup_Frequency tag with a value of weekly.

The managed ruleAWS::Config::EBSVolumesWithoutBackupTagwill return a compliance

failure for any EBS volume that does not have the Backup_Frequency tag applied. The

remediation action will then use the Systems Manager Automation runbook to apply the

Backup_Frequency tag with a value of weekly to the EBS volume.

A company uses AWS Organizations to manage its AWS accounts. The organization root

has a child OU that is named Department. The Department OU has a child OU that is

named Engineering. The default FullAWSAccess policy is attached to the root, the

Department OU. and the Engineering OU.

The company has many AWS accounts in the Engineering OU. Each account has an

administrative 1AM role with the AdmmistratorAccess 1AM policy attached. The default

FullAWSAccessPolicy is also attached to each account.

A DevOps engineer plans to remove the FullAWSAccess policy from the Department OU

The DevOps engineer will replace the policy with a policy that contains an Allow statement

for all Amazon EC2 API operations.

What will happen to the permissions of the administrative 1AM roles as a result of this

change'?

A. All API actions on all resources will be allowed

B. All API actions on EC2 resources will be allowed. All other API actions will be denied.

C. All API actions on all resources will be denied

D. All API actions on EC2 resources will be denied. All other API actions will be allowed.

A company has a mission-critical application on AWS that uses automatic scaling The

company wants the deployment lilecycle to meet the following parameters.

• The application must be deployed one instance at a time to ensure the remaining fleet

continues to serve traffic

• The application is CPU intensive and must be closely monitored

• The deployment must automatically roll back if the CPU utilization of the deployment

instance exceeds 85%.

Which solution will meet these requirements?

A. Use AWS CloudFormalion to create an AWS Step Functions state machine and Auto Scaling hfecycle hooks to move to one instance at a time into a wait state Use AWS Systems Manager automation to deploy the update to each instance and move it back into the Auto Scaling group using the heartbeat timeout

B. Use AWS CodeDeploy with Amazon EC2 Auto Scaling. Configure an alarm tied to the CPU utilization metric. Use the CodeDeployDefault OneAtAtime configuration as a deployment strategy Configure automatic rollbacks within the deployment group to roll back the deployment if the alarm thresholds are breached

C. Use AWS Elastic Beanstalk for load balancing and AWS Auto Scaling Configure an alarm tied to the CPU utilization metric Configure rolling deployments with a fixed batch size of one instance Enable enhanced health to monitor the status of the deployment and roll back based on the alarm previously created.

D. Use AWS Systems Manager to perform a blue/green deployment with Amazon EC2 Auto Scaling Configure an alarm tied to the CPU utilization metric Deploy updates one at a time Configure automatic rollbacks within the Auto Scaling group to roll back the deployment if the alarm thresholds are breached

A company uses containers for its applications The company learns that some container

Images are missing required security configurations

A DevOps engineer needs to implement a solution to create a standard base image The

solution must publish the base image weekly to the us-west-2 Region, us-east-2 Region,

and eu-central-1 Region.

Which solution will meet these requirements?

A. Create an EC2 Image Builder pipeline that uses a container recipe to build the image.

Configure the pipeline to distribute the image to an Amazon Elastic Container Registry

(AmazonECR) repository in us-west-2. Configure ECR replication from us-west-2 to useast-

2 and from us-east-2 to eu-central-1 Configure the pipeline to run weekly

B. Create an AWS CodePipeline pipeline that uses an AWS CodeBuild project to build the image Use AWS CodeOeploy to publish the image to an Amazon Elastic Container Registry (Amazon ECR) repository in us-west-2 Configure ECR replication from us-west-2 to us-east-2 and from us-east-2 to eu-central-1 Configure the pipeline to run weekly

C. Create an EC2 Image Builder pipeline that uses a container recipe to build the Image Configure the pipeline to distribute the image to Amazon Elastic Container Registry (Amazon ECR) repositories in all three Regions. Configure the pipeline to run weekly.

D. Create an AWS CodePipeline pipeline that uses an AWS CodeBuild project to build the image Use AWS CodeDeploy to publish the image to Amazon Elastic Container Registry (Amazon ECR) repositories in all three Regions. Configure the pipeline to run weekly.

A company uses AWS CodePipeline pipelines to automate releases of its application A

typical pipeline consists of three stages build, test, and deployment. The company has

been using a separate AWS CodeBuild project to run scripts for each stage. However, the

company now wants to use AWS CodeDeploy to handle the deployment stage of the

pipelines.

The company has packaged the application as an RPM package and must deploy the

application to a fleet of Amazon EC2 instances. The EC2 instances are in an EC2 Auto

Scaling group and are launched from a common AMI.

Which combination of steps should a DevOps engineer perform to meet these

requirements? (Choose two.)

A. Create a new version of the common AMI with the CodeDeploy agent installed. Update the IAM role of the EC2 instances to allow access to CodeDeploy.

B. Create a new version of the common AMI with the CodeDeploy agent installed. Create an AppSpec file that contains application deployment scripts and grants access to CodeDeploy.

C. Create an application in CodeDeploy. Configure an in-place deployment type. Specify the Auto Scaling group as the deployment target. Add a step to the CodePipeline pipeline to use EC2 Image Builder to create a new AMI. Configure CodeDeploy to deploy the newly created AMI.

D. Create an application in CodeDeploy. Configure an in-place deployment type. Specify the Auto Scaling group as the deployment target. Update the CodePipeline pipeline to use the CodeDeploy action to deploy the application.

E. Create an application in CodeDeploy. Configure an in-place deployment type. Specify the EC2 instances that are launched from the common AMI as the deployment target. Update the CodePipeline pipeline to use the CodeDeploy action to deploy the application.

A company uses Amazon EC2 as its primary compute platform. A DevOps team wants to

audit the company's EC2 instances to check whether any prohibited applications have

been installed on the EC2 instances.

Which solution will meet these requirements with the MOST operational efficiency?

A. Configure AWS Systems Manager on each instance Use AWS Systems Manager Inventory Use Systems Manager resource data sync to synchronize and store findings in an Amazon S3 bucket Create an AWS Lambda function that runs when new objects are added to the S3 bucket. Configure the Lambda function to identify prohibited applications.

B. Configure AWS Systems Manager on each instance Use Systems Manager Inventory Create AWS Config rules that monitor changes from Systems Manager Inventory to identify prohibited applications.

C. Configure AWS Systems Manager on each instance. Use Systems Manager Inventory. Filter a trail in AWS CloudTrail for Systems Manager Inventory events to identify prohibited applications.

D. Designate Amazon CloudWatch Logs as the log destination for all application instances Run an automated script across all instances to create an inventory of installed applications Configure the script to forward the results to CloudWatch Logs Create a CloudWatch alarm that uses filter patterns to search log data to identify prohibited applications.

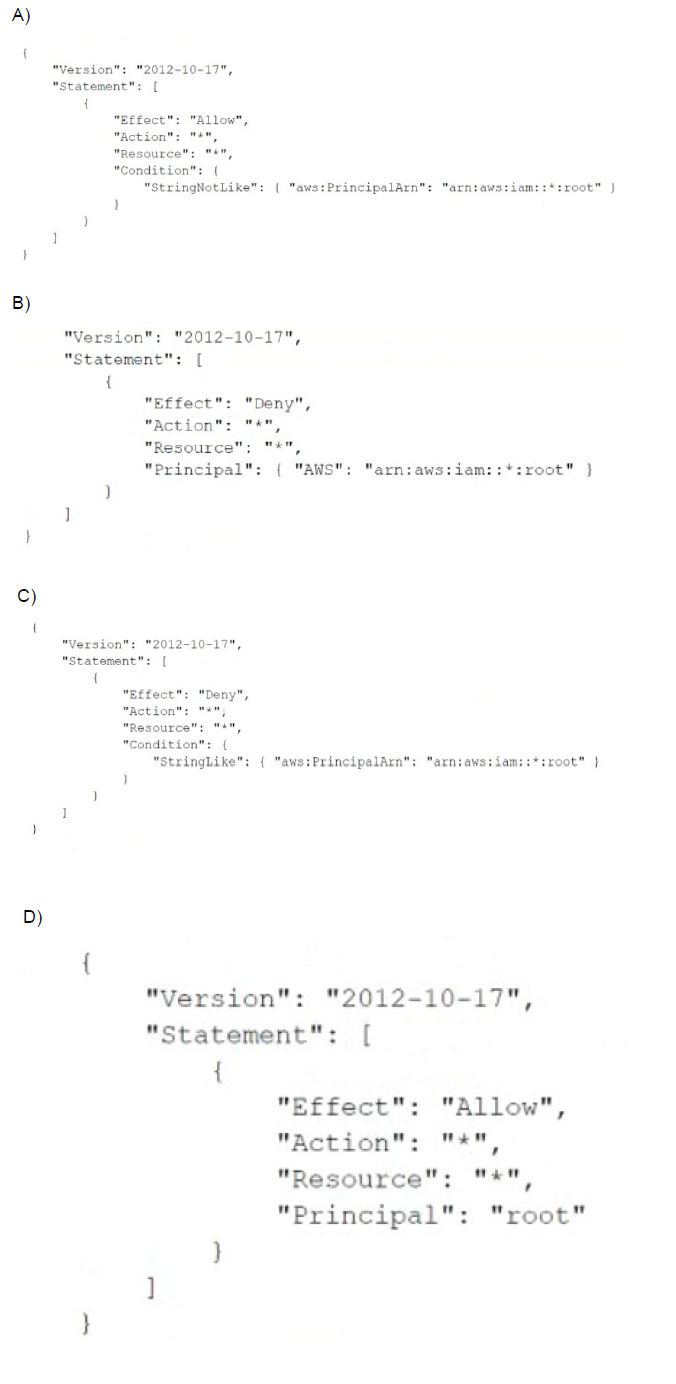

A company manages multiple AWS accounts in AWS Organizations. The company's

security policy states that AWS account root user credentials for member accounts must

not be used. The company monitors access to the root user credentials.

A recent alert shows that the root user in a member account launched an Amazon EC2

instance. A DevOps engineer must create an SCP at the organization's root level that will

prevent the root user in member accounts from making any AWS service API calls.

Which SCP will meet these requirements?

A. Option A

B. Option B

C. Option C

D. Option D

| Page 12 out of 27 Pages |

| Previous |