An Architect is documenting the technical design for a single B2C Commerce storefront. The Client has a business requirement to provide pricing that is customized to specific groups:

• 50 different pricing groups of customers

• 30 different pricing groups of employees

• 10 different pricing groups of vendors

Which items should the Architect include in the design in order to set applicable price books based on these requirements''

(Choose 2 answers)

A. - 50 customer groups for customers- 30 customer groups for employees- 10 customer groups for vendors

B. - One customer group and SO subgroups for customers- One customer group and 30 subgroups for employees- Onecustomer group and 10 subgroups for vendors

C. - One campaign and multiple promotions for each customer group

D. - One promotion and 50 campaigns for customers- One promotion and 30 campaigns for employees- One promotion and 10 campaigns for vendors

Explanation:

Option A:

✅ Valid Approach

50 customer groups for customers, 30 for employees, and 10 for vendors is a direct way to map pricing groups.

Customer Groups in Salesforce B2C Commerce allow assigning different price books to different segments.

Each group (customers, employees, vendors) gets its own set of price books.

Option B:

✅ Valid & Scalable Approach

Using one primary customer group per type (customers, employees, vendors) with subgroups is a structured approach.

Example:

Customers → 1 parent group + 50 subgroups.

Employees → 1 parent group + 30 subgroups.

Vendors → 1 parent group + 10 subgroups.

This keeps the hierarchy clean and manageable.

Why Not C & D?

❌ Option C (Campaigns & Promotions):

Campaigns and promotions are for discounts, not base pricing.

They are not the right mechanism for assigning price books.

❌ Option D (Promotions & Campaigns):

Similar issue as C—promotions and campaigns are for temporary discounts, not permanent pricing structures.

Price books (assigned via customer groups) are the correct way to handle this requirement.

During a technical review, the Client raises a need to display product pricing on the Product Detail Page (PDP) with discounted values prepromotion. The Client notes customers complained of bad user experiences in the past when they would add a product to the basket from the cached PDP and then see a higher price when they started checkout as the promotion had expired. What should the Architect suggest be implemented for this given that performance should be minimally impact?

A. Remove caching of the product page during the promotion.

B. Adjust the PDP to have a low caching period during the promotion.

C. Modify the page to vary the cache by price and promotion.

D. Create a separate template or view based on the promotion.

Explanation:

Why Option C?

✅ Vary the Cache by Price & Promotion

1. This ensures that different versions of the PDP are cached based on the applicable promotions and pricing for each customer.

2. When a promotion changes or expires, a new cached version is generated, preventing users from seeing outdated prices.

3. Minimal performance impact because the system still leverages caching—just with dynamic segmentation.

Why Not the Other Options?

❌ A. Remove caching of the product page during the promotion

Eliminating caching entirely would severely degrade performance, especially during high-traffic promotions.

❌ B. Adjust the PDP to have a low caching period during the promotion

While reducing TTL (Time-To-Live) helps, it doesn’t fully prevent race conditions where a user sees an expired promotion before the cache refreshes.

❌ D. Create a separate template or view based on the promotion

This adds unnecessary complexity (multiple templates for the same page).

Does not solve the caching issue—if the promotion expires, cached versions could still show incorrect prices.

A third party survey provider offers both an API endpoint for individual survey data and an SFTP server endpoint that can accept batch survey data. The initial implementation of the integration includes

1. Marking the order as requiring a survey before order placement

2. On the order confirmation pace, the survey form is displayed for the customer to fill

3. The data is sent to the survey provider API, and the order it marked as not requiring a survey

Later it was identified that this solution is not fit for purpose as the following issues and additional requirements were identified:

1. If the API call fails, the corresponding survey data is lost. The Business requires to avoid data loss.

2. Some customers skipped the form. The Business require sending a survey email to such customers.

3. The Order Management System (OMS) uses a non-standard XML parser it did not manage to parse orders with the survey, until the survey attribute was manually removed from the xml.

How should the Architect address the issues and requirements described above?

A. Create a custom session attribute when the survey is required. Send to the API endpoint in real-time. On failure, capture the survey data in the session and reprocess, use me session attribute to send emails for the cases when survey was skipped.

B. Create a custom object to store the survey data. Send to the API endpoint using a job. On success, remove the custom object. On failure, send the survey data with API from the next execution of the same job. Use the custom object to send emails for the cases when the survey was skipped.

C. Create a custom object when the survey is required Send to the API endpoint in real- time. On success, remove the object. On failure, capture the survey data in the custom object and later reprocess with a job. Use the custom object to send emails for the cases when survey was skipped.

D. Send the survey data to the API endpoint in real-time until the survey data is successfully captured. Instruct the OMS development team to update their XML parser, use the Order survey attribute to send emails for the cases when the survey was skipped.

Explanation:

Why Option B?

✅ Persistent Storage (Custom Object) for Survey Data

Ensures no data loss if the API fails (stores survey responses reliably).

Batch processing via a job improves reliability (retries failed submissions).

✅ Handles Skipped Surveys (Email Fallback)

The custom object tracks which customers skipped the survey, enabling email follow-ups.

✅ Avoids OMS XML Parsing Issues

Since the survey data is stored separately (not embedded in the order XML), it prevents OMS parsing failures.

Why Not Other Options?

❌ A. Session-based storage

Session data is volatile (lost if the user leaves or the session expires).

Not reliable for retries or email follow-ups.

❌ C. Hybrid (Real-time + Job)

Real-time API calls can still fail, requiring retries.

Less efficient than a pure batch/job approach (Option B).

❌ D. Force real-time retries + OMS parser update

Does not guarantee data persistence (if API keeps failing).

Depends on OMS changes (out of the Architect’s control).

Best Practice & Reference:

Use Custom Objects for transient but critical data (e.g., surveys).

Batch jobs (instead of real-time) for resilient third-party integrations.

Salesforce B2C Commerce recommends asynchronous processing for reliability.

An ecommerce site has dynamic shipping cost calculation. it allows the customers to see their potential shipping costs on the Product Detail Page before adding an item to the cart.

For this feature, shipping touts are calculatedusing the following logic:

• Set the shipping method on the Basket

• Add the item to the basket, calculate the basket total and get the shipping cost for this method

• Remove the item from the Basket to restore the original state

• The above process isrepeated for each shipping method

During the testing it was discovered that the above code violates the spi.basket.addResolveInSameResquest quota.

What should the Architect do to resolve this issue and maintain the business requirement?

A. Omit the removal of the Item and speed up the process for the customer by adding the product to the basket for them.

B. Omit the calculation of shipping cost until the customer is ready to check out and has chosen the shipping method they want to

C. Wrap each Individual step of the process its own transaction Instead of using one transaction for all steps.

D. Wrap the adding of product and shipping cost calculation in a transaction which Is then rolled back to restore the original state

Explanation:

Why Option D?

✅ Respects spi.basket.addResolveInSameRequest Quota

By wrapping the operation in a transaction and rolling it back, the system avoids multiple add/remove calls, which trigger quota violations.

The basket remains unchanged after calculation, maintaining the original state.

✅ Maintains Business Requirement (Pre-Checkout Shipping Estimates)

Customers still see real-time shipping costs on the PDP without permanently altering their cart.

✅ Optimized Performance

Single transaction reduces API calls compared to repeated add/remove cycles.

Why Not Other Options?

❌ A. Omit removal & pre-add the product

Forces items into the cart prematurely, hurting UX (customers may not want them yet).

Does not solve quota issues (still requires multiple basket modifications).

❌ B. Delay shipping calculation until checkout

Breaks the business requirement (customers expect upfront shipping estimates).

❌ C. Wrap each step in separate transactions

Still consumes quota (each add/remove counts against spi.basket limits).

Less efficient than a single rolled-back transaction (Option D).

Best Practice & Reference:

Salesforce B2C Commerce quotas limit spi.basket operations per request.

Transactions with rollback are the standard solution for temporary basket changes.

Example:

var transaction = require('dw/system/Transaction');

transaction.begin();

// Add item, calculate shipping

transaction.rollback(); // Restore original basket

During the testing of the login form, QA finds out that the first time the user can log in, but every other login attempt from another computer leads to the homepage and the basket being emptied. Developers tried to debug the issue, but when they add a breakpoint to the login action, it is not hit by the debugger. What should the Architect recommend developers to check?

A. Remove CSRF protection from Login Form Action.

B. Add remote include for the login page

C. Add disable cache page in the template ISML -

D. Check Login Form and any includedtemplates for includes that enable page caching.

Explanation:

Why Option D?

✅ Caching Issue Symptoms Match

First login works, but subsequent attempts fail → Likely cached response being served.

Breakpoint not hit → Requests may bypass the controller due to caching.

✅ Cache Misconfiguration in Login Flow

Login forms must not be cached (security & session integrity).

If templates or includes have cache="true", the system may serve stale pages, causing:

Empty baskets (session mismatch).

Skipped controller logic (debugger breakpoints ignored).

✅ Solution:

Audit ISML templates for

Ensure login-related endpoints are excluded from caching.

Why Not Other Options?

❌ A. Remove CSRF protection

Security risk (CSRF tokens are required for login).

Doesn’t address caching or basket issues.

❌ B. Add remote include

Irrelevant—remote includes are for dynamic content, not login flow fixes.

❌ C. Add disable cache in ISML

Partial solution—only affects the main template. Nested includes may still cache.

Best Practice & Reference:

Salesforce B2C Commerce caching guidelines prohibit caching:

Authentication pages (Login-Form, Account-Show).

Pages modifying session data (e.g., basket updates).

Debugging steps:

1. Check template.xml for cache settings.

2. Search for

3. Use

Northern Trail Outfitters uses an Order Management system (OMS), which creates an order tracking number for every order 24 hours after receiving it. The OMS provides only a web-service interface to get this tracking number. There is a job that updates this tracking number for exported orders, which were last modified yesterday.

Part of this jobs code looks like the following:

Based on the above description and code snippet, which coding best practice should the Architect enforce?

A. Post-processing of search results is a bad practice that needs to be corrected.

B. The transaction for updating of orders needs to be rewritten to avoid problems with transaction size.

C. Configure circuit breaker and timeout for theOMS web service call to prevent thread exhaustion.

D. Standard order import should be used instead of modifying multiple order objects with custom code.

Explanation:

Why Option C?

✅ Prevents System Instability

OMS web service calls can fail or hang, leading to thread pool exhaustion if not properly managed.

A circuit breaker (e.g., dw.system.HookMgr) stops cascading failures by:

Blocking requests after a failure threshold.

Timing out slow calls.

✅ Matches the Scenario

The job processes yesterday’s orders in bulk—unreliable OMS responses could stall the job indefinitely.

Why Not Other Options?

❌ A. Post-processing search results

While inefficient, post-filtering isn’t the critical issue here (thread safety is).

❌ B. Transaction size

The snippet doesn’t show large transactions (but even if present, thread exhaustion is the bigger risk).

❌ D. Standard order import

Custom code is necessary to fetch OMS tracking numbers (no native "import" for this).

Best Practice & Reference:

Circuit Breaker Pattern: Essential for external service calls in B2C Commerce.

Example:

var CircuitBreaker = require('dw/system/CircuitBreaker');

var breaker = new CircuitBreaker('OMS_Tracking_Service');

breaker.execute(function() { /* Call OMS */ });

Timeout: Set via dw.system.Site preferences.

The Client has requested an Architect’s help in documenting the architectural approach to a new home page. The requirements provided by the business are:

• Multiple areas of static image content, some may need text shown at well

• The content page must be Realizable

• A carousel of featured products must be shown below a banner 101191

Recommended categories will be featured based on the time of year Which two solutions would fulfil these requirements?

(Choose 2 answers)

A. Leverage B2C Commerce ContentManagement Service

B. Leverage B2C Commerce locales in Business Manager

C. Leverage B2C Commerce content slots and assets

D. Leverage B2C Commerce Page Designer with a dynamic layout.

Explanation:

Option C: Content Slots & Assets

✅ Fulfills Requirements:

Static image content with text: Content slots allow flexible placement of images, text, and rich media.

Realizable (Responsive): Assets adapt to different screen sizes.

Carousel & Dynamic Categories: Slots can be populated with product carousels or seasonal category recommendations via Business Manager rules.

Option D: Page Designer with Dynamic Layout

✅ Fulfills Requirements:

Drag-and-drop editor simplifies placement of banners, carousels, and recommended categories.

Responsive by default: Page Designer layouts auto-adapt to devices.

Time-based rules: Dynamic layouts can swap content seasonally (e.g., winter vs. summer categories).

Why Not A & B?

❌ A. Content Management Service (CMS):

Overkill for a homepage (typically used for blogs/marketing pages).

Doesn’t natively support product carousels or dynamic category rules.

❌ B. Locales in Business Manager:

Manages language/region settings, not page content or layouts.

Best Practices:

Content Slots: Use for reusable, rule-driven content (e.g., "Summer Sale Banner").

Page Designer: Ideal for structural page changes (e.g., moving the carousel position).

A developer is remotely fetching the reviews for a product. Assume that it's an HTTP GET request and caching needs to be implemented, what consideration should the developer keep in mind for building the caching strategy?

A. Cache the HTTP service request

B. Remote include with caching only the reviews

C. Use custom cache

D. Cached remote include with cache of the HTTP service

Explanation:

A. Cache the HTTP service request

B2C Commerce Service Framework does support response caching in the service definition:

cacheTTL="600"

/>

BUT… that Service-level cache:

Is global across all instances of that service definition.

Does not always cache per product unless you parameterize the cache key.

Has fewer controls compared to custom cache (e.g. invalidation, logic for different products).

So it’s possible — but less flexible.

→ Possible, but limited. Not best practice for per-product caching.

B. Remote include with caching only the reviews

A remote include calls a B2C controller and injects HTML into the page.

You can set caching on the include (e.g. iscache tags).

BUT:

Still requires generating HTML on every include call.

Doesn’t efficiently cache just the raw review data (e.g. JSON).

Introduces more moving parts vs. simply caching the service result.

→ Not ideal. We want data caching, not merely HTML caching.

C. Use custom cache

✅ Custom cache is the most precise solution.

You can store the HTTP response (JSON) keyed by product ID.

You fully control:

TTL

Cache key

Invalidation logic

Works perfectly for reviews:

Fetch once from the remote service

Serve quickly from cache for subsequent requests

Example:

var CacheMgr = require('dw/system/CacheMgr');

var reviewsCache = CacheMgr.getCache('ProductReviews');

var cachedReviews = reviewsCache.get(productId);

if (!cachedReviews) {

var result = callRemoteService(productId);

reviewsCache.put(productId, result, 3600);

} else {

var result = cachedReviews;

}

→ Recommended best practice. This gives you fine-grained, per-product caching.

D. Cached remote include with cache of the HTTP service

You could cache both the remote include AND the HTTP service call.

That’s basically duplicating caching layers:

Service cache

Remote include cache

→ Overengineering. One layer of caching (custom cache) is sufficient.

A new version of the Page Show controller is required for implementation of Page Deserter

specific look. It requires implementation of a specific, cache period for Page Designer pages, which b not currently available in the base Storefront Reference Architecture (SFRA) cache.js module.

What two steps should the Architect instruct the developer to implement?

(Choose 2 answers)

A. Create new Page.js controller in client s cartridge. Copy code from base and modify the Page-Show route to include the new cache middleware function.

B. Create new ceche,js client's cartridge. Copy cache,js from app_storefront_base and add a function for the Page Designer caching.

C. Create new Page,js controller in client's cartridge. Extend the code from base and prepend the new cache middleware function to Page-Show route.

D. Create new cache,js in client's cartridge. Extend cache,js from app_storefront_baseand adda function for the Page Designer caching.

Explanation:

The client wants a Page Designer-specific cache period, which SFRA’s base cache.js does not provide. The best practice is to extend, not copy, the base modules.

Why C is correct:

Extend the base Page.js controller instead of replacing it. Use server.prepend to add the custom cache middleware to the Page-Show route without duplicating code.

Example:

'use strict';

var server = require('server');

server.extend(module.superModule);

var cache = require('*/cartridge/scripts/middleware/cache');

server.prepend(

'Show',

cache.cachePageDesigner

);

module.exports = server.exports();

Why D is correct:

Instead of copying the entire cache.js file, extend it and add a new function for Page Designer caching. This approach is safer for upgrades and avoids duplication.

Example:

var base = require('app_storefront_base/cartridge/scripts/middleware/cache');

module.exports = {

...base,

cachePageDesigner: base.custom({

mode: 'server',

expires: 300

})

};

Why A and B are incorrect:

Copying entire controllers or scripts increases technical debt and maintenance costs. It’s better to extend existing modules to stay compatible with future SFRA upgrades.

There Is an Issue with the site when the domain Is opened from Google search results. After researching the problem. It turns out that the site returns * 404 page error when accessed with a parameter in the URL. What should the Architect recommend to fix that issue?

A. Add dynamic catch-all rule to redirect to home page.

B. Add this snippet to the aliases configuration for the domain:

C. Add this snippet to the aliases configuration for the domain

D. Add dynamic redirect if the URL contains parameter to Home Show. Add this snippet to the aliases configuration for the domain

Explanation:

Why?

The issue occurs when users click Google search results that include URL parameters (e.g., ?utm_source=google), causing a 404 error instead of loading the intended page. This happens because:

B2C Commerce may not recognize parameterized URLs by default.

The aliases configuration defines which URL patterns should resolve properly.

Solution:

1. Modify the aliases.json file in the site’s root directory to allow parameters:

{

"aliases": {

"default": {

"validUrlParams": ["utm_source", "utm_medium", "utm_campaign", "gclid"]

}

}

}

2. This ensures URLs with common marketing parameters (e.g., UTM tags) won’t trigger 404s.

Why Not Other Options?

❌ A. Dynamic catch-all redirect to homepage

Problem: Hurts SEO (loses original page context) and UX.

❌ C. Same as B, but typo in option text (invalid).

❌ D. Dynamic redirect based on parameters

Problem: Overly complex; parameters should not force redirects (they’re meant to track traffic).

Best Practice:

Always whitelist common parameters in aliases.json (e.g., UTM, gclid).

Test with Google’s URL Inspection Tool to verify fixes.

During discovery, the customer required a feature that is not inducted in the standard Storefront Reference Architecture CSFRA). In order to save budget, the Architect needs to find the quickest way to implement this feature. What is the primary resource the Architect should use to search for an existing community Implementation of the requested feature?

A. Salesforce Commerce Cloud GitHub repository

B. Salesforce Commerce Cloud Trailblazer community

C. Salesforce Trailblazer Portal

D. Salesforce B2C Commerce Documentation

Explanation:

✅ Why A is Correct:

Official place for SFRA source code and community cartridges.

Contains sample integrations and real code examples.

Best starting point for quickly finding existing implementations.

❌ Why Not B (Trailblazer Community):

Great for discussions and questions

Not a code repository

❌ Why Not C (Trailblazer Portal):

Meant for support cases and account management

Not for community code

❌ Why Not D (B2C Commerce Documentation):

Documentation explains features and APIs

Does not include downloadable community cartridges

✅ Recommended Practice:

Check Salesforce Commerce Cloud GitHub for shared code

Saves budget and development time

A client has a single site with multiple domains, locales, and languages. Afterlaunch, there is a need for the client toperform offline maintenance. The client would like to show the same maintenance page for each locale.

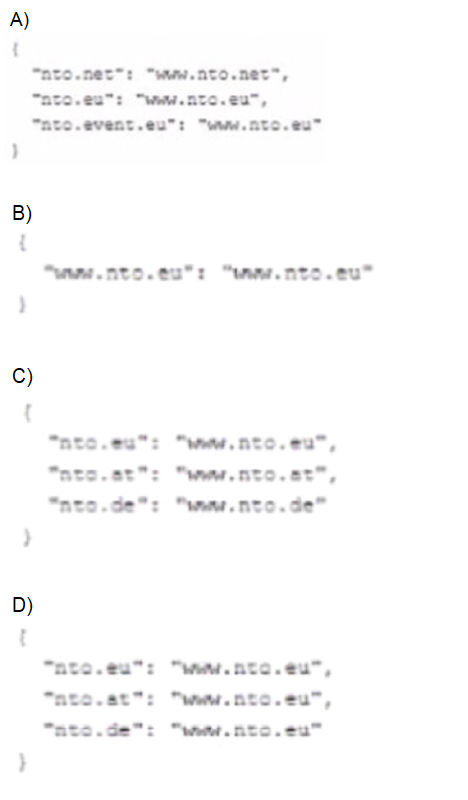

Which version of aliases,Json file below will accomplish this task?

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

✅ Option A

{

"nto.net": "www.nto.net",

"nto.eu": "www.nto.eu",

"nto.event.eu": "www.nto.eu"

}

→ This maps various domains individually to their own or to www.nto.eu. However:

This would serve different hostnames depending on domain.

It doesn’t consolidate everything under a single domain for maintenance.

Not correct.

✅ Option B

{

"www.nto.eu": "www.nto.eu"

}

→ Only maps www.nto.eu → www.nto.eu.

No redirection for other domains (nto.at, nto.de, etc.).

Would leave other domains unreachable during maintenance.

Not correct.

✅ Option C

{

"nto.eu": "www.nto.eu",

"nto.at": "www.nto.at",

"nto.de": "www.nto.de"

}

→ This keeps each country on its own domain.

So www.nto.at would still show a local version, not a universal maintenance page.

Not correct.

✅ Option D

{

"nto.eu": "www.nto.eu",

"nto.at": "www.nto.eu",

"nto.de": "www.nto.eu"

}

✅ This correctly:

Redirects all regional domains (nto.at, nto.de) to one single domain www.nto.eu.

Allows the maintenance page to exist once at www.nto.eu.

Ensures all traffic hits the same maintenance page regardless of locale.

→ This is the correct answer.

✅ Why D is Correct

During maintenance, you want:

One canonical domain for all traffic

Simplified display of the same maintenance page

Avoid maintaining separate maintenance pages for each domain

| Page 1 out of 6 Pages |