Topic 4: Mix Questions

You have an Azure subscription that contains an Azure Blob storage account named blob1. You need to configure attribute-based access control (ABAC) for blob1. Which attributes can you use in access conditions?

A. blob index tags only

B. blob index tags and container names only

C. file extensions and container names only

D. blob index tags, file extensions, and container names

Summary:

Attribute-based access control (ABAC) in Azure builds on Azure RBAC by adding conditions to role assignments. A condition can check attributes of the security principal, the resource, the request, and the environment. For Azure Blob Storage, the resource attributes available in conditions include blob index tags (key-value pairs that you define and assign to blobs) and the container name. Of the attributes listed in the options, file extension is the only one that is not exposed as a condition attribute.

Correct Option:

B. blob index tags and container names only:

Blob index tags are a native attribute mechanism for ABAC on Azure Blob Storage. You can create a role assignment with a condition that checks the value of a tag, for example:

@Resource[Microsoft.Storage/storageAccounts/blobServices/containers/blobs/tags:Project<$key_case_sensitive$>] StringEquals 'Alpha'

The container name is also a supported resource attribute, so a condition can limit access to blobs in specific containers, for example:

@Resource[Microsoft.Storage/storageAccounts/blobServices/containers:name] StringEquals 'container1'

Combining these attributes lets access depend not only on the RBAC role but also on where a blob is stored and which tags it carries, enabling fine-grained, attribute-driven access control.

Incorrect Options:

A. blob index tags only:

This option is incomplete. Blob index tags are supported, but the container name is also a resource attribute that conditions can reference, so limiting the answer to tags alone is wrong.

C. file extensions and container names only:

File extensions are not a recognized attribute for ABAC conditions in Azure Blob Storage. You could parse a blob's name in a custom application, but the native ABAC engine does not offer file extension as a condition attribute. This option also omits blob index tags.

D. blob index tags, file extensions, and container names:

This is incorrect because file extensions are not a supported condition attribute, even though blob index tags and container names are.

Reference

Microsoft Learn, "What is Azure attribute-based access control (Azure ABAC)?": https://learn.microsoft.com/en-us/azure/role-based-access-control/conditions-overview

Microsoft Learn, "Create role assignments for blobs using blob index tags (preview)": https://learn.microsoft.com/en-us/azure/storage/blobs/storage-manage-find-blobs?tabs=azure-portal#create-role-assignments-for-blobs-using-blob-index-tags-preview
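To make the tag check concrete, the following Python sketch mimics how a condition requiring the Project index tag to equal Alpha narrows read access on top of a role assignment. It is a toy model, not the Azure ABAC engine: the Blob class, the can_read and tag_condition_allows functions, and the sample blobs are hypothetical, and the real engine supports many more operators and attributes.

```python
from dataclasses import dataclass, field


@dataclass
class Blob:
    """Hypothetical model of a blob: its container, name, and index tags."""
    container: str
    name: str
    tags: dict = field(default_factory=dict)


def tag_condition_allows(blob: Blob, tag_key: str, expected: str) -> bool:
    """Mimic a StringEquals check on one blob index tag.

    Keys are looked up case-sensitively in this sketch. A blob that lacks
    the tag does not satisfy the condition.
    """
    return blob.tags.get(tag_key) == expected


def can_read(blob: Blob, has_role: bool) -> bool:
    # Access needs BOTH the RBAC role assignment and a satisfied condition;
    # the condition only narrows the role, it never grants access by itself.
    return has_role and tag_condition_allows(blob, "Project", "Alpha")


alpha = Blob("reports", "q1.pdf", {"Project": "Alpha"})
beta = Blob("reports", "q2.pdf", {"Project": "Beta"})
untagged = Blob("reports", "q3.pdf")

for blob in (alpha, beta, untagged):
    print(blob.name, can_read(blob, has_role=True))
```

With has_role=True only q1.pdf is readable; with has_role=False none of the blobs are, because the condition refines an existing role assignment rather than replacing it.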

You have an Azure subscription that contains an Azure key vault named Vault1 and a virtual machine named VM1. VM1 has the Key Vault VM extension installed.

For Vault1, you rotate the keys, secrets, and certificates.

What will be updated automatically on VM1?

A. the keys only

B. the secrets only

C. the certificates only

D. the keys and secrets only

E. the secrets and certificates only

F. the keys, secrets, and certificates

Summary:

The Key Vault virtual machine extension is designed to automatically refresh certificates stored in Azure Key Vault when they are rotated. When a new version of a certificate becomes available in the vault, the extension detects the change and pulls the updated certificate to the virtual machine. This automatic refresh does not apply to rotated keys or secrets, which require application-level logic or manual intervention to update.

Correct Option:

C. the certificates only:

The primary function of the Key Vault VM extension is to manage the lifecycle of certificates on the VM. It polls the key vault periodically.

When you rotate a certificate in Vault1 (by creating a new version), the extension detects that the current version on the VM is outdated.

It automatically retrieves the new certificate version and updates the local certificate store on VM1, ensuring services using that certificate have the latest version without manual deployment.
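The poll-compare-install cycle described above can be pictured with this toy Python model. It is an illustration only: CertificateSyncer, VaultCertificate, the version strings, and the in-memory dictionary standing in for Vault1 are hypothetical. The real extension queries Key Vault on a polling interval and installs certificates into the VM's certificate store.

```python
from dataclasses import dataclass


@dataclass
class VaultCertificate:
    """Hypothetical view of a certificate in the vault: its name and current version."""
    name: str
    version: str


class CertificateSyncer:
    """Toy model of the extension's poll-compare-install loop (not the real extension)."""

    def __init__(self) -> None:
        # Stand-in for the VM's local certificate store: name -> installed version.
        self.local_store = {}

    def poll(self, vault: dict) -> list:
        """Install any certificate whose vault version differs; return the updated names."""
        updated = []
        for name, cert in vault.items():
            if self.local_store.get(name) != cert.version:
                # A newer version exists in the vault, so "download" and install it.
                self.local_store[name] = cert.version
                updated.append(name)
        return updated


vault = {"web-tls": VaultCertificate("web-tls", "v1")}
syncer = CertificateSyncer()
print(syncer.poll(vault))  # ['web-tls']  first poll installs v1
vault["web-tls"] = VaultCertificate("web-tls", "v2")  # certificate rotated in the vault
print(syncer.poll(vault))  # ['web-tls']  next poll picks up v2
print(syncer.poll(vault))  # []           nothing changed since the last poll
```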

Incorrect Options:

A. the keys only:

Incorrect. The VM extension does not automatically manage or update cryptographic keys used by applications. The application itself must either reference the versionless key URI, which resolves to the latest key version, or handle key rotation programmatically.

B. the secrets only:

Incorrect. Similar to keys, secrets (like connection strings) are not automatically updated on the VM by this extension. Applications must be designed to fetch the latest version of a secret from the key vault at runtime.

D. the keys and secrets only:

Incorrect. This combination is not automatically managed. Neither keys nor secrets are refreshed on the VM by the extension.

E. the secrets and certificates only:

Incorrect. While certificates are updated, secrets are not. Therefore, this option is only half correct and thus invalid.

F. the keys, secrets, and certificates:

Incorrect. This is too broad. Only certificates benefit from the automatic update functionality of the Key Vault VM extension.

Reference

Microsoft Learn, "Key Vault virtual machine extension for Windows": https://learn.microsoft.com/en-us/azure/virtual-machines/extensions/key-vault-windows

The documentation describes the extension as providing automatic refresh of certificates stored in an Azure key vault. It makes no such claim for keys or general secrets.

You have an Azure subscription that contains an Azure Kubernetes Service (AKS) cluster named AKS1.

You have an Azure container registry that stores container images that were deployed by using Azure DevOps Microsoft-hosted agents.

You need to ensure that administrators can access AKS1 only from specific networks. The solution must minimize administrative effort.

What should you configure for AKS1?

A. an Application Gateway Ingress Controller (AGIC)

B. a private cluster

C. authorized IP address ranges

D. a private endpoint

Summary:

The requirement is to restrict administrative access to the AKS cluster's API server (the control plane) to specific networks, such as a corporate office's public IP range. The feature designed for this purpose is "authorized IP address ranges." It lets you define an allowlist of public IP addresses or CIDR ranges that are permitted to reach the AKS API server and blocks all other sources. It is the most direct, lowest-effort way to meet this network-level access requirement.

Correct Option:

C. authorized IP address ranges:

This feature, also known as API server authorized IP ranges, is the direct and intended solution for restricting access to the AKS API server based on source network.

You simply specify the public IP ranges of your administrators' networks in the AKS cluster configuration. This minimizes administrative effort as it is a one-time configuration managed directly within the AKS resource.

It blocks Kubernetes API traffic, such as kubectl commands, from IP addresses that are not in the allowed list, meeting the security requirement without changing the cluster's fundamental networking model.

Incorrect Options:

A. an Application Gateway Ingress Controller (AGIC):

AGIC manages application ingress traffic (HTTP/HTTPS) to your services and pods. It does not control access to the Kubernetes API server used for cluster administration. It solves a different problem related to user-facing application traffic.

B. a private cluster:

While a private cluster does restrict API server access by placing it on a private virtual network, it would block the Azure DevOps Microsoft-hosted agents (which have dynamic public IPs) from deploying images. This would break your deployment pipeline and require a self-hosted agent on a VNet, which increases administrative effort.

D. a private endpoint:

A Private Endpoint provides a private IP for a PaaS service. While an AKS private cluster uses a similar concept for its API server, configuring a standalone private endpoint is not the correct control for this specific public IP restriction requirement. The "authorized IP ranges" feature is the simpler, more direct solution.
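The allowlist check behind authorized IP ranges can be sketched in Python with the standard ipaddress module. The ranges below are hypothetical documentation addresses (RFC 5737), not values from the scenario, and the sketch only illustrates the membership test; AKS enforces the real check at the API server.

```python
import ipaddress

# Hypothetical allowlist: the public ranges administrators connect from.
AUTHORIZED_RANGES = [
    ipaddress.ip_network("203.0.113.0/24"),    # example office range
    ipaddress.ip_network("198.51.100.17/32"),  # example single admin IP
]


def api_server_allows(source_ip: str) -> bool:
    """Return True if source_ip falls inside any authorized range."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in network for network in AUTHORIZED_RANGES)


for ip in ("203.0.113.45", "198.51.100.17", "192.0.2.10"):
    print(ip, api_server_allows(ip))
```

On a real cluster the ranges are set on the cluster resource itself, for example with `az aks update --resource-group <resource-group> --name AKS1 --api-server-authorized-ip-ranges 203.0.113.0/24,198.51.100.17/32`, where the resource group is a placeholder.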

Reference:

Microsoft Learn, "Secure access to the API server using authorized IP address ranges in Azure Kubernetes Service (AKS)": https://learn.microsoft.com/en-us/azure/aks/api-server-authorized-ip-ranges

You have an Azure subscription that contains an Azure SQL database named sql1.

You plan to audit sql1.

You need to configure the audit log destination. The solution must meet the following requirements:

Support querying events by using the Kusto query language.

Minimize administrative effort.

What should you configure?

A. an event hub

B. a storage account

C. a Log Analytics workspace

Summary:

The requirement to support querying events using Kusto Query Language (KQL) directly points to using a Log Analytics workspace as the destination. Azure SQL Database auditing can send logs directly to Log Analytics, where the data is stored and can be immediately queried using KQL. This also minimizes administrative effort, as Log Analytics is a fully managed service, requiring no infrastructure management for the log storage and query engine.

Correct Option:

C. a Log Analytics workspace:

A Log Analytics workspace is the native destination for log data that needs to be queried using the Kusto Query Language (KQL). Sending the SQL audit logs here meets the primary requirement directly.

This solution minimizes administrative effort because Azure fully manages the underlying infrastructure of the Log Analytics workspace. There is no need to provision servers, manage storage capacity, or maintain the query engine.

The integration is seamless; you configure the audit policy once, and logs flow directly into the workspace, ready for analysis with KQL.
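Once audit logs reach the workspace, they can be queried with KQL from the portal or from code. The following Python sketch uses the azure-monitor-query and azure-identity packages to run a KQL query against a workspace. The table and category it filters on (AzureDiagnostics, SQLSecurityAuditEvents) reflect a common layout for SQL audit data but are an assumption to verify in your own workspace.

```python
from datetime import timedelta

# Assumption: SQL audit records land in the AzureDiagnostics table under the
# SQLSecurityAuditEvents category. Check the schema in your workspace.
AUDIT_QUERY = """
AzureDiagnostics
| where Category == "SQLSecurityAuditEvents"
| summarize events = count() by bin(TimeGenerated, 1h)
| order by TimeGenerated asc
"""


def run_audit_query(workspace_id: str, days: int = 1) -> list:
    """Run AUDIT_QUERY against a Log Analytics workspace and return the result rows."""
    # Imported here so the module loads even without the SDK installed
    # (pip install azure-monitor-query azure-identity).
    from azure.identity import DefaultAzureCredential
    from azure.monitor.query import LogsQueryClient, LogsQueryStatus

    client = LogsQueryClient(DefaultAzureCredential())
    response = client.query_workspace(workspace_id, AUDIT_QUERY, timespan=timedelta(days=days))
    if response.status != LogsQueryStatus.SUCCESS:
        raise RuntimeError(f"query did not fully succeed: {response.status}")
    return [list(row) for table in response.tables for row in table.rows]
```

Call run_audit_query with your own workspace ID after signing in (for example with az login), so that DefaultAzureCredential can find a credential.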

Incorrect Options:

A. an event hub:

An event hub is a streaming data service. It is designed for real-time ingestion and to relay data to other services (like a third-party SIEM or a storage account). It does not support querying the data directly with KQL. Using an event hub would require an additional, custom step to land the data in a queryable store, increasing administrative effort.

B. a storage account:

While a storage account is a valid destination for SQL audit logs and is useful for long-term archival, it does not support querying with KQL. To analyze data in a storage account, you would first need to export it to another service or use a different query method, which increases administrative overhead and complexity.

Reference:

Microsoft Learn, "Azure SQL Auditing": https://learn.microsoft.com/en-us/azure/azure-sql/database/auditing-overview#overview

This documentation specifies the destinations and their use cases, confirming that Log Analytics is the destination for "analyzing with Log Analytics, Power BI, or Excel."

You have 10 virtual machines on a single subnet that has a single network security group (NSG).

You need to log the network traffic to an Azure Storage account.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Install the Network Performance Monitor solution.

B. Enable Azure Network Watcher.

C. Enable diagnostic logging for the NSG.

D. Enable NSG flow logs.

E. Create an Azure Log Analytics workspace.

Summary

To log network traffic for VMs to a storage account, you need to use NSG flow logs, a feature of Azure Network Watcher. NSG flow logs capture information about IP traffic flowing through a Network Security Group. Enabling Network Watcher in the region is a prerequisite for this feature to function. Once enabled, you can configure NSG flow logs to send the captured flow data directly to an Azure Storage account.

Correct Options:

B. Enable Azure Network Watcher.

Network Watcher is a regional service. It must be enabled in the region where your virtual network and NSG reside before you can use its diagnostic features.

NSG flow logs are a core component of Network Watcher. Without this service enabled, the flow logging capability is unavailable.

Enabling it is a one-time prerequisite that involves minimal administrative effort, typically done via the portal, PowerShell, or CLI.

D. Enable NSG flow logs.

This is the specific action that starts capturing the network traffic data. NSG flow logs record metadata about IP flows (source/destination IP/port, protocol, decision to allow/deny) for all resources associated with the NSG.

During the configuration, you explicitly select a target Azure Storage account where the logs will be written.

This directly fulfills the requirement to "log the network traffic to an Azure Storage account."
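Inside each flow log record, flows are stored as comma-separated tuples. The Python sketch below parses one tuple into named fields. The field order is assumed to follow the documented version 2 tuple layout (timestamp, addresses, ports, protocol, direction, decision, then flow state and packet/byte counters); verify it against the flow log schema for the version you collect. The FlowTuple class, parse_flow_tuple function, and sample tuples are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlowTuple:
    timestamp: int    # Unix epoch seconds
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str     # T = TCP, U = UDP
    direction: str    # I = inbound, O = outbound
    decision: str     # A = allowed, D = denied
    flow_state: Optional[str] = None  # version 2 only: B = begin, C = continuing, E = end
    packets_src_to_dst: Optional[int] = None
    bytes_src_to_dst: Optional[int] = None
    packets_dst_to_src: Optional[int] = None
    bytes_dst_to_src: Optional[int] = None


def _opt_int(value: str) -> Optional[int]:
    # Counter fields can be empty, for example on a flow-begin tuple.
    return int(value) if value else None


def parse_flow_tuple(raw: str) -> FlowTuple:
    parts = raw.split(",")
    flow = FlowTuple(
        timestamp=int(parts[0]),
        src_ip=parts[1],
        dst_ip=parts[2],
        src_port=int(parts[3]),
        dst_port=int(parts[4]),
        protocol=parts[5],
        direction=parts[6],
        decision=parts[7],
    )
    if len(parts) >= 13:  # version 2 tuples add flow state and counters
        flow.flow_state = parts[8] or None
        flow.packets_src_to_dst = _opt_int(parts[9])
        flow.bytes_src_to_dst = _opt_int(parts[10])
        flow.packets_dst_to_src = _opt_int(parts[11])
        flow.bytes_dst_to_src = _opt_int(parts[12])
    return flow


sample = "1542110437,10.0.0.4,203.0.113.10,44931,443,T,O,A,E,15,8489,12,7054"
print(parse_flow_tuple(sample))
```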

Incorrect Options:

A. Install the Network Performance Monitor solution:

This is a monitoring solution within Azure Monitor that focuses on connectivity, performance, and network topology. It does not log raw network traffic flow data to a storage account and is not the correct tool for this requirement.

C. Enable diagnostic logging for the NSG:

While this sounds similar, "Diagnostic logs" for an NSG refer to a different set of logs (rule counter and event logs). These logs show which rules were applied to a VM and how many times, but they do not contain the per-flow data with source/destination IPs that "NSG flow logs" provide.

E. Create an Azure Log Analytics workspace:

A Log Analytics workspace is used for querying and analyzing log data with KQL. NSG flow logs themselves are written to a storage account; a workspace is needed only if you also enable Traffic Analytics to analyze them. Because the requirement is to log the traffic to an Azure Storage account, this step is not necessary.

Reference

Microsoft Learn, "Introduction to flow logging for network security groups": https://learn.microsoft.com/en-us/azure/network-watcher/network-watcher-nsg-flow-logging-overview

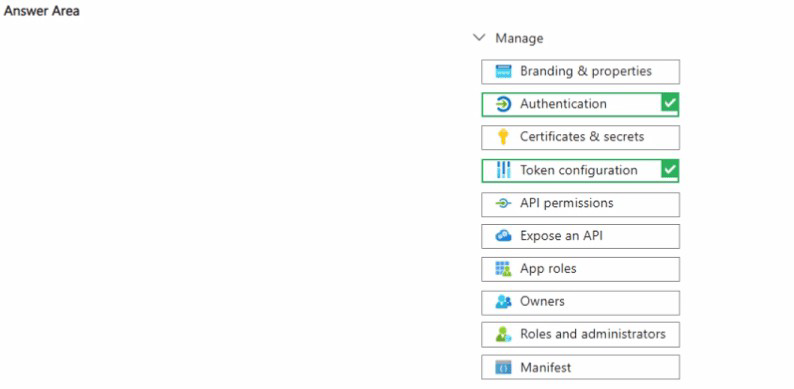

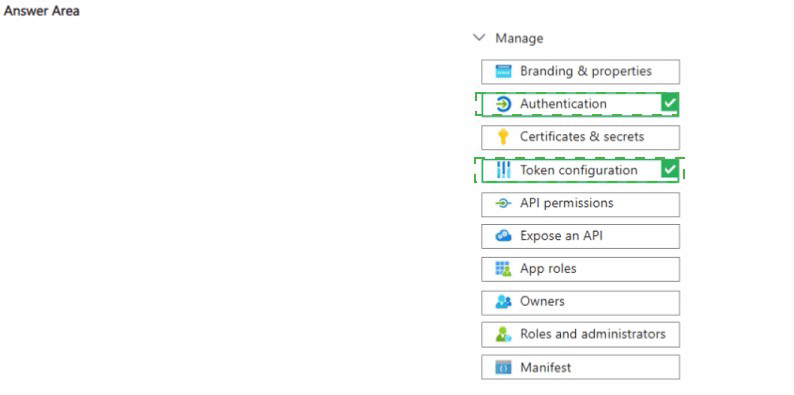

You have an Azure subscription that is linked to a Microsoft Entra tenant named contoso.com. In contoso.com, you register an app named App1. You need to perform the following tasks for App1:

• Add and configure the Mobile and desktop applications platform.

• Add the ipaddr optional claim.

Which two settings should you select for App1? To answer, select the appropriate settings in the answer area.

NOTE: Each correct selection is worth one point.

Summary:

To configure the specified settings for a Microsoft Entra app registration (App1), you need to access two different blades within its management pane. Adding and configuring the "Mobile and desktop applications" platform is performed under the Authentication section, where you define the supported account types and redirect URIs for this type of application. Adding the ipaddr optional claim is performed under Token configuration, where you can customize the claims present in the tokens issued to the application.

Correct Options:

Authentication:

This is where you configure the fundamental platform and authentication flows for your application.

To add the "Mobile and desktop applications" platform, you would go to the Authentication blade, click "+ Add a platform," and then select "Mobile and desktop applications."

This action configures the necessary settings (like redirect URIs) optimized for native client applications.

Token configuration:

This blade is specifically designed for customizing the security tokens (ID tokens, access tokens) that Microsoft Entra ID issues to your application.

To add the ipaddr claim, which provides the client IP address of the user who authenticated, you would go to Token configuration, click "+ Add optional claim," select the token type, and then choose the ipaddr claim from the list.
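The same two changes can also be expressed through Microsoft Graph, where the Mobile and desktop applications platform corresponds to the application's publicClient property and optional claims live under optionalClaims. The Python sketch below only builds the JSON body for a PATCH request to the application object (https://graph.microsoft.com/v1.0/applications/{id}); the redirect URI is an example value, and sending the request is left out. Patching these properties may replace existing values, so merge with the current configuration first.

```python
import json

# Example redirect URI for a native (public) client; replace with your app's own value.
NATIVE_REDIRECT_URI = "https://login.microsoftonline.com/common/oauth2/nativeclient"


def _ipaddr_claim() -> dict:
    """One optionalClaim entry requesting the client's IP address."""
    return {"name": "ipaddr", "source": None, "essential": False, "additionalProperties": []}


def build_app1_patch() -> dict:
    """Build a Microsoft Graph PATCH body for App1 (body only; nothing is sent).

    publicClient.redirectUris configures the Mobile and desktop applications platform;
    optionalClaims adds ipaddr to ID tokens and access tokens.
    """
    return {
        "publicClient": {"redirectUris": [NATIVE_REDIRECT_URI]},
        "optionalClaims": {
            "idToken": [_ipaddr_claim()],
            "accessToken": [_ipaddr_claim()],
        },
    }


print(json.dumps(build_app1_patch(), indent=2))
```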

Incorrect Options:

The other management categories are not used for these specific tasks:

Branding & properties:

Used for setting the app's display name, logo, and homepage URL.

Certificates & secrets:

Used for managing client secrets and certificates for authentication.

API permissions:

Used for defining what permissions the app requires to access other APIs.

Expose an API:

Used to define custom scopes and roles for an API that your app exposes.

App roles:

Used to define application-specific roles for role-based access control.

Owners:

Used for managing the list of users who can administer the app registration.

Roles and administrators:

This is a tenant-level setting for managing Entra ID roles, not app-specific configuration.

Manifest:

A JSON file representing the app's configuration; while you could edit the manifest directly to make these changes, the portal blades provide a more user-friendly interface, and "Manifest" is not the primary recommended method.

Reference:

Microsoft Learn, "Configure authentication in a sample native client application": https://learn.microsoft.com/en-us/azure/active-directory/develop/quickstart-configure-app-access-web-apis

Microsoft Learn, "Provide optional claims to your app": https://learn.microsoft.com/en-us/azure/active-directory/develop/optional-claims

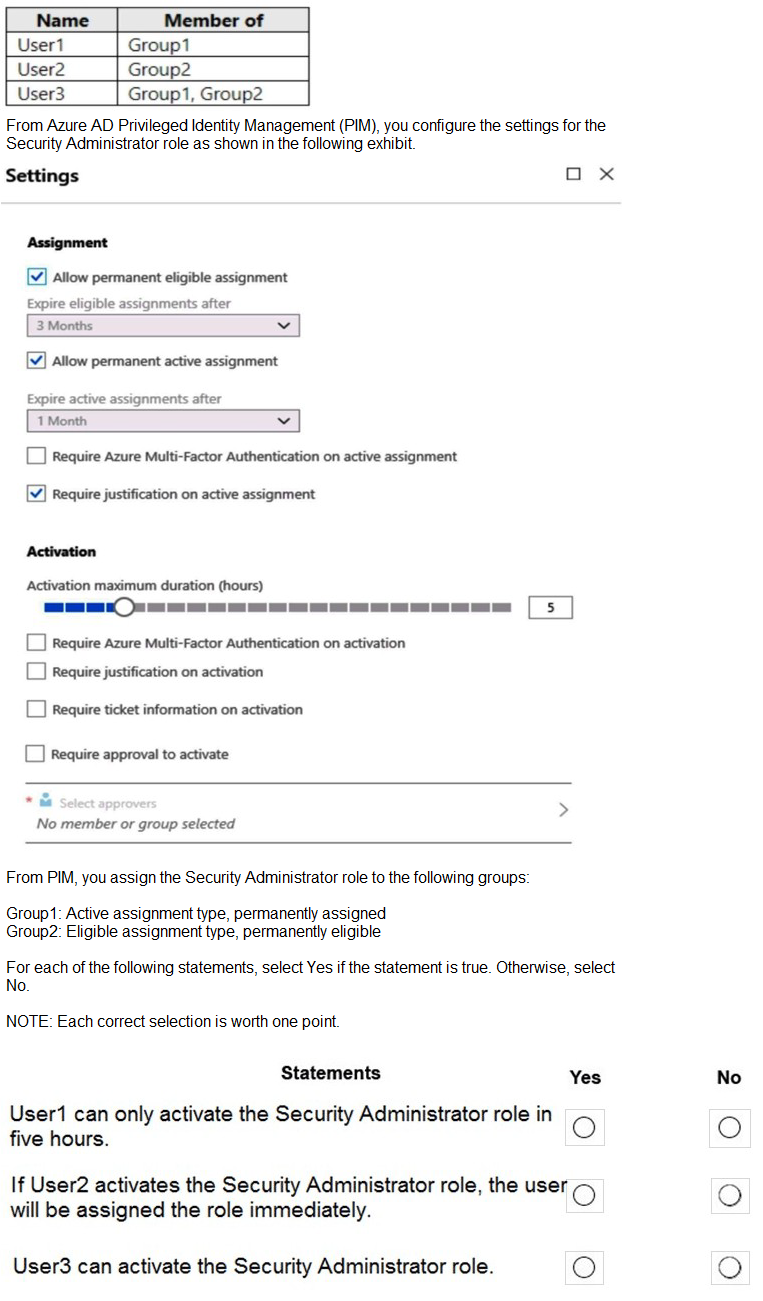

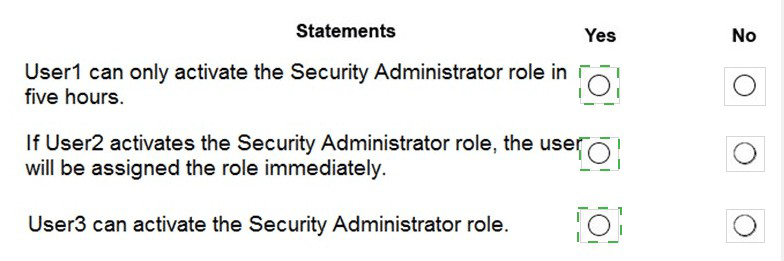

You have an Azure Active Directory (Azure AD) tenant that contains the users shown in the following table.

Summary

The scenario involves Permanent Active and Permanent Eligible assignments for the Security Administrator role in PIM. User1 is an active member, User2 is an eligible member, and User3 is both. The PIM settings define activation rules (like a 5-hour maximum duration and MFA requirements) that apply only to eligible members when they activate their role. Permanent active assignments are always active and do not require activation.

Statement 1: User1 can only activate the Security Administrator role in five hours.

Correct Selection: No

User1 is a member of Group1, which has a Permanent Active assignment to the Security Administrator role.

A permanent active assignment means the role is always active. The user does not need to, and indeed cannot, "activate" the role.

Therefore, the statement is false because activation (and its time limit) is not applicable to User1.

Statement 2: If User2 activates the Security Administrator role, the user will be assigned the role immediately.

Correct Selection: No

User2 is a member of Group2, which has a Permanent Eligible assignment.

The PIM configuration for the role shows that "Require approval to activate" is enabled, while the "Select approvers" field states "No member or group selected."

Leaving the approver list empty does not turn approval off. When approval is required and no approvers are selected, Privileged Role Administrators and Global Administrators act as the default approvers, so User2's activation request stays pending until one of them approves it. The role is therefore not assigned immediately.

Statement 3: User3 can activate the Security Administrator role.

Correct Selection: Yes

User3 is a member of both Group1 (Active) and Group2 (Eligible).

Because User3 has an Eligible assignment through Group2, they have the ability to activate the role.

The existence of an active assignment does not remove or block the eligible assignment; it simply means the user already has the role permanently active from another source. They can still choose to activate their eligible assignment if needed, which would be tracked as a separate, time-bound instance.
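The reasoning for Statements 1 and 3 comes down to which assignment types each user holds through group membership. This toy Python model encodes the memberships described above; the dictionaries and the role_status function are hypothetical and ignore PIM settings such as approval, MFA, and maximum duration.

```python
# Group assignments for the Security Administrator role, as described above.
GROUP_ASSIGNMENTS = {"Group1": "active", "Group2": "eligible"}

MEMBERSHIP = {
    "User1": {"Group1"},
    "User2": {"Group2"},
    "User3": {"Group1", "Group2"},
}


def role_status(user: str) -> dict:
    """Summarize how a user holds the role (toy model of PIM assignment types)."""
    kinds = {GROUP_ASSIGNMENTS[group] for group in MEMBERSHIP[user]}
    return {
        "always_active": "active" in kinds,   # active assignment: no activation needed
        "can_activate": "eligible" in kinds,  # activation exists only for eligible assignments
    }


for user in MEMBERSHIP:
    print(user, role_status(user))
```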

Reference

Microsoft Learn, "Assign eligibility for a privileged access group in Privileged Identity Management": https://learn.microsoft.com/en-us/azure/active-directory/privileged-identity-management/groups-assign-member-owner

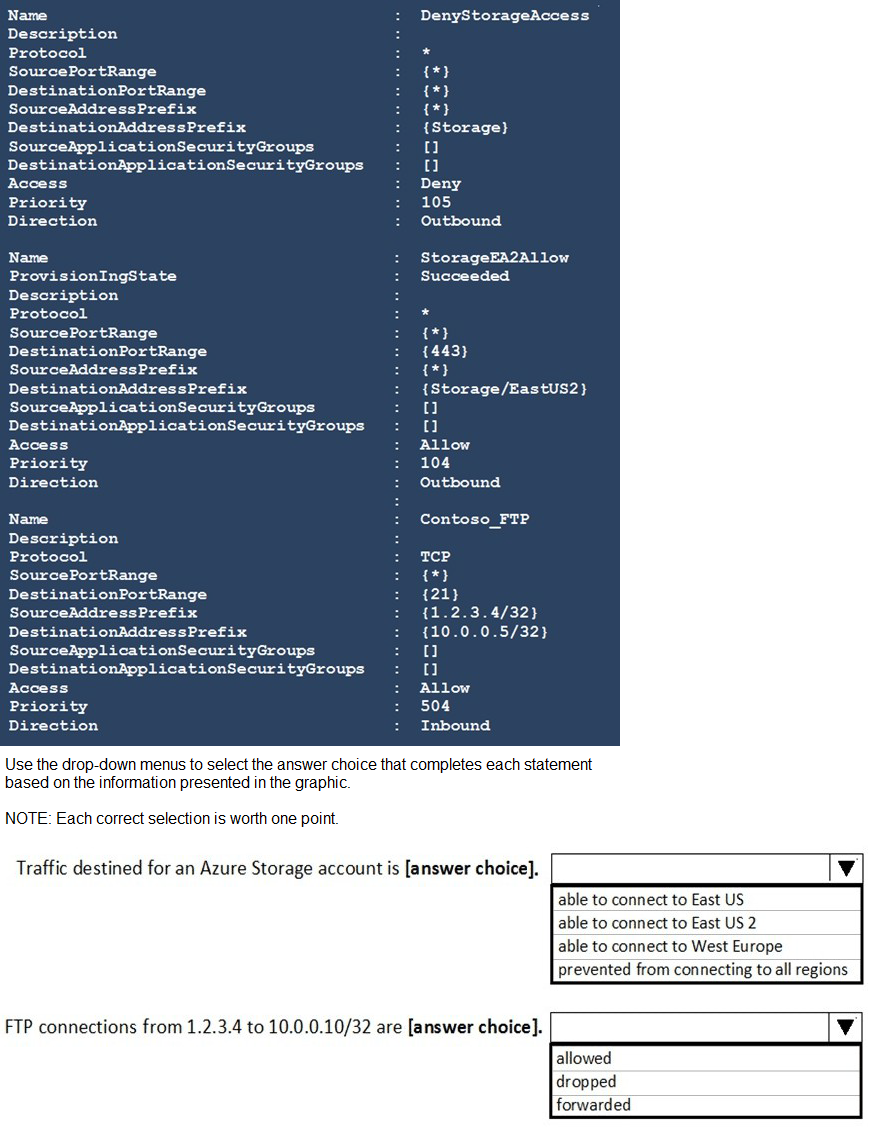

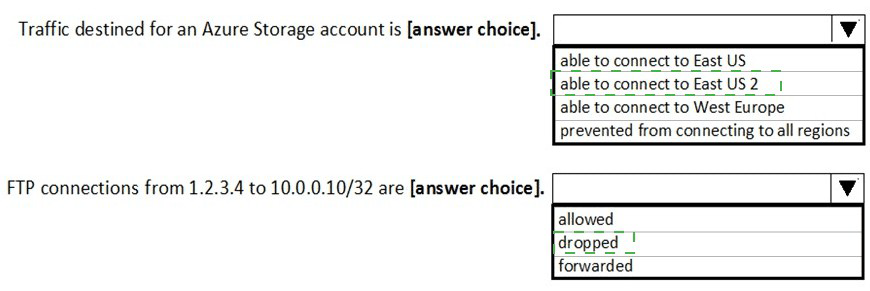

You have a network security group (NSG) bound to an Azure subnet.

You run Get-AzureRmNetworkSecurityRuleConfig and receive the output shown in the following exhibit.

Summary

NSG rules are processed in priority order, from lowest number (highest priority) to highest number (lowest priority). The first rule that matches the traffic is applied, and processing stops. For outbound storage traffic, a high-priority Deny rule blocks all storage traffic, but an even higher-priority Allow rule creates an exception for a specific region. For inbound FTP traffic, the explicit Allow rule only applies to a specific destination IP, and a connection to a different IP does not match any rule, resulting in the default behavior.

Statement 1: Traffic destined for an Azure Storage account is...

Correct Selection: able to connect to East US 2

Analysis: There are two key outbound rules for storage:

StorageEA2Allow (Priority 104 - Allow): This rule explicitly allows outbound traffic on port 443 to the Storage/EastUS2 service tag.

DenyStorageAccess (Priority 105 - Deny): This rule denies all outbound traffic to the broader Storage service tag.

Because the Allow rule (104) has a higher priority (lower number) than the Deny rule (105), traffic destined for storage in East US 2 is permitted. Traffic destined for storage in any other region (like East US or West Europe) will match the Deny rule (105) and be blocked.

Statement 2: FTP connections from 1.2.3.4 to 10.0.0.10/32 are...

Correct Selection: dropped

Analysis: There is one inbound FTP rule:

Contoso_FTP (Priority 504 - Allow): This rule allows TCP traffic from source IP 1.2.3.4/32 to destination IP 10.0.0.5/32 on port 21 (FTP).

The traffic in question is from 1.2.3.4 to 10.0.0.10. This traffic does not match the Contoso_FTP rule because the destination IP (10.0.0.10) is different from the rule's destination IP (10.0.0.5).

Since this traffic does not match any custom Allow rule, it is evaluated against the default NSG security rules. The source 1.2.3.4 is a public address, so it does not match the default AllowVnetInBound (65000) or AllowAzureLoadBalancerInBound (65001) rules, and the catch-all DenyAllInBound rule (priority 65500) drops this FTP connection attempt.

Reference

Microsoft Learn, "How network security groups filter network traffic": https://learn.microsoft.com/en-us/azure/virtual-network/network-security-group-how-it-works
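The first-match evaluation described in this question can be reproduced with a small Python model. It is a simplified sketch: the Rule class and evaluate function are hypothetical, service tags are matched by name only (the regional tag is written Storage.EastUS2), only one default rule is included, and real NSGs also match on protocol and source port.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Rule:
    name: str
    priority: int      # lower number = evaluated first
    direction: str     # "Inbound" or "Outbound"
    access: str        # "Allow" or "Deny"
    destination: str   # CIDR prefix, service tag, or "*"
    port: str          # destination port as a string, or "*"
    source: str = "*"  # CIDR prefix or "*"


def _is_ip(text: str) -> bool:
    try:
        ipaddress.ip_address(text)
        return True
    except ValueError:
        return False


def address_matches(rule_value: str, value: str) -> bool:
    """Match an IP against a CIDR prefix, or a service tag name against a tag."""
    if rule_value == "*":
        return True
    if _is_ip(value):
        try:
            return ipaddress.ip_address(value) in ipaddress.ip_network(rule_value)
        except ValueError:  # rule_value is a service tag, which never matches a raw IP here
            return False
    # Simplified tag matching: "Storage" also covers regional tags such as "Storage.EastUS2".
    return value == rule_value or value.startswith(rule_value + ".")


def evaluate(rules, direction: str, source: str, destination: str, port: int) -> Tuple[str, Optional[str]]:
    """Return (rule name, access) for the first matching rule in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if (rule.direction == direction
                and address_matches(rule.source, source)
                and address_matches(rule.destination, destination)
                and rule.port in ("*", str(port))):
            return rule.name, rule.access
    return "no match", None  # real NSGs always have default rules, so this is not reached there


RULES = [
    Rule("StorageEA2Allow", 104, "Outbound", "Allow", "Storage.EastUS2", "443"),
    Rule("DenyStorageAccess", 105, "Outbound", "Deny", "Storage", "*"),
    Rule("Contoso_FTP", 504, "Inbound", "Allow", "10.0.0.5/32", "21", source="1.2.3.4/32"),
    Rule("DenyAllInBound", 65500, "Inbound", "Deny", "*", "*"),  # default rule; others omitted
]

print(evaluate(RULES, "Outbound", "10.0.0.4", "Storage.EastUS2", 443))
print(evaluate(RULES, "Outbound", "10.0.0.4", "Storage.WestEurope", 443))
print(evaluate(RULES, "Inbound", "1.2.3.4", "10.0.0.10", 21))
```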

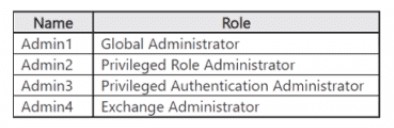

You have a Microsoft Entra tenant that uses Microsoft Entra Permissions Management and contains the accounts shown in the following table:

Which accounts will be listed as assigned to highly privileged roles on the Azure AD insights tab in the Entra Permissions Management portal?

A. Admin1 only

B. Admin2 and Admin3 only

C. Admin2 and Admin4 only

D. Admin1, Admin2, and Admin3 only

E. Admin2, Admin3, and Admin4 only

F. Admin1, Admin2, Admin3, and Admin4

Summary

Microsoft Entra Permissions Management categorizes Microsoft Entra roles into tiers based on their level of privilege. The "highly privileged" tier includes roles that have the most far-reaching and powerful permissions across the tenant. The Global Administrator, Privileged Role Administrator, and Privileged Authentication Administrator roles are all classified within this highest tier of privilege due to their ability to manage other roles and control critical authentication policies.

Correct Option:

D. Admin1, Admin2, and Admin3 only

Admin1 (Global Administrator):

This is the highest-privileged role in Microsoft Entra ID, with unrestricted access to manage all aspects of the tenant. It is definitively classified as highly privileged.

Admin2 (Privileged Role Administrator):

This role has permissions to manage role assignments in Microsoft Entra ID, including elevating other users to privileged roles. This ability to delegate administrative access makes it a highly privileged role.

Admin3 (Privileged Authentication Administrator):

This role allows a user to manage authentication methods for all users, including resetting passwords and MFA settings for highly privileged administrators. This control over authentication security places it in the highly privileged tier.

Incorrect Options:

A, B, C, E:

These options are incomplete as they exclude one or more of the correctly identified highly privileged roles (Admin1, Admin2, Admin3).

F. Admin1, Admin2, Admin3, and Admin4:

This is incorrect because it includes Admin4 (Exchange Administrator). The Exchange Administrator role is a service-specific administrator role. While powerful within Exchange Online, it does not have the tenant-wide, cross-service impact that defines the "highly privileged" tier in Permissions Management.

Reference:

Microsoft Learn, "Microsoft Entra built-in roles - Permissions Management": https://learn.microsoft.com/en-us/azure/active-directory/roles/permissions-management-overview#microsoft-entra-built-in-roles

This documentation outlines the role tiers in Permissions Management, explicitly listing Global Administrator, Privileged Role Administrator, and Privileged Authentication Administrator among the roles classified with high privilege.

You have an Azure Active Directory (Azure AD) tenant named contoso.com.

You need to configure diagnostic settings for contoso.com. The solution must meet the following requirements:

• Retain logs for two years.

• Query logs by using the Kusto query language.

• Minimize administrative effort.

Where should you store the logs?

A. an Azure Log Analytics workspace

B. an Azure event hub

C. an Azure Storage account

Summary:

The requirement to query logs using Kusto Query Language (KQL) directly points to using a Log Analytics workspace as the destination. While an Azure Storage account can meet the two-year retention requirement, it does not support native KQL querying; data in storage would need to be exported to another service for analysis. A Log Analytics workspace natively supports KQL and can be configured for long-term retention, minimizing effort by providing a single, managed service for both querying and retention.

Correct Option:

A. an Azure Log Analytics workspace

A Log Analytics workspace is the native destination for log data that needs to be queried using Kusto Query Language (KQL). This directly fulfills the primary technical requirement.

It minimizes administrative effort because it is a fully managed service. You can configure the two-year retention period directly within the workspace's data retention settings, requiring no further infrastructure management.

Using a single service (Log Analytics) for both querying and long-term retention is more efficient than a multi-step architecture involving storage and another service for analysis.
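The retention part of the requirement is a simple range check. The Python sketch below assumes a workspace retention setting that accepts 30 to 730 days, the commonly documented range for interactive retention; confirm the current limits, since longer periods are available through long-term retention options. The constants and function names are hypothetical.

```python
# Assumption: interactive retention for a workspace accepts 30 to 730 days.
MIN_RETENTION_DAYS = 30
MAX_INTERACTIVE_RETENTION_DAYS = 730


def retention_days_for(years: int) -> int:
    """Convert a retention requirement in years to days, using 365-day years."""
    return years * 365


def fits_interactive_retention(days: int) -> bool:
    """True if the requested retention lies within the assumed setting range."""
    return MIN_RETENTION_DAYS <= days <= MAX_INTERACTIVE_RETENTION_DAYS


required = retention_days_for(2)
print(required, fits_interactive_retention(required))  # 730 True
```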

Incorrect Options:

B. an Azure event hub:

An event hub is a streaming data ingestion service designed for real-time processing and for relaying data to other systems. It retains events only for a short period (days, not years) and does not support querying with KQL, so it fails both the retention and the query requirements.

C. an Azure Storage account:

While an Azure Storage account is excellent for long-term, cost-effective archival and can easily meet the two-year retention requirement, it does not support querying the data with KQL. To analyze logs in a storage account, you would need to export them to a Log Analytics workspace or use another method, which increases administrative effort and complexity.

Reference:

Microsoft Learn, "Azure Active Directory activity logs in Azure Monitor": https://learn.microsoft.com/en-us/azure/active-directory/reports-monitoring/concept-activity-logs-azure-monitor#overview

This documentation outlines the integration of Azure AD logs with Azure Monitor, highlighting Log Analytics as the destination for querying with KQL.

You have an Azure subscription that contains a user named User1. You need to ensure that User1 can perform the following tasks:

• Create groups.

• Create access reviews for role-assignable groups.

• Assign Azure AD roles to groups.

The solution must use the principle of least privilege. Which role should you assign to User1?

A. Groups administrator

B. Authentication administrator

C. Identity Governance Administrator

D. Privileged role administrator

Summary:

The required tasks involve three distinct high-level permissions: creating any type of group, creating access reviews (a feature of Identity Governance), and assigning Azure AD roles (the highest level of privilege management). The Groups Administrator role can create groups but cannot manage access reviews or assign roles. The Identity Governance Administrator can manage access reviews but cannot create role-assignable groups or assign roles. Only the Privileged Role Administrator has the necessary permissions to assign Azure AD roles and create role-assignable groups, and it can also manage access reviews for those groups.

Correct Option:

D. Privileged role administrator:

Assign Azure AD roles to groups:

This is the core permission of the Privileged Role Administrator role. No other role with lesser privilege can perform this task.

Create access reviews for role-assignable groups:

The Privileged Role Administrator can manage all aspects of PIM, including creating access reviews for privileged objects like role-assignable groups.

Create groups:

While not its primary function, the Privileged Role Administrator can create groups, including the role-assignable groups required for this scenario. Combining this role with the Groups Administrator is unnecessary as it already encompasses the required permissions, and using a single, more powerful role is justified here because the tasks require the highest level of privilege.

Incorrect Options:

A. Groups administrator:

This role can create groups and manage their settings. However, it cannot create role-assignable groups (which is a prerequisite for assigning Azure AD roles to them) and it cannot assign Azure AD roles or manage access reviews for privileged access.

B. Authentication administrator:

This role is focused on helping users reset their passwords and manage MFA methods. It has no permissions related to group management, role assignment, or access reviews.

C. Identity Governance Administrator:

This role can create and manage access reviews and manage other governance features like entitlement management. However, it cannot create role-assignable groups or assign Azure AD roles to them.
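Choosing a role under least privilege amounts to finding the roles whose capabilities cover every required task and then preferring the least powerful one. The Python sketch below encodes only the capabilities summarized in this explanation (a simplification, not the full permission lists from the built-in roles reference); the task strings and the roles_covering function are hypothetical.

```python
# Tasks from the scenario.
REQUIRED_TASKS = {
    "create groups",
    "create access reviews for role-assignable groups",
    "assign roles to groups",
}

# Simplified capabilities as summarized above. Identity Governance Administrator
# reviews are modeled without role-assignable groups, per this explanation.
ROLE_CAPABILITIES = {
    "Groups Administrator": {"create groups"},
    "Authentication Administrator": {"reset passwords", "manage MFA methods"},
    "Identity Governance Administrator": {"create access reviews", "manage entitlement management"},
    "Privileged Role Administrator": {
        "create groups",
        "create access reviews for role-assignable groups",
        "assign roles to groups",
    },
}


def roles_covering(required: set, capabilities: dict) -> list:
    """Return every role whose capabilities include all required tasks, sorted by name."""
    return sorted(role for role, caps in capabilities.items() if required <= caps)


print(roles_covering(REQUIRED_TASKS, ROLE_CAPABILITIES))  # ['Privileged Role Administrator']
```

Only one role covers all three tasks, so it is also the least privileged role that satisfies the requirement; if only "create groups" were needed, Groups Administrator would be the smaller choice.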

Reference:

Microsoft Learn, "Microsoft Entra built-in roles": https://learn.microsoft.com/en-us/azure/active-directory/roles/permissions-reference

Compare the permissions of Privileged Role Administrator, which can create role assignments, with those of Groups Administrator, which cannot.

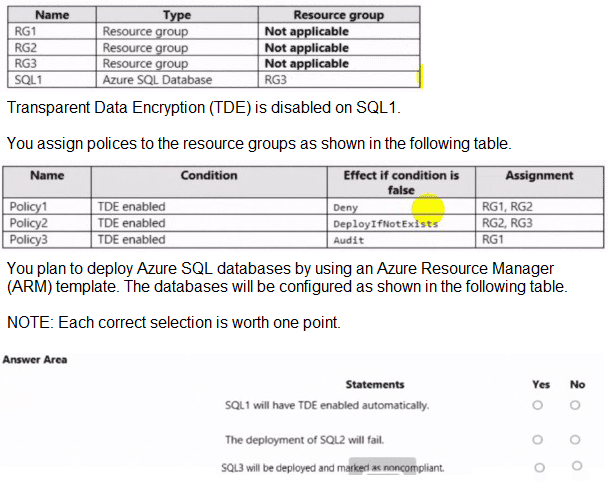

You have an Azure subscription that contains the resources shown in the following table.

Summary

Azure Policy assignments are inherited by resources within the assigned scope (resource group). The Deny effect prevents resource creation/modification that violates the policy. The DeployIfNotExists effect remediates non-compliant resources by deploying the required configuration after creation. The Audit effect only reports on compliance without blocking or fixing resources.

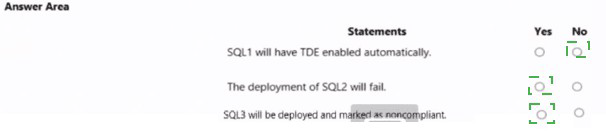

Statement 1: SQL1 will have TDE enabled automatically.

Correct Selection: No

Analysis: SQL1 already exists in RG3, and RG3 has Policy2 (DeployIfNotExists) assigned to it.

Policy2 checks whether TDE is enabled. Because TDE is disabled on SQL1, the next compliance evaluation marks SQL1 as non-compliant.

A DeployIfNotExists policy runs its deployment automatically only when a matching resource is created or updated. For resources that already exist, evaluation reports non-compliance but takes no action; you must create a remediation task to deploy the configuration.

Therefore, SQL1 will not have TDE enabled automatically. TDE is enabled only after a remediation task runs.

Statement 2: The deployment of SQL2 will fail.

Correct Selection: Yes

Analysis: SQL2 is planned for deployment in RG1. RG1 has Policy1 (Deny) assigned to it.

Policy1's condition requires TDE to be enabled. If SQL2 is deployed with TDE disabled, it will violate this policy.

A policy with a Deny effect prevents the creation or update of any resource that does not comply. Therefore, the deployment of SQL2 (with TDE disabled) will be blocked and will fail.
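Azure Policy evaluates Deny against the create request before the resource exists, while DeployIfNotExists runs only after the resource provider reports a successful create or update. The toy Python model below captures that ordering for a database whose policies require TDE; the Assignment class, the create_database function, and the returned strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Assignment:
    name: str
    effect: str  # "Deny" or "DeployIfNotExists" in this sketch


def create_database(tde_enabled: bool, assignments: list) -> str:
    """Toy model of a create request for a SQL database whose policies require TDE.

    Deny is checked against the request first, so a violating request is
    rejected and nothing is created. DeployIfNotExists acts only after a
    successful create, as a follow-up deployment.
    """
    if not tde_enabled and any(a.effect == "Deny" for a in assignments):
        return "rejected by Deny"
    if not tde_enabled and any(a.effect == "DeployIfNotExists" for a in assignments):
        return "created; follow-up deployment enables TDE"
    return "created"


rg1 = [Assignment("Policy1", "Deny")]
print(create_database(tde_enabled=False, assignments=rg1))  # SQL2 in RG1: rejected by Deny
```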

Statement 3: SQL3 will be deployed and marked as noncompliant.

Correct Selection: No

Analysis: SQL3 is planned for deployment in RG2. RG2 has both Policy1 (Deny) and Policy2 (DeployIfNotExists) assigned.

Azure Policy evaluates a Deny effect against the create request, before the resource exists. DeployIfNotExists is evaluated only after the resource provider reports a successful create or update, so it cannot override a Deny.

Because SQL3 is being deployed with TDE disabled, it violates Policy1 and the request is rejected, just as for SQL2. SQL3 is never created, so it cannot be marked as non-compliant.

Reference:

Microsoft Learn, "Understand Azure Policy effects: Deny": https://learn.microsoft.com/en-us/azure/governance/policy/concepts/effects#deny

Microsoft Learn, "Understand Azure Policy effects: DeployIfNotExists": https://learn.microsoft.com/en-us/azure/governance/policy/concepts/effects#deployifnotexists
