Topic 5: Describe features of conversational AI workloads on Azure

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Explanation:

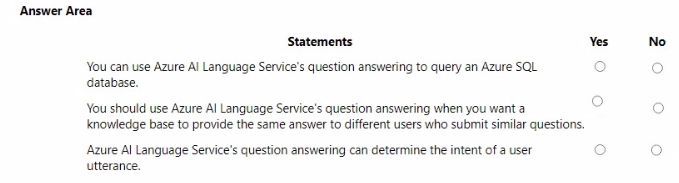

This question tests the specific capabilities and intended use cases of the Azure AI Language service's question answering feature (formerly QnA Maker). It requires distinguishing its function as a knowledge-base query tool from other natural language processing tasks like intent recognition or direct database querying.

Correct Selections and Explanations:

Statement 1:

You can use Azure AI Language Service's question answering to query an Azure SQL database.

Selection: No

Correct Option Reasoning:

The question answering feature is designed to query a knowledge base built from documents, URLs, or manual entries. It is not a tool for running SQL queries or directly interrogating structured databases like Azure SQL. A different approach, such as custom logic or Azure OpenAI, would be needed for that.

Statement 2:

You should use Azure AI Language Service's question answering when you want a knowledge base to provide the same answer to different users who submit similar questions.

Selection: Yes

Correct Option Reasoning:

This is the core strength of question answering. It extracts consistent Q&A pairs from source content to build a knowledge base. It uses natural language understanding to match varied user questions to the correct, pre-defined answer, ensuring consistent responses for semantically similar queries.
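To make the "similar questions, same answer" idea concrete, here is a minimal sketch. It is not how the Azure service ranks answers: it assumes a tiny hypothetical knowledge base and uses simple word-overlap (Jaccard) similarity as a stand-in for the service's natural language matching. The `KNOWLEDGE_BASE` contents, the `best_answer` helper, and the 0.2 threshold are all illustrative assumptions.

```python
import re
from typing import Optional

# Hypothetical knowledge base: each stored question maps to one pre-defined answer.
KNOWLEDGE_BASE = {
    "What are your opening hours?": "We are open 9am to 5pm, Monday to Friday.",
    "How do I reset my password?": "Select 'Forgot password' on the sign-in page.",
}


def tokens(text: str) -> set[str]:
    """Lowercase the text and return the set of words it contains."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Word-overlap similarity: size of intersection divided by size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def best_answer(user_question: str, threshold: float = 0.2) -> Optional[str]:
    """Return the answer whose stored question best overlaps the user's question,
    or None when no stored question is similar enough."""
    user_tokens = tokens(user_question)
    score, answer = max(
        (jaccard(user_tokens, tokens(q)), a) for q, a in KNOWLEDGE_BASE.items()
    )
    return answer if score >= threshold else None


print(best_answer("What are the opening hours?"))
print(best_answer("Opening hours please?"))
print(best_answer("Tell me a joke"))
```

Both phrasings of the opening-hours question resolve to the same stored answer, while the unrelated question falls below the threshold and returns `None`.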

Statement 3:

Azure AI Language Service's question answering can determine the intent of a user utterance.

Selection: No

Correct Option Reasoning:

Determining user intent (e.g., "BookFlight" or "CheckBalance") is the primary function of conversational language understanding (CLU), a separate Azure AI Language feature, not of question answering. Question answering finds the best matching answer in its knowledge base; it does not classify utterances into distinct, predefined intents for triggering different app logic.

Reference:

Microsoft Learn, "Get answers from your documentation with question answering": This module explains that question answering is used to create a conversational layer over data to provide consistent answers, contrasting it with other Language service features like intent classification.

Which scenario is an example of a webchat bot?

A. Determine whether reviews entered on a website for a concert are positive or negative, and then add a thumbs up or thumbs down emoji to the reviews.

B. Translate into English questions entered by customers at a kiosk so that the appropriate person can call the customers back.

C. Accept questions through email, and then route the email messages to the correct person based on the content of the message.

D. From a website interface, answer common questions about scheduled events and ticket purchases for a music festival.

Explanation:

This question asks you to identify the classic use case for a webchat bot, also known as a chatbot. The key characteristics are: a conversational interface (typically embedded in a website), providing interactive responses in real-time, and handling common, predefined queries from users.

Correct Option:

D. From a website interface, answer common questions about scheduled events and ticket purchases for a music festival.

This is the definitive example. It describes a bot deployed on a website ("website interface") that engages in direct, interactive dialogue with users to provide instant answers to frequent, predictable questions (FAQs about events and tickets), which is the core purpose of a webchat bot.

Incorrect Options:

A. Determine whether reviews entered on a website for a concert are positive or negative...

This describes sentiment analysis, a Natural Language Processing (NLP) task for classifying text. It is an automated analysis of static text, not an interactive, two-way conversation with a user, which a bot requires.

B. Translate into English questions entered by customers at a kiosk...

This describes a translation service. The system converts text from one language to another and then passes it to a human. There is no conversational AI component that understands or responds to the query's content.

C. Accept questions through email, and then route the email messages to the correct person...

This is an example of email classification or routing using NLP. While it automates a workflow, it does so via email, not through a real-time, interactive chat interface, which is the defining trait of a webchat bot.

Reference:

Microsoft Learn, "Describe features of conversational AI workloads on Azure": This module defines conversational AI as enabling interaction through natural language, with common applications including webchat bots that answer frequently asked questions on websites.

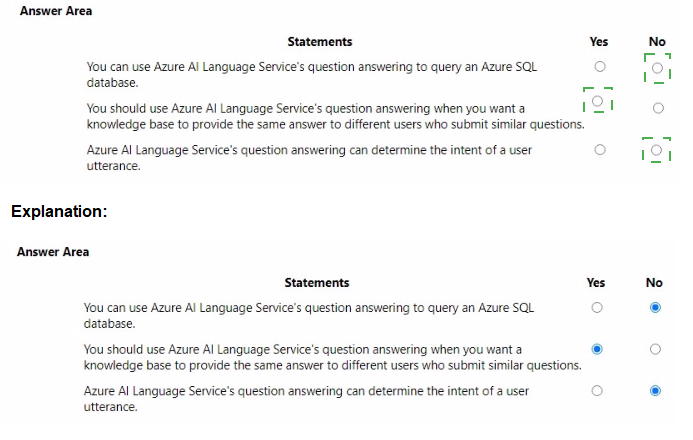

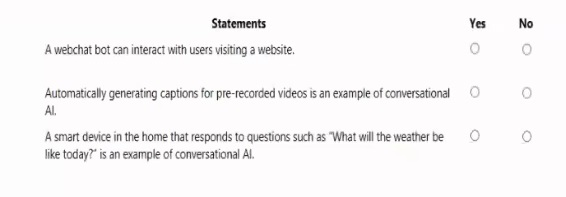

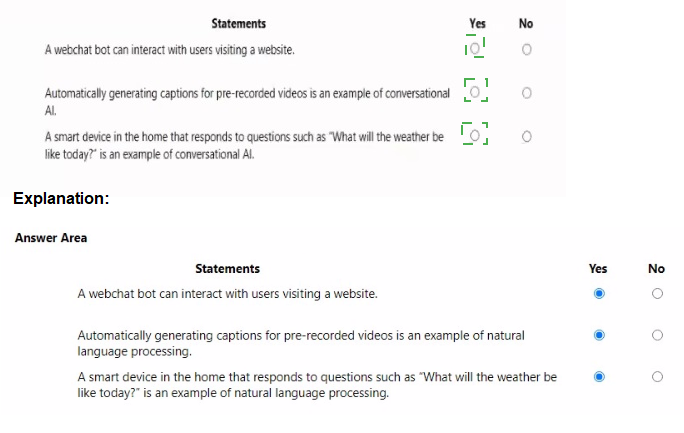

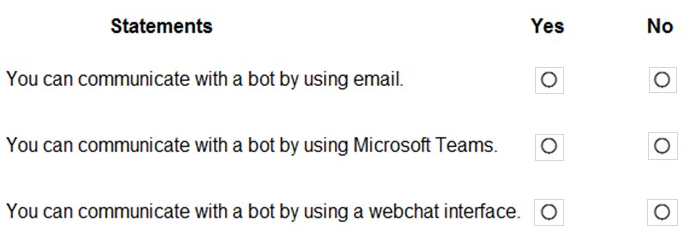

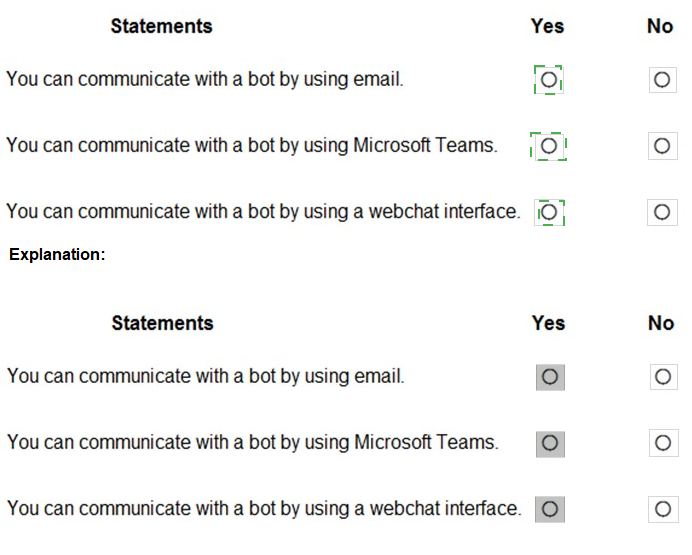

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Explanation:

Conversational AI is a subset of artificial intelligence that enables computers to simulate human-like interactions. It primarily focuses on the exchange of information through natural language, whether via text-based interfaces (like webchat bots) or voice-activated systems (like smart speakers). This field relies heavily on Natural Language Processing (NLP) to understand user intent and provide contextually relevant responses in real-time or near real-time, facilitating a dialogue between a human and a machine.

Correct Option:

Statement 1 (Yes):

A webchat bot is a core implementation of Conversational AI. It functions by receiving text input from website visitors, processing the intent through a language model, and providing an appropriate response, thereby facilitating a two-way digital conversation.

Statement 3 (Yes):

Smart home devices that answer spoken questions exemplify voice-based Conversational AI. This process involves Speech-to-Text to capture the query, NLP to interpret the intent, and Text-to-Speech to deliver the answer, fulfilling the requirement of a conversational exchange.

Incorrect Option:

Statement 2 (No):

Automatically generating captions for pre-recorded videos is not Conversational AI; it is an application of Speech-to-Text and Computer Vision (Azure AI Video Indexer). Because there is no back-and-forth dialogue or interaction between a user and the system, it lacks the "conversational" element. It is a one-way transcription process rather than an interactive exchange of information typical of bot-driven or voice-assistant platforms.

Reference:

"What is Azure AI Bot Service?"

What are three stages in a transformer model? Each correct answer presents a complete solution.

NOTE: Each correct answer is worth one point.

A. object detection

B. embedding calculation

C. tokenization

D. next token prediction

E. anonymization

Explanation:

The transformer model architecture is designed to process sequential data, such as text, by focusing on the relationships between different words in a sentence. Unlike older models that process words one by one, transformers analyze the entire sequence simultaneously using an attention mechanism. This allows the model to understand context and nuance by calculating how much "attention" each word should pay to others, eventually allowing the system to generate human-like text by predicting subsequent parts of a sentence.

Correct Option:

B. Embedding calculation:

Once text is broken into tokens, the model converts each token into a numerical vector called an embedding. Each embedding is a list of numeric values that together represent the semantic meaning of the token. In this multi-dimensional space, words with similar meanings (like "cat" and "dog") are positioned closer together, allowing the model to "understand" relationships mathematically.
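A minimal sketch of how "closeness" in embedding space can be measured. The three-dimensional vectors below are made-up toy values chosen for illustration, not output from any real model (real embeddings have hundreds or thousands of dimensions), and cosine similarity is shown as one common way to compare them.

```python
import math

# Toy, hand-picked embeddings (hypothetical values, not from a real model).
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


cat_dog = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["dog"])
cat_car = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["car"])
print(f"cat~dog: {cat_dog:.2f}, cat~car: {cat_car:.2f}")
```

With these toy values, "cat" scores much closer to "dog" than to "car", mirroring the idea that related meanings sit near each other.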

C. Tokenization:

This is the initial stage where raw input text is decomposed into smaller units called tokens. Tokens can be whole words, subwords, or even individual characters and punctuation. This step is essential because machine learning models cannot process raw text directly; each token is assigned a unique numerical identifier for further processing.
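A minimal sketch of tokenization, assuming a simple word-and-punctuation split and a vocabulary built on the fly. Production transformers use learned subword tokenizers (for example, byte-pair encoding), so the `tokenize` and `build_vocab` helpers here are illustrative only.

```python
import re


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token a unique integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


tokens = tokenize("I heard a dog bark loudly at a cat.")
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]
print(tokens)
print(token_ids)
```

Note that the repeated word "a" maps to the same ID both times, which is what lets the model treat it as the same unit wherever it appears.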

D. Next token prediction:

This is the final generative stage of the transformer. The model uses the calculated embeddings and attention scores to determine the most probable next token in a sequence. This is an iterative process: the model predicts one token, appends it to the existing text, and then uses that new sequence as input to predict the following token until the response is complete.
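The iterative predict-and-append loop can be sketched as follows. As a stand-in for a trained transformer, this assumes a hypothetical lookup table of next-token probabilities and greedily picks the most probable token at each step; a real model computes these probabilities from embeddings and attention over the whole sequence, not just the last token.

```python
# Hypothetical next-token probabilities, keyed by the previous token only.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"dog": 0.5, "cat": 0.3, "<end>": 0.2},
    "a": {"cat": 0.7, "<end>": 0.3},
    "dog": {"barked": 0.8, "<end>": 0.2},
    "cat": {"<end>": 1.0},
    "barked": {"<end>": 1.0},
}


def generate(max_tokens: int = 10) -> list[str]:
    """Greedy decoding: predict one token, append it, repeat until <end>."""
    sequence = ["<start>"]
    for _ in range(max_tokens):
        candidates = NEXT_TOKEN_PROBS[sequence[-1]]
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break
        sequence.append(next_token)  # the new token becomes part of the next input
    return sequence[1:]  # drop the <start> marker


print(" ".join(generate()))
```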

Incorrect Option:

A. Object detection:

This is a task within Computer Vision, not a stage of the transformer architecture itself. While some specialized models (like Vision Transformers) can be used for image tasks, object detection is a specific workload used to identify and locate items within an image, which is unrelated to the standard text-processing stages of a language transformer.

E. Anonymization:

This is a data privacy or preprocessing step used to remove personally identifiable information (PII) from a dataset. While it is an important part of Responsible AI and data preparation, it is not a technical stage or functional component of the transformer model's internal architecture or inference process.

Reference:

https://learn.microsoft.com/en-us/training/modules/fundamentals-generative-ai/

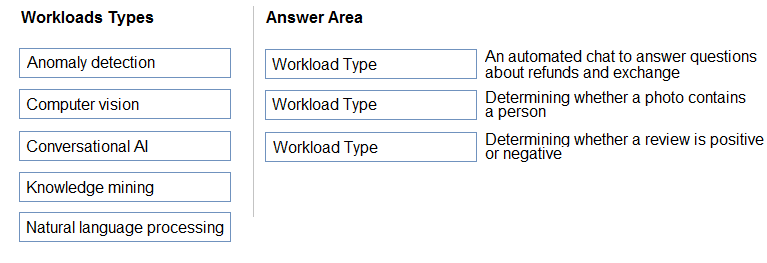

Match the types of AI workloads to the appropriate scenarios.

To answer, drag the appropriate workload type from the column on the left to its scenario on the right. Each workload type may be used once, more than once, or not at all.

NOTE: Each correct selection is worth one point.

Explanation:

Microsoft Azure categorizes AI capabilities into specific workloads to help developers choose the right services. These workloads range from Computer Vision, which interprets visual data, to Natural Language Processing (NLP), which analyzes text. Understanding the distinction between these is vital for the AI-900 exam, as many scenarios overlap. For instance, while a chatbot uses NLP to understand words, the overall interactive experience is classified as Conversational AI. Proper classification ensures that the AI system is optimized for its specific human-interaction or data-analysis goal.

Correct Option:

Conversational AI (An automated chat to answer questions about refunds and exchange):

This scenario describes a dialogue-based interface where a machine interacts with a human. Conversational AI combines NLP with bot services to manage the flow of conversation, maintain context, and provide automated support in a way that mimics a real customer service agent.

Computer vision (Determining whether a photo contains a person):

This is a classic image classification or object detection task. Computer Vision involves using algorithms to identify and process patterns within digital images or videos. In this case, the model is trained to recognize human features to distinguish people from other objects in the frame.

Natural language processing (Determining whether a review is positive or negative):

This specific task is known as Sentiment Analysis. NLP focuses on extracting meaning, emotion, and intent from written or spoken language. By analyzing the words used in a review, the NLP service can quantify the "sentiment" score to determine if the feedback is favorable or critical.
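As a rough illustration of turning the words in a review into a sentiment label, here is a lexicon-based sketch. The word lists and the count-based scoring rule are hypothetical simplifications; the Azure AI Language sentiment feature uses trained models rather than a fixed word list.

```python
import re

# Hypothetical word lists for illustration only.
POSITIVE = {"great", "amazing", "love", "excellent", "good"}
NEGATIVE = {"terrible", "awful", "hate", "poor", "bad"}


def sentiment(review: str) -> str:
    """Label a review by counting positive words minus negative words."""
    words = re.findall(r"[a-z]+", review.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("The band was amazing and the sound was great!"))
print(sentiment("Terrible seats and awful parking."))
```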

Incorrect Option:

Anomaly detection:

This workload is used to identify unusual patterns or outliers in time-series data, such as credit card fraud or equipment failure. It is incorrect here because none of the scenarios involve monitoring data streams for sudden, unexpected deviations from a known baseline.
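A minimal sketch of flagging deviations from a baseline, assuming a simple z-score rule (flag values more than a chosen number of standard deviations from the mean). The readings and the threshold of 2.0 are made up for illustration; production anomaly detectors typically use more robust models that account for trends and seasonality.

```python
import statistics


def find_anomalies(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return the indexes of values whose z-score exceeds the threshold."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical: nothing deviates from the baseline
    return [i for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]


# Hypothetical sensor readings with one sudden spike at index 6.
readings = [20.1, 20.4, 19.8, 20.0, 20.3, 19.9, 35.0, 20.2]
print(find_anomalies(readings))
```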

Knowledge mining:

This involves searching and indexing vast amounts of unstructured data (like PDFs and images) to create a searchable database (Azure AI Search). While it uses NLP and Vision, it is incorrect because the scenarios focus on real-time classification and interaction rather than deep document indexing.

Reference:

Microsoft Learn: Fundamentals of Azure AI (AI Workloads)

Which OpenAI model does GitHub Copilot use to make suggestions for client-side JavaScript?

A. GPT-4

B. Codex

C. DALL-E

D. GPT-3

Explanation:

GitHub Copilot is an AI-powered code assistant that functions as an "AI pair programmer." It works by processing the context of the code you are currently writing—including comments, function names, and surrounding files—to suggest relevant code snippets or entire functions. While newer versions of Copilot have integrated various Large Language Models (LLMs) for chat and advanced reasoning, the fundamental engine historically associated with its real-time autocomplete suggestions in the AI-900 curriculum is a specialized version of GPT-3.

Correct Option:

B. Codex:

OpenAI Codex is the correct answer in the context of AI fundamentals. It is a descendant of the GPT-3 model that has been specifically fine-tuned on a massive dataset of public code from GitHub. This specialization allows it to understand the syntax and patterns of dozens of programming languages, including JavaScript, enabling it to transform natural language comments into functional code or provide accurate line-by-line completions.

Incorrect Option:

A. GPT-4:

While modern versions of GitHub Copilot (specifically Copilot Chat) use GPT-4 for complex reasoning and explanations, the core "completion" engine mentioned in standard AI-900 materials is Codex.

C. DALL-E:

This model is designed for image generation from text prompts. It has no capability for understanding or generating programming code like JavaScript.

D. GPT-3:

Although Codex is based on the GPT-3 architecture, GPT-3 itself is a general-purpose language model. Without the specific fine-tuning on code provided by the Codex variant, it would not be as effective at writing technical JavaScript suggestions.

Reference:

Introduction to GitHub Copilot

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Extracting relationships between data from large volumes of unstructured data is an example of which type of AI workload?

A. computer vision

B. knowledge mining

C. natural language processing (NLP)

D. anomaly detection

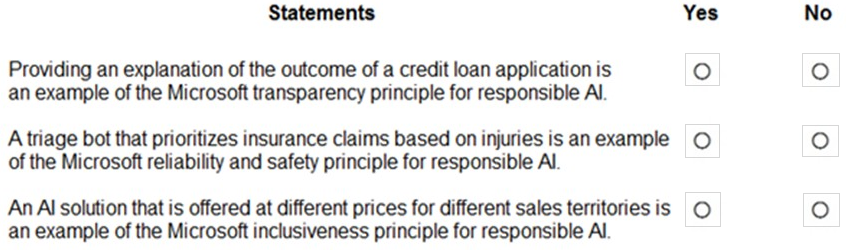

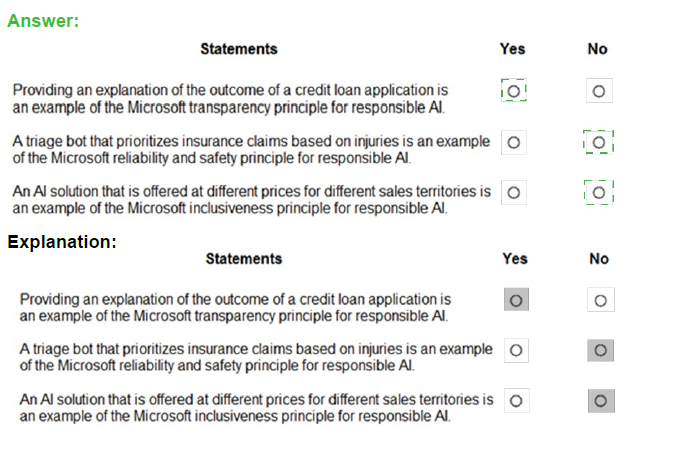

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Box 1: Yes

Achieving transparency helps the team to understand the data and algorithms used to train the model, what transformation logic was applied to the data, the final model generated, and its associated assets. This information offers insights about how the model was created, which allows it to be reproduced in a transparent way.

Box 2: No

A data holder is obligated to protect the data in an AI system, and privacy and security are an integral part of this system. Personal data needs to be secured, and it should be accessed in a way that doesn't compromise an individual's privacy.

Box 3: No

Inclusiveness mandates that AI should consider all human races and experiences, and inclusive design practices can help developers to understand and address potential barriers that could unintentionally exclude people. Where possible, speech-to-text, text-to-speech, and visual recognition technology should be used to empower people with hearing, visual, and other impairments.

You need to provide content for a business chatbot that will help answer simple user queries.

What are three ways to create question and answer text by using QnA Maker? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Generate the questions and answers from an existing webpage.

B. Use automated machine learning to train a model based on a file that contains the questions.

C. Manually enter the questions and answers.

D. Connect the bot to the Cortana channel and ask questions by using Cortana.

E. Import chit-chat content from a predefined data source.

Explanation:

Automatic extraction

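Extract question-answer pairs from semi-structured content, including FAQ pages, support websites, Excel files, SharePoint documents, product manuals, and policies. Question-answer pairs can also be entered manually, and predefined chit-chat content can be added to the knowledge base.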

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/qnamaker/concepts/contenttypes

You have 100 instructional videos that do NOT contain any audio. Each instructional video has a script. You need to generate a narration audio file for each video based on the script. Which type of workload should you use?

A. speech recognition

B. language modeling

C. speech synthesis

D. translation

Select the answer that correctly completes the sentence.
