Topic 5: Describe features of conversational AI workloads on Azure

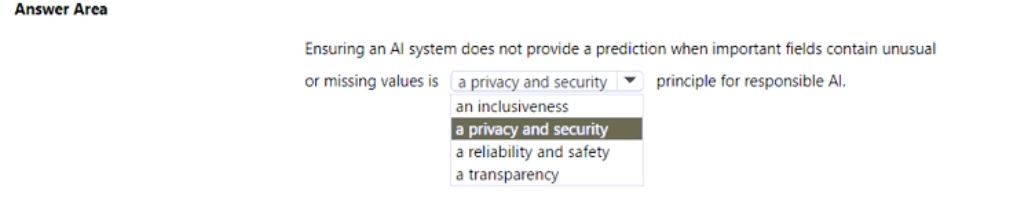

Select the answer that correctly completes the sentence.

Summary:

This question tests your understanding of Microsoft's Responsible AI principles. The scenario describes a safeguard to prevent the AI system from making a prediction when its input data is flawed (unusual or missing). This is a measure to ensure the system operates correctly and safely, preventing erroneous or potentially harmful outputs that could result from poor-quality data.

Correct Option:

a reliability and safety

This is the correct principle. Reliability and safety require that AI systems perform consistently and correctly, even in the face of errors or unexpected inputs. By refusing to provide a prediction when critical data is missing or anomalous, the system is demonstrating operational safety. It prevents making a potentially unreliable and unsafe decision that could have negative consequences, thereby upholding this principle.
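The safeguard described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the field names, the valid ranges, and the scoring rule are hypothetical and do not come from the exam question or from any Azure API.

```python
from typing import Optional

# Hypothetical valid ranges for each required input; in practice these bounds
# would come from the training data or from domain knowledge.
VALID_RANGES = {"age": (0, 120), "systolic_bp": (60, 250)}


def is_usable(record: dict) -> bool:
    """Return True only if every required field is present and within range."""
    for name, (low, high) in VALID_RANGES.items():
        value = record.get(name)
        if value is None:
            return False  # missing value
        if not (low <= value <= high):
            return False  # unusual value (this comparison also rejects NaN)
    return True


def safe_predict(record: dict) -> Optional[float]:
    """Withhold the prediction (return None) when the input is missing or unusual."""
    if not is_usable(record):
        return None
    # Placeholder scoring rule standing in for a trained model.
    return 0.01 * record["age"] + 0.002 * record["systolic_bp"]


print(safe_predict({"age": 50, "systolic_bp": 100}))  # a score is returned
print(safe_predict({"age": 50}))                      # None: missing field
print(safe_predict({"age": 50, "systolic_bp": 900}))  # None: out-of-range value
```

Returning `None` instead of a guess makes the refusal explicit, so the calling code has to handle the "no prediction" case deliberately.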

Incorrect Option:

an inclusiveness

Inclusiveness focuses on ensuring AI systems empower and work well for all people, regardless of ability, gender, sexuality, ethnicity, or other characteristics. It concerns who can use and benefit from a system, not data quality or operational safeguards for missing data.

a privacy and security

This principle concerns the protection of personal data from unauthorized access and ensuring data is used in accordance with privacy laws. While important, it does not directly address the system's behavior when faced with unusual or missing input values.

a transparency

Transparency involves understanding how an AI model makes decisions (interpretability) and being open about its capabilities and limitations. It is about explaining why a prediction was made, not about implementing a safety check to withhold a prediction due to bad input data.

Reference:

Microsoft Responsible AI Principles - Reliability & Safety

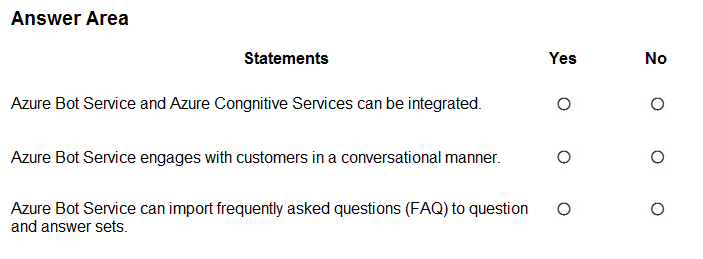

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Summary:

This question assesses your understanding of the core capabilities and integration features of the Azure Bot Service. It's important to know that this service is designed specifically for creating conversational AI agents, that it can be enhanced with other AI services, and that it has built-in functionality for handling common support queries via FAQs.

Correct Option:

Azure Bot Service and Azure Cognitive Services can be integrated.

Answer: Yes

Explanation:

This is true and a key strength of the Azure AI ecosystem. The Azure Bot Service is designed to be extended by integrating with various Azure Cognitive Services. For example, you can integrate with the Language service for question answering, use Speech service for voice interactions, or employ Translator to make the bot multilingual.

Azure Bot Service engages with customers in a conversational manner.

Answer: Yes

Explanation:

This is the primary purpose of the Azure Bot Service. It provides a framework for building, testing, and deploying conversational AI agents (bots) that can interact with users through natural language on platforms like websites, Microsoft Teams, and Telegram.

Azure Bot Service can import frequently asked questions (FAQ) to question and answer sets.

Answer: Yes

Explanation:

This is a true and supported feature. The Azure Bot Service can be integrated with the Question Answering feature in the Azure AI Language service. This feature allows you to easily create a knowledge base by importing existing FAQ documents from URLs, files, or manually edited content, which the bot can then use to answer user queries.
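To make the idea of "importing an FAQ into question and answer sets" concrete, here is a minimal sketch that parses a plain-text FAQ into pairs. It assumes a hypothetical `Q:` / `A:` line format and does not call the Azure AI Language service; the real import feature handles URLs and document files through its own tooling.

```python
def parse_faq(text: str) -> list:
    """Turn lines of the form 'Q: ...' followed by 'A: ...' into (question, answer) pairs."""
    pairs = []
    question = None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("Q:"):
            question = line[2:].strip()
        elif line.startswith("A:") and question is not None:
            pairs.append((question, line[2:].strip()))
            question = None  # wait for the next question
    return pairs


# Hypothetical FAQ content used only for illustration.
faq_text = """Q: What are your opening hours?
A: We are open 9am to 5pm, Monday to Friday.
Q: How do I reset my password?
A: Use the 'Forgot password' link on the sign-in page."""

qa_pairs = parse_faq(faq_text)
for q, a in qa_pairs:
    print(f"{q} -> {a}")
```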

Incorrect Option:

Azure Bot Service and Azure Cognitive Services can be integrated.

Selecting "No" would be incorrect, as integration with Cognitive Services is a fundamental and documented capability for enhancing a bot's intelligence.

Azure Bot Service engages with customers in a conversational manner.

Selecting "No" would be incorrect, as this is the core definition and function of the service.

Azure Bot Service can import frequently asked questions (FAQ) to question and answer sets.

Selecting "No" would be incorrect, as importing FAQs to build a QnA knowledge base is a standard and well-documented process for creating a support bot.

Reference:

What is the Azure Bot Service?

Create a question answering bot with Azure Bot Service

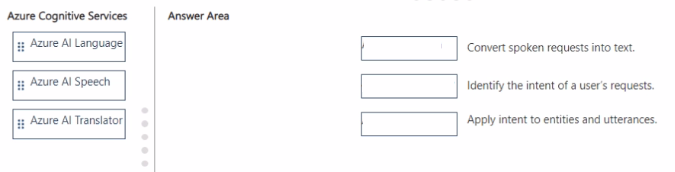

Match the Azure AI service to the appropriate actions.

To answer, drag the appropriate service from the column on the left to its action on the right.

Each service may be used once, more than once, or not at all.

NOTE: Each correct match is worth one point.

Summary:

This question tests your ability to match core Azure AI services to their primary functions. The key is to distinguish between services that process and understand spoken language, written language, and the specific task of intent recognition within a conversational context.

Correct Matches:

Convert spoken requests into text.

Service: ii. Azure AI Speech

Explanation: This is the core function of the Speech-to-Text capability within Azure AI Speech. It is specifically designed to accurately transcribe spoken audio (a "spoken request") into written text.

Identify the intent of a user’s requests.

Service: i. Azure AI Language

Explanation: This is the primary function of the Conversational Language Understanding (CLU) feature within Azure AI Language. CLU is designed to take a user's input (from text or transcribed speech) and determine their goal or intent, such as "BookFlight" or "CheckBalance."

Apply intent to entities and utterances.

Service: i. Azure AI Language

Explanation: This describes the process of building and training a CLU model. You define the intents (what the user wants to do), entities (key data points like dates or locations), and provide example utterances (phrases a user might say). The Azure AI Language service then learns to apply the correct intent and extract the relevant entities from new user utterances.
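The relationship between intents, entities, and example utterances can be illustrated with a small data structure. The sketch below is not the CLU API: the `LabeledUtterance` class and the word-overlap matcher are hypothetical stand-ins, used only to show what labeled training data looks like and how a new utterance is mapped to an intent.

```python
from dataclasses import dataclass, field


@dataclass
class LabeledUtterance:
    text: str                                      # example phrase a user might say
    intent: str                                    # what the user wants to do
    entities: dict = field(default_factory=dict)   # key data points in the phrase


training_data = [
    LabeledUtterance("Book a flight to Paris", "BookFlight", {"destination": "Paris"}),
    LabeledUtterance("I need a plane ticket to Tokyo", "BookFlight", {"destination": "Tokyo"}),
    LabeledUtterance("What is my account balance", "CheckBalance"),
    LabeledUtterance("Show me my balance", "CheckBalance"),
]


def predict_intent(text: str) -> str:
    """Toy stand-in for a trained model: choose the intent whose example
    utterances share the most words with the input."""
    words = set(text.lower().split())
    scores = {}
    for example in training_data:
        overlap = len(words & set(example.text.lower().split()))
        scores[example.intent] = max(scores.get(example.intent, 0), overlap)
    return max(scores, key=scores.get)


print(predict_intent("book me a flight to Rome"))  # BookFlight
print(predict_intent("check my balance"))          # CheckBalance
```

A real language model generalizes far beyond shared words, but the inputs it learns from have this same shape: utterances labeled with an intent and with the entities they contain.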

Incorrect Service:

iii. Azure AI Translator

This service was not used. Azure AI Translator is used for converting text from one language to another. It does not handle speech-to-text conversion or intent/entity recognition.

Reference:

What is Azure AI Speech? - Speech-to-text

What is Conversational Language Understanding?

Which two scenarios are examples of a conversational AI workload? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. a smart device in the home that responds to questions such as “What will the weather be like today?”

B. a website that uses a knowledge base to interactively respond to users’ questions

C. assembly line machinery that autonomously inserts headlamps into cars

D. monitoring the temperature of machinery to turn on a fan when the temperature reaches a specific threshold

Summary:

A conversational AI workload involves a system that uses natural language processing (NLP) to interact with humans through dialogue. The core function is to understand spoken or written language, process the intent, and provide a relevant, conversational response. This distinguishes it from robotic automation or simple sensor-based triggers.

Correct Option:

A. a smart device in the home that responds to questions such as “What will the weather be like today?”

This is a classic example of conversational AI. The device uses speech recognition to convert the spoken question into text, natural language understanding to determine the user's intent (get a weather forecast), and speech synthesis to speak the answer back to the user in a conversational manner.

B. a website that uses a knowledge base to interactively respond to users’ questions

This is another key example, often implemented as a chatbot. The system uses NLP to interpret the user's text-based questions, queries a knowledge base to find the most relevant information, and then responds in a conversational, interactive style, mimicking a human support agent.

Incorrect Option:

C. assembly line machinery that autonomously inserts headlamps into cars

This is an example of industrial automation, or physical robotics. While it is an intelligent automation workload, it does not involve any form of natural language conversation or interaction with a human. The machinery is performing a pre-programmed physical task.

D. monitoring the temperature of machinery to turn on a fan when the temperature reaches a specific threshold

This is an example of sensor-based automation or a simple control system. It involves a straightforward "if-then" rule with no element of language understanding, dialogue, or conversational interaction, which are the hallmarks of a conversational AI workload.

Reference:

What is a bot? - Azure Bot Service

What is Conversational Language Understanding? - Azure AI Language

What is an advantage of using a custom model in Form Recognizer?

A. Only a custom model can be deployed on-premises.

B. A custom model can be trained to recognize a variety of form types.

C. A custom model is less expensive than a prebuilt model.

D. A custom model always provides higher accuracy.

Summary:

Azure AI Document Intelligence (formerly Form Recognizer) offers both prebuilt models for common documents and custom models for unique scenarios. The primary advantage of a custom model is its ability to be tailored to recognize and extract specific data from form layouts and document types that the prebuilt models are not designed to handle, such as company-specific invoices, proprietary contracts, or custom application forms.

Correct Option:

B. A custom model can be trained to recognize a variety of form types.

This is the core advantage of a custom model. While prebuilt models are fixed for specific document types (like receipts, invoices, IDs), a custom model can be trained on your own labeled datasets to understand the unique structure, fields, and tables in virtually any document format your organization uses. This flexibility is essential for processing proprietary or non-standard forms.

Incorrect Option:

A. Only a custom model can be deployed on-premises.

This is incorrect. Both prebuilt and custom models are primarily cloud-based services. While Azure AI services offer some on-premises containers for certain scenarios, this capability is not exclusive to custom models and depends on the specific service offering, not the model type.

C. A custom model is less expensive than a prebuilt model.

This is generally false. Developing a custom model incurs costs for training and requires a significant investment of time and resources to prepare a large, accurately labeled dataset. Using a prebuilt model for a common task like processing a standard invoice is typically more cost-effective as there are no training costs.

D. A custom model always provides higher accuracy.

This is incorrect. A custom model's accuracy is highly dependent on the quality, quantity, and representativeness of the training data provided. For common document types like receipts, a prebuilt model, which is trained on a vast and diverse dataset, will likely achieve higher accuracy with zero effort. A custom model provides higher accuracy only for the specific, unique forms it was trained on.

Reference:

What is Azure AI Document Intelligence? - Custom models

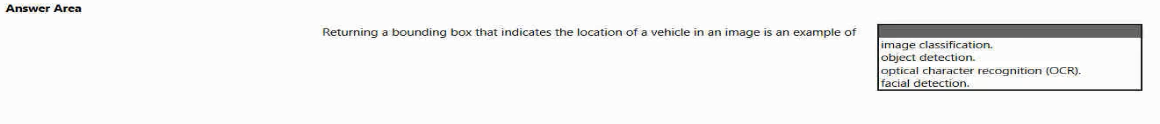

To complete the sentence, select the appropriate option in the answer area.

Summary:

This question describes a core computer vision task that goes beyond simply identifying what is in an image. The key action is pinpointing the spatial location of a specific object by drawing a box around it. This task is distinct from classifying the entire image or reading text within it.

Correct Option:

object detection

Object detection is the correct answer. This technology is designed to identify and locate multiple instances of objects within an image. It does this by drawing bounding boxes around each detected object (e.g., cars, people, animals) and providing the coordinates of those boxes. The primary output is the "where" (location via bounding box) in addition to the "what" (object label).
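The difference between the "what" and the "where" is easiest to see in the shape of the results. The following sketch uses hypothetical class names and made-up values; it does not reproduce the response format of any specific Azure service.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    # All values are fractions of the image width or height (0.0 to 1.0).
    left: float
    top: float
    width: float
    height: float


@dataclass
class DetectedObject:
    label: str         # the "what"
    confidence: float
    box: BoundingBox   # the "where"


# Image classification: one label for the whole image, no location.
classification_result = {"label": "city street", "confidence": 0.93}

# Object detection: one entry per object instance, each with its own box.
detection_result = [
    DetectedObject("car", 0.91, BoundingBox(0.10, 0.55, 0.30, 0.25)),
    DetectedObject("car", 0.84, BoundingBox(0.60, 0.50, 0.25, 0.22)),
    DetectedObject("person", 0.77, BoundingBox(0.45, 0.40, 0.08, 0.30)),
]


def count_by_label(objects):
    """Count how many instances of each label were detected."""
    counts = {}
    for obj in objects:
        counts[obj.label] = counts.get(obj.label, 0) + 1
    return counts


print(count_by_label(detection_result))  # {'car': 2, 'person': 1}
```

Counting instances or locating them is only possible with the detection result; the classification result carries no per-object information.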

Incorrect Option:

optical character recognition (OCR)

OCR is used to detect and read text within images. It is not used for identifying and locating general objects like vehicles. Its purpose is to convert textual elements into machine-encoded text.

image classification

Image classification assigns a single label to an entire image (e.g., "city street," "highway"). It does not provide any information about the location, number, or specific position of objects within that image. It answers "what is in this picture?" but not "where are the objects?"

image analysis

Image analysis is a very broad term that can encompass many techniques, including object detection and image classification. However, it is not the specific name for the task of returning a bounding box. "Object detection" is the precise term for that specific capability.

Reference:

What is computer vision? - Object Detection

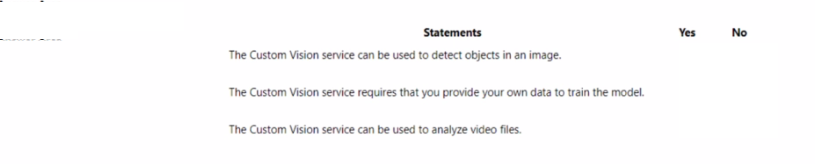

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Summary:

This question tests your understanding of the capabilities and requirements of the Azure Custom Vision service. It's crucial to know that Custom Vision is designed for building custom image analysis models (both classification and object detection) using your own data, and that it is intended for still images, not video.

Correct Option:

The Custom Vision service can be used to detect objects in an image.

Answer: Yes

Explanation:

This is true. Azure Custom Vision supports two types of projects: Image Classification (tagging images) and Object Detection. The Object Detection feature is specifically designed to identify and locate multiple objects within a single image by drawing bounding boxes around them.

The Custom Vision service requires that you provide your own data to train the model.

Answer: Yes

Explanation:

This is a fundamental characteristic of the service. Unlike pre-built vision services, Custom Vision is a platform for creating custom models tailored to your specific needs. This requires you to provide your own set of labeled images to train the model to recognize your unique objects or tags.

The Custom Vision service can be used to analyze video files.

Answer: No

Explanation:

This is false. Custom Vision is designed to analyze still images. To analyze video, you would need to use a different service, such as Azure AI Video Indexer, which can process video frames and may even integrate with Custom Vision for specific frame analysis, but Custom Vision itself does not natively ingest video files.

Incorrect Option:

The Custom Vision service can be used to detect objects in an image.

Selecting "No" would be incorrect, as object detection is a core, documented feature of the service.

The Custom Vision service requires that you provide your own data to train the model.

Selecting "No" would be incorrect, as the entire purpose of the service is to build custom models from user-provided data.

The Custom Vision service can be used to analyze video files.

Selecting "Yes" would be incorrect, as the service is built for image analysis, not direct video processing.

Reference:

What is Custom Vision? - Azure AI services

Object detection in Azure Custom Vision

During the process of Machine Learning, when should you review evaluation metrics?

A. After you clean the data.

B. Before you train a model.

C. Before you choose the type of model.

D. After you test a model on the validation data.

Summary:

Evaluation metrics are quantitative measures used to assess the performance and accuracy of a trained machine learning model. They are essential for understanding how well the model generalizes to new, unseen data and for comparing the performance of different models or tuning attempts.

Correct Option:

D. After you test a model on the validation data.

This is the correct and primary time to review evaluation metrics. After a model is trained, it must be evaluated on a separate dataset that it did not see during training (the validation or test set). Metrics like accuracy, precision, recall, and F1-score are calculated based on the model's predictions on this held-out data. This process tells you how well the model is likely to perform in the real world.
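As a worked example, the metrics named above can be computed directly from a model's predictions on held-out data. The labels and predictions below are invented for illustration; in a real project they would come from scoring the validation set.

```python
def evaluate(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical validation labels and the model's predictions for them.
validation_labels = [1, 0, 1, 1, 0, 0, 1, 0]
validation_preds = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = evaluate(validation_labels, validation_preds)
print(metrics)
```

With these values there are 3 true positives, 3 true negatives, 1 false positive, and 1 false negative, so all four metrics come out to 0.75.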

Incorrect Option:

A. After you clean the data.

Data cleaning is a preparatory step focused on handling missing values, correcting errors, and standardizing formats. Evaluation metrics require a trained model to make predictions, which does not exist at this stage. You cannot measure model performance before a model exists.

B. Before you train a model.

This is too early in the process. A model must be trained before its performance can be measured. Before training, you are preparing data and defining the problem, not evaluating a model's output.

C. Before you choose the type of model.

The initial choice of model type (e.g., decision tree, logistic regression) is often based on the problem type, data size, and other heuristics. While evaluation metrics from simple baseline models can inform later model selection, the formal and critical review of metrics happens after a candidate model has been trained and tested.

Reference:

Monitor and view run metrics in Azure Machine Learning

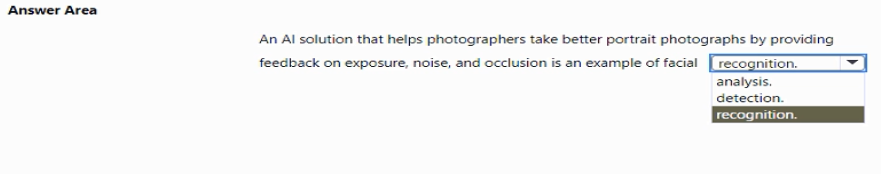

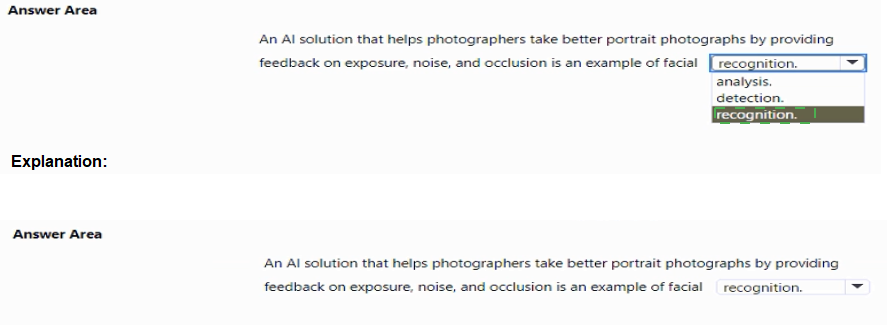

To complete the sentence, select the appropriate option in the answer area.

Explanation:

This question tests the understanding of specific computer vision workloads. The described solution analyzes technical attributes of a photo (exposure, noise, occlusion) to provide improvement feedback. This is a classic example of analyzing visual features for quality assessment, not identifying individuals or verifying identities.

Correct Option:

Analysis:

Computer vision analysis extracts detailed information from images, such as assessing quality, detecting features (like facial landmarks), or evaluating conditions (exposure, occlusion). The solution analyzes a portrait's attributes to guide the photographer, aligning perfectly with the "analysis" workload.

Incorrect Options:

Detection:

While detection locates objects or faces within an image, the core task here is not merely finding a face but evaluating its photographic characteristics for quality feedback, which goes beyond simple detection.

Recognition:

Recognition involves identifying or verifying individuals (facial recognition). The scenario is about improving photo quality, not naming or authenticating the person in the portrait.

Reference:

Microsoft Learn, "Describe features of computer vision workloads": This module distinguishes between detection, analysis, and recognition, noting analysis includes extracting attributes like facial features and expressions.

What are three Microsoft guiding principles for responsible AI? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

A. knowledgeability

B. decisiveness

C. inclusiveness

D. fairness

E. opinionatedness

F. reliability and safety

Explanation:

This question tests knowledge of the core ethical principles that underpin Microsoft's approach to developing trustworthy artificial intelligence. These principles are designed to ensure AI systems are built and used in a way that benefits society, minimizes harm, and treats all people equitably.

Correct Options:

C. Inclusiveness:

AI systems should empower everyone and engage people. This principle mandates that AI must be designed to understand and meet the needs of people from diverse backgrounds, abilities, and cultures, avoiding the creation of solutions that inadvertently exclude groups.

D. Fairness:

AI systems should treat all people fairly and not affect similarly situated groups in different ways. This involves proactively identifying and mitigating biases in data and algorithms to prevent discrimination and ensure equitable outcomes.

F. Reliability and Safety:

AI systems must perform reliably and safely under all intended conditions. They should be resilient to manipulation or error and operate in a secure, consistent, and predictable manner to earn user trust and prevent harm.

Incorrect Options:

A. Knowledgeability:

This is not a formal responsible AI principle. While AI systems should be informed by data and knowledge, the guiding principles focus on ethical deployment rather than a system's raw informational capacity.

B. Decisiveness:

This is not a responsible AI principle. AI systems should make well-justified decisions, but "decisiveness" is not a core ethical tenet and could conflict with principles like fairness if it prioritizes speed over careful, unbiased outcomes.

E. Opinionatedness:

This is not a principle and is contradictory to responsible AI. AI systems should be based on factual data and designed to mitigate bias, not reflect or promote the subjective opinions of their creators.

Reference:

Microsoft Learn, "Describe guiding principles for responsible AI": The official AI-900 learning path lists six principles: Fairness, Reliability and Safety, Privacy and Security, Inclusiveness, Transparency, and Accountability.

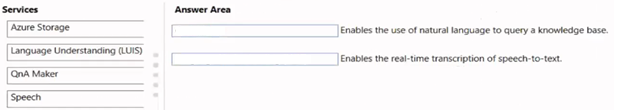

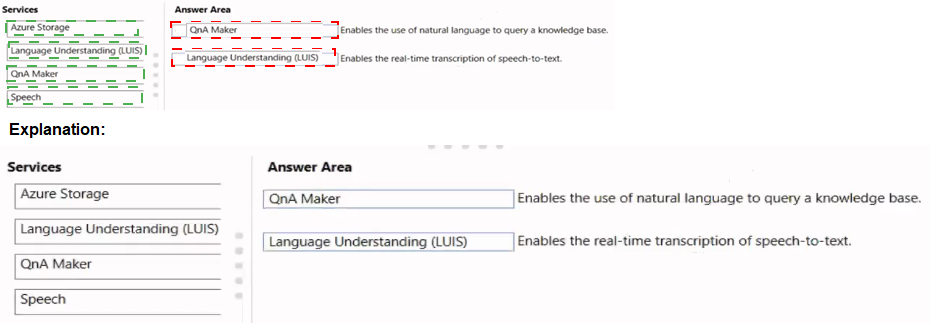

Match the services to the appropriate descriptions.

To answer, drag the appropriate service from the column on the left to its description on the right. Each service may be used once, more than once, or not at all.

NOTE: Each correct match is worth one point.

Explanation:

This question requires matching Azure AI services to their core functionalities. You must identify which service directly enables each described capability. The key is knowing the primary purpose of each listed service.

Correct Matches:

"Enables the use of natural language to query a knowledge base." → QnA Maker

QnA Maker is specifically designed to create a conversational question-and-answer layer over existing data. It extracts Q&A pairs from documents or URLs to build a knowledge base, which users can then query using natural language, as described.

"Enables the real-time transcription of speech-to-text." → Speech

The Azure Speech service includes the Speech-to-Text capability, which accurately transcribes spoken audio into readable, searchable text in real-time, which is the exact functionality described.

Incorrect Matches for Each Description:

For the knowledge base query:

Azure Storage is a cloud storage service for data, not for interpreting natural language queries.

Language Understanding (LUIS) is for building custom natural language understanding into apps (like interpreting commands), not specifically for querying a static knowledge base.

Speech handles audio input/output, not querying textual knowledge bases.

For the real-time transcription:

Azure Storage, LUIS, and QnA Maker do not perform real-time audio transcription. LUIS and QnA Maker process text or intent, but the initial conversion from speech to text requires the dedicated Speech service.

Reference:

Microsoft Learn, AI-900 learning path modules: "Describe features of Natural Language Processing (NLP) workloads on Azure" (covers QnA Maker and LUIS) and "Describe features of speech AI workloads on Azure" (covers Speech service capabilities).

You build a machine learning model by using the automated machine learning user interface (UI).

You need to ensure that the model meets the Microsoft transparency principle for responsible AI.

What should you do?

A. Set Validation type to Auto.

B. Enable Explain best model.

C. Set Primary metric to accuracy.

D. Set Max concurrent iterations to 0.

Explanation:

The Microsoft transparency principle for responsible AI requires that AI systems be understandable, and their decisions explainable to users. When using automated machine learning, you must take specific action to generate model explanations that help stakeholders understand how the model makes its predictions.

Correct Option:

B. Enable Explain best model:

This is the direct action that fulfills the transparency principle. Selecting this option in the automated ML UI instructs the service to calculate feature importance for the best model, generating explanations that show which data factors most influenced its predictions. This is the only choice directly related to model interpretability.
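To give a feel for what a feature importance explanation measures, here is a small sketch of permutation importance: disturb one feature's values and see how much the model's accuracy drops. This is a generic technique swapped in for illustration and is not claimed to be the method automated ML uses; the model, feature names, and data are all hypothetical.

```python
def model(row):
    """Hypothetical 'best model': a fixed linear scorer over three features."""
    income, age, noise = row
    return 1 if (0.8 * income + 0.2 * age + 0.0 * noise) > 0.5 else 0


def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, feature_index):
    """Accuracy drop when one feature column is rotated by one position.
    A fixed rotation is used instead of a random shuffle to keep the result repeatable."""
    column = [r[feature_index] for r in rows]
    rotated = column[1:] + column[:1]
    permuted_rows = [
        r[:feature_index] + (v,) + r[feature_index + 1:]
        for r, v in zip(rows, rotated)
    ]
    return accuracy(rows, labels) - accuracy(permuted_rows, labels)


# Invented data: (income, age, noise), all scaled to the range 0 to 1.
rows = [(0.9, 0.2, 0.1), (0.1, 0.6, 0.9), (0.8, 0.4, 0.3), (0.2, 0.8, 0.7)]
labels = [1, 0, 1, 0]

feature_names = ["income", "age", "noise"]
importances = {
    name: permutation_importance(rows, labels, i)
    for i, name in enumerate(feature_names)
}
print(importances)
```

On this toy data, disturbing `income` destroys the model's accuracy while disturbing `age` or `noise` changes nothing, which is the kind of insight an explanation gives stakeholders about what drives predictions.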

Incorrect Options:

A. Set Validation type to Auto:

This configures how data is split for training and validation to assess model performance. It is related to model accuracy and evaluation, not to providing human-understandable explanations for the model's behavior.

C. Set Primary metric to accuracy:

This chooses the performance metric (like accuracy, AUC) used to evaluate and select the best model. It optimizes for predictive performance, not for the interpretability or explainability of the model's decisions.

D. Set Max concurrent iterations to 0:

This setting controls how many iterations run in parallel on the compute target. It is a compute configuration setting and has no bearing on making the model's logic transparent or explainable.

Reference:

Microsoft Learn, "Describe capabilities of automated machine learning": The documentation states that when creating an automated ML experiment, you can enable "Explain best model" to generate featurization and feature importance explanations for the winning model, supporting model interpretability.
